图示-实现hive的文件与hdfs的导入导出

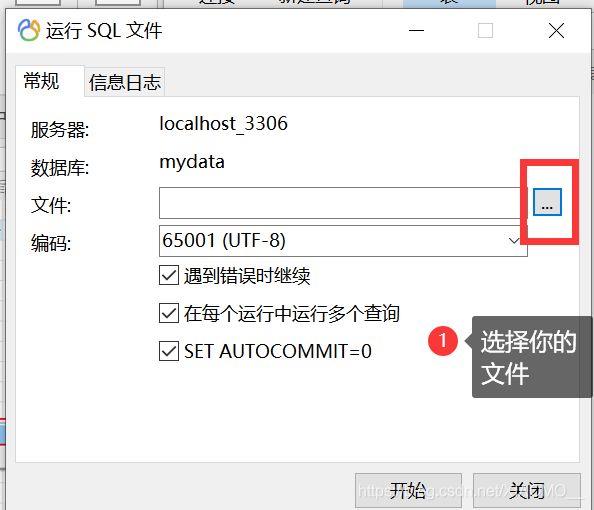

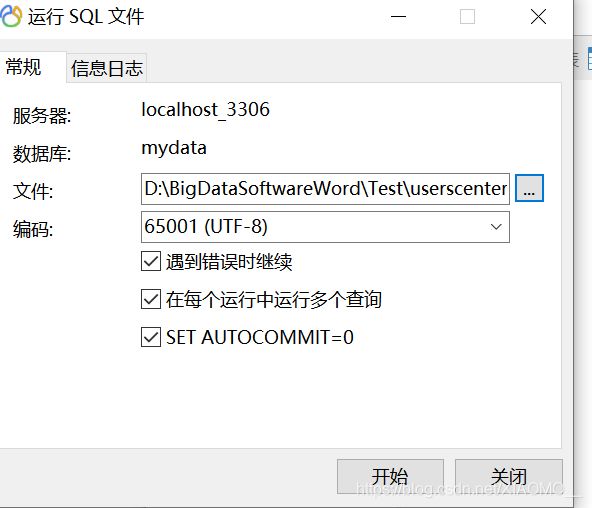

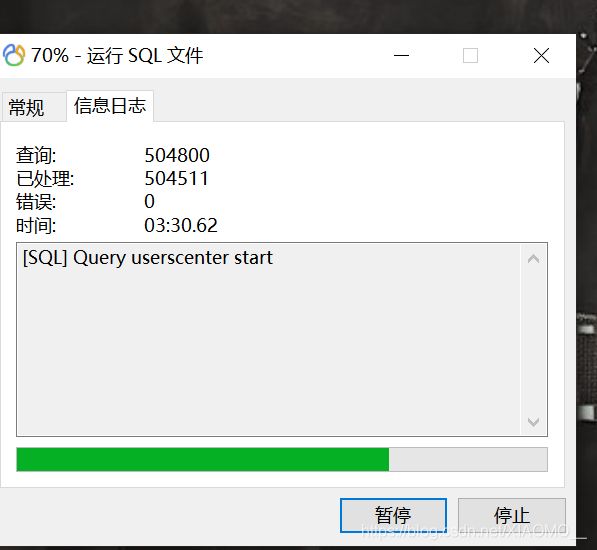

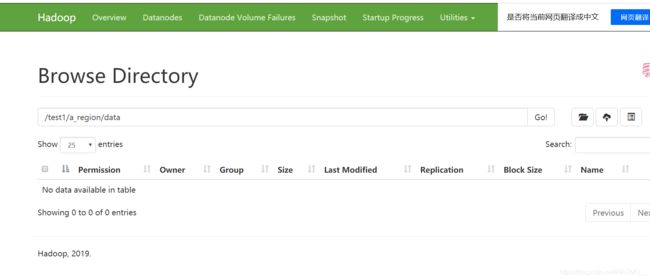

已知一堆sql导入数据库。

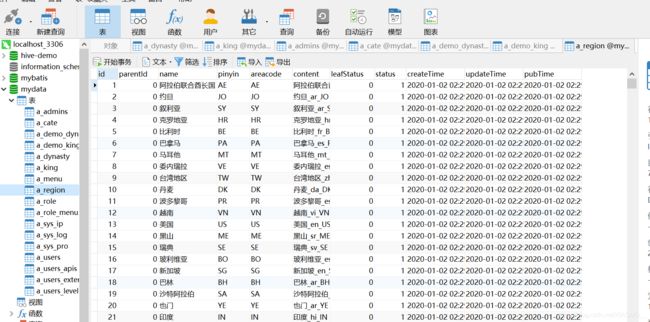

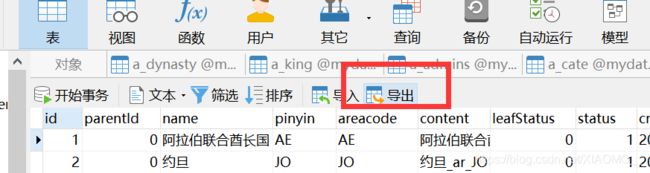

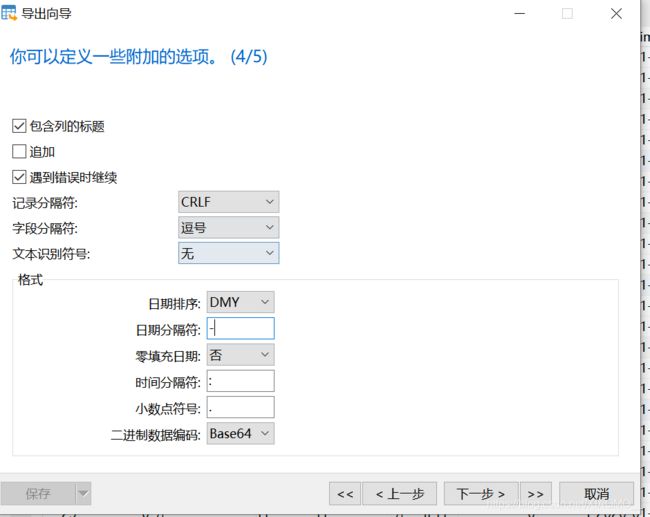

Navite导出成文本

导为txt文件成功

导成结构,并修改成hive能看懂的sql

给hive中新建数据库并切换到数据库中:

hive> create database test1; OK Time taken: 2.757 seconds hive> show databases; OK default my_1 my_2 mydata test test1 test_1 Time taken: 0.122 seconds, Fetched: 7 row(s) hive> use test1; OK Time taken: 0.105 seconds

创表a_users:

CREATE TABLE `a_users` ( `id` int COMMENT '主键;自动递增', `regionId` int COMMENT '地区id', `parentId` int COMMENT '上一级,推荐Id', `level` int COMMENT '第几级', `email` varchar(255) COMMENT '邮箱', `password` varchar(255) COMMENT '密码', `nickName` varchar(255) COMMENT '昵称', `trueName` varchar(255) COMMENT '真实姓名', `qq` varchar(255) COMMENT 'qq', `phone` varchar(255) COMMENT '电话', `weiXin` varchar(255) COMMENT '微信', `birthday` timestamp COMMENT '生日', `education` tinyint COMMENT '最高学历;\r\n0:未知,1:小学,2:初中,3:高中,4:中专,5:大专,6:本科,7:研究生,8:博士生;9:其他', `loginCount` int COMMENT '登陆次数', `failedCount` int COMMENT '密码错误次数', `failedTime` timestamp COMMENT '失败次数', `address` varchar(255) COMMENT '详细地址', `photoPath` varchar(255) COMMENT '头像', `recomCode` varchar(255) COMMENT '邀请码', `souPass` varchar(255) COMMENT 'smm', `emailStatus` tinyint COMMENT '邮箱状态:0:未认证;1:已认证;2:认证不通过', `phoneStatus` tinyint COMMENT '手机状态:0:未认证;1:已认证;2:认证不通过', `idcardStatus` tinyint COMMENT '身份证状态:0:未认证;1:认证通过;2:认证不通过', `sex` tinyint COMMENT '性别:0:无,1:男;2:女', `usersType` tinyint COMMENT '用户类别:0:学生,1:老师,2:辅导员', `status` tinyint COMMENT '状态:0:禁用,1:启用', `createTime` timestamp COMMENT '创建时间', `updateTime` timestamp COMMENT '更新时间', `lastLoginTime` timestamp COMMENT '发布时间:用来排序' ) -- 记录行的分隔符 row format delimited -- 列的分隔符 fields terminated by ',' -- 存储文件的格式;textfile是默认的,写与不写都是一样的 stored as textfile ; ;

创表a_region:

CREATE TABLE `a_region` ( `id` int COMMENT '主键;自动递增', `parentId` int COMMENT '上一级Id;0:表示国家;', `name` varchar(255)COMMENT '名称', `pinyin` varchar(255)COMMENT '拼音', `areacode` varchar(255)COMMENT '地区编码', `content` string COMMENT '内容', `leafStatus` tinyint COMMENT '叶子节点状态:0:非叶子;1:叶子', `status` tinyint COMMENT '状态:0:禁用,1:启用', `createTime` timestamp COMMENT '创建时间', `updateTime` timestamp COMMENT '更新时间', `pubTime` timestamp COMMENT '发布时间:用来排序' ) -- 记录行的分隔符 row format delimited -- 列的分隔符 fields terminated by ',' -- 存储文件的格式;textfile是默认的,写与不写都是一样的 stored as textfile ; ;

查看创建的表:

hive> > show tables; OK a_region a_users Time taken: 0.11 seconds, Fetched: 2 row(s)

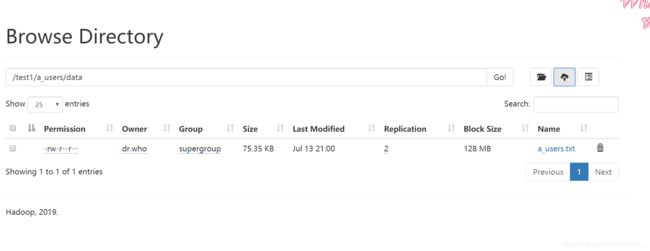

导出一个目录到hdfs上:

hive> export table a_region to '/test1/a_region' ; OK Time taken: 3.242 seconds hive> export table a_users to '/test1/a_users' ; OK Time taken: 0.546 seconds

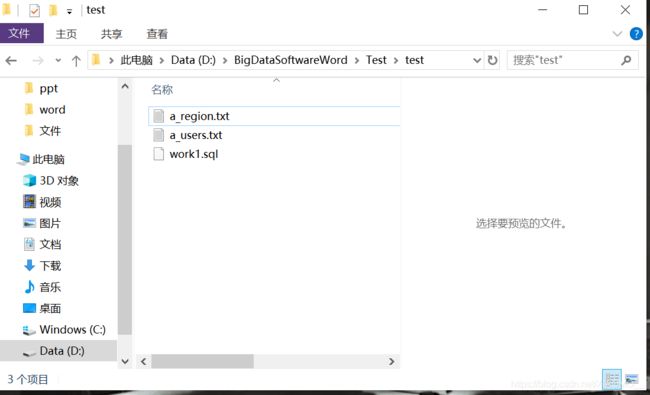

node7-1:9870 和 node7-2:9870:

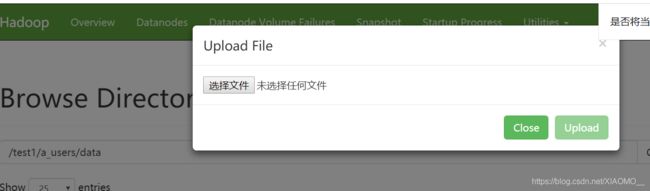

把txt文件上传到hdfs,两个

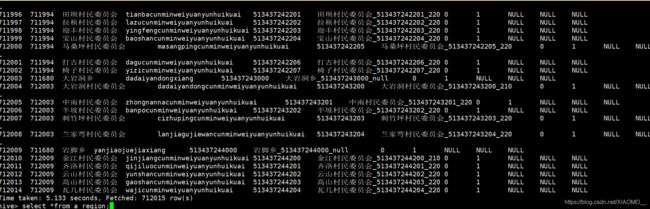

导入并查询:

导入并查询: hive> import table a_region from '/test1/a_region' ; Copying data from hdfs://jh/test1/a_region/data Copying file: hdfs://jh/test1/a_region/data/a_region.txt Loading data to table test1.a_region OK Time taken: 5.895 seconds hive>select *from a_region; 导入并查询: hive> import table a_users from '/test1/a_users' ; Copying data from hdfs://jh/test1/a_users/data Copying file: hdfs://jh/test1/a_users/data/a_users.txt Loading data to table test1.a_users OK Time taken: 0.946 seconds hive>select *from a_users;

a_region查询完成,a_users同理:

题一:-- 统计不同学历的不同人数;请列出sql 语句+截图

hive> select education,count(*) from a_users group by education ;

Query ID = root_20200713211401_6f716ab4-e6c2-4b4b-984d-9571d37b0873

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapreduce.job.reduces=

Starting Job = job_1594600282321_0001, Tracking URL = http://node7-1:8088/proxy/application_1594600282321_0001/

Kill Command = /data/hadoop/hadoop/bin/mapred job -kill job_1594600282321_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2020-07-13 21:14:33,100 Stage-1 map = 0%, reduce = 0%

2020-07-13 21:14:48,146 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.0 sec

2020-07-13 21:15:07,026 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 8.87 sec

MapReduce Total cumulative CPU time: 8 seconds 870 msec

Ended Job = job_1594600282321_0001

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 8.87 sec HDFS Read: 96758 HDFS Write: 271 SUCCESS

Total MapReduce CPU Time Spent: 8 seconds 870 msec

OK

NULL 81

0 107

1 1

2 6

3 14

4 14

5 27

6 10

7 1

8 4

9 3

Time taken: 67.004 seconds, Fetched: 11 row(s)

题二:-- 统计每天用户的注册人数;请列出sql 语句+截图

hive> select cast(createTime as date),count(*) from a_users GROUP BY cast( createTime as date);

Query ID = root_20200713211645_d85411cd-f6da-4d26-99ca-853c0a5861ed

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapreduce.job.reduces=

Starting Job = job_1594600282321_0002, Tracking URL = http://node7-1:8088/proxy/application_1594600282321_0002/

Kill Command = /data/hadoop/hadoop/bin/mapred job -kill job_1594600282321_0002

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2020-07-13 21:17:15,538 Stage-1 map = 0%, reduce = 0%

2020-07-13 21:17:31,705 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.59 sec

2020-07-13 21:17:44,766 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 8.73 sec

MapReduce Total cumulative CPU time: 8 seconds 730 msec

Ended Job = job_1594600282321_0002

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 8.73 sec HDFS Read: 96619 HDFS Write: 106 SUCCESS

Total MapReduce CPU Time Spent: 8 seconds 730 msec

OK

NULL 268

Time taken: 61.579 seconds, Fetched: 1 row(s)

题三:统计每天用户的注册人数(只统计河南的用户);

hive> select cast(createTime as date),count(*) from a_users where address like "%河南省%" GROUP BY cast( createTime as date);

Query ID = root_20200713211955_33340ba8-9656-4d32-87f9-ccbf6945206e

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapreduce.job.reduces=

Starting Job = job_1594600282321_0003, Tracking URL = http://node7-1:8088/proxy/application_1594600282321_0003/

Kill Command = /data/hadoop/hadoop/bin/mapred job -kill job_1594600282321_0003

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2020-07-13 21:20:19,162 Stage-1 map = 0%, reduce = 0%

2020-07-13 21:20:29,926 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.8 sec

2020-07-13 21:20:41,126 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 9.38 sec

MapReduce Total cumulative CPU time: 9 seconds 380 msec

Ended Job = job_1594600282321_0003

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 9.38 sec HDFS Read: 97826 HDFS Write: 104 SUCCESS

Total MapReduce CPU Time Spent: 9 seconds 380 msec

OK

NULL 7

Time taken: 48.073 seconds, Fetched: 1 row(s)

题四:-- 统计每天用户的注册人数;按照创建时间升序,每天只列出10个人;

hive> select cast(createTime as date),count(*),trueName from

> (select *, row_number() over (partition by cast(createTime as date)) as bh from a_users ) t

> where bh <11 GROUP BY cast(createTime as date),trueName;

Query ID = root_20200713212220_65a08e64-0b87-4d8d-b4bc-dbe2456713da

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapreduce.job.reduces=

Starting Job = job_1594600282321_0004, Tracking URL = http://node7-1:8088/proxy/application_1594600282321_0004/

Kill Command = /data/hadoop/hadoop/bin/mapred job -kill job_1594600282321_0004

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2020-07-13 21:22:38,447 Stage-1 map = 0%, reduce = 0%

2020-07-13 21:22:53,496 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.79 sec

2020-07-13 21:23:06,031 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 9.96 sec

MapReduce Total cumulative CPU time: 9 seconds 960 msec

Ended Job = job_1594600282321_0004

Launching Job 2 out of 2

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapreduce.job.reduces=

Starting Job = job_1594600282321_0005, Tracking URL = http://node7-1:8088/proxy/application_1594600282321_0005/

Kill Command = /data/hadoop/hadoop/bin/mapred job -kill job_1594600282321_0005

Hadoop job information for Stage-2: number of mappers: 1; number of reducers: 1

2020-07-13 21:23:34,372 Stage-2 map = 0%, reduce = 0%

2020-07-13 21:23:46,039 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 3.12 sec

2020-07-13 21:23:56,749 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 7.29 sec

MapReduce Total cumulative CPU time: 7 seconds 290 msec

Ended Job = job_1594600282321_0005

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 9.96 sec HDFS Read: 97476 HDFS Write: 224 SUCCESS

Stage-Stage-2: Map: 1 Reduce: 1 Cumulative CPU: 7.29 sec HDFS Read: 8662 HDFS Write: 243 SUCCESS

Total MapReduce CPU Time Spent: 17 seconds 250 msec

OK

NULL 6

NULL 1 嵇荟茹

NULL 1 李瑶

NULL 1 牛真真

NULL 1 王向旗

Time taken: 99.565 seconds, Fetched: 5 row(s)