深蓝学院视觉slam十四讲第7章作业

目录

-

-

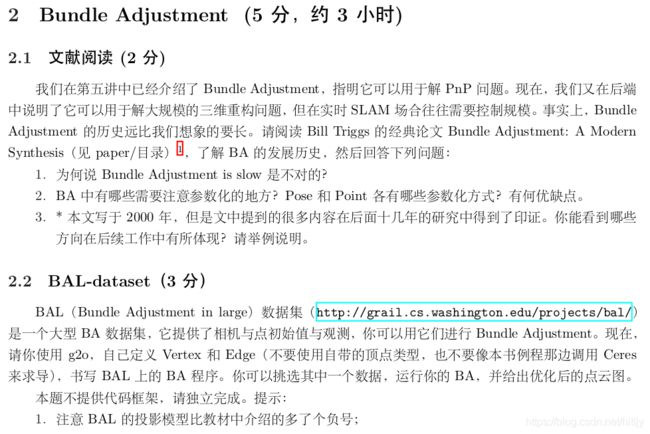

- 2. Bundle Adjustment

-

- 2.1 文献阅读

- 2.2 BAL-dataset

- 3. 直接法的 Bundle Adjustment

-

- 3.1 数学模型

- 3.2 实现

-

2. Bundle Adjustment

2.1 文献阅读

[1] Bill Triggs, Philip Mclauchlan, Richard Hartley, Andrew Fitzgibbon. Bundle Ajustment — A

Modern Synthesis.

1.矩阵H具有稀疏性,对角块矩阵有加速计算技巧

2.相机内参 位姿 3D点坐标 投影后的像素坐标;

pose: 欧拉角/四元数/旋转矩阵/李代数+平移坐标

point:齐次和齐次

3.文中提到的“网络图”即“图优化”当下几乎代替了以往的滤波算法

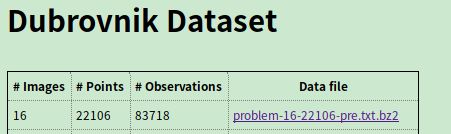

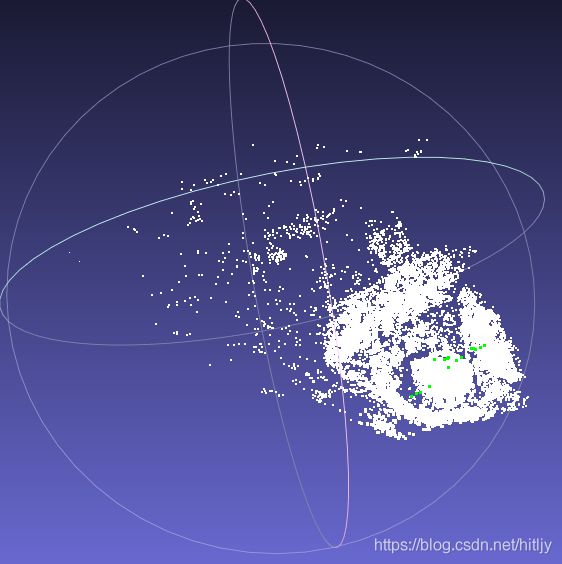

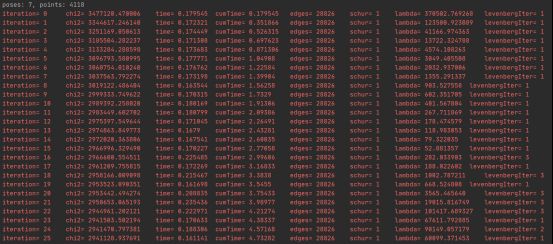

2.2 BAL-dataset

/// 位姿加相机内参的顶点,9维,前三维为so3,接下去为t, f, k1, k2

class VertexPoseAndIntrinsics : public g2o::BaseVertex<9, PoseAndIntrinsics> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

VertexPoseAndIntrinsics() {}

virtual void setToOriginImpl() override {

_estimate = PoseAndIntrinsics();

}

virtual void oplusImpl(const double *update) override {

_estimate.rotation = SO3d::exp(Vector3d(update[0], update[1], update[2])) * _estimate.rotation;

_estimate.translation += Vector3d(update[3], update[4], update[5]);

_estimate.focal += update[6];

_estimate.k1 += update[7];

_estimate.k2 += update[8];

}

/// 根据估计值投影一个点

Vector2d project(const Vector3d &point) {

Vector3d pc = _estimate.rotation * point + _estimate.translation;

pc = -pc / pc[2];

double r2 = pc.squaredNorm();

double distortion = 1.0 + r2 * (_estimate.k1 + _estimate.k2 * r2);

return Vector2d(_estimate.focal * distortion * pc[0],

_estimate.focal * distortion * pc[1]);

}

virtual bool read(istream &in) {}

virtual bool write(ostream &out) const {}

};

这题基本就是把示例代码看懂跑了一下,示例中定义Vertex为9维包括:3维旋转、3维平移、1维焦距、2维畸变参数,本想自己写一下雅克比但畸变参数中包括 X c X_c Xc、 Y c Y_c Yc相关项求导起来太麻烦,个人感觉后面的焦距和畸变参数没必要包含在Vertex中,毕竟是给定的相机参数没必要包含在优化中

3. 直接法的 Bundle Adjustment

3.1 数学模型

1.计算一个窗口中灰度误差之和

∑ W ∥ I ( p i ) − I j ( π ( K T j p i ) ) ∥ 2 2 \sum_{W}\left\|I\left(\mathbf{p}_{i}\right)-I_{j}\left(\pi\left(\mathbf{K} \mathbf{T}_{j} \mathbf{p}_{i}\right)\right)\right\|_{2}^{2} W∑∥I(pi)−Ij(π(KTjpi))∥22

2.关联相机位姿(6维)和3D点坐标(3维)2个优化变量

3.

∂ u ∂ P = [ ∂ u ∂ X ∂ u ∂ Y ∂ u ∂ Z ∂ v ∂ X ∂ v ∂ Y ∂ v ∂ Z ] = [ f x Z 0 − f x X Z 2 0 f y Z − f y Y Z 2 ] \frac{\partial \boldsymbol{u}}{\partial \boldsymbol{P}^{}}=\left[\begin{array}{ccc} \frac{\partial u}{\partial X^{}} & \frac{\partial u}{\partial Y^{}} & \frac{\partial u}{\partial Z^{}} \\ \frac{\partial v}{\partial X^{}} & \frac{\partial v}{\partial Y^{}} & \frac{\partial v}{\partial Z^{}} \end{array}\right]= \left[\begin{array}{ccc} \frac{f_{x}}{Z^{}} & 0 & -\frac{f_{x} X^{}}{Z^{ 2}} \\ 0 & \frac{f_{y}}{Z^{}} & -\frac{f_{y} Y^{}}{Z^{ 2}} \end{array}\right] ∂P∂u=[∂X∂u∂X∂v∂Y∂u∂Y∂v∂Z∂u∂Z∂v]=[Zfx00Zfy−Z2fxX−Z2fyY]

∂ P ∂ δ ξ = [ I , − P ∧ ] \frac{\partial \boldsymbol{P}}{\partial \delta \boldsymbol{\xi}}=\left[\boldsymbol{I},-\boldsymbol{P}^{\wedge}\right] ∂δξ∂P=[I,−P∧]

∂ u ∂ δ ξ = [ f x Z 0 − f x X Z 2 − f x X Y Z 2 f x + f x X 2 Z 2 − f x Y Z 0 f y Z − f y Y Z 2 − f y − f y Y 2 Z 2 f y X Y Z 2 f y X Z ] \frac{\partial \boldsymbol{u}}{\partial \delta \boldsymbol{\xi}}=\left[\begin{array}{ccccc} \frac{f_{x}}{Z} & 0 & -\frac{f_{x} X}{Z^{2}} & -\frac{f_{x} X Y}{Z^{2}} & f_{x}+\frac{f_{x} X^{2}}{Z^{2}} & -\frac{f_{x} Y}{Z} \\ 0 & \frac{f_{y}}{Z} & -\frac{f_{y} Y}{Z^{2}} & -f_{y}-\frac{f_{y} Y^{2}}{Z^{2}} & \frac{f_{y} X Y}{Z^{2}} & \frac{f_{y} X}{Z} \end{array}\right] ∂δξ∂u=[Zfx00Zfy−Z2fxX−Z2fyY−Z2fxXY−fy−Z2fyY2fx+Z2fxX2Z2fyXY−ZfxYZfyX]

对位姿的雅克比:

J = − ∂ I 2 ∂ u ∂ u ∂ δ ξ J=-\frac{\partial \boldsymbol{I}_{2}}{\partial \boldsymbol{u}} \frac{\partial \boldsymbol{u}}{\partial \delta \boldsymbol{\xi}} J=−∂u∂I2∂δξ∂u

对3D点坐标的雅克比:

J = − ∂ I 2 ∂ u ∂ u ∂ P J=-\frac{\partial \boldsymbol{I}_{2}}{\partial \boldsymbol{u}} \frac{\partial \boldsymbol{u}}{\partial \boldsymbol{P}} J=−∂u∂I2∂P∂u

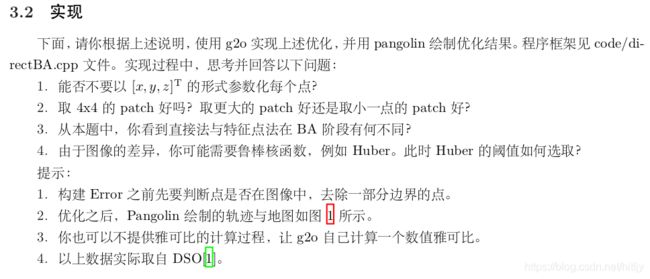

3.2 实现

1.可以,题中提到的逆深度参数化

1.可以,题中提到的逆深度参数化

2.结果可以,更大增加计算量,更小鲁棒性差

3.直接法计算光度误差,特征点法计算重投影误差,误差形式和雅克比形式都不一样

4.可以先计算一步得到总误差除以点数获得一个平均误差值,再根据该值设置

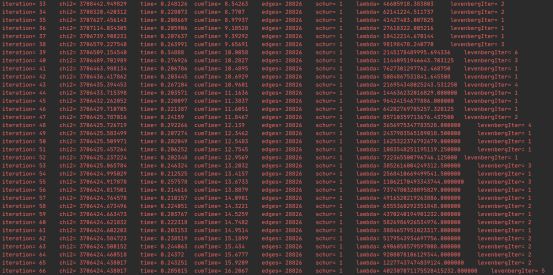

注释掉手写雅克比,采用g2o自动计算雅克比不知道为什么误差一直降不下来

// 16x1 error, which is the errors in patch

typedef Eigen::Matrix<double,16,1> Vector16d;

class EdgeDirectProjection : public g2o::BaseBinaryEdge<16, Vector16d, g2o::VertexSBAPointXYZ, VertexSophus> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

EdgeDirectProjection(float *color, cv::Mat &target) {

this->origColor = color;

this->targetImg = target;

}

~EdgeDirectProjection() {}

virtual void computeError() override {

// TODO START YOUR CODE HERE

// compute projection error ...

// 每条边包含的一个位姿结点,一个路标结点,其设置结点时的索引与计算error时的结点索引一致,即setVertex(0,…)和_Vertex[0]对应

const g2o::VertexSBAPointXYZ *vertexPw = static_cast<const g2o::VertexSBAPointXYZ * >(vertex(0));

const VertexSophus *vertexTcw = static_cast<const VertexSophus * >(vertex(1));

Eigen::Vector3d Pc = vertexTcw->estimate() * vertexPw->estimate();

float u = Pc[0] / Pc[2] * fx + cx;

float v = Pc[1] / Pc[2] * fy + cy;

if (u - 2 < 0 || v - 2 <0 || u+1 >= targetImg.cols || v + 1 >= targetImg.rows) {

//边界点的处理(error设为0,setLevel(1))

this->setLevel(1);

for (int n = 0; n < 16; n++)

_error[n] = 0;

} else {

for (int i = -2; i < 2; i++) {

for (int j = -2; j < 2; j++) {

int num = 4 * i + j + 10;

_error[num] = origColor[num] - GetPixelValue(targetImg, u + i, v + j);

}

}

}

// END YOUR CODE HERE

}

virtual void linearizeOplus() override {

if (level() == 1) {

_jacobianOplusXi = Eigen::Matrix<double, 16, 3>::Zero();

_jacobianOplusXj = Eigen::Matrix<double, 16, 6>::Zero();

return;

}

const g2o::VertexSBAPointXYZ *vertexPw = static_cast<const g2o::VertexSBAPointXYZ * >(vertex(0));

const VertexSophus *vertexTcw = static_cast<const VertexSophus * >(vertex(1));

Eigen::Vector3d Pc = vertexTcw->estimate() * vertexPw->estimate();

float x = Pc[0];

float y = Pc[1];

float z = Pc[2];

float inv_z = 1.0 / z;

float inv_z2 = inv_z * inv_z;

float u = x * inv_z * fx + cx;

float v = y * inv_z * fy + cy;

Eigen::Matrix<double, 2, 3> J_Puv_Pc;

J_Puv_Pc(0, 0) = fx * inv_z;

J_Puv_Pc(0, 1) = 0;

J_Puv_Pc(0, 2) = -fx * x * inv_z2;

J_Puv_Pc(1, 0) = 0;

J_Puv_Pc(1, 1) = fy * inv_z;

J_Puv_Pc(1, 2) = -fy * y * inv_z2;

Eigen::Matrix<double, 3, 6> J_Pc_kesi = Eigen::Matrix<double, 3, 6>::Zero();

J_Pc_kesi(0, 0) = 1;

J_Pc_kesi(0, 4) = z;

J_Pc_kesi(0, 5) = -y;

J_Pc_kesi(1, 1) = 1;

J_Pc_kesi(1, 3) = -z;

J_Pc_kesi(1, 5) = x;

J_Pc_kesi(2, 2) = 1;

J_Pc_kesi(2, 3) = y;

J_Pc_kesi(2, 4) = -x;

Eigen::Matrix<double, 1, 2> J_I_Puv;

for (int i = -2; i < 2; i++)

for (int j = -2; j < 2; j++) {

int num = 4 * i + j + 10;

J_I_Puv(0, 0) =

(GetPixelValue(targetImg, u + i + 1, v + j) - GetPixelValue(targetImg, u + i - 1, v + j)) / 2;

J_I_Puv(0, 1) =

(GetPixelValue(targetImg, u + i, v + j + 1) - GetPixelValue(targetImg, u + i, v + j - 1)) / 2;

_jacobianOplusXi.block<1, 3>(num, 0) = -J_I_Puv * J_Puv_Pc * vertexTcw->estimate().rotationMatrix();

_jacobianOplusXj.block<1, 6>(num, 0) = -J_I_Puv * J_Puv_Pc * J_Pc_kesi;

}

}

virtual bool read(istream &in) {}

virtual bool write(ostream &out) const {}

private:

cv::Mat targetImg; // the target image

float *origColor = nullptr; // 16 floats, the color of this point

};

// build optimization problem

typedef g2o::BlockSolver<g2o::BlockSolverTraits<6, 3>> DirectBlock; // 求解的向量是6*1的

typedef g2o::LinearSolverDense<DirectBlock::PoseMatrixType> LinearSolverType; // 线性求解器类型

// 梯度下降方法,可以从GN, LM, DogLeg 中选

auto solver = new g2o::OptimizationAlgorithmLevenberg(

g2o::make_unique<DirectBlock>(g2o::make_unique<LinearSolverType>()));

g2o::SparseOptimizer optimizer;

optimizer.setAlgorithm(solver);

optimizer.setVerbose(true);

// TODO add vertices, edges into the graph optimizer

// START YOUR CODE HERE

// 每条边包含的一个位姿结点,一个路标结点,其设置结点时的索引与计算error时的结点索引一致,即setVertex(0,…)和_Vertex[0]对应

for (int k = 0; k < points.size(); ++k) {

g2o::VertexSBAPointXYZ* pPoint = new g2o::VertexSBAPointXYZ();

pPoint->setId(k);

pPoint->setEstimate(points[k]);

pPoint->setMarginalized(true);//使用稀疏优化时手动设置需要边缘化的顶点 优化目标仅为位姿结点,所以路标结点需要边缘化

optimizer.addVertex(pPoint);

}

for (int j = 0; j < poses.size(); j++) {

VertexSophus *vertexTcw = new VertexSophus();

vertexTcw->setEstimate(poses[j]);

// 两种节点的id顺序保持连续

vertexTcw->setId(j + points.size());

optimizer.addVertex(vertexTcw);

}

for (int c = 0; c < poses.size(); c++)

for (int p = 0; p < points.size(); p++) {

EdgeDirectProjection *edge = new EdgeDirectProjection(color[p], images[c]);

// 先point后pose

edge->setVertex(0, dynamic_cast<g2o::VertexSBAPointXYZ *>(optimizer.vertex(p)));

edge->setVertex(1, dynamic_cast<VertexSophus *>(optimizer.vertex(c + points.size())));

// 信息矩阵可直接设置为 error_dim*error_dim 的单位阵

edge->setInformation(Eigen::Matrix<double, 16, 16>::Identity());

// 设置Huber核函数,减小错误点影响,加强鲁棒性

g2o::RobustKernelHuber *rk = new g2o::RobustKernelHuber;

rk->setDelta(1.0);

edge->setRobustKernel(rk);

optimizer.addEdge(edge);

}

// END YOUR CODE HERE

// perform optimization

optimizer.initializeOptimization(0);

optimizer.optimize(200);

// TODO fetch data from the optimizer

// START YOUR CODE HERE

for (int c = 0; c < poses.size(); c++)

for (int p = 0; p < points.size(); p++) {

Eigen::Vector3d Pw = dynamic_cast<g2o::VertexSBAPointXYZ *>(optimizer.vertex(p))->estimate();

points[p] = Pw;

Sophus::SE3d Tcw = dynamic_cast<VertexSophus *>(optimizer.vertex(c + points.size()))->estimate();

poses[c] = Tcw;

}