《PyTorch深度学习实践》 (PyTorch Deep Learning Practice), complete series · Hongpu Liu · PyTorch Gradient Descent (2)

Contents

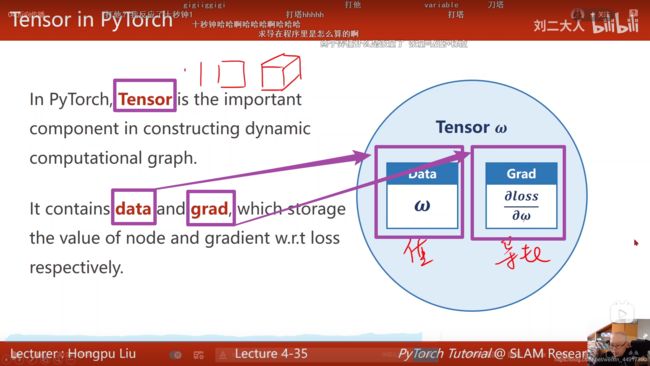

4. Backpropagation

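#! /usr/bin/env python
# -*- coding: utf-8 -*-
'''
============================================
Date: 2021.8.13
Author: 手可摘星辰不去高声语
File: 04-PyTorch梯度下降法.py
Description:
1. Ctrl + Enter: insert a new line below without moving the cursor
2. Shift + Enter: insert a new line below and move to the start of it
3. Shift + Enter: start a new line from any position
4. Ctrl + D: duplicate the current line downward
5. Ctrl + Y: delete the current line
6. Ctrl + Shift + V: open the clipboard history
7. Ctrl + /: comment or uncomment the selected lines
8. Ctrl + E: open recently accessed files
9. Double Shift + /: search everywhere
============================================
'''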

import matplotlib.pyplot as plt
import torch

# Prepare the training set
x_data = [0.9, 1.8, 4.1]
y_data = [2.9, 6.1, 9.2]

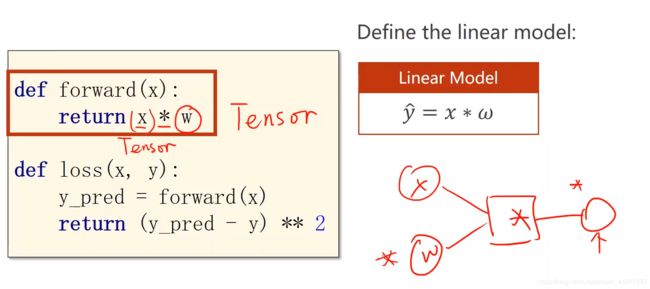

def forward(x_train, w_train):
    # Linear model without a bias term: y_pred = x * w
    return x_train * w_train


def loss(x_train, y_train, w_train):
    # Squared error for a single sample
    y_pred = forward(x_train, w_train)
    return (y_pred - y_train) ** 2


if __name__ == '__main__':
    loss_list = []
    epoch_list = []

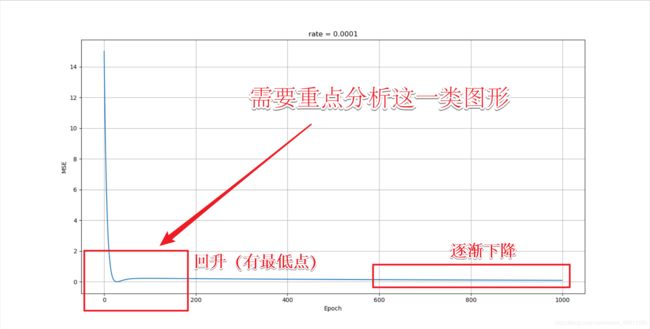

    # Learning rate
    rate = 0.001
    # Initial weight; requires_grad=True tells autograd to track it
    w = torch.Tensor([10.0])
    w.requires_grad = True

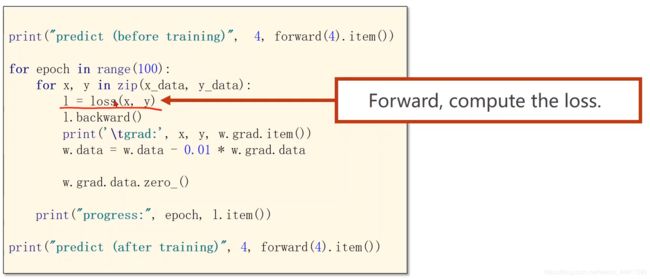

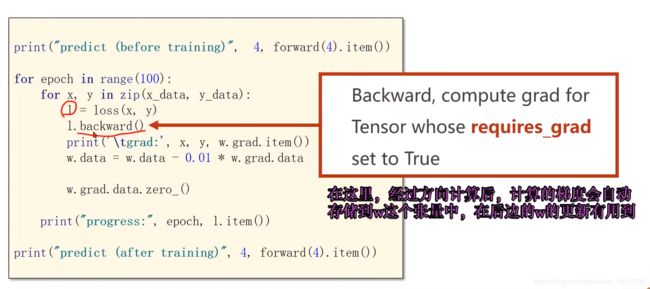

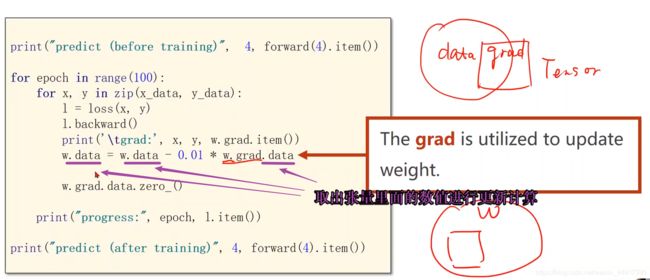

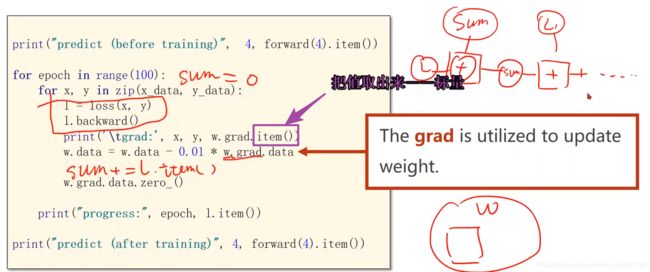

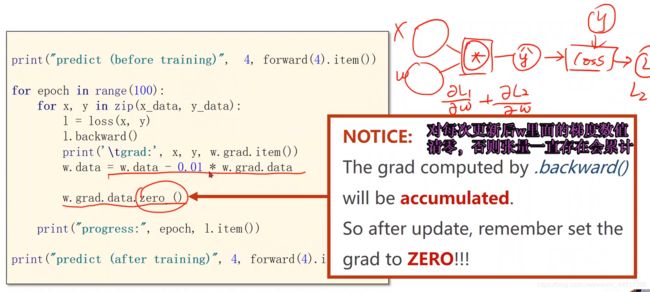

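    for epoch in range(100):
        for x, y in zip(x_data, y_data):
            # The forward pass builds the graph; backward() fills w.grad
            l = loss(x, y, w)
            l.backward()
            print('\tgrad:', x, y, w.grad.item())
            # Update through .data so the step is not recorded in the graph
            w.data = w.data - rate * w.grad.data
            # Clear the gradient; otherwise the next backward() accumulates into it
            w.grad.data.zero_()
        epoch_list.append(epoch)
        # l is the loss of the last sample in this epoch, not the mean over all samples
        loss_list.append(l.item())
        print('progress:', epoch, l.item())

    plt.plot(epoch_list, loss_list)
    plt.title("rate = {}".format(rate))
    plt.xlabel("Epoch")
    plt.ylabel("Loss")
    plt.grid()
    plt.show()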
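
As a sanity check on what `l.backward()` computes in the loop above, the sketch below compares the autograd gradient for the first sample against the hand-derived expression 2 * x * (x * w - y), and then shows why the gradient has to be zeroed after each update. It is only an illustration using the script's own values (x = 0.9, y = 2.9, w = 10.0). The names `autograd_grad`, `analytic_grad`, and `accumulated_grad` are introduced here and are not part of the original script.

```python
import torch

# First training sample and the initial weight from the script above.
x, y = 0.9, 2.9
w = torch.tensor([10.0], requires_grad=True)

# Autograd gradient of the per-sample loss (x*w - y)^2 with respect to w.
loss_value = (x * w - y) ** 2
loss_value.backward()
autograd_grad = w.grad.item()

# Hand-derived gradient: d/dw (x*w - y)^2 = 2*x*(x*w - y).
analytic_grad = 2 * x * (x * w.item() - y)
print(autograd_grad, analytic_grad)  # both are approximately 10.98

# A second backward pass without zeroing adds to the stored gradient.
loss_again = (x * w - y) ** 2
loss_again.backward()
accumulated_grad = w.grad.item()
print(accumulated_grad)  # approximately twice the single-pass gradient

# Zeroing gives the next sample a clean gradient buffer.
w.grad.zero_()
```

The doubling in the second print is the reason the training loop calls `w.grad.data.zero_()` after every update.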

Homework:

#! /usr/bin/env python
# -*- coding: utf-8 -*-
'''
============================================
Date: 2021.8.14
Author: 手可摘星辰不去高声语
File: 04-PyTorch梯度下降法2.py
Description: use gradient descent to find the parameters of y = x*x + x*2 + 1


============================================

'''

import matplotlib.pyplot as plt
import torch

# Prepare the training set
# The assumed quadratic function is y = x*x + x*2 + 1
# For this function, w1 = 1, w2 = 2, b = 1
x_data = [0, 1, 2, 3, 4]
y_data = [1.001, 3.998, 9.002, 15.999, 25.001]

def forward(x_train, w1_train, w2_train, b_train):
    # Quadratic model: y_pred = w1 * x^2 + w2 * x + b
    return w1_train*(x_train**2) + w2_train*x_train + b_train


def loss(x_train, y_train, w1_train, w2_train, b_train):
    # Squared error for a single sample
    y_pred = forward(x_train, w1_train, w2_train, b_train)
    return (y_pred - y_train) ** 2


if __name__ == '__main__':
    loss_list = []
    epoch_list = []

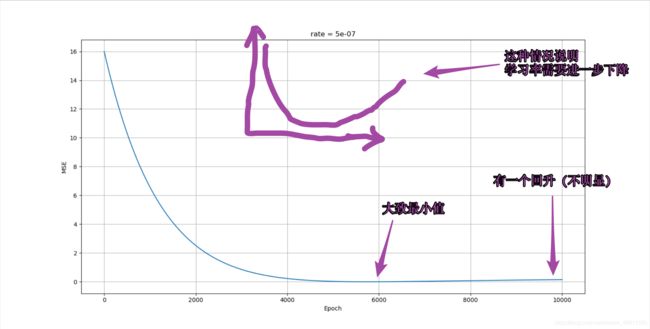

    # Learning rate
    rate = 0.0000005
    # Initial parameter values
    b = torch.Tensor([1.0])
    w1 = torch.Tensor([1.0])
    w2 = torch.Tensor([1.0])
    # Track gradients for all three parameters
    w1.requires_grad = True
    w2.requires_grad = True
    b.requires_grad = True

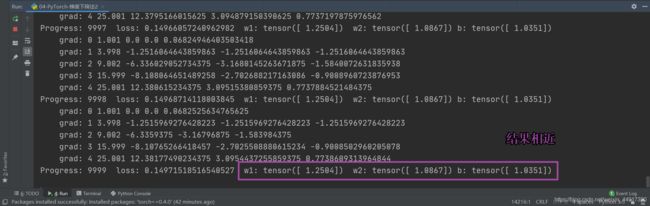

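    for epoch in range(10000):
        for x, y in zip(x_data, y_data):
            # The forward pass builds the graph; backward() fills the .grad fields
            l = loss(x, y, w1, w2, b)
            l.backward()
            print('\tgrad:', x, y, w1.grad.item(), w2.grad.item(), b.grad.item())
            # Update through .data so the steps are not recorded in the graph
            w1.data = w1.data - rate * w1.grad.data
            w2.data = w2.data - rate * w2.grad.data
            b.data = b.data - rate * b.grad.data
            # Keep references to the current parameter values for logging
            w1_pro = w1.data
            w2_pro = w2.data
            b_pro = b.data
            # Clear the gradients; otherwise the next backward() accumulates into them
            w1.grad.data.zero_()
            w2.grad.data.zero_()
            b.grad.data.zero_()
        epoch_list.append(epoch)
        # l is the loss of the last sample in this epoch, not the mean over all samples
        loss_list.append(l.item())
        print('Progress:', epoch,
              " loss:", l.item(),
              " w1:", w1_pro.data,
              " w2:", w2_pro.data,
              " b:", b_pro.data)

    plt.plot(epoch_list, loss_list)
    plt.title("rate = {}".format(rate))
    plt.xlabel("Epoch")
    plt.ylabel("Loss")
    plt.grid()
    plt.show()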
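
For reference, the parameters this homework is trying to recover can also be obtained in closed form. The sketch below is not the gradient-descent approach used above. It solves the same quadratic fit with `torch.linalg.lstsq` (assuming a PyTorch version that provides it), so the values printed by the training loop can be compared against a known target. The names `A`, `solution`, `w1_ref`, `w2_ref`, and `b_ref` are introduced here for illustration only.

```python
import torch

# Same training set as the homework script.
x_data = [0, 1, 2, 3, 4]
y_data = [1.001, 3.998, 9.002, 15.999, 25.001]

x = torch.tensor(x_data, dtype=torch.float64)
y = torch.tensor(y_data, dtype=torch.float64).unsqueeze(1)  # shape (5, 1)

# Design matrix with columns x^2, x, 1, matching forward() in the script.
A = torch.stack([x ** 2, x, torch.ones_like(x)], dim=1)  # shape (5, 3)

# Least-squares solution of A @ [w1, w2, b] ~= y.
solution = torch.linalg.lstsq(A, y).solution.squeeze(1)
w1_ref, w2_ref, b_ref = solution.tolist()
print(w1_ref, w2_ref, b_ref)  # close to 1, 2, 1
```

If the trained `w1`, `w2`, and `b` are still far from these reference values after all epochs, the learning rate or the number of epochs is a likely place to look.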

I hope someone can help answer this! Thanks ✍