Local Contrast Enhancement Based on Adaptive Logarithmic Mappings

Contents

Local Contrast Enhancement Based on Adaptive Logarithmic Mappings

1. Application Background

2. Algorithm Details

3. Implementation

4. Results

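1. Application Background

Photos taken under poor lighting tend to come out either too bright or too dark, so detail in the bright and dark regions cannot both be preserved. There are several ways to address this. One is to use a wide-dynamic-range (WDR) sensor that captures one long-exposure frame and one short-exposure frame and fuses the two, which yields an image with a relatively high dynamic range; the drawback is that a WDR sensor costs more than an ordinary sensor. The other is to process the image in software, an approach generally called tone mapping. Tone mapping can bring out detail in dark regions without losing too much information in bright regions.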

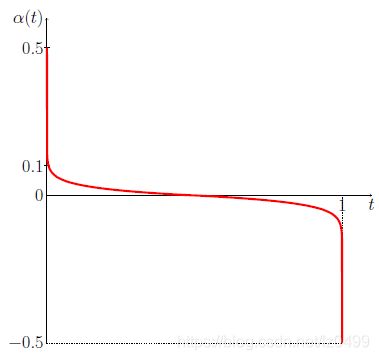

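Today I would like to share a paper, "Local Contrast Enhancement based on Adaptive Logarithmic Mappings". The main idea is to use each pixel's neighborhood to choose its tone curve: dark regions get an upward-bowed tone-mapping curve (left side of Figure 1) that raises pixel values, and bright regions get a downward-bowed curve (right side of Figure 1) that lowers them, as shown in Figure 1:

Figure 1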

2. Algorithm Details

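The main idea of the algorithm is as follows. The symbols used here follow the reference code later in this post.

For any input pixel $I(x,y)$, the output pixel $O(x,y)$ is computed as:

$$O(x,y) = M\big(I(x,y),\ T(w(x,y))\big)$$

The formula has three parts: the mapping function $M$, the weight map $w$, and the transfer function $T$.

The mapping function $M$ is a logarithm-based tone-mapping function whose main parameter is $\alpha$. In the form used by the reference code, for 8-bit intensities it is defined as:

$$M(I,\alpha)=\begin{cases}255\,\dfrac{\ln(\alpha I+1)}{\ln(255\,\alpha+1)}, & \alpha>0\\[2ex] I, & \alpha=0\\[1ex] 255-255\,\dfrac{\ln\big(|\alpha|\,(255-I)+1\big)}{\ln(255\,|\alpha|+1)}, & \alpha<0\end{cases}$$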

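This tone-mapping function guarantees that, however wide the luminance range, the brightest value always maps to 255 while the other levels change smoothly. If an image is compressed too strongly, however, it loses contrast. Figure 2 shows the mapping curves for different values of the parameter α.

Figure 2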

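- Weight mapping. The paper describes three ways to compute the weight map; I implemented only the first one, which smooths the image with a Gaussian filter and normalizes the result to [0,1]. This suppresses the influence of noise and, by using each pixel's neighborhood, lets the transfer function adaptively choose a tone-mapping curve of the appropriate strength.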

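- Transfer function. The transfer function converts a weight $w \in [0,1]$ into the curve parameter $\alpha$. In the form used by the reference code later in this post, it is defined as:

$$\alpha = T(w) = \begin{cases}0.5-0.5\,\left(\dfrac{w}{0.5}\right)^{\gamma}, & w\le 0.5\\[2ex] -\left(0.5-0.5\,\left(\dfrac{1-w}{0.5}\right)^{\gamma}\right), & w>0.5\end{cases}$$

As shown in Figure 3:

Figure 3

To ensure a smooth transition between different values of $\alpha$, $\gamma$ is usually set to 0.05.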

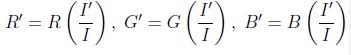

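For a color image, applying the mapping to every channel independently changes the original R/G/B ratios, because the processing is non-linear. To preserve those ratios, each channel is instead scaled by the luminance gain:

$$R' = R\,\frac{L'}{L},\qquad G' = G\,\frac{L'}{L},\qquad B' = B\,\frac{L'}{L}$$

where $L'$ is the luminance after local adaptive contrast enhancement and $L$ is the luminance of the original image.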
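As a small worked example of the ratio-preserving step, the following C sketch rescales one RGB pixel by the luminance gain. It follows the same logic as the `color_processing` function in the reference code (scale by the gain, shrink all three channels together if any exceeds 255, map black to black); the `Rgb8` type and the name `rescale_rgb` are assumptions introduced here.

```c
#include <assert.h>

typedef struct { unsigned char r, g, b; } Rgb8;  /* hypothetical pixel type */

/* Scale one pixel by lum_out / lum_in while keeping the R:G:B ratio. */
Rgb8 rescale_rgb(Rgb8 in, double lum_in, double lum_out)
{
    Rgb8 out = {0, 0, 0};
    assert(lum_out >= 0.0);

    if (lum_in <= 0.0)
        return out;                 /* no gain is defined for a black pixel */

    double gain = lum_out / lum_in;
    double r = in.r * gain, g = in.g * gain, b = in.b * gain;

    /* If any channel overflows, shrink all three by the same factor so the
     * ratio is kept instead of clipping channels independently. */
    double m = r > g ? (r > b ? r : b) : (g > b ? g : b);
    if (m > 255.0) {
        double s = 255.0 / m;
        r *= s; g *= s; b *= s;
    }

    out.r = (unsigned char)(r + 0.5);
    out.g = (unsigned char)(g + 0.5);
    out.b = (unsigned char)(b + 0.5);
    return out;
}
```

For instance, the pixel (50, 100, 150) with luminance 100 and enhanced luminance 150 has a gain of 1.5 and becomes (75, 150, 225), which keeps the 1:2:3 ratio.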

3. Implementation

Reference code:

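    #include "cv.h"
    #include "highgui.h"
    #include "cxcore.h"
    #include <stdio.h>   /* printf */
    #include <math.h>    /* log, pow, fabs */
    #include "types.h"   /* project header; assumed to provide CLIP */

    /* Fallback if types.h does not define CLIP (assumed meaning: clamp x to [lo, hi]) */
    #ifndef CLIP
    #define CLIP(x, lo, hi) ((x) < (lo) ? (lo) : ((x) > (hi) ? (hi) : (x)))
    #endif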

    /* Scale B/G/R by the luminance gain (loglocal_outimg / local_img) so that
       the original channel ratios are preserved. */
    void color_processing(IplImage *B, IplImage *G, IplImage *R,
                          IplImage *local_img, IplImage *loglocal_outimg,
                          int w, int h)
    {
        float factor, rr, gg, bb, outmax;
        int i, j;

        for (i = 0; i < h; i++)
        {
            for (j = 0; j < w; j++)
            {
                if (((uchar*)(local_img->imageData+i*local_img->widthStep))[j] != 0)
                {
                    /* gain = enhanced luminance / original luminance */
                    factor = (float)(((uchar*)(loglocal_outimg->imageData+i*loglocal_outimg->widthStep))[j])/(float)(((uchar*)(local_img->imageData+i*local_img->widthStep))[j]);
                    rr = ((uchar*)(R->imageData+i*R->widthStep))[j]*factor;
                    gg = ((uchar*)(G->imageData+i*G->widthStep))[j]*factor;
                    bb = ((uchar*)(B->imageData+i*B->widthStep))[j]*factor;

                    /* If any channel overflows, shrink all three together */
                    outmax = (rr > gg)?((rr > bb)?(rr):(bb)):((gg > bb)?(gg):(bb));
                    if (outmax > 255)
                    {
                        rr *= 255.0f/outmax;
                        gg *= 255.0f/outmax;
                        bb *= 255.0f/outmax;
                    }
                    ((uchar*)(R->imageData+i*R->widthStep))[j] = CLIP((int)(rr+0.5),0,255);
                    ((uchar*)(G->imageData+i*G->widthStep))[j] = CLIP((int)(gg+0.5),0,255);
                    ((uchar*)(B->imageData+i*B->widthStep))[j] = CLIP((int)(bb+0.5),0,255);
                }
                else
                {
                    /* Zero luminance: no gain is defined, output black */
                    ((uchar*)(R->imageData+i*R->widthStep))[j] = 0;
                    ((uchar*)(G->imageData+i*G->widthStep))[j] = 0;
                    ((uchar*)(B->imageData+i*B->widthStep))[j] = 0;
                }
            }
        }
    }

    /* Per-pixel adaptive log mapping: blur the luminance to get a weight in [0,1],
       convert the weight to a curve parameter alpha, then apply the log curve. */
    void loglocal_correction(IplImage *local_img, IplImage *loglocal_outimg, float sigma, int w, int h, float gammalog)
    {
        int i, j, aai;
        float pix, in, aag, aagmax, alpha;
        IplImage *local_img_normalize, *mask, *alphavalue;

        local_img_normalize = cvCreateImage(cvSize(w,h),IPL_DEPTH_32F,1);
        mask = cvCreateImage(cvSize(w,h),IPL_DEPTH_32F,1);
        alphavalue = cvCreateImage(cvSize(w,h),IPL_DEPTH_32F,1);
        cvZero(local_img_normalize);
        cvZero(mask);
        cvZero(alphavalue);

    #if 0
        /* Alternative normalization: divide by 255 instead of min-max scaling */
        for (i = 0; i < h; i++)
        {
            for (j = 0; j < w; j++)
            {
                pix = ((uchar*)(local_img->imageData+i*local_img->widthStep))[j]/255.0;
                ((float*)(local_img_normalize->imageData+i*local_img_normalize->widthStep))[j] = pix;
            }
        }
    #endif

        /* Weight map: normalize to [0,1], then smooth with a 5x5 Gaussian */
        cvNormalize(local_img,local_img_normalize,0.0f,1.0f,CV_MINMAX,NULL);
        cvSmooth(local_img_normalize,mask,CV_GAUSSIAN,5,5,sigma,0);

        /* Transfer function: weight -> alpha in [-0.5, 0.5] */
        for (i = 0; i < h; i++)
        {
            for (j = 0; j < w; j++)
            {
                pix = ((float*)(mask->imageData+i*mask->widthStep))[j];
                if (pix <= 0.5)
                    ((float*)(alphavalue->imageData+i*alphavalue->widthStep))[j] = 0.5-0.5*pow((pix/0.5),gammalog);
                else
                    ((float*)(alphavalue->imageData+i*alphavalue->widthStep))[j] = -(0.5-0.5*pow(((1-pix)/0.5),gammalog));
            }
        }

        /* Apply the log curve selected by alpha; if/else-if/else guarantees aag is always set */
        for (i = 0; i < h; i++)
        {
            for (j = 0; j < w; j++)
            {
                alpha = ((float*)(alphavalue->imageData+i*alphavalue->widthStep))[j];
                in = (float)((uchar*)(local_img->imageData+i*local_img->widthStep))[j];
                if (alpha > 0)
                {
                    /* dark neighborhood: raise the pixel value */
                    aagmax = 255.0f/log(alpha*255+1);
                    aag = aagmax*log(alpha*in+1);
                }
                else if (alpha < 0)
                {
                    /* bright neighborhood: lower the pixel value */
                    aagmax = 255.0f/log(fabs(alpha)*255+1);
                    aag = 255.0f-aagmax*log(fabs(alpha)*(255.0f-in)+1);
                }
                else
                {
                    aag = in;
                }
                aai = (int)(aag+0.5);
                ((uchar*)(loglocal_outimg->imageData+i*loglocal_outimg->widthStep))[j] = CLIP(aai,0,255);
            }
        }

        cvReleaseImage(&local_img_normalize);
        cvReleaseImage(&mask);
        cvReleaseImage(&alphavalue);
    }

    /* Luminance estimate: rounded per-pixel mean of the B, G and R channels */
    void Calc_aveage(IplImage *average_img,IplImage *B_img,IplImage *G_img,IplImage *R_img,int w,int h)
    {
        int row,col,R,G,B;

        for (row = 0; row < h; row++)
        {
            for (col = 0; col < w; col++)
            {
                B = ((uchar*)(B_img->imageData+row*B_img->widthStep))[col];
                G = ((uchar*)(G_img->imageData+row*G_img->widthStep))[col];
                R = ((uchar*)(R_img->imageData+row*R_img->widthStep))[col];
                /* add 0.5 after dividing so the mean is rounded to nearest */
                ((uchar*)(average_img->imageData+row*average_img->widthStep))[col] = (uchar)(((float)B+(float)G+(float)R)/3.0f+0.5f);
            }
        }
    }

    /* Linearly stretch [min, max] to [0, 255] */
    void stretch_range(IplImage *average_img,IplImage *stretch_img,int min,int max,int w,int h)
    {
        int i,j;
        int val;   /* int rather than uchar, so the clamp below is effective */

        if (max == min)
            return;

        for (i = 0; i < h; i++)
        {
            for (j = 0; j < w; j++)
            {
                val = ((uchar*)(average_img->imageData+i*average_img->widthStep))[j];
                val = (int)(255.0*(float)(val-min)/(max-min));
                val = CLIP(val,0,255);
                ((uchar*)(stretch_img->imageData+i*stretch_img->widthStep))[j] = (uchar)val;
            }
        }
    }

    int main()
    {
        double min,max;
        int w,h;
        CvPoint minloc,maxloc;
        CvSize img_size;
        IplImage *src,*RImage,*GImage,*BImage,*Average_Image,*strech_Image,*loglocal_outimg,*mergeImg;

        src = cvLoadImage("input_3.bmp",-1);
        /* cvSplit below needs a 3-channel (BGR) image */
        if (src == NULL || src->nChannels != 3)
        {
            printf("failed to load a 3-channel image from input_3.bmp\n");
            if (src != NULL)
                cvReleaseImage(&src);
            return -1;
        }
        img_size = cvGetSize(src);
        w = img_size.width;
        h = img_size.height;

        RImage = cvCreateImage(img_size,IPL_DEPTH_8U,1);
        GImage = cvCreateImage(img_size,IPL_DEPTH_8U,1);
        BImage = cvCreateImage(img_size,IPL_DEPTH_8U,1);
        strech_Image = cvCreateImage(img_size,IPL_DEPTH_8U,1);
        Average_Image = cvCreateImage(img_size,IPL_DEPTH_8U,1);
        mergeImg = cvCreateImage(img_size,IPL_DEPTH_8U,3);
        loglocal_outimg = cvCreateImage(cvSize(w,h),IPL_DEPTH_8U,1);
        cvZero(RImage);
        cvZero(GImage);
        cvZero(BImage);
        cvZero(Average_Image);
        cvZero(strech_Image);
        cvZero(mergeImg);
        cvZero(loglocal_outimg);

        cvNamedWindow("Show original image",0);
        cvShowImage("Show original image",src);

        /* Luminance = mean of B, G, R */
        cvSplit(src,BImage,GImage,RImage,0);
        Calc_aveage(Average_Image,BImage,GImage,RImage,w,h);
        //cvNamedWindow("Show average image",0);
        //cvShowImage("Show average image",Average_Image);

        /* Stretch the luminance to the full 0..255 range */
        cvMinMaxLoc(Average_Image,&min,&max,&minloc,&maxloc,NULL);
        stretch_range(Average_Image,strech_Image,(int)min,(int)max,w,h);
        //cvNamedWindow("Show stretch image",0);
        //cvShowImage("Show stretch image",strech_Image);
        printf("min:%lf, max:%lf,int_min:%d,int_max:%d minloc:(%d,%d),maxloc:(%d,%d)\n",min,max,(int)min,(int)max,minloc.x,minloc.y,maxloc.x,maxloc.y);

        /* Weight map and adaptive log mapping (sigma = 20, gamma = 0.05) */
        loglocal_correction(strech_Image,loglocal_outimg,20,w,h,0.05);

        /* Restore color using the gain relative to the original luminance */
        color_processing(BImage,GImage,RImage,Average_Image,loglocal_outimg,w,h);
        cvMerge(BImage,GImage,RImage,NULL,mergeImg);

        cvNamedWindow("Merge image",0);
        cvShowImage("Merge image",mergeImg);
        cvSaveImage("input_3_out.bmp",mergeImg);
        cvWaitKey(0);

        //cvDestroyWindow("Show average image");
        //cvDestroyWindow("Show stretch image");
        cvDestroyWindow("Merge image");
        cvDestroyWindow("Show original image");

        cvReleaseImage(&RImage);
        cvReleaseImage(&GImage);
        cvReleaseImage(&BImage);
        cvReleaseImage(&src);
        cvReleaseImage(&Average_Image);
        cvReleaseImage(&strech_Image);
        cvReleaseImage(&mergeImg);
        cvReleaseImage(&loglocal_outimg);
        return 0;
    }

4. Results

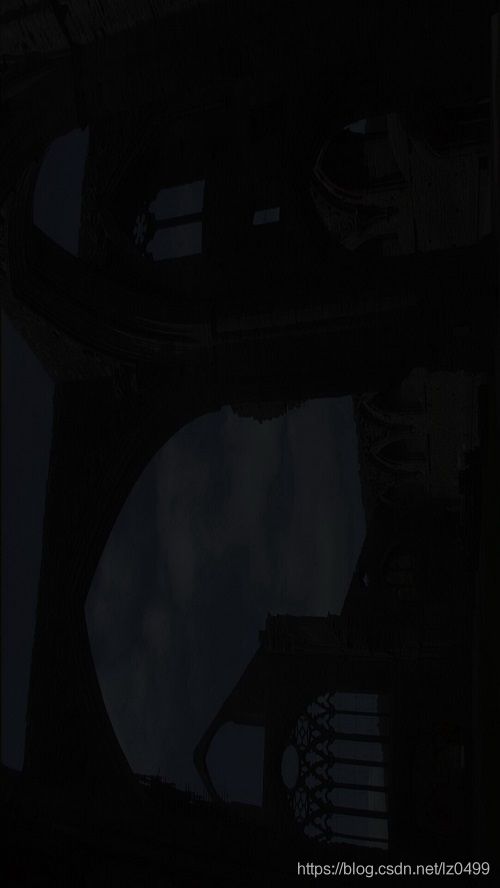

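Result on a fairly dark image:

Result on an ordinary image:

The algorithm handles fairly dark images well. On ordinary images, however, it lowers the contrast and also produces areas where the brightness transition is uneven.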