动手学深度学习 第三章 线性神经网络 练习(Pytorch版)

文章目录

- 线性神经网络

-

- 线性回归

-

- 小结

- 练习

- 线性回归的从零开始实现

-

- 小结

- 练习

- 线性回归的简洁实现

-

- 小结

- 练习

- softmax回归

-

- 小结

- 练习

- 图像分类数据集

-

- 小结

- 练习

- softmax回归的从零开始实现

-

- 小结

- 练习

- softmax回归的简洁实现

-

- 小结

- 练习

线性神经网络

线性回归

小结

- 机器学习模型中的关键要素是训练数据、损失函数、优化算法,还有模型本身。

- 矢量化使数学表达上更简洁,同时运行的更快。

- 最小化目标函数和执行极大似然估计等价。

- 线性回归模型也是一个简单的神经网络。

练习

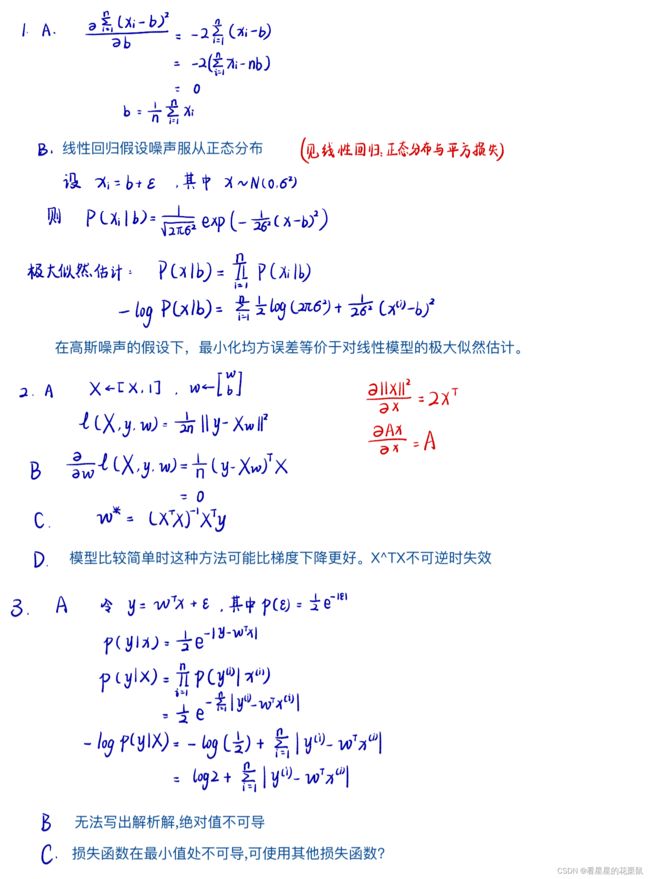

- 假设我们有一些数据 x 1 , … , x n ∈ R x_1, \ldots, x_n \in \mathbb{R} x1,…,xn∈R。我们的目标是找到一个常数 b b b,使得最小化 ∑ i ( x i − b ) 2 \sum_i (x_i - b)^2 ∑i(xi−b)2。

- 找到最优值 b b b的解析解。

- 这个问题及其解与正态分布有什么关系?

- 推导出使用平方误差的线性回归优化问题的解析解。为了简化问题,可以忽略偏置 b b b(我们可以通过向 X \mathbf X X添加所有值为1的一列来做到这一点)。

- 用矩阵和向量表示法写出优化问题(将所有数据视为单个矩阵,将所有目标值视为单个向量)。

- 计算损失对 w w w的梯度。

- 通过将梯度设为0、求解矩阵方程来找到解析解。

- 什么时候可能比使用随机梯度下降更好?这种方法何时会失效?

- 假定控制附加噪声 ϵ \epsilon ϵ的噪声模型是指数分布。也就是说, p ( ϵ ) = 1 2 exp ( − ∣ ϵ ∣ ) p(\epsilon) = \frac{1}{2} \exp(-|\epsilon|) p(ϵ)=21exp(−∣ϵ∣)

- 写出模型 − log P ( y ∣ X ) -\log P(\mathbf y \mid \mathbf X) −logP(y∣X)下数据的负对数似然。

- 你能写出解析解吗?

- 提出一种随机梯度下降算法来解决这个问题。哪里可能出错?(提示:当我们不断更新参数时,在驻点附近会发生什么情况)你能解决这个问题吗?

线性回归的从零开始实现

小结

- 我们学习了深度网络是如何实现和优化的。在这一过程中只使用张量和自动微分,不需要定义层或复杂的优化器。

- 这一节只触及到了表面知识。在下面的部分中,我们将基于刚刚介绍的概念描述其他模型,并学习如何更简洁地实现其他模型。

练习

- 如果我们将权重初始化为零,会发生什么。算法仍然有效吗?

- 假设你是乔治·西蒙·欧姆,试图为电压和电流的关系建立一个模型。你能使用自动微分来学习模型的参数吗?

- 您能基于普朗克定律使用光谱能量密度来确定物体的温度吗?

- 如果你想计算二阶导数可能会遇到什么问题?你会如何解决这些问题?

- 为什么在

squared_loss函数中需要使用reshape函数? - 尝试使用不同的学习率,观察损失函数值下降的快慢。

- 如果样本个数不能被批量大小整除,

data_iter函数的行为会有什么变化?

1 . 将权重初始化为零,算法依然有效。但网络层数加深后,在全连接的情况下,反向传播时,由于权重的对称性会导致出现隐藏神经元的对称性,是的多个隐藏神经元的作用就如同一个神经元,影响算法效果。

2.

import torch

import random

from d2l import torch as d2l

#生成数据集

def synthetic_data(r, b, num_examples):

I = torch.normal(0, 1, (num_examples, len(r)))

u = torch.matmul(I, r) + b

u += torch.normal(0, 0.01, u.shape) # 噪声

return I, u.reshape((-1, 1)) # 标量转换为向量

true_r = torch.tensor([20.0])

true_b = 0.01

features, labels = synthetic_data(true_r, true_b, 1000)

#读取数据集

def data_iter(batch_size, features, labels):

num_examples = len(features)

indices = list(range(num_examples))

random.shuffle(indices)

for i in range(0, num_examples, batch_size):

batch_indices = torch.tensor(indices[i: min(i + batch_size, num_examples)])

yield features[batch_indices],labels[batch_indices]

batch_size = 10

# 初始化权重

r = torch.normal(0,0.01,size = ((1,1)), requires_grad = True)

b = torch.zeros(1, requires_grad = True)

# 定义模型

def linreg(I, r, b):

return torch.matmul(I, r) + b

# 损失函数

def square_loss(u_hat, u):

return (u_hat - u.reshape(u_hat.shape)) ** 2/2

# 优化算法

def sgd(params, lr, batch_size):

with torch.no_grad():

for param in params:

param -= lr * param.grad/batch_size

param.grad.zero_()

lr = 0.03

num_epochs = 10

net = linreg

loss = square_loss

for epoch in range(num_epochs):

for I, u in data_iter(batch_size, features, labels):

l = loss(net(I, r, b), u)

l.sum().backward()

sgd([r, b], lr, batch_size)

with torch.no_grad():

train_l = loss(net(features, r, b), labels)

print(f'epoch {epoch + 1}, loss {float(train_l.mean()):f}')

print(r)

print(b)

print(f'r的估计误差: {true_r - r.reshape(true_r.shape)}')

print(f'b的估计误差: {true_b - b}')

epoch 1, loss 0.329473

epoch 2, loss 0.000541

epoch 3, loss 0.000050

epoch 4, loss 0.000050

epoch 5, loss 0.000050

epoch 6, loss 0.000050

epoch 7, loss 0.000050

epoch 8, loss 0.000050

epoch 9, loss 0.000050

epoch 10, loss 0.000050

tensor([[19.9997]], requires_grad=True)

tensor([0.0093], requires_grad=True)

r的估计误差: tensor([0.0003], grad_fn=)

b的估计误差: tensor([0.0007], grad_fn=)

3 . 尝试写了一下,但好像出错了,结果loss = nan

M λ b b = 2 π h c 2 λ 5 ⋅ 1 e h c λ k T − 1 = c 1 λ 5 ⋅ 1 e c 2 k T − 1 M_{\lambda b b}=\frac{2 \pi h c^{2}}{\lambda^{5}} \cdot \frac{1}{e^{\frac{h c}{\lambda k T}}-1}=\frac{c_{1}}{\lambda^{5}} \cdot \frac{1}{e^{\frac{c_{2}}{k T}}-1} Mλbb=λ52πhc2⋅eλkThc−11=λ5c1⋅ekTc2−11

# 3

# 普朗克公式

# x:波长

# T:温度

import torch

import random

from d2l import torch as d2l

#生成数据集

def synthetic_data(x, num_examples):

T = torch.normal(0, 1, (num_examples, len(x)))

u = c1 / ((x ** 5) * ((torch.exp(c2 / (x * T))) - 1));

u += torch.normal(0, 0.01, u.shape) # 噪声

return T, u.reshape((-1, 1)) # 标量转换为向量

c1 = 3.7414*10**8 # c1常量

c2 = 1.43879*10**4 # c2常量

true_x = torch.tensor([500.0])

features, labels = synthetic_data(true_x, 1000)

#读取数据集

def data_iter(batch_size, features, labels):

num_examples = len(features)

indices = list(range(num_examples))

random.shuffle(indices)

for i in range(0, num_examples, batch_size):

batch_indices = torch.tensor(indices[i: min(i + batch_size, num_examples)])

yield features[batch_indices],labels[batch_indices]

batch_size = 10

# 初始化权重

x = torch.normal(0,0.01,size = ((1,1)), requires_grad = True)

# 定义模型

def planck_formula(T, x):

return c1 / ((x ** 5) * ((torch.exp(c2 / (x * T))) - 1))

# 损失函数

def square_loss(u_hat, u):

return (u_hat - u.reshape(u_hat.shape)) ** 2/2

# 优化算法

def sgd(params, lr, batch_size):

with torch.no_grad():

for param in params:

param -= lr * param.grad/batch_size

param.grad.zero_()

lr = 0.001

num_epochs = 10

net = planck_formula

loss = square_loss

for epoch in range(num_epochs):

for T, u in data_iter(batch_size, features, labels):

l = loss(net(T, x), u)

l.sum().backward()

sgd([x], lr, batch_size)

with torch.no_grad():

train_l = loss(net(features, x), labels)

print(f'epoch {epoch + 1}, loss {float(train_l.mean()):f}')

print(f'r的估计误差: {true_x - x.reshape(true_x.shape)}')

- 二阶导数可能不存在,或无法得到显式的一阶导数。可以在求一阶导数时使用retain_graph=True参数保存计算图,进而求二阶导。

- y_hat和y的维度可能不相同,分别为行向量和列向量,维度(n,1)(n, )

- 学习率越大,损失函数下降越快。但学习率过大可能导致无法收敛。

learning rate = 0.03

epoch 1, loss 0.039505

epoch 2, loss 0.000141

epoch 3, loss 0.000048

learning rate = 0.05

epoch 1, loss 0.000576

epoch 2, loss 0.000052

epoch 3, loss 0.000052

learning rate = 0.5

epoch 1, loss 0.000057

epoch 2, loss 0.000053

epoch 3, loss 0.000051

- 设置了

indices[i: min(i + batch_size, num_examples)],样本个数不能被批量大小整除不会导致data_iter变化,不设置的话可能会报错。

线性回归的简洁实现

小结

- 我们可以使用PyTorch的高级API更简洁地实现模型。

- 在PyTorch中,

data模块提供了数据处理工具,nn模块定义了大量的神经网络层和常见损失函数。 - 我们可以通过

_结尾的方法将参数替换,从而初始化参数。

练习

- 如果将小批量的总损失替换为小批量损失的平均值,你需要如何更改学习率?

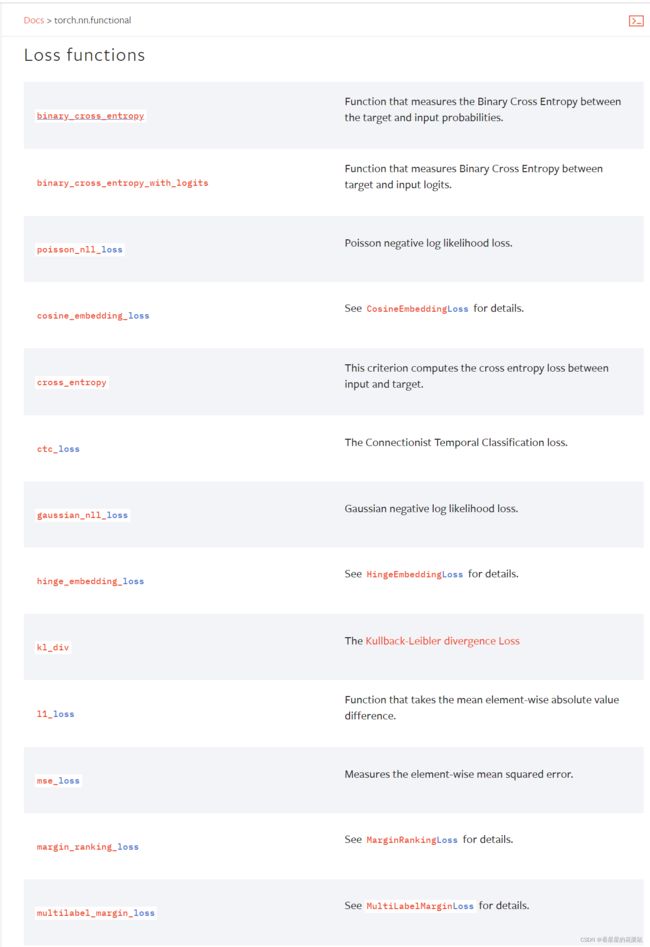

- 查看深度学习框架文档,它们提供了哪些损失函数和初始化方法?用Huber损失代替原损失,即

l ( y , y ′ ) = { ∣ y − y ′ ∣ − σ 2 if ∣ y − y ′ ∣ > σ 1 2 σ ( y − y ′ ) 2 其它情况 l(y,y') = \begin{cases}|y-y'| -\frac{\sigma}{2} & \text{ if } |y-y'| > \sigma \\ \frac{1}{2 \sigma} (y-y')^2 & \text{ 其它情况}\end{cases} l(y,y′)={∣y−y′∣−2σ2σ1(y−y′)2 if ∣y−y′∣>σ 其它情况 - 你如何访问线性回归的梯度?

1 . 将学习率缩小为之前的1/n

2 . 部分loss function

将损失函数更改为HuberLoss(仅1.9.0及以上版本的pytorch才有HuberLoss)

#%%

import numpy as np

import torch

from torch.utils import data

from d2l import torch as d2l

#%%

true_w = torch.tensor([2, -3.4])

true_b = 4.2

features, labels = d2l.synthetic_data(true_w, true_b, 1000)

def load_array(data_arrays, batch_size, is_train = True): #@save

'''pytorch数据迭代器'''

dataset = data.TensorDataset(*data_arrays) # 把输入的两类数据一一对应;*表示对list解开入参

return data.DataLoader(dataset, batch_size, shuffle = is_train) # 重新排序

batch_size = 10

data_iter = load_array((features, labels), batch_size) # 和手动实现中data_iter使用方法相同

#%%

# 构造迭代器并验证data_iter的效果

next(iter(data_iter)) # 获得第一个batch的数据

#%% 定义模型

from torch import nn

net = nn.Sequential(nn.Linear(2, 1)) # Linear中两个参数一个表示输入形状一个表示输出形状

# sequential相当于一个存放各层数据的list,单层时也可以只用Linear

#%% 初始化模型参数

# 使用net[0]选择神经网络中的第一层

net[0].weight.data.normal_(0, 0.01) # 正态分布

net[0].bias.data.fill_(0)

#%% 定义损失函数

loss = torch.nn.HuberLoss()

#%% 定义优化算法

trainer = torch.optim.SGD(net.parameters(), lr=0.03) # optim module中的SGD

#%% 训练

num_epochs = 3

for epoch in range(num_epochs):

for X, y in data_iter:

l = loss(net(X), y)

trainer.zero_grad()

l.backward()

trainer.step()

l = loss(net(features), labels)

print(f'epoch {epoch+1}, loss {l:f}')

#%% 查看误差

w = net[0].weight.data

print('w的估计误差:', true_w - w.reshape(true_w.shape))

b = net[0].bias.data

print('b的估计误差:', true_b - b)

3 . 线性回归的梯度

print(net[0].weight.grad)

print(net[0].bias.grad)

softmax回归

小结

- softmax运算获取一个向量并将其映射为概率。

- softmax回归适用于分类问题,它使用了softmax运算中输出类别的概率分布。

- 交叉熵是一个衡量两个概率分布之间差异的很好的度量,它测量给定模型编码数据所需的比特数。

练习

- 我们可以更深入地探讨指数族与softmax之间的联系。

- 计算softmax交叉熵损失 l ( y , y ^ ) l(\mathbf{y},\hat{\mathbf{y}}) l(y,y^)的二阶导数。

- 计算 s o f t m a x ( o ) \mathrm{softmax}(\mathbf{o}) softmax(o)给出的分布方差,并与上面计算的二阶导数匹配。

- 假设我们有三个类发生的概率相等,即概率向量是 ( 1 3 , 1 3 , 1 3 ) (\frac{1}{3}, \frac{1}{3}, \frac{1}{3}) (31,31,31)。

- 如果我们尝试为它设计二进制代码,有什么问题?

- 你能设计一个更好的代码吗?提示:如果我们尝试编码两个独立的观察结果会发生什么?如果我们联合编码 n n n个观测值怎么办?

- softmax是对上面介绍的映射的误称(虽然深度学习领域中很多人都使用这个名字)。真正的softmax被定义为 R e a l S o f t M a x ( a , b ) = log ( exp ( a ) + exp ( b ) ) \mathrm{RealSoftMax}(a, b) = \log (\exp(a) + \exp(b)) RealSoftMax(a,b)=log(exp(a)+exp(b))。

- 证明 R e a l S o f t M a x ( a , b ) > m a x ( a , b ) \mathrm{RealSoftMax}(a, b) > \mathrm{max}(a, b) RealSoftMax(a,b)>max(a,b)。

- 证明 λ − 1 R e a l S o f t M a x ( λ a , λ b ) > m a x ( a , b ) \lambda^{-1} \mathrm{RealSoftMax}(\lambda a, \lambda b) > \mathrm{max}(a, b) λ−1RealSoftMax(λa,λb)>max(a,b)成立,前提是 λ > 0 \lambda > 0 λ>0。

- 证明对于 λ → ∞ \lambda \to \infty λ→∞,有 λ − 1 R e a l S o f t M a x ( λ a , λ b ) → m a x ( a , b ) \lambda^{-1} \mathrm{RealSoftMax}(\lambda a, \lambda b) \to \mathrm{max}(a, b) λ−1RealSoftMax(λa,λb)→max(a,b)。

- soft-min会是什么样子?

- 将其扩展到两个以上的数字。

2 . 可以使用one-hot编码,需要的元素个数等于label数量

图像分类数据集

小结

- Fashion-MNIST是一个服装分类数据集,由10个类别的图像组成。我们将在后续章节中使用此数据集来评估各种分类算法。

- 我们将高度 h h h像素,宽度 w w w像素图像的形状记为 h × w h \times w h×w或( h h h, w w w)。

- 数据迭代器是获得更高性能的关键组件。依靠实现良好的数据迭代器,利用高性能计算来避免减慢训练过程。

练习

- 减少

batch_size(如减少到1)是否会影响读取性能? - 数据迭代器的性能非常重要。你认为当前的实现足够快吗?探索各种选择来改进它。

- 查阅框架的在线API文档。还有哪些其他数据集可用?

- 会影响,batch_size越小,读取越慢

- 可以调节进程数

- torchvision可用数据集:https://pytorch.org/vision/stable/datasets.html

softmax回归的从零开始实现

小结

- 借助softmax回归,我们可以训练多分类的模型。

- 训练softmax回归循环模型与训练线性回归模型非常相似:先读取数据,再定义模型和损失函数,然后使用优化算法训练模型。大多数常见的深度学习模型都有类似的训练过程。

练习

- 在本节中,我们直接实现了基于数学定义softmax运算的

softmax函数。这可能会导致什么问题?提示:尝试计算 exp ( 50 ) \exp(50) exp(50)的大小。 - 本节中的函数

cross_entropy是根据交叉熵损失函数的定义实现的。它可能有什么问题?提示:考虑对数的定义域。 - 你可以想到什么解决方案来解决上述两个问题?

- 返回概率最大的分类标签总是最优解吗?例如,医疗诊断场景下你会这样做吗?

- 假设我们使用softmax回归来预测下一个单词,可选取的单词数目过多可能会带来哪些问题?

-

数值稳定性问题,可能会导致上溢(overflow

)。如果数值过大, e x p ( o ) exp(o) exp(o)可能会大于数据类型允许的最大数字,这将使分母或分子变为inf(无穷大),最后得到的是0、inf或nan(不是数字)的 y ^ j \hat y_j y^j。 -

若最大的 y ^ \hat y y^的值极小,接近0,可能会导致 − l o g y ^ -log\hat y −logy^的值过大,超出数据类型的范围。或出现下溢(underflow)问题,由于精度限制, y ^ \hat y y^四舍五入为0,并且使得 log ( y ^ j ) \log(\hat y_j) log(y^j)的值为

-inf。

反向传播几步后,可能会出现nan结果。 -

使用LogSumExp技巧。

在继续softmax计算之前,先从所有 o k o_k ok中减去 max ( o k ) \max(o_k) max(ok)。

可以看到每个 o k o_k ok按常数进行的移动不会改变softmax的返回值:

y ^ j = exp ( o j − max ( o k ) ) exp ( max ( o k ) ) ∑ k exp ( o k − max ( o k ) ) exp ( max ( o k ) ) = exp ( o j − max ( o k ) ) ∑ k exp ( o k − max ( o k ) ) . \begin{aligned} \hat y_j & = \frac{\exp(o_j - \max(o_k))\exp(\max(o_k))}{\sum_k \exp(o_k - \max(o_k))\exp(\max(o_k))} \\ & = \frac{\exp(o_j - \max(o_k))}{\sum_k \exp(o_k - \max(o_k))}. \end{aligned} y^j=∑kexp(ok−max(ok))exp(max(ok))exp(oj−max(ok))exp(max(ok))=∑kexp(ok−max(ok))exp(oj−max(ok)).

之后,将softmax和交叉熵结合在一起,如下面的等式所示,我们避免计算 exp ( o j − max ( o k ) ) \exp(o_j - \max(o_k)) exp(oj−max(ok)),

而可以直接使用 o j − max ( o k ) o_j - \max(o_k) oj−max(ok),因为 log ( exp ( ⋅ ) ) \log(\exp(\cdot)) log(exp(⋅))被抵消了。

log ( y ^ j ) = log ( exp ( o j − max ( o k ) ) ∑ k exp ( o k − max ( o k ) ) ) = log ( exp ( o j − max ( o k ) ) ) − log ( ∑ k exp ( o k − max ( o k ) ) ) = o j − max ( o k ) − log ( ∑ k exp ( o k − max ( o k ) ) ) . \begin{aligned} \log{(\hat y_j)} & = \log\left( \frac{\exp(o_j - \max(o_k))}{\sum_k \exp(o_k - \max(o_k))}\right) \\ & = \log{(\exp(o_j - \max(o_k)))}-\log{\left( \sum_k \exp(o_k - \max(o_k)) \right)} \\ & = o_j - \max(o_k) -\log{\left( \sum_k \exp(o_k - \max(o_k)) \right)}. \end{aligned} log(y^j)=log(∑kexp(ok−max(ok))exp(oj−max(ok)))=log(exp(oj−max(ok)))−log(k∑exp(ok−max(ok)))=oj−max(ok)−log(k∑exp(ok−max(ok))).

-

返回概率最大的分类标签不总是最优解。一些情况下可能需要输出具体的概率值来辅助判断。

-

可能会造成单词之间概率差距不大,或概率过于接近0的情况。在计算过程中loss也会较高。

softmax回归的简洁实现

小结

- 使用深度学习框架的高级API,我们可以更简洁地实现softmax回归。

- 从计算的角度来看,实现softmax回归比较复杂。在许多情况下,深度学习框架在这些著名的技巧之外采取了额外的预防措施,来确保数值的稳定性。这使我们避免了在实践中从零开始编写模型时可能遇到的陷阱。

练习

- 尝试调整超参数,例如批量大小、迭代周期数和学习率,并查看结果。

- 增加迭代周期的数量。为什么测试精度会在一段时间后降低?我们怎么解决这个问题?

- 可能出现过拟合现象。可以观察一下精度的变化,若精度一直下降,则在合适的位置终止计算。