[PyTorch] Recognizing MNIST Digits with an MLP and a CNN

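Abstract

MNIST consists of 60,000 training images and 10,000 test images, each 28x28 pixels, and is often called the "Hello World" of computer vision. This article uses PyTorch to recognize the MNIST digits in two ways: with a multilayer perceptron (MLP) and with a convolutional neural network (CNN).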

1. Building a multilayer perceptron (MLP) in PyTorch to recognize MNIST

1.1 Import the required packages

import numpy as np
import torchvision
import torch
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

1.2 Load the dataset

img_size = 28 * 28
n_classes = 10
num_epoches = 6

# ToTensor scales pixels to [0, 1]; Normalize then maps them to [-1, 1]
data_tf = transforms.Compose([transforms.ToTensor(), transforms.Normalize([0.5], [0.5])])

train_dataset = datasets.MNIST(root="./data", train=True, transform=data_tf, download=True)
test_dataset = datasets.MNIST(root="./data", train=False, transform=data_tf)

train_loader = DataLoader(train_dataset, batch_size=64, shuffle=True)
# Shuffling is not needed for evaluation
test_loader = DataLoader(test_dataset, batch_size=64, shuffle=False)

1.3 Build the model

class MLP(torch.nn.Module):
    def __init__(self, in_dim, n_hidden_1, n_hidden_2, out_dim):
        super().__init__()
        # Two hidden blocks (Linear -> BatchNorm -> ReLU) followed by an output layer
        self.linear1 = torch.nn.Linear(in_dim, n_hidden_1)
        self.batchnormal1d1 = torch.nn.BatchNorm1d(n_hidden_1)
        self.relu1 = torch.nn.ReLU()
        self.linear2 = torch.nn.Linear(n_hidden_1, n_hidden_2)
        self.batchnormal1d2 = torch.nn.BatchNorm1d(n_hidden_2)
        self.relu2 = torch.nn.ReLU()
        self.linear3 = torch.nn.Linear(n_hidden_2, out_dim)

    def forward(self, x):
        x = self.linear1(x)
        x = self.batchnormal1d1(x)
        x = self.relu1(x)
        x = self.linear2(x)
        x = self.batchnormal1d2(x)
        x = self.relu2(x)
        # Return raw logits; CrossEntropyLoss applies log-softmax itself
        x = self.linear3(x)
        return x

model_MLP = MLP(img_size, 300, 100, n_classes)
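Before training, it can help to push a fake batch through the network and confirm the shapes. The sketch below restates the same 784 -> 300 -> 100 -> 10 layer stack with `nn.Sequential` so that it runs on its own; `mlp` and `dummy` are illustrative names, not part of the code above.

```python
import torch
from torch import nn

# Sequential re-statement of the same layer stack (784 -> 300 -> 100 -> 10)
mlp = nn.Sequential(
    nn.Linear(28 * 28, 300), nn.BatchNorm1d(300), nn.ReLU(),
    nn.Linear(300, 100), nn.BatchNorm1d(100), nn.ReLU(),
    nn.Linear(100, 10),
)

dummy = torch.randn(64, 1, 28, 28)       # fake batch shaped like MNIST images
flat = dummy.view(dummy.size(0), -1)     # flatten each image to 784 values
logits = mlp(flat)                       # one score per class for every image
pred = logits.argmax(dim=1)              # predicted class index per image

print(flat.shape, logits.shape, pred.shape)
```

The flattening step is why the training loop has to call `img.view(img.size(0), -1)` before feeding images to the MLP.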

1.4 Define the loss function and the optimizer

criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model_MLP.parameters(), lr=0.01)
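CrossEntropyLoss takes raw logits and integer class indices, which is why the model ends in a plain linear layer with no softmax. A small sketch on random numbers (the tensors here are made up for illustration) showing that it matches log-softmax followed by the negative log-likelihood loss:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)            # raw scores: 4 samples, 10 classes
target = torch.tensor([3, 0, 9, 1])    # class indices, not one-hot vectors

ce = torch.nn.CrossEntropyLoss()(logits, target)
manual = F.nll_loss(F.log_softmax(logits, dim=1), target)

print(torch.allclose(ce, manual))
```

Adding a softmax layer before this loss would therefore apply the normalization twice.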

1.5 Train the model

# Train on the GPU when one is available, otherwise on the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model_MLP = model_MLP.to(device)

for epoch in range(num_epoches):
    model_MLP.train()  # BatchNorm uses batch statistics while training
    loss_sum, cort_num_sum = 0.0, 0
    for img, label in train_loader:
        # Flatten each image from (1, 28, 28) to a 784-dimensional vector
        inputs = img.view(img.size(0), -1).to(device)
        target = label.to(device)

        outputs = model_MLP(inputs)
        loss = criterion(outputs, target)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # loss.item() gives a plain Python float, detached from the graph
        loss_sum += loss.item()
        pred = outputs.argmax(dim=1)
        cort_num_sum += (pred == target).sum().item()

    acc = cort_num_sum / len(train_dataset)
    print("After %d epoch, training loss is %.2f, correct_number is %d, accuracy is %.6f." % (epoch, loss_sum, cort_num_sum, acc))
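The core of the loop is the sequence zero_grad, backward, step. The toy sketch below repeats that sequence on a tiny linear classifier with random data (all names and sizes are made up for illustration), so the behavior can be observed in a fraction of a second:

```python
import torch

torch.manual_seed(0)
toy_model = torch.nn.Linear(4, 3)                     # 4 features, 3 classes
toy_opt = torch.optim.SGD(toy_model.parameters(), lr=0.1)
toy_crit = torch.nn.CrossEntropyLoss()

x = torch.randn(16, 4)                                # one fixed random batch
y = torch.randint(0, 3, (16,))                        # random class labels

losses = []
for _ in range(20):
    loss = toy_crit(toy_model(x), y)
    toy_opt.zero_grad()   # clear gradients left over from the previous step
    loss.backward()       # compute gradients of the loss w.r.t. the weights
    toy_opt.step()        # move the weights against the gradient
    losses.append(loss.item())

print(losses[0], losses[-1])  # the last loss should be lower than the first
```

Skipping `zero_grad()` would make gradients accumulate across iterations, which is rarely what a plain training loop wants.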

1.6 Evaluate the model

# Evaluate on the test set
model_MLP.eval()  # BatchNorm switches to its running statistics
device = next(model_MLP.parameters()).device  # run on the device the model lives on
eval_loss = 0.0
eval_acc = 0

with torch.no_grad():  # gradients are not needed for evaluation
    for img, label in test_loader:
        img = img.view(img.size(0), -1).to(device)
        label = label.to(device)

        out = model_MLP(img)
        loss = criterion(out, label)

        # criterion returns the batch mean, so weight it by the batch size
        eval_loss += loss.item() * label.size(0)
        pred = out.argmax(dim=1)
        eval_acc += (pred == label).sum().item()

print('Test loss: {:.6f}, ACC: {:.6f}'.format(eval_loss / len(test_dataset), eval_acc / len(test_dataset)))

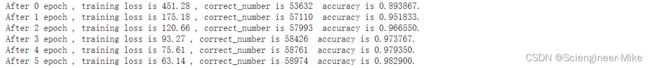
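Calling `eval()` before testing matters for this MLP because it contains BatchNorm1d layers, which behave differently in the two modes. A minimal sketch on a single standalone BatchNorm layer with random input (names and sizes are illustrative):

```python
import torch
from torch import nn

torch.manual_seed(0)
bn = nn.BatchNorm1d(3)
x = torch.randn(8, 3) * 5 + 2    # batch with non-zero mean and large variance

bn.train()
y_train = bn(x)                  # normalized with this batch's own statistics

bn.eval()
y_eval = bn(x)                   # normalized with the running estimates instead

print(y_train.mean(dim=0))                # close to 0 for every feature
print(torch.allclose(y_train, y_eval))    # expected to be False
```

In training mode the output depends on which other samples share the batch, so evaluation results would vary with batch composition if `eval()` were omitted.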

Test results:

2. Building a convolutional neural network (CNN) in PyTorch to recognize MNIST

2.1 Import the required packages

import torch
from torch.autograd import Variable
from torch import nn, optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms
import torch.nn.functional as F

2.2 Load the dataset

batch_size = 64
num_epoches = 6

data_tf = transforms.Compose([transforms.ToTensor(), transforms.Normalize([0.5], [0.5])])

train_dataset = datasets.MNIST(root="./data", train=True, transform=data_tf, download=True)
test_dataset = datasets.MNIST(root="./data", train=False, transform=data_tf)

train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)

2.3 Build the model

class CNN(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)
        self.pooling = torch.nn.MaxPool2d(2)
        self.fc = torch.nn.Linear(320, 10)

    def forward(self, x):
        # x arrives as (n, 1, 28, 28); unlike the MLP, it is not flattened up front
        batch_size = x.size(0)
        x = F.relu(self.pooling(self.conv1(x)))
        x = F.relu(self.pooling(self.conv2(x)))
        # Flatten (n, 20, 4, 4) to (n, 320); -1 is inferred as 320 here
        x = x.view(batch_size, -1)
        x = self.fc(x)
        return x

model = CNN()
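The 320 input features of the final linear layer follow from the layer sizes: a 5x5 convolution without padding shrinks each side by 4, and 2x2 max pooling halves it. The sketch below traces a fake batch through stand-alone copies of the same layers; ReLU is left out because it does not change shapes.

```python
import torch
from torch import nn

conv1 = nn.Conv2d(1, 10, kernel_size=5)
conv2 = nn.Conv2d(10, 20, kernel_size=5)
pool = nn.MaxPool2d(2)

x = torch.randn(2, 1, 28, 28)            # fake batch of two MNIST-sized images
shapes = [tuple(x.shape)]
for layer in (conv1, pool, conv2, pool):
    x = layer(x)
    shapes.append(tuple(x.shape))

flat = x.view(x.size(0), -1)             # 20 channels * 4 * 4 = 320 features
for s in shapes:
    print(s)
print(tuple(flat.shape))
```

The side length goes 28 -> 24 -> 12 -> 8 -> 4, so changing the kernel size or the input resolution means the 320 in `nn.Linear(320, 10)` has to change as well.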

2.4 Define the loss function and the optimizer

criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01)

2.5 Train the model

# Train on the GPU when one is available, otherwise on the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = model.to(device)

for epoch in range(num_epoches):
    model.train()
    loss_sum, cort_num_sum = 0.0, 0
    for img, label in train_loader:
        # The CNN consumes images as (n, 1, 28, 28), so no flattening here
        inputs = img.to(device)
        target = label.to(device)

        output = model(inputs)
        loss = criterion(output, target)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        loss_sum += loss.item()
        pred = output.argmax(dim=1)
        cort_num_sum += (pred == target).sum().item()

    acc = cort_num_sum / len(train_dataset)
    print("After %d epoch, training loss is %.2f, correct_number is %d, accuracy is %.6f." % (epoch, loss_sum, cort_num_sum, acc))

2.6 Evaluate the model

# Evaluate on the test set
model.eval()
device = next(model.parameters()).device  # run on the device the model lives on
eval_loss = 0.0
eval_acc = 0

with torch.no_grad():  # gradients are not needed for evaluation
    for img, label in test_loader:
        img = img.to(device)
        label = label.to(device)

        out = model(img)
        loss = criterion(out, label)

        # criterion returns the batch mean, so weight it by the batch size
        eval_loss += loss.item() * label.size(0)
        pred = out.argmax(dim=1)
        eval_acc += (pred == label).sum().item()

print('Test loss: {:.6f}, ACC: {:.6f}'.format(eval_loss / len(test_dataset), eval_acc / len(test_dataset)))

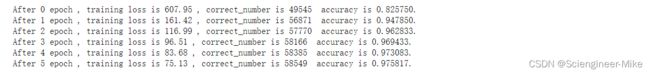

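Test results:

That completes the hands-on recognition of MNIST with both an MLP and a CNN. The goal is to give you a more intuitive feel for the differences between the two.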
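One difference that can be checked without any training is model size. The sketch below rebuilds both layer stacks with `nn.Sequential` (an equivalent re-statement for counting purposes, with illustrative names) and counts their trainable parameters:

```python
from torch import nn

def count_params(module):
    # Total number of trainable parameters in a module
    return sum(p.numel() for p in module.parameters() if p.requires_grad)

# Same layer sizes as the MLP in section 1 (784 -> 300 -> 100 -> 10)
mlp = nn.Sequential(
    nn.Linear(28 * 28, 300), nn.BatchNorm1d(300), nn.ReLU(),
    nn.Linear(300, 100), nn.BatchNorm1d(100), nn.ReLU(),
    nn.Linear(100, 10),
)

# Same layer sizes as the CNN in section 2
cnn = nn.Sequential(
    nn.Conv2d(1, 10, kernel_size=5), nn.MaxPool2d(2), nn.ReLU(),
    nn.Conv2d(10, 20, kernel_size=5), nn.MaxPool2d(2), nn.ReLU(),
    nn.Flatten(),
    nn.Linear(320, 10),
)

mlp_params = count_params(mlp)
cnn_params = count_params(cnn)
print(mlp_params, cnn_params)  # 267410 8490
```

The convolutional layers reuse the same small kernels at every image position, so this CNN needs far fewer weights than the fully connected MLP.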