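CIFAR10 Classification with a Convolutional Neural Network

Today's post is a small PyTorch project: building a convolutional neural network in PyTorch to classify the CIFAR10 dataset.

CIFAR10 consists of 60000 32x32 color images in 10 classes, with 6000 images per class. There are 50000 training images and 10000 test images. The dataset is divided into five training batches and one test batch, each with 10000 images. The test batch contains exactly 1000 randomly selected images from each class. The training batches contain the remaining images in random order, so some training batches may contain more images from one class than another. Between them, the training batches contain exactly 5000 images from each class.

The ten classes are: [airplane, automobile, bird, cat, deer, dog, frog, horse, ship, truck]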

------------------------------------------------------------------------------------------------------------

Let's get started.

First, the overall steps: Load data -> Build Model -> Train -> Test

1.Load data

As before, torchvision.datasets is used to download the CIFAR10 dataset directly.

transform = transforms.Compose(
    [transforms.ToTensor(),
     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

train_data = torchvision.datasets.CIFAR10(root='./CIFAR10data', train=True,
                                          download=True, transform=transform)
train_loader = torch.utils.data.DataLoader(train_data, batch_size=4,
                                           shuffle=True, num_workers=2)

test_data = torchvision.datasets.CIFAR10(root='./CIFAR10data', train=False,
                                         download=True, transform=transform)
test_loader = torch.utils.data.DataLoader(test_data, batch_size=4,
                                          shuffle=False, num_workers=2)

Here the image preprocessing is bundled directly into the transform. Two DataLoader arguments, shuffle and num_workers, deserve a closer look:

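shuffle: when True, the dataset is reshuffled at every epoch, so the samples come back in a different order each time. (There are apparently quite a few pitfalls here. I have not fallen into any of them myself yet, but it is only a matter of time; I will cover the shuffle pitfalls in detail when I get the chance.)

num_workers: the number of worker subprocesses the DataLoader uses to load data. When iteration starts, the DataLoader creates num_workers worker processes, the batch_sampler assigns each batch to a worker, and that worker loads its batch into RAM.

(1) A large num_workers: the upside is speed, because the next batches have usually been loaded already by the time the training loop asks for them; the downside is higher memory use and extra CPU load.

(2) num_workers set to 0: no worker processes are created, and every batch is loaded in the main process at the moment it is requested. Loading can no longer overlap with training, so iteration is slower.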
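To make the two arguments concrete, here is a minimal sketch on a toy dataset rather than CIFAR10. The ten-sample TensorDataset and the helper epoch_labels are made up for this illustration; num_workers is left at 0 so that everything runs in the main process.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Toy dataset (an assumption for illustration): ten samples whose label is their index.
features = torch.arange(10).float().unsqueeze(1)
targets = torch.arange(10)
dataset = TensorDataset(features, targets)


def epoch_labels(loader):
    """Collect the labels seen during one full pass over the loader, in order."""
    return [int(label) for _, labels in loader for label in labels]


# num_workers=0: every batch is loaded in the main process, no subprocesses.
ordered = DataLoader(dataset, batch_size=4, shuffle=False, num_workers=0)
shuffled = DataLoader(dataset, batch_size=4, shuffle=True, num_workers=0)

first_pass = epoch_labels(shuffled)
second_pass = epoch_labels(shuffled)

print(epoch_labels(ordered))  # always 0..9 in order
print(first_pass)             # a permutation of 0..9
print(second_pass)            # reshuffled again for the second pass
```

Each pass over the shuffled loader still visits every sample exactly once; only the order changes. With 10 samples and batch_size=4 the loader yields 3 batches, the last one holding the 2 leftover samples.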

2.Build Model

Define the convolutional neural network model:

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 5 * 5)  # flatten into a vector
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        # no ReLU on the last layer: CrossEntropyLoss expects raw scores (logits)
        x = self.fc3(x)
        return x

For Conv2d(), I referred to the CSDN blog post "nn.Conv2d卷积" by 落地生根.

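A 2D convolution operates on two-dimensional data such as images.

nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True)

Parameters:

in_channels: number of channels in the input, e.g. 3 for an RGB image;

out_channels: number of channels in the output, chosen as part of the model design;

kernel_size: size of the convolution kernel, either an int or a tuple; kernel_size=2 means a (2,2) kernel, and kernel_size=(2,3) means a non-square kernel of height 2 and width 3;

stride: step size of the kernel, default 1; like kernel_size it takes an int or a tuple: stride=2 means a step of 2 both vertically and horizontally, while stride=(2,3) means a step of 2 vertically and 3 horizontally;

padding: zero-padding added around the border of the input, default 0 (no padding)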
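These parameters determine the spatial size of each layer's output, which is where the 16*5*5 in fc1 comes from. The sketch below applies the output-size formula from the PyTorch documentation for Conv2d (MaxPool2d follows the same form) to the layers of Net; the helper name conv2d_out is made up for this example.

```python
def conv2d_out(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one spatial dimension for Conv2d / MaxPool2d."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


size = 32                                          # CIFAR10 images are 32x32
size = conv2d_out(size, kernel_size=5)             # conv1: 32 -> 28
size = conv2d_out(size, kernel_size=2, stride=2)   # pool:  28 -> 14
size = conv2d_out(size, kernel_size=5)             # conv2: 14 -> 10
size = conv2d_out(size, kernel_size=2, stride=2)   # pool:  10 -> 5

flat_features = 16 * size * size                   # 16 channels from conv2
print(size, flat_features)                         # 5 400
```

So after the second pooling step each image is a 16x5x5 tensor, and flattening it gives the 400 inputs that fc1 expects.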

3.Train

Train之前定义一下优化器和损失函数

net = Net()

criterion = nn.CrossEntropyLoss()

optimizer = optim.SGD(net.parameters(),lr = 0.001,momentum=0.9) #SGD(传入参数,学习率,动量)start Training~

for epoch in range(1):

running_loss = 0.0

# 0 用于指定索引起始值

for i, data in enumerate(train_loader, 0):

input, target = data

input, target = Variable(input), Variable(target)

optimizer.zero_grad()

output = net(input)

loss = criterion(output, target) # out 和target的交叉熵损失

loss.backward()

optimizer.step()

running_loss += loss.data

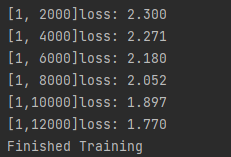

if i % 2000 == 1999: ## print every 2000 mini_batches,1999,because of index from 0 on

print('[%d,%5d]loss: %.3f' % (epoch + 1, i + 1, running_loss / 2000))

running_loss = 0.0

print('Finished Training')训练完毕:

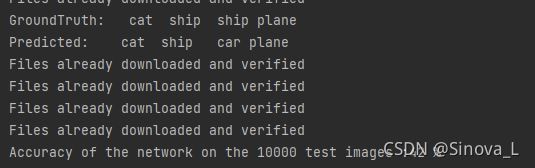
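To interpret the loss values printed during training, it helps to know what nn.CrossEntropyLoss computes: for each sample, the negative log of the softmax probability assigned to the correct class, averaged over the batch by default. The following is a pure-Python sketch for a single sample, not PyTorch's implementation; the function name cross_entropy and the example scores are made up for illustration.

```python
import math


def cross_entropy(logits, target):
    """-log(softmax(logits)[target]) for one sample, via a stable log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


# A network that scores all 10 classes equally is guessing at random.
uniform = cross_entropy([0.0] * 10, target=3)
# A network that strongly favors the correct class gets a much smaller loss.
confident = cross_entropy([0.0] * 9 + [5.0], target=9)

print(round(uniform, 3))    # 2.303, i.e. ln(10)
print(round(confident, 3))
```

A loss near 2.3 therefore means the network is doing no better than chance on 10 classes, and a running loss that falls below that value indicates that it is learning.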

4.Test

# classes is the tuple of class names defined in the complete code
dataiter = iter(test_loader)
images, labels = next(dataiter)
imshow(torchvision.utils.make_grid(images))
print('GroundTruth:', ' '.join('%5s' % classes[labels[j]] for j in range(4)))

with torch.no_grad():  # no gradients are needed for inference
    outputs = net(images)
_, pred = torch.max(outputs, 1)  # index of the highest score is the predicted class
print('Predicted: ', ' '.join('%5s' % classes[pred[j]] for j in range(4)))

correct = 0
total = 0
with torch.no_grad():
    for data in test_loader:
        images, labels = data
        outputs = net(images)
        _, pred = torch.max(outputs, 1)
        total += labels.size(0)
        correct += (pred == labels).sum().item()
print('Accuracy of the network on the 10000 test images :%d %%' % (100 * correct / total))

A helper function is also defined to display the images being recognized:

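def imshow(img):
    img = img / 2 + 0.5  # unnormalize
    npimg = img.numpy()
    # np.transpose: reorder (C, H, W) to (H, W, C), the layout matplotlib expects
    plt.imshow(np.transpose(npimg, (1, 2, 0)))
    plt.show()

Because the CIFAR10 images are only 32*32 pixels, they look quite blurry when displayed, but you can still roughly make out what they show.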
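The line img / 2 + 0.5 in imshow undoes the Normalize((0.5,0.5,0.5),(0.5,0.5,0.5)) step from the data loading. Normalize computes (x - mean) / std per channel, so with mean 0.5 and std 0.5 it maps pixel values from [0, 1] to [-1, 1]. A small sketch on single pixel values (the function names are made up for this example):

```python
def normalize(pixel, mean=0.5, std=0.5):
    """What transforms.Normalize does to each channel value: (x - mean) / std."""
    return (pixel - mean) / std


def unnormalize(value):
    """The inverse used in imshow: value / 2 + 0.5."""
    return value / 2 + 0.5


for pixel in (0.0, 0.25, 0.5, 1.0):
    z = normalize(pixel)
    print(pixel, '->', z, '->', unnormalize(z))
```

The darkest pixel 0.0 becomes -1.0 and the brightest pixel 1.0 stays 1.0; unnormalizing brings every value back into the [0, 1] range that plt.imshow expects for float images.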

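As you can see, the network does recognize some images correctly, but only a few samples are shown here, and the true accuracy is actually not very high.

Below is a per-class breakdown for the ten classes: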

class_correct = list(0. for i in range(10))
class_total = list(0. for i in range(10))
with torch.no_grad():
    for data in test_loader:
        images, labels = data
        outputs = net(images)
        _, pred = torch.max(outputs, 1)
        c = (pred == labels)  # boolean tensor, one entry per image in the batch
        for i in range(labels.size(0)):
            label = labels[i].item()
            class_correct[label] += c[i].item()
            class_total[label] += 1

for i in range(10):
    print('Accuracy of %5s : %2d %%' % (classes[i], 100 * class_correct[i] / class_total[i]))

The results are printed to the console.
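The per-class tally above updates the counters one sample at a time. As an alternative sketch (not what this post's code does), the same bookkeeping can be done with torch.bincount; the function name per_class_accuracy and the toy tensors are made up for this example.

```python
import torch


def per_class_accuracy(preds, labels, num_classes):
    """Fraction of correctly predicted samples for each class."""
    # How many samples of each class there are.
    totals = torch.bincount(labels, minlength=num_classes)
    # How many of those were predicted correctly.
    hits = torch.bincount(labels[preds == labels], minlength=num_classes)
    # clamp avoids dividing by zero for a class with no samples (its result is 0).
    return hits.float() / totals.clamp(min=1).float()


labels = torch.tensor([0, 1, 1, 2, 2, 2])
preds = torch.tensor([0, 1, 0, 2, 2, 1])
accuracy = per_class_accuracy(preds, labels, num_classes=3)
print(accuracy)  # class 0: 1/1, class 1: 1/2, class 2: 2/3
```

In a real evaluation the predictions and labels of all test batches would first be concatenated (for example with torch.cat) before calling the function once.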

The results differ from one training run to the next. How to properly improve the accuracy is something I still need to study!!

Finally, here is the complete code:

import torch
import torchvision
import torchvision.transforms as transforms
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
import matplotlib.pyplot as plt
import numpy as np

# Load the data and normalize it into the format the network expects
transform = transforms.Compose(
    [transforms.ToTensor(),
     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

# Download the data
train_data = torchvision.datasets.CIFAR10(root='./CIFAR10data', train=True,
                                          download=True, transform=transform)
train_loader = torch.utils.data.DataLoader(train_data, batch_size=4,
                                           shuffle=True, num_workers=2)

test_data = torchvision.datasets.CIFAR10(root='./CIFAR10data', train=False,
                                         download=True, transform=transform)
test_loader = torch.utils.data.DataLoader(test_data, batch_size=4,
                                          shuffle=False, num_workers=2)

classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')


def imshow(img):
    img = img / 2 + 0.5  # unnormalize
    npimg = img.numpy()
    # np.transpose: reorder (C, H, W) to (H, W, C), the layout matplotlib expects
    plt.imshow(np.transpose(npimg, (1, 2, 0)))
    plt.show()


# Define the convolutional neural network model
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 5 * 5)  # flatten into a vector
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        # no ReLU on the last layer: CrossEntropyLoss expects raw scores (logits)
        x = self.fc3(x)
        return x


net = Net()

# Define the loss function and the optimizer
criterion = nn.CrossEntropyLoss()
# SGD(parameters to optimize, learning rate, momentum)
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

# Train the network
if __name__ == '__main__':
    for epoch in range(1):
        running_loss = 0.0
        # the second argument, 0, is the starting value of the index i
        for i, data in enumerate(train_loader, 0):
            inputs, targets = data
            optimizer.zero_grad()  # clear the gradients from the previous step
            outputs = net(inputs)
            loss = criterion(outputs, targets)  # cross-entropy loss between outputs and targets
            loss.backward()
            optimizer.step()
            running_loss += loss.item()  # .item() extracts the loss as a Python float
            # print every 2000 mini-batches; 1999 because i starts from 0
            if i % 2000 == 1999:
                print('[%d,%5d]loss: %.3f' % (epoch + 1, i + 1, running_loss / 2000))
                running_loss = 0.0
    print('Finished Training')

    # Show one test batch with its ground truth and predictions
    dataiter = iter(test_loader)
    images, labels = next(dataiter)
    imshow(torchvision.utils.make_grid(images))
    print('GroundTruth:', ' '.join('%5s' % classes[labels[j]] for j in range(4)))

    with torch.no_grad():  # no gradients are needed for inference
        outputs = net(images)
    _, pred = torch.max(outputs, 1)  # index of the highest score is the predicted class
    print('Predicted: ', ' '.join('%5s' % classes[pred[j]] for j in range(4)))

    # Overall accuracy on the test set
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            outputs = net(images)
            _, pred = torch.max(outputs, 1)
            total += labels.size(0)
            correct += (pred == labels).sum().item()
    print('Accuracy of the network on the 10000 test images :%d %%' % (100 * correct / total))

    # Per-class accuracy
    class_correct = list(0. for i in range(10))
    class_total = list(0. for i in range(10))
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            outputs = net(images)
            _, pred = torch.max(outputs, 1)
            c = (pred == labels)  # boolean tensor, one entry per image in the batch
            for i in range(labels.size(0)):
                label = labels[i].item()
                class_correct[label] += c[i].item()
                class_total[label] += 1

    for i in range(10):
        print('Accuracy of %5s : %2d %%' % (classes[i], 100 * class_correct[i] / class_total[i]))

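This post does little more than paste the implementation code for the classification process. The explanation of convolutional neural networks and of the testing step is still thin, especially the explanation of how data flows through the Conv2d layers, and the final accuracy of the network is not high enough. There is a long road of learning ahead; this post feels light on substance, since it mostly just carries over project code.

Still, thank you for reading. If it helped you, leave a like before you go!

If you find any mistakes, please point them out right away and I will fix them as soon as possible!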