Pandas基础二重点 -----------分组聚合、agg函数、apply函数(可传参)

Pandas基础二 -----------分组聚合

- 一、分组 groupby

-

- 1.1 如何对二维数组分组查询

- 1.2 使用pd.cut分段

- 二、聚合

-

- 2.1 分组后直接聚合

- 2.2 agg()函数,可同时做多个聚合

- 2.3 ※※自定义函数,传入agg方法中使用

- 2.4 apply函数,自由度最高的,可传参

一、分组 groupby

1.1 如何对二维数组分组查询

import pandas as pd

import numpy as np

df1=pd.DataFrame({

'name':['lilei','rose','jack','haha','rose','jack'],

'expend':[100,200,300,500,800,100],

'year':[2019,2018,2019,2019,2017,2017],

'salary':[1000,2000,3000,3000,4000,500]

})

print(df1)

group_name=df1.groupby('name')

#分组后是对象

print(group_name,type(group_name))

#查看分组概览

print(group_name.groups)

#分组后数量统计

print(group_name.count())

name expend year salary

0 lilei 100 2019 1000

1 rose 200 2018 2000

2 jack 300 2019 3000

3 haha 500 2019 3000

4 rose 800 2017 4000

5 jack 100 2017 500

#分组后是对象

#查看分组概览

{‘haha’: Int64Index([3], dtype=‘int64’), ‘jack’: Int64Index([2, 5], dtype=‘int64’), ‘lilei’: Int64Index([0], dtype=‘int64’), ‘rose’: Int64Index([1, 4], dtype=‘int64’)}

#分组后数量统计

expend year salary

name

haha 1 1 1

jack 2 2 2

lilei 1 1 1

rose 2 2 2

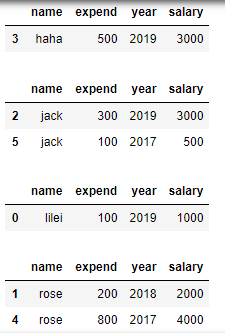

#遍历分组后的数据

for name,group in group_name:

display(group)

#按多列进行分组

group_two_name=df1.groupby(['name','year'])

print(group_two_name.groups)

1.2 使用pd.cut分段

- 先使用pd.cut对目标数据分段

- 按照分段后的数据进行分组

- 可使用交叉表

import pandas as pd

from pandas import Series,DataFrame

import numpy as np

import random

df2=pd.DataFrame({

'age':[20,31,41,66,67],

'sex':['M','M','M','F','F']

})

print(df2)

print("================================================")

#将数据按段分成不同范围

cut_age=pd.cut(df2['age'],bins=[19,40,65,100])

print(cut_age)

print("================================================")

#按照上述范围对数据分组

group_age=df2.groupby(cut_age).count()

print(group_age)

print("================================================")

#交叉表,统计分组后,‘sex’出现的频次

print(pd.crosstab(cut_age,df2['sex'],margins=True))

age sex

0 20 M

1 31 M

2 41 M

3 66 F

4 67 F

================================================

0 (19, 40]

1 (19, 40]

2 (40, 65]

3 (65, 100]

4 (65, 100]

Name: age, dtype: category

Categories (3, interval[int64]): [(19, 40] < (40, 65] < (65, 100]]

================================================

age sex

age

(19, 40] 2 2

(40, 65] 1 1

(65, 100] 2 2

================================================

sex F M All

age

(19, 40] 0 2 2

(40, 65] 0 1 1

(65, 100] 2 0 2

All 2 3 5

二、聚合

2.1 分组后直接聚合

分组后直接求和

取某列的结果:

- 可先取出对应列,后分组

- 也可先分组后取对应列

df3=pd.DataFrame({

'data1':np.random.randint(1,10,5),

'data2':np.random.randint(11,20,5),

'key1':list('aabba'),

'key2':list('xyyxy')

})

print(df3)

print("================================================")

#分组后直接聚合计算,不是数值的列(麻烦列)会被清除

print(df3.groupby('key1').sum())

print("================================================")

#只想要data1的数据,那么可以先取出对应列,后分组

print(df3['data1'].groupby(df3['key1']).sum())

#也可以先分组后取对应列。效率基本无差别

print(df3.groupby('key1')['data1'].sum())

data1 data2 key1 key2

0 1 17 a x

1 1 15 a y

2 7 18 b y

3 1 11 b x

4 8 11 a y

================================================

data1 data2

key1

a 10 43

b 8 29

================================================

key1

a 10

b 8

Name: data1, dtype: int32

key1

a 10

b 8

Name: data1, dtype: int32

1

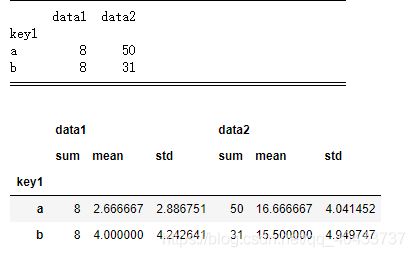

2.2 agg()函数,可同时做多个聚合

print(df3.groupby('key1').agg('sum'))

print("================================================")

display(df3.groupby('key1').agg(['sum','mean','std']))

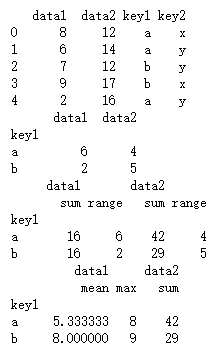

2.3 ※※自定义函数,传入agg方法中使用

日常可自定义聚合运算,传入agg()中调用

agg中使用元祖可给自定义函数起别名

注:只有pandas中函数作为参数传给agg,故函数不用加括号

import pandas as pd

from pandas import Series,DataFrame

import numpy as np

import random

df4=pd.DataFrame({

'data1':np.random.randint(1,10,5),

'data2':np.random.randint(11,20,5),

'key1':list('aabba'),

'key2':list('xyyxy')

})

print(df4)

def num_range(df):

"""

返回最大值与最小值的差值

"""

return df.max() - df.min()

print(df4.groupby('key1').agg(num_range))

#重命名自定义函数

print(df4.groupby('key1').agg(['sum',('range',num_range)]))

#agg中传入字典,key代表对应列,value代表对应列的聚合函数

data_map={

'data1':['mean','max'],

'data2':'sum'

}

print(df4.groupby('key1').agg(data_map))

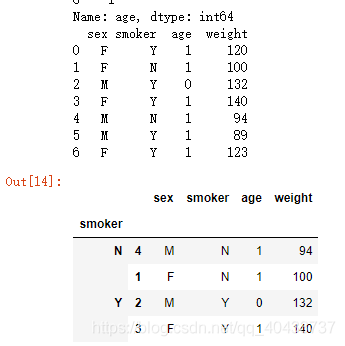

2.4 apply函数,自由度最高的,可传参

跟agg函数相比,apply可给函数添加参数

DataFrame.apply(func, axis=0, broadcast=False, raw=False, reduce=None, args=(), **kwds)

df1=pd.DataFrame({'sex':list('FFMFMMF'),'smoker':list('YNYYNYY'),'age':[21,30,17,37,40,18,26],'weight':[120,100,132,140,94,89,123]})

print(df1)

def bin_age(age):

if age>=18:

return 1

else:

return 0

#改变age列的值

print(df1['age'].apply(bin_age))

df1['age']=df1['age'].apply(bin_age)

print(df1)

#分别取出抽烟和不抽烟的体重前2

def weight_top(self,col,n=5):

return self.sort_values(by=col)[-n:]

df1.groupby('smoker').apply(weight_top,col='weight',n=2)

#另一种写法

#df1.groupby('smoker').apply(weight_top,args=('weight',2))