Introduction

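Goal of this post: explain what Envoy's connection-control parameters do, and the detailed logic under edge-case failure conditions. The aim is to reduce service-access failures caused by connection errors and raise the request success rate.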

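Recently, while tracking down an occasional connection reset on an Istio Gateway in production, I dug into how Envoy and the kernel handle closing a socket. This covers Envoy's connection-management parameters as well as some finer points of Linux network programming. I'm writing it down for future reference.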

Original post: https://blog.mygraphql.com/zh/posts/cloud/envoy/connection-life/

Prelude

About the cover: Silicon Valley

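Silicon Valley is an American comedy television series created by Mike Judge, John Altschuler and Dave Krinsky. It premiered on HBO on April 6, 2014 and ended on December 8, 2019, running for 53 episodes. The series parodies the culture of the Silicon Valley tech industry, focusing on Richard Hendricks, a programmer who founds a startup called Pied Piper, and chronicles his struggle to keep the company going against competition from much larger players. The series was praised for its writing and humor, and received numerous award nominations, including five consecutive Primetime Emmy nominations for Outstanding Comedy Series.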

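I watched the show in 2018. My English was limited back then, and about the only word I could make out was F***. Still, through the screen you could feel how a group of passionate founders each brought their own skills to bear on one challenge after another. In a way, it fulfilled a wish I couldn't realize in the real world.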

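A classic scene in the show involves a toy ball that, when tossed into the air, randomly turns red or blue. Whoever gets blue wins. It's called the Switch Pitch: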

Based on a patented ‘inside-out’ mechanism, this lightweight ball changes colors when it is flipped in the air. The Switch Pitch entered the market in 2001 and has been in continuous production since then. The toy won the Oppenheim Platinum Award for Design and has been featured numerous times on HBO’s Silicon Valley.

Does a technical post really need such a long lead-in? Yes: this one is long and rather dry, a true TL;DR.

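All network-heavy applications, Envoy included, have moments when they are playing with a random Switch Pitch ball. The randomness can come from an unusually slow peer, an unusually small network MTU, an unusually large HTTP body, an unusually long-lived HTTP keepalive connection, or even a non-compliant HTTP client.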

Envoy connection lifecycle management

Excerpted from my own write-up: https://istio-insider.mygraphql.com/zh_CN/latest/ch2-envoy/connection-life/connection-life.html

Decoupling upstream and downstream connections

The HTTP/1.1 specification has this design principle:

An HTTP proxy is an L7 proxy, and its behavior should be kept separate from the L3/L4 connection lifecycle.

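So headers from downstream such as Connection: Close and Connection: Keepalive are not forwarded upstream by Envoy. The downstream connection's lifecycle does follow whatever Connection: xyz instructs, of course. But the upstream connection's lifecycle is not affected by the downstream connection's lifecycle. In other words, these are two independently managed connection lifecycles.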

Github Issue: HTTP filter before and after evaluation of Connection: Close header sent by upstream #15788 explains this:

This doesn't make sense in the context of Envoy, where downstream and upstream are decoupled and can use different protocols. I'm still not completely understanding the actual problem you are trying to solve?

Connection timeout configuration parameters

Figure: Envoy connection timeout timeline

idle_timeout

(Duration) The idle timeout for connections. The idle timeout is defined as the period in which there are no active requests. When the idle timeout is reached the connection will be closed. If the connection is an HTTP/2 downstream connection a drain sequence will occur prior to closing the connection, see drain_timeout. Note that request based timeouts mean that HTTP/2 PINGs will not keep the connection alive. If not specified, this defaults to 1 hour. To disable idle timeouts explicitly set this to 0.

Warning

Disabling this timeout has a highly likelihood of yielding connection leaks due to lost TCP FIN packets, etc.

If the overload action “envoy.overload_actions.reduce_timeouts” is configured, this timeout is scaled for downstream connections according to the value for HTTP_DOWNSTREAM_CONNECTION_IDLE.

max_connection_duration

(Duration) The maximum duration of a connection. The duration is defined as a period since a connection was established. If not set, there is no max duration. When max_connection_duration is reached and if there are no active streams, the connection will be closed. If the connection is a downstream connection and there are any active streams, the drain sequence will kick-in, and the connection will be force-closed after the drain period. See drain_timeout.

Github Issue: http: Allow upper bounding lifetime of downstream connections #8302

Github PR: upstream: support max connection duration for upstream HTTP connections #17932

Github Issue: Forward Connection:Close header to downstream#14910

For HTTP/1, Envoy will send a Connection: close header after max_connection_duration if another request comes in. If not, after some period of time, it will just close the connection. https://github.com/envoyproxy...

Note that max_requests_per_connection isn't (yet) implemented/supported for downstream connections. For HTTP/1, Envoy will send a Connection: close header after max_connection_duration (author's note: and before drain_timeout) if another request comes in. If not, after some period of time, it will just close the connection. I don't know what your downstream LB is going to do, but note that according to the spec, the Connection header is hop-by-hop for HTTP proxies.

max_requests_per_connection

(UInt32Value) Optional maximum requests for both upstream and downstream connections. If not specified, there is no limit. Setting this parameter to 1 will effectively disable keep alive. For HTTP/2 and HTTP/3, due to concurrent stream processing, the limit is approximate.

Github Issue: Forward Connection:Close header to downstream#14910

We are having this same issue when using istio (istio/istio#32516). We are migrating to use istio with envoy sidecars frontend be an AWS ELB. We see that connections from ELB -> envoy stay open even when our application is sending Connection: Close. max_connection_duration works but does not seem to be the best option. Our applications are smart enough to know when they are overloaded from a client and send Connection: Close to shard load. I tried writing an envoy filter to get around this but the filter gets applied before the stripping. Did anyone discover a way to forward the connection close header?

drain_timeout - for downstream only

Envoy Doc:

(Duration) The time that Envoy will wait between sending an HTTP/2 “shutdown notification” (GOAWAY frame with max stream ID) and a final GOAWAY frame. This is used so that Envoy provides a grace period for new streams that race with the final GOAWAY frame. During this grace period, Envoy will continue to accept new streams.

After the grace period, a final GOAWAY frame is sent and Envoy will start refusing new streams. Draining occurs both when:

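- a connection hits the idle timeout

  - (Author's note) That is, once a connection reaches idle_timeout or max_connection_duration, it enters the draining state and starts the drain_timeout timer. For HTTP/1.1, while in the draining state, Envoy adds a Connection: close header to the response of any request that arrives from downstream.
  - So a connection enters the draining state and starts the drain_timeout timer only after it reaches idle_timeout or max_connection_duration.

- or during general server draining.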

The default grace period is 5000 milliseconds (5 seconds) if this option is not specified.

https://www.envoyproxy.io/docs/envoy/latest/intro/arch_overview/operations/draining

By default, the HTTP connection manager filter will add “Connection: close” to HTTP1 requests (author's note: via the HTTP response), send HTTP2 GOAWAY, and terminate connections on request completion (after the delayed close period).

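I used to think draining was triggered only when Envoy was shutting down. It turns out that any planned connection close (a connection reaching idle_timeout or max_connection_duration) goes through the drain process.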
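To make the interplay of these timers concrete, here is a minimal C sketch of the rules as described above, for a single HTTP/1.1 downstream connection. The type and function names (conn_limits, conn_state, evaluate_conn) are hypothetical, and the model deliberately leaves out HTTP/2 GOAWAY handling and server-wide draining. It is a reading aid under those assumptions, not Envoy's implementation.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical, simplified model of one HTTP/1.1 downstream connection.
 * Names are illustrative; this is not Envoy source code. */
typedef struct {
    uint64_t idle_timeout_ms;            /* 0 disables the idle timeout */
    uint64_t max_connection_duration_ms; /* 0 means no max duration */
    uint64_t drain_timeout_ms;           /* grace period once draining has started */
} conn_limits;

typedef struct {
    uint64_t established_ms;   /* when the connection was established */
    uint64_t idle_since_ms;    /* when the last active request finished */
    uint32_t active_streams;   /* requests currently in flight */
    bool draining;             /* set once the drain sequence has kicked in */
    uint64_t drain_started_ms;
} conn_state;

typedef enum { CONN_KEEP, CONN_DRAIN, CONN_CLOSE } conn_action;

conn_action evaluate_conn(const conn_limits *lim, conn_state *st, uint64_t now_ms) {
    if (st->draining) {
        /* Close once in-flight requests finish, or force-close after drain_timeout. */
        if (st->active_streams == 0 || now_ms - st->drain_started_ms >= lim->drain_timeout_ms)
            return CONN_CLOSE;
        return CONN_DRAIN; /* HTTP/1.1: responses now carry "Connection: close" */
    }
    /* idle_timeout only runs while there are no active requests. */
    if (lim->idle_timeout_ms != 0 && st->active_streams == 0 &&
        now_ms - st->idle_since_ms >= lim->idle_timeout_ms)
        return CONN_CLOSE;
    /* max_connection_duration is measured from connection establishment. */
    if (lim->max_connection_duration_ms != 0 &&
        now_ms - st->established_ms >= lim->max_connection_duration_ms) {
        if (st->active_streams == 0)
            return CONN_CLOSE;
        st->draining = true;
        st->drain_started_ms = now_ms;
        return CONN_DRAIN;
    }
    return CONN_KEEP;
}
```

For example, with idle_timeout = 60 s, max_connection_duration = 300 s and drain_timeout = 5 s, a connection that is still busy at the 300 s mark is drained rather than cut, and is force-closed 5 s later at the latest.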

delayed_close_timeout - for downstream only

(Duration) The delayed close timeout is for downstream connections managed by the HTTP connection manager. It is defined as a grace period after connection close processing has been locally initiated during which Envoy will wait for the peer to close (i.e., a TCP FIN/RST is received by Envoy from the downstream connection) prior to Envoy closing the socket associated with that connection.

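In other words, in some scenarios Envoy writes back the HTTP response and wants to close the connection before it has fully read the HTTP request. This is known as the server closing the connection prematurely (Server Prematurely/Early Closes Connection). At that point, a few situations are possible: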

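- downstream is still in the middle of sending the HTTP request (socket write).

- or Envoy's kernel still has data in the socket recv buffer that Envoy's user space has not read. Typically, part of a Content-Length-sized body is still sitting in the kernel socket recv buffer and has not been fully loaded into Envoy's user space.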

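In both cases, if Envoy calls close(fd) to close the connection, downstream may receive an RST from Envoy's kernel. Downstream may then treat the connection as broken without ever reading the HTTP response from the socket, and report an error such as "Connection reset by peer" to the layer above.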

For details, see the race-condition section below (connection-life-race).

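To mitigate this, Envoy provides a setting that delays closing the connection. The idea is to wait for downstream to finish its socket write, so that all data in the kernel socket recv buffer gets loaded into user space, and only then call close(fd).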

NOTE: This timeout is enforced even when the socket associated with the downstream connection is pending a flush of the write buffer. However, any progress made writing data to the socket will restart the timer associated with this timeout. This means that the total grace period for a socket in this state will be <total_time_waiting_for_write_buffer_flushes>+<delayed_close_timeout>.

In other words, every successful socket write resets this timer.

Delaying Envoy’s connection close and giving the peer the opportunity to initiate the close sequence mitigates a race condition that exists when downstream clients do not drain/process data in a connection’s receive buffer after a remote close has been detected via a socket write(). (That is, it mitigates the case where downstream, after a failed socket write, never reads from the socket to get the response.)

This race leads to such clients failing to process the response code sent by Envoy, which could result in erroneous downstream processing.

If the timeout triggers, Envoy will close the connection’s socket.

The default timeout is 1000 ms if this option is not specified.

Note:

To be useful in avoiding the race condition described above, this timeout must be set to at least <max round trip time expected between clients and Envoy>+<100ms to account for a reasonable “worst” case processing time for a full iteration of Envoy’s event loop>.

Warning:

A value of 0 will completely disable delayed close processing. When disabled, the downstream connection’s socket will be closed immediately after the write flush is completed or will never close if the write flush does not complete.
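The timer rules in the quoted documentation (restart on any write progress, 0 disables delayed close entirely) can be modeled in a few lines. This is a hypothetical sketch: delayed_close, dc_start, dc_on_write_progress and dc_should_close are illustrative names, not Envoy internals, and time is passed in explicitly instead of coming from an event loop.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model of the delayed-close timer; not Envoy source code. */
typedef struct {
    uint64_t timeout_ms;  /* delayed_close_timeout; 0 disables delayed close */
    uint64_t deadline_ms; /* when Envoy stops waiting for the peer to close */
    bool write_pending;   /* response bytes still waiting to be flushed */
    bool peer_closed;     /* FIN/RST already received from downstream */
} delayed_close;

/* Close processing has been initiated locally at now_ms. */
void dc_start(delayed_close *dc, uint64_t timeout_ms, uint64_t now_ms, bool write_pending) {
    dc->timeout_ms = timeout_ms;
    dc->deadline_ms = now_ms + timeout_ms;
    dc->write_pending = write_pending;
    dc->peer_closed = false;
}

/* Any progress writing to the socket restarts the timer. */
void dc_on_write_progress(delayed_close *dc, uint64_t now_ms, bool fully_flushed) {
    dc->deadline_ms = now_ms + dc->timeout_ms;
    if (fully_flushed)
        dc->write_pending = false;
}

/* True when the socket should be closed at now_ms. */
bool dc_should_close(const delayed_close *dc, uint64_t now_ms) {
    if (dc->timeout_ms == 0)
        return !dc->write_pending; /* disabled: close once flushed, never if the flush stalls */
    if (dc->peer_closed)
        return true;               /* the peer started the close sequence: no race left */
    return now_ms >= dc->deadline_ms;
}
```

Because every write restarts the deadline, a slow client that keeps draining the response keeps the socket open, which is exactly the "flush time plus delayed_close_timeout" total described in the NOTE above.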

Note that, to avoid hurting performance, delayed_close_timeout does not take effect in many cases:

Github PR: http: reduce delay-close issues for HTTP/1.1 and below #19863

Skipping delay close for:

- HTTP/1.0 framed by connection close (as it simply reduces time to end-framing)

- as well as HTTP/1.1 if the request is fully read (so there's no FIN-RST race).

Addresses the Envoy-specific parts of #19821

Runtime guard: envoy.reloadable_features.skip_delay_close

The change also appears in the Envoy 1.22.0 release notes:

http: avoiding delay-close for:

- HTTP/1.0 responses framed by connection: close
- as well as HTTP/1.1 if the request is fully read.

This means for responses to such requests, the FIN will be sent immediately after the response. This behavior can be temporarily reverted by setting envoy.reloadable_features.skip_delay_close to false. If clients are seen to be receiving sporadic partial responses and flipping this flag fixes it, please notify the project immediately.
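The skip rules from PR #19863 and the 1.22.0 release note boil down to a small decision function. The sketch below is hypothetical: should_delay_close and its parameters are not Envoy identifiers, and the boolean skip_delay_close_enabled merely stands in for the runtime guard envoy.reloadable_features.skip_delay_close.

```c
#include <stdbool.h>

typedef enum { HTTP_1_0, HTTP_1_1 } http1_version;

/* Hypothetical decision helper mirroring the Envoy 1.22.0 release note; not Envoy code.
 * skip_delay_close_enabled stands in for envoy.reloadable_features.skip_delay_close. */
bool should_delay_close(bool skip_delay_close_enabled, http1_version version,
                        bool response_framed_by_connection_close, bool request_fully_read) {
    if (!skip_delay_close_enabled)
        return true;  /* flag set to false: previous behavior, delay the close */
    if (version == HTTP_1_0 && response_framed_by_connection_close)
        return false; /* the FIN ends the body; delaying it only slows the client down */
    if (version == HTTP_1_1 && request_fully_read)
        return false; /* nothing left for the client to upload: no FIN-RST race */
    return true;
}
```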

Race conditions after Envoy closes a connection

Excerpted from my own write-up: https://istio-insider.mygraphql.com/zh_CN/latest/ch2-envoy/connection-life/connection-life-race.html

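What follows relies on some low-level, less commonly known socket details, such as the edge states and error handling involved in closing a socket. If you're not familiar with them, I suggest first reading my article "Critical states and error logic of socket close/shutdown" in Mark’s DevOps 雜碎.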

Envoy and downstream/upstream connection state getting out of sync

Most of the cases below are low-probability race conditions. But under heavy traffic, even a tiny probability will eventually be hit. Design for failure is part of a programmer's job.

Downstream sends a request on a connection that Envoy is closing

Github Issue: 502 on our ALB when traffic rate drops#13388

Fundamentally, the problem is that ALB is reusing connections that Envoy is closing. This is an inherent race condition with HTTP/1.1.

You need to configure the ALB max connection/idle timeout to be < any envoy timeout. To have no race conditions, the ALB needs to support max_connection_duration and have that be less than Envoy's max connection duration. There is no way to fix this with Envoy.

In essence:

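- Envoy calls close(fd) to close the socket, which also closes the fd. When close(fd) is called:
  - if the kernel socket recv buffer holds data that has not yet been loaded into user space, the kernel sends RST to downstream, because that data has already been TCP-ACKed but the application threw it away;
  - otherwise, the kernel sends FIN to downstream.

- Because the fd is closed, any TCP data packet the kernel still receives on this connection is dropped and answered with RST.

- Envoy has sent FIN, and the Envoy-side kernel socket state moves to FIN_WAIT_1 or FIN_WAIT_2.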

On the downstream side, there are two possibilities:

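- The socket in the downstream kernel has already been moved to CLOSE_WAIT by the FIN from Envoy, but the downstream program (user space) hasn't caught up (it hasn't noticed the CLOSE_WAIT state).

- The downstream kernel hasn't received the FIN yet, due to network latency or similar.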

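So the downstream program reuses this socket and sends an HTTP request (assume it is split into several IP packets). Either way, when one of those packets reaches Envoy's kernel, Envoy's kernel replies with RST. On receiving the RST, the downstream kernel closes its socket too, so from some socket write onward every write fails, with an error along the lines of "Upstream connection reset". Note that socket write() is asynchronous: it returns without waiting for the peer's ACK.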

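- One possibility is that the failure shows up at some write(). This is more typical of HTTP client libraries that use keepalive, or of an HTTP body much larger than the socket send buffer that goes out as many IP packets.

- The other possibility is that the failure only shows up at close(), when the ACKs are waited for. This is more typical of HTTP client libraries that don't use keepalive, or of the last request on a keepalive connection.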

At the HTTP level, two scenarios can lead to this problem:

The server closes the connection prematurely (Server Prematurely/Early Closes Connection).

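Downstream writes the HTTP header and then writes the HTTP body. However, before it has finished reading the HTTP body, Envoy has already written the response and called close(fd) on the socket. This is what "the server closes the connection prematurely" means. Don't assume Envoy never writes a response and closes the socket before it has fully read the request. There are at least a few ways this happens:

- The header alone is enough to decide that a request is invalid, so usually a 4xx/5xx status code is returned.

- The HTTP request body exceeds Envoy's maximum limit, max_request_bytes.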

In this case, two things can happen:

- Downstream's socket may be in CLOSE_WAIT, a state in which it can still write(). But when Envoy's kernel receives this HTTP body, close(fd) has already been executed and the socket's fd is closed, so the kernel drops the body and replies RST to the peer (with the fd closed, no process can ever read that data). Poor downstream then reports an error like "Connection reset by peer".

- When Envoy calls close(fd), the kernel finds that user space hasn't fully consumed the socket buffer. In this case the kernel sends RST to downstream. In the end, poor downstream reports an error like "Connection reset by peer" when it tries write(fd) or read(fd). See: Github Issue: http: not proxying 413 correctly #2929

+----------------+     +-----------------+
|Listner A (8000)|+--->|Listener B (8080)|+----> (dummy backend)
+----------------+     +-----------------+

This issue is happening, because Envoy acting as a server (i.e. listener B in @lizan's example) closes downstream connection with pending (unread) data, which results in TCP RST packet being sent downstream.

Depending on the timing, downstream (i.e. listener A in @lizan's example) might be able to receive and proxy complete HTTP response before receiving TCP RST packet (which erases low-level TCP buffers), in which case client will receive response sent by upstream (413 Request Body Too Large in this case, but this issue is not limited to that response code), otherwise client will receive 503 Service Unavailable response generated by listener A (which actually isn't the most appropriate response code in this case, but that's a separate issue).

The common solution for this problem is to half-close downstream connection using ::shutdown(fd_, SHUT_WR) and then read downstream until EOF (to confirm that the other side received complete HTTP response and closed connection) or short timeout.

A practical way to reduce this race condition is to delay closing the socket. Envoy already has a setting for this: delayed_close_timeout.

Downstream doesn't notice that Envoy has already closed the HTTP keepalive connection, and reuses it.

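This is the keepalive connection reuse case mentioned above. Envoy has already called close(fd), moving the socket to FIN_WAIT_1/FIN_WAIT_2, and has sent FIN. But downstream either hasn't received it, or has received it without the application noticing, and meanwhile reuses this HTTP keepalive connection to send an HTTP request. At the TCP protocol level this is a half-closed connection, and the side that hasn't closed is indeed allowed to send data to its peer. But the kernel on the side that called close(fd) (Envoy) drops the incoming packets and replies RST to the peer (since the fd is closed, no process can ever read that data). Poor downstream then reports an error like "Connection reset by peer".

- A practical way to reduce this race: configure the downstream peer with a shorter timeout than Envoy's, so that it closes the connection first.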

Mitigations in Envoy's implementation

Mitigating premature server close (Server Prematurely/Early Closes Connection)

Github Issue: http: not proxying 413 correctly #2929

In the case envoy is proxying large HTTP request, even upstream returns 413, the client of proxy is getting 503.

Github PR: network: delayed conn close #4382 added the delayed_close_timeout setting:

Mitigate client read/close race issues on downstream HTTP connections by adding a new connection close type 'FlushWriteAndDelay'. This new close type flushes the write buffer on a connection but does not immediately close after emptying the buffer (unlike ConnectionCloseType::FlushWrite).

A timer has been added to track delayed closes for both 'FlushWrite' and 'FlushWriteAndDelay'. Upon triggering, the socket will be closed and the connection will be cleaned up.

Delayed close processing can be disabled by setting the newly added HCM 'delayed_close_timeout' config option to 0.

Risk Level: Medium (changes common case behavior for closing of downstream HTTP connections)

Testing: Unit tests and integration tests added.

But while the PR above mitigated the problem, it also hurt performance:

Github Issue: HTTP/1.0 performance issues #19821

I was about to say it's probably delay-close related.

So HTTP in general can frame the response with one of three ways: content length, chunked encoding, or frame-by-connection-close.

If you don't have an explicit content length, HTTP/1.1 will chunk, but HTTP/1.0 can only frame by connection close (FIN).

Meanwhile, there's another problem which is that if a client is sending data, and the request has not been completely read, a proxy responds with an error and closes the connection, many clients will get a TCP RST (due to uploading after FIN (close(fd))) and not actually read the response. That race is avoided with delayed_close_timeout.

It sounds like Envoy could do better detecting if a request is complete, and if so, framing with immediate close and I can pick that up. In the meantime if there's any way to have your backend set a content length that should work around the problem, or you can lower delay close in the interim.

So another fix was needed:

Github PR: http: reduce delay-close issues for HTTP/1.1 and below #19863

Skipping delay close for:

- HTTP/1.0 framed by connection close (as it simply reduces time to end-framing)

- as well as HTTP/1.1 if the request is fully read (so there's no FIN-RST race).

Addresses the Envoy-specific parts of #19821

Runtime guard: envoy.reloadable_features.skip_delay_close

The change also appears in the Envoy 1.22.0 release notes. Note that, to avoid hurting performance, delayed_close_timeout does not take effect in many cases:

http: avoiding delay-close for:

- HTTP/1.0 responses framed by connection: close
- as well as HTTP/1.1 if the request is fully read.

This means for responses to such requests, the FIN will be sent immediately after the response. This behavior can be temporarily reverted by setting envoy.reloadable_features.skip_delay_close to false. If clients are seen to be receiving sporadic partial responses and flipping this flag fixes it, please notify the project immediately.

Envoy sends a request on an upstream connection that upstream has already closed

Github Issue: Envoy (re)uses connection after receiving FIN from upstream #6815

With Envoy serving as HTTP/1.1 proxy, sometimes Envoy tries to reuse a connection even after receiving FIN from upstream. In production I saw this issue even with couple of seconds from FIN to next request, and Envoy never returned FIN ACK (just FIN from upstream to envoy, then PUSH with new HTTP request from Envoy to upstream). Then Envoy returns 503 UC even though upstream is up and operational.

Istio: 503's with UC's and TCP Fun Times

A classic sequence diagram (from https://medium.com/@phylake/why-idle-timeouts-matter-1b3f7d4469fe):

The Reverse Proxy in the diagram can be read as Envoy.

In essence:

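- The upstream peer calls close(fd) to close the socket. From then on, any data its kernel still receives on this TCP connection is dropped and answered with RST.

- The upstream peer has sent FIN, and the upstream socket state moves to FIN_WAIT_1 or FIN_WAIT_2.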

On the Envoy side, there are two possibilities:

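- The socket in Envoy's kernel has already been moved to CLOSE_WAIT by the FIN from the peer, but the Envoy program (user space) hasn't caught up.

- Envoy's kernel hasn't received the FIN yet, due to network latency or similar.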

But the Envoy program reuses this socket and sends (write(fd)) an HTTP request (assume it is split into several IP packets).

Again, there are two possibilities:

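- When one of the IP packets reaches the upstream peer, upstream replies with RST. Envoy's subsequent socket writes may then fail, with an error along the lines of "Upstream connection reset".

- Because socket write goes through a send buffer and is asynchronous, Envoy may only find out that the socket was closed in the epoll event cycle after the RST arrives, when an EV_CLOSED event fires. The error again looks like "Upstream connection reset".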
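The "first write succeeds, a later one fails" behavior can be reproduced over loopback on Linux. In the sketch below, reuse_after_peer_close is a hypothetical test helper: the accepted socket plays the upstream that closes an idle keepalive connection, and the client socket plays Envoy reusing it without noticing the FIN. The exact errno (EPIPE or ECONNRESET) depends on the TCP state when the RST arrives, so both are accepted; the 100 ms sleeps are an assumption about loopback timing.

```c
#include <arpa/inet.h>
#include <errno.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <time.h>
#include <unistd.h>

static void sleep_ms(long ms) {
    struct timespec ts = { ms / 1000, (ms % 1000) * 1000000L };
    nanosleep(&ts, NULL);
}

/* Hypothetical test helper. Returns 1 if the first write after the peer's FIN
 * "succeeds" and a later write fails, 0 otherwise, -1 on setup errors.
 * Assumes Linux and a working loopback interface. */
int reuse_after_peer_close(void) {
    struct sockaddr_in addr;
    socklen_t len = sizeof addr;
    int lfd = -1, cfd = -1, sfd = -1, result = -1;
    ssize_t first, second;

    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK); /* port 0: the kernel picks a free port */
    lfd = socket(AF_INET, SOCK_STREAM, 0);
    if (lfd < 0 || bind(lfd, (struct sockaddr *)&addr, sizeof addr) < 0 ||
        listen(lfd, 1) < 0 || getsockname(lfd, (struct sockaddr *)&addr, &len) < 0)
        goto out;
    cfd = socket(AF_INET, SOCK_STREAM, 0);
    if (cfd < 0 || connect(cfd, (struct sockaddr *)&addr, sizeof addr) < 0)
        goto out;
    if ((sfd = accept(lfd, NULL, NULL)) < 0)
        goto out;

    close(sfd);    /* "upstream" closes the idle connection: FIN is sent */
    sfd = -1;
    sleep_ms(100); /* client kernel gets the FIN (CLOSE_WAIT); the app hasn't looked */

    /* Reuse: the request is only queued locally, so send() reports success... */
    first = send(cfd, "GET / HTTP/1.1\r\n\r\n", 18, MSG_NOSIGNAL);
    sleep_ms(100); /* ...but the closed peer answers that data with RST */
    second = send(cfd, "x", 1, MSG_NOSIGNAL);
    result = (first == 18 && second < 0 && (errno == EPIPE || errno == ECONNRESET)) ? 1 : 0;
out:
    if (sfd >= 0) close(sfd);
    if (cfd >= 0) close(cfd);
    if (lfd >= 0) close(lfd);
    return result;
}
```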

The Envoy community has discussed this problem. Its likelihood can only be reduced, never eliminated:

Github Issue: HTTP1 conneciton pool attach pending request to half-closed connection #2715

The HTTP1 connection pool attach pending request when a response is complete. Though the upstream server may already closed the connection, this will result the pending request attached to it end up with 503.

Countermeasures at the protocol and configuration level:

HTTP/1.1 has this inherent timing issue. As I already explained, this is solved in practice by

a) setting Connection: Closed when closing a connection immediately and

b) having a reasonable idle timeout.

The feature @ramaraochavali is adding will allow setting the idle timeout to less than upstream idle timeout to help with this case. Beyond that, you should be using router level retries.

Ultimately, because of how HTTP/1.1 is designed, this problem cannot be avoided entirely. For idempotent operations you still have to rely on retries.
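A minimal C sketch of that retry rule: retry only on a connection reset, only when the caller has marked the request as idempotent, and only up to a fixed budget. send_with_retry, attempt_result and the flaky_upstream stub are hypothetical names. Envoy's router retries (for example retry_on: reset with num_retries) are configured rather than coded, and in this sketch the decision whether a request is safe to retry is left to the caller.

```c
#include <stdbool.h>

typedef enum { ATTEMPT_OK, ATTEMPT_RESET, ATTEMPT_OTHER_ERROR } attempt_result;
typedef attempt_result (*attempt_fn)(void *ctx);

/* Hypothetical retry loop: retries only connection resets, only for idempotent
 * requests, at most num_retries times. *attempts receives the number of tries. */
attempt_result send_with_retry(attempt_fn attempt, void *ctx, bool idempotent,
                               int num_retries, int *attempts) {
    attempt_result r;
    int tries = 0;
    do {
        r = attempt(ctx); /* a real client would use a fresh connection and back off */
        tries++;
    } while (r == ATTEMPT_RESET && idempotent && tries <= num_retries);
    if (attempts)
        *attempts = tries;
    return r;
}

/* Example stub: reports a reset *(int *)ctx times, then succeeds. */
attempt_result flaky_upstream(void *ctx) {
    int *resets_left = ctx;
    if (*resets_left > 0) {
        (*resets_left)--;
        return ATTEMPT_RESET;
    }
    return ATTEMPT_OK;
}
```

With two resets in a row and num_retries = 2, an idempotent request succeeds on its third attempt, while a non-idempotent one surfaces the first reset to the caller.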

Mitigations in Envoy's implementation

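On the implementation side, the Envoy community once considered making an upstream connection wait through several epoll event cycles before reuse, so that connection-state update events would be picked up first. But that approach wasn't great: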

Github PR: Delay connection reuse for a poll cycle to catch closed connections.#7159(Not Merged)

So poll cycles are not an elegant way to solve this, when you delay N cycles, EOS may arrive in N+1-th cycle. The number is to be determined by the deployment so if we do this it should be configurable.

As noted in #2715, a retry (at Envoy level or application level) is preferred approach, #2715 (comment). Regardless of POST or GET, the status code 503 has a retry-able semantics defined in RFC 7231.

In the end, the approach taken was a connection re-use delay timer:

All well behaving HTTP/1.1 servers indicate they are going to close the connection if they are going to immediately close it (Envoy does this). As I have said over and over again here and in the linked issues, this is well known timing issue with HTTP/1.1.

So to summarize, the options here are to:

Drop this change

Implement it correctly with an optional re-use delay timer.

The final approach was:

Github PR: http: delaying attach pending requests #2871(Merged)

Another approach to #2715, attach pending request in next event after onResponseComplete.

That is, an upstream connection can carry only one HTTP request per epoll event cycle: the same connection cannot be reused by several HTTP requests within one epoll event cycle. This reduces the chance that the kernel socket is already in CLOSE_WAIT (FIN received) while Envoy's user space hasn't noticed and reuses it to send a request. https://github.com/envoyproxy/envoy/pull/2871/files

@@ -209,25 +215,48 @@ void ConnPoolImpl::onResponseComplete(ActiveClient& client) {
     host_->cluster().stats().upstream_cx_max_requests_.inc();
     onDownstreamReset(client);
   } else {
-    processIdleClient(client);
+    // Upstream connection might be closed right after response is complete. Setting delay=true
+    // here to attach pending requests in next dispatcher loop to handle that case.
+    // https://github.com/envoyproxy/envoy/issues/2715
+    processIdleClient(client, true);
   }
 }

Some background: https://github.com/envoyproxy/envoy/issues/23625#issuecomment-1301108769

There's an inherent race condition that an upstream can close a connection at any point and Envoy may not yet know, assign it to be used, and find out it is closed. We attempt to avoid that by returning all connections to the pool to give the kernel a chance to inform us of FINs but can't avoid the race entirely.

As for implementation details, this PR itself had a bug, which was fixed later:

Github Issue: Missed upstream disconnect leading to 503 UC #6190

Github PR: http1: enable reads when final pipeline response received #6578

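A side note: in 2019 Istio maintained its own fork of the Envoy source to fix this problem itself: Istio Github PR: Fix connection reuse by delaying a poll cycle. #73. In the end, though, Istio went back to upstream Envoy, adding only a few necessary Envoy filters implemented in native C++.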
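Envoy's fix defers reuse to the next dispatcher loop so that already-delivered FIN events get processed first. A related technique, swapped in here and not what Envoy does, is to ask the kernel directly before reusing an idle pooled connection: a zero-timeout poll() plus a MSG_PEEK read reveals a FIN that has already arrived. idle_conn_looks_reusable is a hypothetical helper. Like Envoy's approach, it only narrows the window: a FIN still in flight on the network is invisible to it.

```c
#include <errno.h>
#include <poll.h>
#include <stdbool.h>
#include <sys/socket.h>

/* Hypothetical pre-reuse check for an idle pooled HTTP/1.1 connection.
 * Returns true if the kernel has not (yet) reported a FIN, RST or stray data. */
bool idle_conn_looks_reusable(int fd) {
    struct pollfd pfd = { .fd = fd, .events = POLLIN };
    int rc = poll(&pfd, 1, 0); /* zero timeout: only ask what the kernel already knows */
    if (rc == 0)
        return true;           /* nothing pending */
    if (rc < 0 || (pfd.revents & (POLLERR | POLLNVAL)))
        return false;
    char c;
    ssize_t n = recv(fd, &c, 1, MSG_PEEK | MSG_DONTWAIT);
    if (n == 0)
        return false;          /* FIN already received: the kernel socket is CLOSE_WAIT */
    if (n > 0)
        return false;          /* unexpected bytes on an idle connection: don't reuse */
    return errno == EAGAIN || errno == EWOULDBLOCK; /* spurious wakeup; other errors: drop */
}
```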

Mitigations in Istio configuration

Istio Github Issue: Almost every app gets UC errors, 0.012% of all requests in 24h period#13848

apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: passthrough-retries
  namespace: myapp
spec:
  workloadSelector:
    labels:
      app: myapp
  configPatches:
  - applyTo: HTTP_ROUTE
    match:
      context: SIDECAR_INBOUND
      listener:
        portNumber: 8080
        filterChain:
          filter:
            name: "envoy.filters.network.http_connection_manager"
            subFilter:
              name: "envoy.filters.http.router"
    patch:
      operation: MERGE
      value:
        route:
          retry_policy:
            retry_back_off:
              base_interval: 10ms
            retry_on: reset
            num_retries: 2

Or:

apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: qqq-destination-rule
spec:
  host: qqq.aaa.svc.cluster.local
  trafficPolicy:
    connectionPool:
      http:
        idleTimeout: 3s
        maxRetries: 3

Critical states and error logic of Linux connection close

Excerpted from my own write-up: https://devops-insider.mygraphql.com/zh_CN/latest/kernel/network/socket/socket-close/socket-close.html

If you've stuck with it this far, congratulations: we've reached the meat of the matter.

Closing a socket sounds like the simplest thing in the world. Isn't it just a call to close(fd)? Let's go through it step by step.

A model of socket close

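Before analyzing anything, I like to build a model of the parties involved, then refine the model as the analysis proceeds. That way the analysis is more complete, and the chain of cause and effect can be derived and checked. Crucially, the model is reusable.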

Socket close is no exception.

Figure: Model of socket close

The figure above shows machine A and machine B with an established TCP socket. Taking machine A as an example, here is the model:

From the bottom up:

- soft-IRQ / process kernel context: handles incoming IP packets

- the socket object

- the socket object's send buffer

- the socket object's recv buffer

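The process doesn't access the socket object directly. Instead, a VFS layer lets it read and write the socket through a File Descriptor (fd) handle.

- A socket can be referenced by multiple fds.

- The process identifies an fd by an integer id, which it passes as an argument whenever it operates on the fd (calls into the kernel).

- Each fd has a read channel and a write channel that can be closed independently.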

Having covered the static elements of the model, here are some of its rules. They are enforced by kernel code and referenced later in this post:

Closing the socket FD read channel

- When the socket FD read channel is closed, if the recv buffer holds data that has already been ACKed but not read by the application (user space), an RST is sent to the peer. Details: TCP RST and unread socket recv buffer (kernel/network/kernel-tcp/tcp-reset/tcp-reset).

- After the socket FD read channel is closed, any further data received from the peer (TCP half close) is dropped and mercilessly answered with RST.
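The first rule can be observed directly on Linux over loopback. In this hypothetical test helper, a client sends 5 bytes and the server closes its socket either without reading them or after reading them. The client then sees ECONNRESET (RST) in the first case and an orderly EOF (FIN) in the second. peer_close_outcome is an illustrative name, and the 100 ms sleeps are an assumption about loopback timing.

```c
#include <arpa/inet.h>
#include <errno.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <time.h>
#include <unistd.h>

static void sleep_ms(long ms) {
    struct timespec ts = { ms / 1000, (ms % 1000) * 1000000L };
    nanosleep(&ts, NULL);
}

/* Hypothetical test helper. Returns 1 if the client sees ECONNRESET (RST),
 * 0 if it sees EOF (FIN), -1 otherwise. Assumes Linux and a loopback interface. */
int peer_close_outcome(int read_before_close) {
    struct sockaddr_in addr;
    socklen_t len = sizeof addr;
    int lfd = -1, cfd = -1, sfd = -1, result = -1;
    char buf[16];
    ssize_t n;

    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK); /* port 0: the kernel picks a free port */
    lfd = socket(AF_INET, SOCK_STREAM, 0);
    if (lfd < 0 || bind(lfd, (struct sockaddr *)&addr, sizeof addr) < 0 ||
        listen(lfd, 1) < 0 || getsockname(lfd, (struct sockaddr *)&addr, &len) < 0)
        goto out;
    cfd = socket(AF_INET, SOCK_STREAM, 0);
    if (cfd < 0 || connect(cfd, (struct sockaddr *)&addr, sizeof addr) < 0)
        goto out;
    if ((sfd = accept(lfd, NULL, NULL)) < 0)
        goto out;

    if (send(cfd, "hello", 5, MSG_NOSIGNAL) != 5)
        goto out;
    sleep_ms(100); /* the bytes are now sitting in the server's recv buffer */
    if (read_before_close && recv(sfd, buf, sizeof buf, 0) != 5)
        goto out;  /* well-behaved server: consume the request first */
    close(sfd);    /* unread data -> the kernel sends RST; nothing unread -> FIN */
    sfd = -1;
    sleep_ms(100);

    n = recv(cfd, buf, sizeof buf, 0);
    if (n == 0)
        result = 0; /* orderly EOF: the server sent FIN */
    else if (n < 0 && errno == ECONNRESET)
        result = 1; /* "Connection reset by peer" */
out:
    if (sfd >= 0) close(sfd);
    if (cfd >= 0) close(cfd);
    if (lfd >= 0) close(lfd);
    return result;
}
```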

Relevant TCP protocol background

Only the close-related parts are covered here.

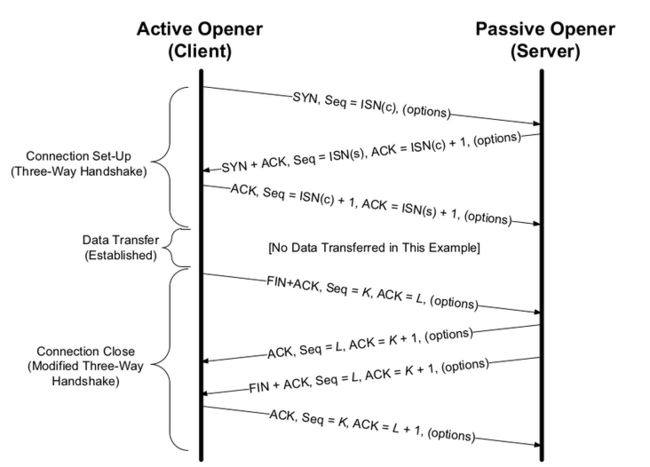

:::{figure-md} 图:TCP 一般关闭流程

:class: full-width

图:TCP 一般关闭流程 - from [TCP.IP.Illustrated.Volume.1.The.Protocols]

:::

TCP Half-Close

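TCP is a full-duplex connection. By design, the protocol supports closing the stream in one direction, even for a long time, while keeping the other direction open. There is a dedicated term for this: Half-Close.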

Figure: TCP Half-Close sequence - from [TCP.IP.Illustrated.Volume.1.The.Protocols]

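Put simply, when one side knows it won't send any more data, it can close its own write stream first and send FIN to the peer, telling it: I won't be sending any more data.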

Closing a socket fd

According to [W. Richard Stevens, Bill Fenner, Andrew M. Rudoff - UNIX Network Programming, Volume 1], there are two functions for closing a socket fd:

- close(fd)

- shutdown(fd)

close(fd)

[W. Richard Stevens, Bill Fenner, Andrew M. Rudoff - UNIX Network Programming, Volume 1] - 4.9 close Function

Since the reference count was still greater than 0, this call to close did not initiate TCP’s four-packet connection termination sequence.

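This means a socket carries a reference count of the fds that refer to it. close decrements the count, and only when it reaches 0 does the connection termination sequence (described below) start.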
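The reference-count rule is easy to check. This sketch uses an AF_UNIX socketpair for brevity; the descriptor reference counting is the same for a TCP socket, where the peer would see a FIN rather than a plain EOF. close_respects_refcount and sees_eof are hypothetical helper names.

```c
#include <stdbool.h>
#include <sys/socket.h>
#include <unistd.h>

/* Non-blocking peek: true if fd already reports EOF from its peer. */
static bool sees_eof(int fd) {
    char c;
    return recv(fd, &c, 1, MSG_PEEK | MSG_DONTWAIT) == 0;
}

/* Returns true if closing one of two descriptors leaves the connection open,
 * and closing the last one makes the peer see EOF. */
bool close_respects_refcount(void) {
    int sv[2];
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) != 0)
        return false;
    int extra = dup(sv[0]); /* a second fd referencing the same socket */
    if (extra < 0) {
        close(sv[0]);
        close(sv[1]);
        return false;
    }
    close(sv[0]);                           /* refcount 2 -> 1: nothing reaches the peer */
    bool eof_after_first = sees_eof(sv[1]);
    close(extra);                           /* refcount 1 -> 0: termination starts */
    bool eof_after_last = sees_eof(sv[1]);
    close(sv[1]);
    return !eof_after_first && eof_after_last;
}
```

This is also why a process that fork()s, or otherwise shares socket fds, can keep a connection alive after the code that "owns" it has called close().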

The default action of close with a TCP socket is to mark the socket as closed and return to the process immediately. The fd is no longer usable by the process: It cannot be used as an argument to read or write. But, TCP will try to send any data that is already queued to be sent to the other end, and after this occurs, the normal TCP connection termination sequence takes place.

we will describe the SO_LINGER socket option, which lets us change this default action with a TCP socket.

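In other words: by default this function returns immediately, and a closed fd can no longer be read or written. In the background the kernel starts the connection termination sequence, and once everything in the socket send buffer has been sent, it finally sends FIN to the peer.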

Let's set SO_LINGER aside for now.

close(fd) actually closes both the fd's read channel and its write channel. So, by the model rule above:

After the socket FD read channel is closed, any further data received from the peer is dropped and mercilessly answered with RST.

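Once a socket has been closed with close(fd) (that is, after the call returns), if the peer keeps sending data, either because it hasn't received the FIN yet or because it received the FIN but treats it as merely a half-closed TCP connection, the kernel mercilessly answers with RST: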

Figure: A closed socket receives data and answers with RST - from [UNIX Network Programming, Volume 1] - SO_LINGER Socket Option

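This is a "flaw" in the TCP protocol design. FIN can only tell the peer "I'm closing my outbound stream"; there is no way to tell the peer "I don't want any more data, I'm closing my inbound stream". Yet the kernel implementation can close the inbound stream, and since that close cannot be signaled at the TCP level, misunderstandings arise.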

shutdown(fd)

As programmers, let's start with the function's documentation.

#include <sys/socket.h>
int shutdown(int sockfd, int howto);

[UNIX Network Programming, Volume 1] - 6.6 shutdown Function

The action of the function depends on the value of the howto argument.

- SHUT_RD

  The read half of the connection is closed: No more data can be received on the socket and any data currently in the socket receive buffer is discarded. The process can no longer issue any of the read functions on the socket. Any data received after this call for a TCP socket is acknowledged and then silently discarded.

- SHUT_WR

  The write half of the connection is closed: In the case of TCP, this is called a half-close (Section 18.5 of TCPv1). Any data currently in the socket send buffer will be sent, followed by TCP’s normal connection termination sequence. As we mentioned earlier, this closing of the write half is done regardless of whether or not the socket descriptor’s reference count is currently greater than 0. The process can no longer issue any of the write functions on the socket.

- SHUT_RDWR

  The read half and the write half of the connection are both closed: This is equivalent to calling shutdown twice: first with SHUT_RD and then with SHUT_WR.

[UNIX Network Programming, Volume 1] - 6.6 shutdown Function

The normal way to terminate a network connection is to call the close function. But, there are two limitations with close that can be avoided with shutdown:

- close decrements the descriptor’s reference count and closes the socket only if the count reaches 0. We talked about this in Section 4.8. With shutdown, we can initiate TCP’s normal connection termination sequence (the four segments beginning with a FIN), regardless of the reference count.

- close terminates both directions of data transfer, reading and writing. Since a TCP connection is full-duplex, there are times when we want to tell the other end that we have finished sending, even though that end might have more data to send us (i.e., a half-closed TCP connection).

In other words, shutdown(fd) can close the fd in just one direction of the full-duplex connection. There are generally two use cases:

- Close only the outbound (write) direction, implementing a TCP half close.

- Close both the outbound and inbound directions (write & read).
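Both differences from close() show up in a few lines: after shutdown(fd, SHUT_WR) the peer sees EOF immediately even though a dup()'d descriptor still references the socket, and the peer can still send data back, which this side can still read. As before, an AF_UNIX socketpair stands in for a TCP connection for brevity, and half_close_demo is a hypothetical name.

```c
#include <stdbool.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Half-close via shutdown(SHUT_WR) while a dup()'d descriptor is still open.
 * Returns true if the peer sees EOF immediately and can still reply to us. */
bool half_close_demo(void) {
    int sv[2];
    char c, buf[8];
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) != 0)
        return false;
    int extra = dup(sv[0]); /* keeps the socket's reference count above 1 */
    bool ok = extra >= 0 && shutdown(sv[0], SHUT_WR) == 0; /* EOF despite the extra fd */
    if (ok) {
        /* Peer side: reads EOF, but its own write direction is still open. */
        bool peer_eof = recv(sv[1], &c, 1, MSG_DONTWAIT) == 0;
        bool peer_sent = send(sv[1], "resp", 4, 0) == 4;
        /* Our side: can no longer write, but can still read the reply. */
        ssize_t n = recv(extra, buf, sizeof buf, 0);
        ok = peer_eof && peer_sent && n == 4 && memcmp(buf, "resp", 4) == 0;
    }
    if (extra >= 0)
        close(extra);
    close(sv[0]);
    close(sv[1]);
    return ok;
}
```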

Figure: TCP Half-Close with shutdown(fd) - from [UNIX Network Programming, Volume 1]

SO_LINGER

[UNIX Network Programming, Volume 1] - SO_LINGER Socket Option

This option specifies how the close function operates for a connection-oriented protocol (e.g., for TCP and SCTP, but not for UDP). By default, close returns immediately, but if there is any data still remaining in the socket send buffer, the system will try to deliver the data to the peer.

The default behavior of close(fd) is:

- The function returns immediately. In the background, the kernel starts the normal close sequence: it asynchronously sends the data in the socket send buffer and finally sends FIN.

- Any data in the socket receive buffer is discarded.

SO_LINGER, as the name suggests, is about "lingering".

SO_LINGER is a socket option, defined as follows:

struct linger {
    int l_onoff;  /* 0=off, nonzero=on */
    int l_linger; /* linger time, POSIX specifies units as seconds */
};

SO_LINGER affects the behavior of close(fd) as follows:

- If l_onoff is 0, the option is turned off. The value of l_linger is ignored and the previously discussed TCP default applies: close returns immediately. (This is the default behavior.)

- If l_onoff is nonzero and l_linger is zero, TCP aborts the connection when it is closed (pp. 1019 – 1020 of TCPv2). That is, TCP discards any data still remaining in the socket send buffer and sends an RST to the peer, not the normal four-packet connection termination sequence. This avoids TCP’s TIME_WAIT state, but in doing so, leaves open the possibility of another incarnation of this connection being created within 2MSL seconds and having old duplicate segments from the just-terminated connection being incorrectly delivered to the new incarnation. (That is, the RST lets the local port be reclaimed quickly, with side effects, of course.)

- If l_onoff is nonzero and l_linger is nonzero, then the kernel will linger when the socket is closed (p. 472 of TCPv2). That is, if there is any data still remaining in the socket send buffer, the process is put to sleep until either: (that is, the process calling close(fd) waits until the data is sent and ACKed, or the timeout expires)

  - all the data is sent and acknowledged by the peer TCP

  - or the linger time expires.

If the socket has been set to nonblocking, it will not wait for the close to complete, even if the linger time is nonzero. When using this feature of the SO_LINGER option, it is important for the application to check the return value from close, because if the linger time expires before the remaining data is sent and acknowledged, close returns EWOULDBLOCK and any remaining data in the send buffer is discarded. (That is, with this option on, if the ACK hasn't arrived before the timeout, data in the socket send buffer may be lost.)
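For reference, this is how the options above are set from C. set_linger and abort_connection are hypothetical helper names around the standard setsockopt(SOL_SOCKET, SO_LINGER) call. abort_connection is the l_onoff = 1, l_linger = 0 case, i.e. the RST-based abortive close with the TIME_WAIT side effects described above, so using it should be a deliberate choice rather than a default.

```c
#include <sys/socket.h>
#include <unistd.h>

/* Sets SO_LINGER on fd using struct linger as defined above. */
int set_linger(int fd, int onoff, int linger_secs) {
    struct linger lg;
    lg.l_onoff = onoff;
    lg.l_linger = linger_secs;
    return setsockopt(fd, SOL_SOCKET, SO_LINGER, &lg, sizeof lg);
}

/* Abortive close (l_onoff = 1, l_linger = 0). On a connected TCP socket, close()
 * then discards the send buffer and sends RST instead of FIN, skipping TIME_WAIT. */
int abort_connection(int fd) {
    if (set_linger(fd, 1, 0) != 0) {
        close(fd);
        return -1;
    }
    return close(fd);
}
```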

Summary of close/shutdown and SO_LINGER

[UNIX Network Programming, Volume 1] - SO_LINGER Socket Option

| Function | Description |
|---|---|
| shutdown, SHUT_RD | No more receives can be issued on socket; process can still send on socket; socket receive buffer discarded; any further data received is discarded; no effect on socket send buffer. |
| shutdown, SHUT_WR (the most common use of shutdown) | No more sends can be issued on socket; process can still receive on socket; contents of socket send buffer sent to other end, followed by normal TCP connection termination (FIN); no effect on socket receive buffer. |
| close, l_onoff = 0 (default) | No more receives or sends can be issued on socket; contents of socket send buffer sent to other end. If descriptor reference count becomes 0: normal TCP connection termination (FIN) sent following data in send buffer; socket receive buffer discarded. (That is, data in the recv buffer not yet read by user space is discarded. Note: on Linux, if already-ACKed data has not been read by user space, an RST is sent to the peer.) |
| close, l_onoff = 1, l_linger = 0 | No more receives or sends can be issued on socket. If descriptor reference count becomes 0: RST sent to other end; connection state set to CLOSED (no TIME_WAIT state); socket send buffer and socket receive buffer discarded. |
| close, l_onoff = 1, l_linger != 0 | No more receives or sends can be issued on socket; contents of socket send buffer sent to other end. If descriptor reference count becomes 0: normal TCP connection termination (FIN) sent following data in send buffer; socket receive buffer discarded; and if linger time expires before connection CLOSED, close returns EWOULDBLOCK and any remaining data in the send buffer is discarded. (That is, with this option on, if the ACK hasn't arrived before the timeout, data in the socket send buffer may be lost.) |

Good further reading:

Closing thoughts

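As long as the long-term direction isn't badly wrong, sustained investment and focus won't necessarily solve the problem at hand or pay off right away. But some day they will surprise you. To every engineer.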