python和苹果_苹果手机评论情感分析(附python源码和评论数据)

原标题:苹果手机评论情感分析(附python源码和评论数据)

首先抓取网页上的数据,每一页十条评论,生成为一个txt文件。

数据链接

回复公众号 datadw 关键字“苹果”获取。

以下采用既有词典的方式:

准备四本词典,停用词,否定词,程度副词,情感词,链接也给出来:

回复公众号 datadw 关键字“苹果”获取。

[python]view plaincopy

f=open(r'C:/Users/user/Desktop/stopword.dic')#停止词

stopwords = f.readlines()

stopwords=[i.replace("n","").decode("utf-8")foriinstopwords]

fromcollectionsimportdefaultdict

# (1) 情感词

f1 =open(r"C:UsersuserDesktopBosonNLP_sentiment_score.txt")

senList = f1.readlines()

senDict = defaultdict()

forsinsenList:

s=s.decode("utf-8").replace("n","")

senDict[s.split(' ')[0]] = float(s.split(' ')[1])

# (2) 否定词

f2=open(r"C:UsersuserDesktopnotDict.txt")

notList = f2.readlines()

notList=[x.decode("utf-8").replace("n","")forxinnotListifx !='']

# (3) 程度副词

f3=open(r"C:UsersuserDesktopdegreeDict.txt")

degreeList = f3.readlines()

degreeDict = defaultdict()

fordindegreeList:

d=d.decode("utf-8")

degreeDict[d.split(',')[0]] = float(d.split(',')[1])

导入数据并且分词

[python]view plaincopy

importjieba

defsent2word(sentence):

"""

Segment a sentence to words

Delete stopwords

"""

segList = jieba.cut(sentence)

segResult = []

forwinsegList:

segResult.append(w)

newSent = []

forwordinsegResult:

ifwordinstopwords:

# print "stopword: %s" % word

continue

else:

newSent.append(word)

returnnewSent

importos

path = u"C:/Users/user/Desktop/comments/"

listdir = os.listdir(path)

t=[]

foriinlistdir:

f=open(path+i).readlines()

forjinf:

t.append(sent2word(j))

计算一下得分,注意,程度副词和否定词只修饰后面的情感词,这是缺点之一,之二是无法判断某些贬义词其实是褒义的,之三是句子越长得分高的可能性比较大,在此可能应该出去词的总数。

[python]view plaincopy

defclass_score(word_lists):

id=[]

foriinword_lists:

ifiinsenDict.keys():

id.append(1)

elifiinnotList:

id.append(2)

elifiindegreeDict.keys():

id.append(3)

word_nake=[]

foriinword_lists:

ifiinsenDict.keys():

word_nake.append(i)

elifiinnotList:

word_nake.append(i)

elifiindegreeDict.keys():

word_nake.append(i)

score=0

w=1

score0=0

foriinrange(len(id)):

# if id[i] ==3 and id[i+1]==2 and id[i+2]==1:

# score0 = (-1)*degreeWord[word_nake[i+1]]*senWord[word_nake[i+2]]

ifid[i]==1:

score0=w*senDict[word_nake[i]]

w=1

elifid[i]==2:

w=-1

elifid[i]==3:

w=w*degreeDict[word_nake[i]]

# print degreeWord[word_nake[i]]

score=score+score0

score0=0

returnscore

[python]view plaincopy

importxlwt

wb=xlwt.Workbook()

sheet=wb.add_sheet('score')

num=390

writings=""

foriint[389:]:

print"第",num,"条得分",class_score(i[:-1])

sheet.write(num-1,0,class_score(i[:-1]))

num=num+1

wb.save(r'C:/Users/userg/Desktop/result.xlsx')

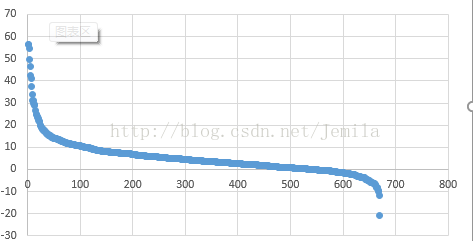

排序之后图标如下,可以看出积极正面的得分比较多,负面的比较少,根据原网页的评分确实如此,然而点评为1星的有1半得分为正,点评为5星的有四分之一得分为负。基于词典的方式严重依赖词典的质量,以及这种方式的缺点都可能造成得分的偏差,所以接下来打算利用word2vec试试。

词向量的变换方式如下:

[python]view plaincopy

fromgensim.modelsimportword2vec

importlogging

logging.basicConfig(format = '%(asctime)s : %(levelname)s : %(message)s', level = logging.INFO)

sentences = word2vec.Text8Corpus("corpus.csv")# 加载语料

model = word2vec.Word2Vec(sentences, size = 400)# 训练skip-gram模型,根据单词寻找周边词

# 保存模型,以便重用

model.save("corpus.model")

# 对应的加载方式

# model = word2vec.Word2Vec.load("corpus.model")

from gensim.models import word2vec

# load word2vec model

model = word2vec.Word2Vec.load("corpus.model")

model.save_word2vec_format("corpus.model.bin", binary =True)

model = word2vec.Word2Vec.load_word2vec_format("corpus.model.bin", binary =True)

加载一下评分

[python]view plaincopy

stars=open("C:UsersuserDesktopstarsstars.txt").readlines()

stars=[ int(i.split(".")[0])foriinstars]

#三类

y=[]

foriinstars:

ifi ==1ori ==2:

y.append(-1)

elifi ==3:

y.append(0)

elifi==4ori==5:

y.append(1)

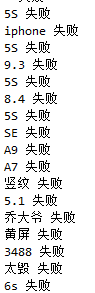

转换成词向量,发现里面有2个失败并且删除

[python]view plaincopy

importnumpy as np

importsys

reload(sys)

sys.setdefaultencoding("utf-8")

defgetWordVecs(wordList):

vecs = []

forwordinwordList:

try:

vecs.append(model[word])

exceptKeyError:

continue

returnnp.array(vecs, dtype ='float')

defbuildVecs(list):

posInput = []

# print txtfile

forlineinlist:

# print u"第",id,u"条"

resultList = getWordVecs(line)

# for each sentence, the mean vector of all its vectors is used to represent this sentence

iflen(resultList) !=0:

resultArray = sum(np.array(resultList))/len(resultList)

posInput.append(resultArray)

else:

returnposInput

X = np.array(buildVecs(t))

#327 408失败

del(y[326])

del(y[407])

y = np.array(y)

PCA降维并运用SVM进行分类

[python]view plaincopy

importmatplotlib.pyplot as plt

fromsklearn.decompositionimportPCA

# Plot the PCA spectrum

pca = PCA(n_components=400)

pca.fit(X)

plt.figure(1, figsize=(4,3))

plt.clf()

plt.axes([.2, .2, .7, .7])

plt.plot(pca.explained_variance_, linewidth=2)

plt.axis('tight')

plt.xlabel('n_components')

plt.ylabel('explained_variance_')

X_reduced = PCA(n_components = 100).fit_transform(X)

fromsklearn.cross_validationimporttrain_test_split

X_reduced_train,X_reduced_test,y_reduced_train,y_reduced_test= train_test_split(X, y, test_size=0.33, random_state=42)

fromsklearn.svmimportSVC

fromsklearnimportmetrics#准确度

clf = SVC(C = 2, probability =True)

clf.fit(X_reduced_train, y_reduced_train)

pred_probas = clf.predict(X_reduced_test)

scores =[]

scores.append(metrics.accuracy_score(pred_probas, y_reduced_test))

printscores

降维后的准确度为auc=0.83,相比MLP神经网络的准确度0.823来说结果差不多,以下是MLP的代码。对于利用word2vec来说,其结果依赖于语料库的词语量大小,我打印了部分失败的词语如下,表明在语料库中并没有找到相关的词,导致向量的表达信息有所缺失。

[python]view plaincopy

fromkeras.modelsimportSequential

fromkeras.layersimportDense, Dropout, Activation

fromkeras.optimizersimportSGD

model = Sequential()

model.add(Dense(512, input_dim =400, init ='uniform', activation ='tanh'))

model.add(Dropout(0.7))

# Dropout的意思就是训练和预测时随机减少特征个数,即去掉输入数据中的某些维度,用于防止过拟合。

model.add(Dense(256, activation ='relu'))

model.add(Dropout(0.7))

model.add(Dense(128, activation ='relu'))

model.add(Dropout(0.7))

model.add(Dense(64, activation ='relu'))

model.add(Dropout(0.7))

model.add(Dense(32, activation ='relu'))

model.add(Dropout(0.7))

model.add(Dense(16, activation ='relu'))

model.add(Dropout(0.7))

model.add(Dense(1, activation ='sigmoid'))

model.compile(loss = 'binary_crossentropy',

optimizer = 'adam',

metrics = ['accuracy'])

model.fit(X_reduced_train, y_reduced_train, nb_epoch = 20, batch_size =16)

score = model.evaluate(X_reduced_test, y_reduced_test, batch_size = 16)

print('Test accuracy: ', score[1])

原文:http://blog.csdn.net/Jemila/article/details/62887907?locationNum=7&fps=1返回搜狐,查看更多

责任编辑: