PyTorch-StudioGAN README (translation)

StudioGAN

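StudioGAN is a PyTorch library that provides implementations of representative Generative Adversarial Networks (GANs) for conditional/unconditional image generation.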

StudioGAN aims to offer an identical playground for modern GANs so that machine learning researchers can readily compare and analyze a new idea.

ipynb documentation: how to use StudioGAN in a Colab environment

Features

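1. Extensive GAN implementations in PyTorch

2. Comprehensive benchmark of GANs using the CIFAR10, Tiny ImageNet, and ImageNet datasets

3. Better performance and lower memory consumption than the original implementations

4. Pre-trained models that are fully compatible with up-to-date PyTorch environments

5. Support for multi-GPU training (DP, DDP, and multi-node DistributedDataParallel), mixed precision, synchronized batch normalization, LARS, TensorBoard visualization, and other analysis methods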

Implemented GANs

The authors provide implementations of 18+ state-of-the-art (SOTA) GANs, including DCGAN, LSGAN, GGAN, WGAN-WC, WGAN-GP, WGAN-DRA, ACGAN, ProjGAN, SNGAN, SAGAN, BigGAN, BigGAN-Deep, CRGAN, ICRGAN, LOGAN, DiffAugGAN, ADAGAN, ContraGAN, and FreezeD.

Experiments on Tiny ImageNet are conducted using the ResNet architecture instead of CNN.

EMA: Exponential Moving Average update to the generator.


cBN: conditional Batch Normalization.

AC: Auxiliary Classifier.

PD: Projection Discriminator.

CL: Contrastive Learning.
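As a minimal sketch of the EMA idea (not StudioGAN's own code), each generator weight is blended into a separate running copy after every update: ema = decay * ema + (1 - decay) * weight. Plain floats in a dict stand in for tensors here; the helper name `ema_update` and the default decay of 0.999 are illustrative assumptions.

```python
def ema_update(ema_params, params, decay=0.999):
    """Blend the current generator weights into the EMA copy, in place.

    ema_params, params: dicts mapping parameter names to floats
    (stand-ins for tensors in this hypothetical sketch).
    """
    for name, value in params.items():
        ema_params[name] = decay * ema_params[name] + (1.0 - decay) * value
    return ema_params


# Usage: a weight held at 1.0 pulls the EMA copy toward 1.0 gradually.
ema = {"w": 0.0}
for _ in range(10):
    ema_update(ema, {"w": 1.0}, decay=0.5)
print(ema["w"])  # 0.9990234375, i.e. 1 - 0.5**10
```

The EMA copy changes slowly, which smooths out step-to-step noise in the generator weights; it is typically the copy used to sample images at evaluation time.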

To be Implemented

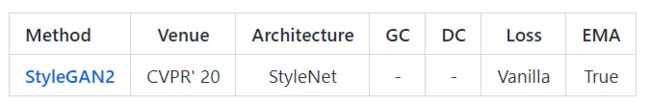

StyleGAN2

GC: AdaIN, which stands for Adaptive Instance Normalization.
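In StyleGAN-style generators, AdaIN(x, y) = y_s · (x − μ(x)) / σ(x) + y_b: each feature channel is normalized with its own mean and standard deviation, then re-scaled and shifted by a scale y_s and bias y_b computed from the style code. The sketch below applies that formula to one channel of plain Python floats; the function name, argument names, and eps value are assumptions for illustration.

```python
import math


def adain(content, style_scale, style_bias, eps=1e-5):
    """AdaIN for a single feature channel (hypothetical helper).

    Normalizes `content` to zero mean / unit variance using its own
    statistics, then applies the style-dependent scale and bias.
    """
    n = len(content)
    mean = sum(content) / n
    var = sum((x - mean) ** 2 for x in content) / n
    std = math.sqrt(var + eps)
    return [style_scale * (x - mean) / std + style_bias for x in content]


out = adain([1.0, 2.0, 3.0, 4.0], style_scale=2.0, style_bias=10.0)
print([round(v, 3) for v in out])
```

Whatever the input statistics were, the output channel ends up with mean 10 and a standard deviation close to 2, so the style code alone controls those statistics.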

Requirements

Please refer to Requirements.md for more information.

requirements.md

Requirements

Anaconda

Python >= 3.6

6.0.0 <= Pillow <= 7.0.0

scipy == 1.1.0 (Recommended for fast loading of Inception Network)

sklearn

seaborn

h5py

tqdm

torch >= 1.6.0 (Recommended for mixed precision training and knn analysis)

torchvision >= 0.7.0

tensorboard

5.4.0 <= gcc <= 7.4.0 (Recommended for proper use of adaptive discriminator augmentation module)

torchlars (needed to use the LARS optimizer; install it by typing “pip install torchlars” on the command line)

You can install the recommended environment as follows:

conda env create -f environment.yml -n studiogan

With docker, you can use:

docker pull mgkang/studiogan:latest

This is the command to create a container named "studioGAN". You can also use port 6006 to connect to TensorBoard.

docker run -it --gpus all --shm-size 128g -p 6006:6006 --name studioGAN -v /home/USER:/root/code --workdir /root/code mgkang/studiogan:latest /bin/bash

Quick Start

Train (-t) and evaluate (-e) the model defined in CONFIG_PATH using GPU 0:

CUDA_VISIBLE_DEVICES=0 python3 src/main.py -t -e -c CONFIG_PATH

Train (-t) and evaluate (-e) the model defined in CONFIG_PATH using GPUs (0, 1, 2, 3) and DataParallel:

CUDA_VISIBLE_DEVICES=0,1,2,3 python3 src/main.py -t -e -c CONFIG_PATH

Try python3 src/main.py to see the available options.

Monitor IS, FID, F_beta, authenticity accuracies, and the largest singular values via TensorBoard:

~ PyTorch-StudioGAN/logs/RUN_NAME>>> tensorboard --logdir=./ --port PORT

Dataset

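- CIFAR10: StudioGAN will automatically download the dataset once you execute main.py.

- Tiny ImageNet, ImageNet, or a custom dataset:

  1. Download Tiny ImageNet and ImageNet, or prepare your own dataset.

  2. Make the folder structure of the dataset as follows: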

┌── docs
├── src
└── data
    └── ILSVRC2012 or TINY_ILSVRC2012 or CUSTOM
        ├── train
        │   ├── cls0
        │   │   ├── train0.png
        │   │   ├── train1.png
        │   │   └── ...
        │   ├── cls1
        │   └── ...
        └── valid
            ├── cls0
            │   ├── valid0.png
            │   ├── valid1.png
            │   └── ...
            ├── cls1
            └── ...
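Before training on a custom dataset, it can help to check that the layout above is what a class-per-folder loader expects. The sketch below follows the convention of torchvision's ImageFolder (class folders sorted by name, labels numbered 0, 1, 2, ...). Whether StudioGAN assigns labels exactly this way is an assumption, and `index_split` and the accepted file suffixes are hypothetical.

```python
from pathlib import Path

# Hypothetical set of accepted image extensions.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def index_split(root, split):
    """List (image_path, label) pairs for root/split/<class>/<image>.

    Class folders are sorted by name and numbered 0, 1, 2, ...;
    files with other extensions are skipped.
    """
    split_dir = Path(root) / split
    classes = sorted(p.name for p in split_dir.iterdir() if p.is_dir())
    class_to_idx = {name: idx for idx, name in enumerate(classes)}
    samples = []
    for name in classes:
        for img in sorted((split_dir / name).iterdir()):
            if img.is_file() and img.suffix.lower() in IMAGE_SUFFIXES:
                samples.append((str(img), class_to_idx[name]))
    return class_to_idx, samples
```

For example, index_split("data/CUSTOM", "train") and index_split("data/CUSTOM", "valid") should report the same class-to-label mapping; if they do not, the train and valid folders contain different class directories.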

Supported Training Techniques

DistributedDataParallel (Please refer to Here)

### NODE_0, 4_GPUs, All ports are open to NODE_1

docker run -it --gpus all --shm-size 128g --name studioGAN --network=host -v /home/USER:/root/code --workdir /root/code mgkang/studiogan:latest /bin/bash

~/code>>> export NCCL_SOCKET_IFNAME=^docker0,lo

~/code>>> export MASTER_ADDR=PUBLIC_IP_OF_NODE_0

~/code>>> export MASTER_PORT=AVAILABLE_PORT_OF_NODE_0

~/code/PyTorch-StudioGAN>>> CUDA_VISIBLE_DEVICES=0,1,2,3 python3 src/main.py -t -e -DDP -n 2 -nr 0 -c CONFIG_PATH

### NODE_1, 4_GPUs, All ports are open to NODE_0

docker run -it --gpus all --shm-size 128g --name studioGAN --network=host -v /home/USER:/root/code --workdir /root/code mgkang/studiogan:latest /bin/bash

~/code>>> export NCCL_SOCKET_IFNAME=^docker0,lo

~/code>>> export MASTER_ADDR=PUBLIC_IP_OF_NODE_0

~/code>>> export MASTER_PORT=AVAILABLE_PORT_OF_NODE_0

~/code/PyTorch-StudioGAN>>> CUDA_VISIBLE_DEVICES=0,1,2,3 python3 src/main.py -t -e -DDP -n 2 -nr 1 -c CONFIG_PATH

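StudioGAN does not support DDP training for ContraGAN. This is because contrastive learning requires a "gather" operation to calculate the exact conditional contrastive loss.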
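The -n and -nr flags in the two DDP commands appear to give the number of nodes and the rank of the current node. Under the common one-process-per-GPU convention, those values fix the world size and each process's global rank, and all processes then presumably rendezvous at MASTER_ADDR:MASTER_PORT on node 0. The sketch below shows only that arithmetic; the convention and the helper `ddp_layout` are assumptions, not taken from StudioGAN's source.

```python
def ddp_layout(num_nodes, node_rank, gpus_per_node):
    """Return (world_size, global ranks) for one node of a multi-node DDP job.

    Assumes one process per GPU with ranks numbered node by node
    (hypothetical helper illustrating a common convention).
    """
    if not 0 <= node_rank < num_nodes:
        raise ValueError("node_rank must be in [0, num_nodes)")
    world_size = num_nodes * gpus_per_node
    ranks = [node_rank * gpus_per_node + local_rank for local_rank in range(gpus_per_node)]
    return world_size, ranks


# The two commands: 2 nodes (-n 2), 4 GPUs each, node ranks 0 and 1.
print(ddp_layout(2, 0, 4))  # (8, [0, 1, 2, 3])
print(ddp_layout(2, 1, 4))  # (8, [4, 5, 6, 7])
```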

Mixed Precision Training

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -t -mpc -c CONFIG_PATH

Standing Statistics

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -e -std_stat --standing_step STANDING_STEP -c CONFIG_PATH

Synchronized BatchNorm

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -t -sync_bn -c CONFIG_PATH

Load All Data in Main Memory

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -t -l -c CONFIG_PATH

LARS

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -t -l -c CONFIG_PATH -LARS
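LARS scales each layer's step by a trust ratio proportional to ||w|| / ||∇w||, so a layer whose gradient is large relative to its weights takes a smaller step; this is what makes very large batch sizes trainable. A minimal single-layer sketch on plain lists is below. The trust coefficient, epsilon, and zero-norm fallback are illustrative choices and may differ from torchlars, which StudioGAN relies on; the helper names are hypothetical.

```python
import math


def lars_local_lr(weights, grads, trust_coef=0.001, weight_decay=0.0, eps=1e-8):
    """Per-layer learning-rate multiplier (trust ratio) in the style of LARS."""
    w_norm = math.sqrt(sum(w * w for w in weights))
    g_norm = math.sqrt(sum(g * g for g in grads))
    if w_norm == 0.0 or g_norm == 0.0:
        return 1.0  # no reliable ratio: fall back to the global learning rate
    return trust_coef * w_norm / (g_norm + weight_decay * w_norm + eps)


def lars_sgd_step(weights, grads, lr, trust_coef=0.001, weight_decay=0.0):
    """One plain SGD step for a single layer, scaled by the trust ratio."""
    local_lr = lars_local_lr(weights, grads, trust_coef, weight_decay)
    return [w - lr * local_lr * (g + weight_decay * w) for w, g in zip(weights, grads)]


# Weight norm 5 and gradient norm 1 give a multiplier of about 0.001 * 5 / 1.
print(lars_local_lr([3.0, 4.0], [0.0, 1.0]))
```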

Analyzing Generated Images

StudioGAN supports image visualization, K-nearest neighbor analysis, linear interpolation, and frequency analysis. All results will be saved in ./figures/RUN_NAME/*.png.


Image Visualization

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -iv -std_stat --standing_step STANDING_STEP -c CONFIG_PATH --checkpoint_folder CHECKPOINT_FOLDER --log_output_path LOG_OUTPUT_PATH

K-Nearest Neighbor Analysis (we fix K=7; the images in the first column are generated images)

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -knn -std_stat --standing_step STANDING_STEP -c CONFIG_PATH --checkpoint_folder CHECKPOINT_FOLDER --log_output_path LOG_OUTPUT_PATH
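Conceptually, the analysis ranks real images by their distance to each generated image and keeps the K=7 closest, which helps reveal whether the generator is simply memorizing training images. The feature space StudioGAN uses for the distance is not stated here, so the sketch below (hypothetical `k_nearest`) works on plain vectors that could stand for pixels or network embeddings.

```python
def k_nearest(query, bank, k=7):
    """Indices of the k vectors in `bank` closest to `query`.

    Ranks by squared Euclidean distance, which gives the same order
    as the Euclidean distance itself.
    """
    def sq_dist(vec):
        return sum((a - b) ** 2 for a, b in zip(query, vec))

    ranked = sorted(range(len(bank)), key=lambda i: sq_dist(bank[i]))
    return ranked[:k]


# Toy "real image" features on a line; the query sits between items 0 and 1.
real_features = [[float(i), 0.0] for i in range(10)]
print(k_nearest([0.2, 0.0], real_features, k=3))  # [0, 1, 2]
```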

Linear Interpolation (applicable only to conditional Big ResNet models)

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -itp -std_stat --standing_step STANDING_STEP -c CONFIG_PATH --checkpoint_folder CHECKPOINT_FOLDER --log_output_path LOG_OUTPUT_PATH
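Linear interpolation walks between two latent vectors and decodes each intermediate point, showing how smoothly the generator's output changes along the path. The latent-path arithmetic is sketched below with a hypothetical `lerp` helper. Which inputs StudioGAN interpolates (noise, class embedding, or both) is not specified here, and passing each vector through a generator is left out.

```python
def lerp(z0, z1, steps):
    """Return `steps` vectors evenly spaced from z0 to z1, endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    path = []
    for s in range(steps):
        t = s / (steps - 1)  # goes 0.0 -> 1.0
        path.append([(1.0 - t) * a + t * b for a, b in zip(z0, z1)])
    return path


print(lerp([0.0, 0.0], [1.0, 2.0], 3))  # [[0.0, 0.0], [0.5, 1.0], [1.0, 2.0]]
```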

Frequency Analysis

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -fa -std_stat --standing_step STANDING_STEP -c CONFIG_PATH --checkpoint_folder CHECKPOINT_FOLDER --log_output_path LOG_OUTPUT_PATH

TSNE Analysis

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -tsne -std_stat --standing_step STANDING_STEP -c CONFIG_PATH --checkpoint_folder CHECKPOINT_FOLDER --log_output_path LOG_OUTPUT_PATH

Metrics

Inception Score (IS)

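Inception Score (IS) is a metric that measures how well a GAN generates high-fidelity and diverse images. Calculating IS requires the pre-trained Inception-V3 network, and recent approaches use OpenAI's TensorFlow implementation.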

To compute the official IS, you have to make a "samples.npz" file using the command below:

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -s -c CONFIG_PATH --checkpoint_folder CHECKPOINT_FOLDER --log_output_path LOG_OUTPUT_PATH

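It will automatically create the samples.npz file at ./samples/RUN_NAME/fake/npz/samples.npz. After that, execute the official TensorFlow IS implementation. Note that we do not split the dataset into ten folds to calculate IS ten times; we use the entire dataset to compute IS only once, which is the evaluation strategy used in the CompareGAN repository.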

CUDA_VISIBLE_DEVICES=0,...,N python3 src/inception_tf13.py --run_name RUN_NAME --type "fake"

Keep in mind that you need to have TensorFlow 1.3 or earlier installed!

Note that StudioGAN logs PyTorch-based IS during training.
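IS is defined as exp(E_x[KL(p(y|x) || p(y))]): it is high when each image receives a confident class prediction p(y|x) and the marginal p(y) over all images is spread across many classes. The sketch below computes it from a list of softmax rows in a single pass over all samples, matching the no-splits strategy described above. It does not run Inception-V3, and `inception_score` is a hypothetical helper.

```python
import math


def inception_score(probs, eps=1e-12):
    """IS from per-image class probabilities p(y|x), computed once over all rows."""
    n = len(probs)
    num_classes = len(probs[0])
    # Marginal class distribution p(y), averaged over all images.
    marginal = [sum(row[c] for row in probs) / n for c in range(num_classes)]
    total_kl = 0.0
    for row in probs:
        # KL(p(y|x) || p(y)); classes with p == 0 contribute nothing.
        total_kl += sum(p * (math.log(p) - math.log(marginal[c] + eps))
                        for c, p in enumerate(row) if p > 0)
    return math.exp(total_kl / n)


confident_and_diverse = [[1.0 if c == i else 0.0 for c in range(4)] for i in range(4)]
uniform = [[0.25] * 4 for _ in range(4)]
print(inception_score(confident_and_diverse))  # close to 4, the number of classes
print(inception_score(uniform))                # close to 1
```

The two toy inputs bracket the range: IS reaches the number of classes only when predictions are both confident and evenly spread, and drops to 1 when the classifier cannot tell images apart.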

Frechet Inception Distance (FID)

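FID is a widely used metric for evaluating the performance of a GAN model. Calculating FID requires the pre-trained Inception-V3 network, and modern approaches use TensorFlow-based FID. StudioGAN utilizes PyTorch-based FID to test GAN models in the same PyTorch environment. We show that the PyTorch-based FID implementation provides almost the same results as the TensorFlow implementation (see Appendix F of our paper).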
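For reference, FID fits a Gaussian to the Inception-V3 features of real (r) and generated (g) images, with means μ and covariances Σ, and measures the Fréchet distance between the two Gaussians; lower is better:

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```

The first term compares the average features, and the trace term compares their spread, so a generator can be penalized either for producing the wrong kind of images or for producing too little variety.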

Precision and Recall (PR: F_1/8 weights precision, F_8 weights recall)

Precision measures how accurately the generator can learn the target distribution. Recall measures how completely the generator covers the target distribution. Like IS and FID, calculating Precision and Recall requires the pre-trained Inception-V3 model. StudioGAN uses the same hyperparameter settings as the original Precision and Recall implementation, and StudioGAN calculates the F-beta score suggested by Sajjadi et al.
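The F-beta score combines precision P and recall R as F_β = (1 + β²)·P·R / (β²·P + R); β = 8 favors recall and β = 1/8 favors precision, which matches F_8 and F_1/8 above. Sajjadi et al. summarize a precision-recall curve by the maximum of each score along it. The sketch below shows that reduction on plain lists; the helper names are hypothetical, and computing the curve itself from Inception-V3 features is not shown.

```python
def f_beta(precision, recall, beta):
    """Weighted harmonic mean of precision and recall; beta > 1 favors recall."""
    if precision == 0.0 and recall == 0.0:
        return 0.0
    b2 = beta * beta
    return (1.0 + b2) * precision * recall / (b2 * precision + recall)


def max_f_beta_pair(precisions, recalls, beta=8.0):
    """Return (max F_beta, max F_{1/beta}) over matching points of a PR curve."""
    pairs = list(zip(precisions, recalls))
    f_recall = max(f_beta(p, r, beta) for p, r in pairs)
    f_precision = max(f_beta(p, r, 1.0 / beta) for p, r in pairs)
    return f_recall, f_precision


# High recall but low precision: F_8 is high, F_1/8 is low.
print(f_beta(0.2, 1.0, 8.0), f_beta(0.2, 1.0, 1.0 / 8.0))
```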

Benchmark

※ If you find any wrong implementations, bugs, or misreported scores, we welcome your contributions.

We report the best IS, FID, and F_beta values of various GANs. B.S. means the batch size for training.

(The detailed result tables are omitted here; see the original repository.)

CR, ICR, DiffAugment, ADA, and LO refer to regularization or optimization techniques: CR (Consistency Regularization), ICR (Improved Consistency Regularization), DiffAugment (Differentiable Augmentation), ADA (Adaptive Discriminator Augmentation), and LO (Latent Optimization), respectively.


CIFAR10 (3x32x32)

We used the command below for training. With a single TITAN RTX GPU, training BigGAN takes 13 to 15 hours.

CUDA_VISIBLE_DEVICES=0 python3 src/main.py -t -e -l -stat_otf -c CONFIG_PATH --eval_type "test"

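For evaluation, the statistics of the batch normalization layers are calculated on the fly (batch statistics). IS, FID, and F_beta values are computed using 10K test and 10K generated images.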

CUDA_VISIBLE_DEVICES=0 python3 src/main.py -e -l -stat_otf -c CONFIG_PATH --checkpoint_folder CHECKPOINT_FOLDER --eval_type "test"

Tiny ImageNet (3x64x64)

With 4 TITAN RTX GPUs, training BigGAN takes about 2 days.

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -t -e -l -stat_otf -c CONFIG_PATH --eval_type "valid"

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -e -l -stat_otf -c CONFIG_PATH --checkpoint_folder CHECKPOINT_FOLDER --eval_type "valid"

ImageNet (3x128x128)

With 8 TESLA V100 GPUs, training BigGAN2048 takes about a month.

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -t -e -l -sync_bn -stat_otf -c CONFIG_PATH --eval_type "valid"

For evaluation, the statistics of the batch normalization layers are pre-calculated (moving averages of the previous statistics).

IS, FID, and F_beta values are computed using 50K validation and 50K generated images.

CUDA_VISIBLE_DEVICES=0,...,N python3 src/main.py -e -l -sync_bn -c CONFIG_PATH --checkpoint_folder CHECKPOINT_FOLDER --eval_type "valid"
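The evaluation settings above differ in which batch normalization statistics are used: runs evaluated with -stat_otf, such as CIFAR10, normalize with the statistics of the current batch, while the ImageNet run normalizes with moving averages accumulated during training. The toy single-feature layer below makes that difference concrete; it is a hypothetical class, and it uses the biased batch variance for the running estimate, a simplification relative to real framework layers.

```python
import math


class TinyBatchNorm:
    """Single-feature batch norm showing batch vs. running (moving-average) statistics."""

    def __init__(self, momentum=0.1, eps=1e-5):
        self.momentum = momentum
        self.eps = eps
        self.running_mean = 0.0
        self.running_var = 1.0

    def __call__(self, batch, use_batch_stats):
        if use_batch_stats:
            mean = sum(batch) / len(batch)
            var = sum((x - mean) ** 2 for x in batch) / len(batch)
            # Fold this batch into the moving averages used by the other mode.
            m = self.momentum
            self.running_mean = (1 - m) * self.running_mean + m * mean
            self.running_var = (1 - m) * self.running_var + m * var
        else:
            # Pre-calculated statistics: ignore the current batch entirely.
            mean, var = self.running_mean, self.running_var
        return [(x - mean) / math.sqrt(var + self.eps) for x in batch]


bn = TinyBatchNorm(momentum=0.5)
print(bn([1.0, 3.0], use_batch_stats=True))   # about [-1, 1]
print(bn([1.0, 3.0], use_batch_stats=False))  # about [0, 2]
```

The same input is normalized differently in the two modes, which is why the reported scores depend on which statistics are used at evaluation time.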

StudioGAN thanks the following repos for sharing their code

Exponential Moving Average: https://github.com/ajbrock/BigGAN-PyTorch

Synchronized BatchNorm: https://github.com/vacancy/Synchronized-BatchNorm-PyTorch

Self-Attention module: https://github.com/voletiv/self-attention-GAN-pytorch

Implementation Details: https://github.com/ajbrock/BigGAN-PyTorch

Architecture Details: https://github.com/google/compare_gan

DiffAugment: https://github.com/mit-han-lab/data-efficient-gans

Adaptive Discriminator Augmentation: https://github.com/rosinality/stylegan2-pytorch

Tensorflow IS: https://github.com/openai/improved-gan

Tensorflow FID: https://github.com/bioinf-jku/TTUR

Pytorch FID: https://github.com/mseitzer/pytorch-fid

Tensorflow Precision and Recall: https://github.com/msmsajjadi/precision-recall-distributions

torchlars: https://github.com/kakaobrain/torchlars

Citation

StudioGAN is established for the following research project. Please cite our work if you use StudioGAN.

@inproceedings{kang2020ContraGAN,
  title     = {{ContraGAN: Contrastive Learning for Conditional Image Generation}},
  author    = {Minguk Kang and Jaesik Park},
  booktitle = {Conference on Neural Information Processing Systems (NeurIPS)},
  year      = {2020}
}

[1] Experiments on Tiny ImageNet are conducted using the ResNet architecture instead of CNN.

[2] Our re-implementation of ACGAN (ICML’17) with slight modifications, which brings a strong performance improvement in the CIFAR10 experiments.

[3] Our re-implementation of BigGAN/BigGAN-Deep (ICLR’19) with slight modifications, which brings a strong performance improvement in the CIFAR10 experiments.

[4] IS is computed using Tensorflow official code.