聚类(二)K-means算法优化

K-Means 算法问题

1.对于K个初始质心的选择比较敏感,容易陷入局部最小值

对于K个初始质心的选择比较敏感,容易陷入局部最小值。

例如在运行K-Means的程序中,每次运行结果可能都不一样,如下面的两种情况,K-Means也是收敛了,但只是收敛到了局部最小值

解决办法:

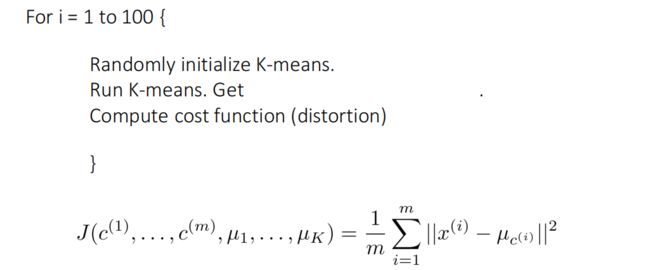

使用多次的随机初始化,计算每一次建模得到的代价函数的值,选取代价函数最小的结果最为聚类结果。

x表示样本,u表示x对应得簇得重心。

程序:

import numpy as np

import matplotlib.pyplot as plt

data=np.genfromtxt('kmeans.txt',delimiter=' ')#空格作为分隔符

print(data.shape)

print(data[:5])

plt.scatter(data[:,0],data[:,1])

plt.show()

(80, 2)

[[ 1.658985 4.285136]

[-3.453687 3.424321]

[ 4.838138 -1.151539]

[-5.379713 -3.362104]

[ 0.972564 2.924086]]

def CalDistance(x1,x2):

return np.sqrt(sum((x1-x2)**2))

def initCenter(data,k):

numSamples,dim=data.shape

center=np.zeros((k,dim))

for i in range(k):

index=int(np.random.uniform(0,numSamples))

center[i,:]=data[index,:]

return center

def kmeans(data,k):

numSamples=data.shape[0]

#样本的属性,第一列表示它属于哪个簇,第二列表示它与该簇的误差(到重心的距离)

resultData=np.array(np.zeros((numSamples,2)))

ischange=True#聚类是否发生了改变

center=initCenter(data,k)#初始化重心

while ischange:

ischange=False

for i in range(numSamples):

minDist=10000#先给点一个很大的最小距离

mindex=0#初始化应属于的簇

for j in range(k):

distance=CalDistance(data[i,:],center[j,:])

if(distance<minDist):

minDist=distance

resultData[i,1]=minDist

mindex=j

if(resultData[i,0]!=mindex):

ischange=True#样本的簇发生了改变

resultData[i,0]=mindex

for j in range(k):#更新重心坐标

cluster_index=np.nonzero(resultData[:,0]==j)

points=data[cluster_index]

#提取出j簇的所有样本点

center[j,:]=np.mean(points,axis=0)

return center,resultData

def showData(data,k,center,resultData):#显示结果

numSamples,dim=data.shape

if(dim!=2):

print('error')

return 1

mark=['or','ob','og','ok']#用不同颜色画出不同的类别

if(k>len(mark)):

print("your k is to large")

return 1

for i in range(numSamples):

markIndex=int(resultData[i,0])

plt.plot(data[i,0],data[i,1],mark[markIndex])

mark=['*r','*b','*g','*k']#用不同的样式画出不同的重心

for i in range(k):

plt.plot(center[i,0],center[i,1],mark[i],markersize=20)

plt.show()

k=4

min_loss=10000

min_loss_center=np.array([])

min_loss_result=np.array([])

for i in range(50):

#center为重心

#resultdata为样本属性,第一列为该样本得簇,第二列为对应的重心到样本点得距离

center,resultdata=kmeans(data,k)

loss=sum(resultdata[:,1])/data.shape[0]

if(loss<min_loss):

min_loss=loss

min_loss_center=center

min_loss_result=resultdata

center=min_loss_center

resultData=min_loss_result

center

array([[-3.53973889, -2.89384326],

[ 2.65077367, -2.79019029],

[ 2.6265299 , 3.10868015],

[-2.46154315, 2.78737555]])

def predict(datas):

return np.array([np.argmin(((np.tile(data,[k,1])-center)**2).sum(axis=1)) for data in datas])

#画出簇的作用域

#求出x,y的范围

x_min,x_max=data[:,0].min()-1,data[:,0].max()+1

y_min,y_max=data[:,1].min()-1,data[:,1].max()+1

#生成网格矩阵

xx,yy=np.meshgrid(np.arange(x_min,x_max,0.02),

np.arange(y_min,y_max,0.02))

z=predict(np.c_[xx.ravel(),yy.ravel()])

z=z.reshape(xx.shape)#预测z

cs=plt.contourf(xx,yy,z)

showData(data,k,center,resultData)

2.K值选取是由用户决定的,不同k值得到的结果会有很大的不同

解决办法:肘部法则

若图像中没有肘部点,需要自己根据需要来设置

程序:

import numpy as np

import matplotlib.pyplot as plt

data=np.genfromtxt('kmeans.txt',delimiter=' ')#空格作为分隔符

print(data.shape)

print(data[:5])

plt.scatter(data[:,0],data[:,1])

plt.show()

(80, 2)

[[ 1.658985 4.285136]

[-3.453687 3.424321]

[ 4.838138 -1.151539]

[-5.379713 -3.362104]

[ 0.972564 2.924086]]

def calDistence(x1,x2):#计算两个点之间的距离

return np.sqrt(sum((x1-x2)**2))

def initCenter(data,k):

numbSamples,dim=data.shape

#获取样本的行数和列数

center=np.zeros((k,dim))

#生成k个重心

for i in range(k):

index=int(np.random.uniform(0,numbSamples))

#随机选择一个索引

center[i,:]=data[index,:]#初始化重心

return center

def kmeans(data,k):

numSample=data.shape[0]#样本个数

#样本的属性,第一列表示它属于哪个簇,第二列表示它与该簇的误差(到重心的距离)

resultData=np.array(np.zeros((numSample,2)))

ischange=True#聚类是否发生了改变

center=initCenter(data,k)#初始化重心

while(ischange):

ischange=False

#对每个样本进行循环,计算器属于那个类别

for i in range(numSample):

minDist=10000#先给点一个很大的最小距离

mindex=0#初始化应属于的簇

for j in range(k):

distance=calDistence(data[i,:],center[j,:])

#计算样本点到每个重心的距离

if(distance<minDist):

minDist=distance#计算出的距离小于最小距离

resultData[i,1]=minDist#更新最小距离

mindex=j#记录下簇

if(resultData[i,0]!=mindex):

ischange=True#样本的簇发生了改变

resultData[i,0]=mindex

for j in range(k):#更新重心坐标

cluster_index=np.nonzero(resultData[:,0]==j)

points=data[cluster_index]

#提取出j簇的所有样本点

center[j,:]=np.mean(points,axis=0)

return center,resultData

def showData(data,k,center,resultData):#显示结果

numSamples,dim=data.shape

if(dim!=2):

print('error')

return 1

mark=['or','ob','og','ok']#用不同颜色画出不同的类别

if(k>len(mark)):

print("your k is to large")

return 1

for i in range(numSamples):

markIndex=int(resultData[i,0])

plt.plot(data[i,0],data[i,1],mark[markIndex])

mark=['*r','*b','*g','*k']#用不同的样式画出不同的重心

for i in range(k):

plt.plot(center[i,0],center[i,1],mark[i],markersize=20)

plt.show()

list_lost=[]

for k in range(2,10):

min_loss=10000

min_loss_center=np.array([])

min_loss_result=np.array([])

for i in range(50):

#center为重心

#resultdata为样本属性,第一列为该样本得簇,第二列为对应的重心到样本点得距离

center,resultdata=kmeans(data,k)

loss=sum(resultdata[:,1])/data.shape[0]

if(loss<min_loss):

min_loss=loss

min_loss_center=center

min_loss_result=resultdata

list_lost.append(min_loss)

# center=min_loss_center

# resultData=min_loss_result

# center

list_lost

[2.9811811738953176,

1.9708559728104191,

1.1675654672086735,

1.0712368269135584,

1.0033240887599812,

0.9449655969333923,

0.8844890541976118,

0.8328655913591613]

plt.plot(range(2,10),list_lost)

plt.xlabel('k')

plt.ylabel('loss')

plt.show()#有图像可以看出 k=4时为肘部点

3.存在局限性

4.数据比较大的时候,收敛会比较慢

解决办法:mini batch k-means