[Mixed Pooling] "Mixed Pooling for Convolutional Neural Networks"

RSKT-2014

International Conference on Rough Sets and Knowledge Technology

Contents

- 1 Background and Motivation

- 2 Review of Convolutional Neural Networks

- 3 Advantages / Contributions

- 4 Method

- 5 Experiments

- 5.1 Datasets

- 5.2 Experimental Results

- 6 Conclusion(own) / Future work

1 Background and Motivation

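What pooling layers are for (from the article "一文看尽深度学习中的9种池化方法!", roughly "Nine pooling methods in deep learning at a glance"):

- Enlarge the network's receptive field

- Suppress noise and reduce information redundancy

- Reduce the model's computation, make the network easier to optimize, and help prevent overfitting

- Make the model more robust to changes in feature position in the input image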

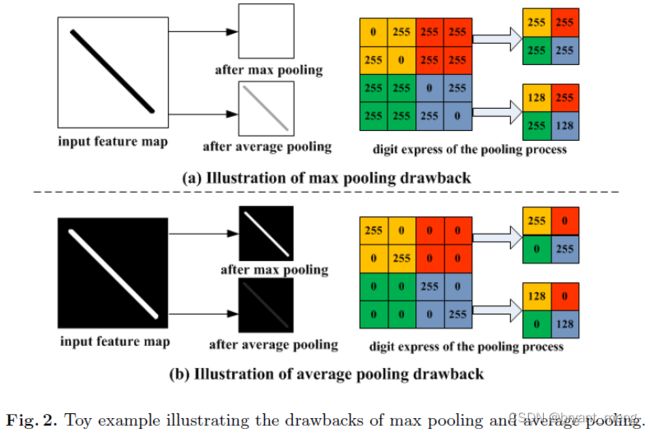

To address the shortcomings of max and average pooling, the authors propose mixed pooling, which "randomly employs the local max pooling and average pooling methods when training CNNs".

2 Review of Convolutional Neural Networks

- Convolutional Layer, which comprises the convolution operation and an activation function

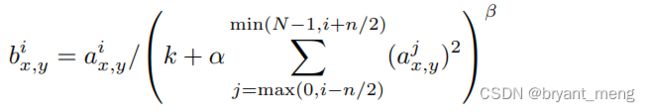

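- Non-linear Transformation Layer, i.e. the normalization layer. BN is the popular choice today; earlier options were LCN (local contrast normalization) and AlexNet's LRN (local response normalization). PS: the LCN formula in the paper looks wrong to me, and the LRN details also differ from the original paper, though the form is basically the same.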

- Feature Pooling Layer

3 Advantages / Contributions

Borrowing the idea of dropout, the paper mixes max and average pooling and proposes mixed pooling.

4 Method

$\lambda$ is a random value being either 0 or 1
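
To make the role of $\lambda$ concrete, here is a minimal NumPy sketch of the forward pass. As I understand the paper's definition, the output is $y_{kij} = \lambda \cdot \max_{(p,q) \in R_{ij}} x_{kpq} + (1-\lambda) \cdot \frac{1}{|R_{ij}|} \sum_{(p,q) \in R_{ij}} x_{kpq}$, where $R_{ij}$ is the pooling window. The function name `mixed_pool_forward`, the non-overlapping windows, and drawing a single $\lambda$ per call are my assumptions for illustration, not something these notes confirm.

```python
import numpy as np

def mixed_pool_forward(x, size=2, rng=None):
    """Mixed pooling over non-overlapping size x size windows.

    x has shape (K, H, W); H and W are assumed divisible by `size`.
    Returns the pooled maps and the lambda that was drawn.
    """
    rng = np.random.default_rng() if rng is None else rng
    K, H, W = x.shape
    hs, ws = H // size, W // size
    # View each map as a grid of windows: (K, hs, ws, size * size).
    windows = (x.reshape(K, hs, size, ws, size)
                .transpose(0, 1, 3, 2, 4)
                .reshape(K, hs, ws, size * size))
    # Assumption: one lambda per call; 1 selects max pooling, 0 average pooling.
    lam = int(rng.integers(0, 2))
    y = lam * windows.max(axis=-1) + (1 - lam) * windows.mean(axis=-1)
    return y, lam

x = np.arange(32, dtype=np.float64).reshape(2, 4, 4)
y, lam = mixed_pool_forward(x, rng=np.random.default_rng(0))
print("lambda =", lam)
print(y)
```

Returning `lam` alongside the output matters because the backward pass needs to know which branch was taken.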

2) Backpropagation through mixed pooling

First, look at backpropagation through max and average pooling.
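
The two gradients can be written out as follows. This is the standard textbook form rather than a copy of the paper's equations; $R_{ij}$ denotes the pooling window that produces the output $y_{kij}$, $L$ is the loss, and non-overlapping windows are assumed.

```latex
% Max pooling: the gradient flows only to the position that attained the maximum.
\frac{\partial L}{\partial x_{kpq}} =
\begin{cases}
\dfrac{\partial L}{\partial y_{kij}}, & (p,q) = \arg\max_{(p',q') \in R_{ij}} x_{kp'q'} \\
0, & \text{otherwise}
\end{cases}

% Average pooling: the gradient is shared equally by every position in the window.
\frac{\partial L}{\partial x_{kpq}} = \frac{1}{|R_{ij}|} \, \frac{\partial L}{\partial y_{kij}},
\qquad (p,q) \in R_{ij}
```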

Mixed pooling

The value of $\lambda$ has to be recorded, otherwise backpropagation cannot be done correctly:

"the pooling history about the random value $\lambda$ in Eq. must be recorded during forward propagation."
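
A rough sketch of what "recording $\lambda$" buys you in the backward pass: the stored value selects between the max-pooling gradient (a one-hot mask at the arg-max) and the average-pooling gradient (an equal share per position). `mixed_pool_backward` is a hypothetical helper of mine, and it assumes non-overlapping windows and one $\lambda$ per pooling call.

```python
import numpy as np

def mixed_pool_backward(x, grad_y, lam, size=2):
    """Gradient of mixed pooling w.r.t. x, given the lambda recorded in the forward pass.

    x: (K, H, W) input; grad_y: (K, H/size, W/size); lam: 1 = max, 0 = average.
    """
    K, H, W = x.shape
    hs, ws = H // size, W // size
    windows = (x.reshape(K, hs, size, ws, size)
                .transpose(0, 1, 3, 2, 4)
                .reshape(K, hs, ws, size * size))
    # Max path: one-hot mask at the arg-max of each window.
    max_mask = np.zeros_like(windows)
    idx = windows.argmax(axis=-1)
    np.put_along_axis(max_mask, idx[..., None], 1.0, axis=-1)
    # Average path: every position receives an equal share.
    avg_mask = np.full_like(windows, 1.0 / (size * size))
    grad_windows = (lam * max_mask + (1 - lam) * avg_mask) * grad_y[..., None]
    # Undo the window view to get back to (K, H, W).
    return (grad_windows.reshape(K, hs, ws, size, size)
                        .transpose(0, 1, 3, 2, 4)
                        .reshape(K, H, W))

x = np.arange(16, dtype=np.float64).reshape(1, 4, 4)
grad_y = np.ones((1, 2, 2))
grad_max = mixed_pool_backward(x, grad_y, lam=1)  # forward pass used max pooling
grad_avg = mixed_pool_backward(x, grad_y, lam=0)  # forward pass used average pooling
print(grad_max[0])
print(grad_avg[0])
```

With the wrong $\lambda$ the gradient would be routed as if the other pooling type had been used, which is why the history must be kept.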

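3) Pooling at Test Time

For each pooling operation, count how often it used max versus average pooling during training, $F_{max}^{k}$ and $F_{ave}^{k}$. At test time, that pooling uses whichever of the two was chosen more often. This is where it starts to feel a bit like black magic, hahaha.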
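
The counting rule is simple enough to sketch. `PoolingHistory` is a hypothetical helper of mine: each index k stands for one pooling site (for example one pooling layer), and breaking ties in favour of max pooling is my own arbitrary choice.

```python
import numpy as np

class PoolingHistory:
    """Counts how often each pooling site used max vs. average pooling in training."""

    def __init__(self, num_sites):
        self.f_max = np.zeros(num_sites, dtype=np.int64)
        self.f_ave = np.zeros(num_sites, dtype=np.int64)

    def record(self, lam):
        # lam[k] == 1 means site k used max pooling in this training iteration.
        lam = np.asarray(lam)
        self.f_max += (lam == 1)
        self.f_ave += (lam == 0)

    def test_time_lambda(self):
        # Pick the more frequent choice; ties go to max pooling (an assumption).
        return (self.f_max >= self.f_ave).astype(np.int64)

history = PoolingHistory(num_sites=3)
for lam in ([1, 0, 1], [1, 0, 0], [0, 0, 1]):
    history.record(lam)
print(history.f_max, history.f_ave)
print(history.test_time_lambda())
```

In this toy run sites 0 and 2 used max pooling twice out of three iterations, so they become max pooling at test time, while site 1 becomes average pooling.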

5 Experiments

5.1 Datasets

- CIFAR-10

- CIFAR-100

- SVHN

5.2 Experimental Results

1) CIFAR-10

With mixed pooling the training error is higher, yet the accuracy is also higher.

The authors' explanation: "This indicates that the proposed mixed pooling outperforms max pooling and average pooling to address the over-fitting problem."

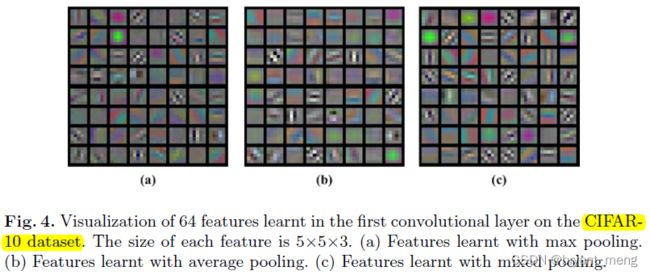

Visualization results

The visualization shows that mixed pooling retains more information.

2) CIFAR-100

3) SVHN

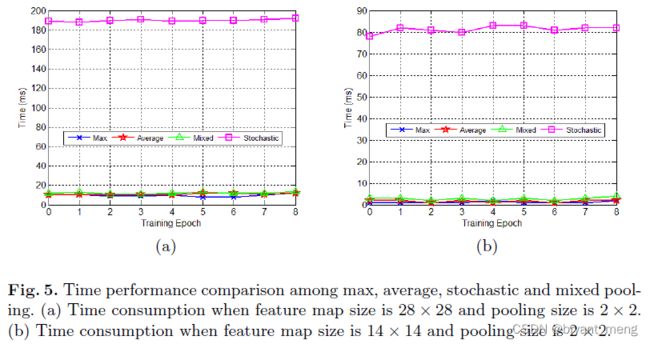

4) Time Performance

6 Conclusion(own) / Future work

LRN

$k, n, \alpha, \beta$ are hyperparameters; $a, b$ are the input and output feature maps; $x, y$ is the spatial position; and $i$ is the channel index.
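
For reference, these symbols come from the LRN definition in the AlexNet paper, which (from memory, so check it against the original) reads as follows. $N$ is the total number of channels in the layer, a symbol not listed above.

```latex
b^{i}_{x,y} = \frac{a^{i}_{x,y}}
{\left( k + \alpha \sum_{j=\max(0,\; i - n/2)}^{\min(N-1,\; i + n/2)} \left( a^{j}_{x,y} \right)^{2} \right)^{\beta}}
```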

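The following is taken from the article "深度学习的局部响应归一化LRN(Local Response Normalization)理解" (roughly "Understanding Local Response Normalization (LRN) in deep learning").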

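```python
import numpy as np
import tensorflow as tf

# tf.nn.lrn requires a floating-point input, so build the 1..32 ramp as float32.
# Shape is [batch, height, width, channels] = [2, 2, 2, 4].
x = np.arange(1, 33, dtype=np.float32).reshape([2, 2, 2, 4])

# Normalize each value by the sum of squares over channels within depth_radius.
y = tf.nn.lrn(input=x, depth_radius=2, bias=0, alpha=1, beta=1)

print(x)
print('#############')
# Assumes TensorFlow 2.x eager execution; under TF 1.x, evaluate y in a tf.Session.
print(y.numpy())
```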
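
To see what `tf.nn.lrn` computes without installing TensorFlow, here is a plain NumPy sketch of the same rule as I read it from the TensorFlow documentation: each value is divided by `(bias + alpha * sqr_sum) ** beta`, where `sqr_sum` is the sum of squares over the channels within `depth_radius` of the current one. `lrn_numpy` is my own helper name, and the channels-last layout is assumed.

```python
import numpy as np

def lrn_numpy(x, depth_radius=2, bias=0.0, alpha=1.0, beta=1.0):
    """Local response normalization over the last (channel) axis of an NHWC array."""
    x = np.asarray(x, dtype=np.float64)
    channels = x.shape[-1]
    out = np.empty_like(x)
    for d in range(channels):
        # Channel window [d - depth_radius, d + depth_radius], clipped at the edges.
        lo = max(0, d - depth_radius)
        hi = min(channels, d + depth_radius + 1)
        sqr_sum = np.sum(x[..., lo:hi] ** 2, axis=-1)
        out[..., d] = x[..., d] / (bias + alpha * sqr_sum) ** beta
    return out

x = np.arange(1, 33).reshape(2, 2, 2, 4)
y = lrn_numpy(x)
print(y[0, 0, 0])
```

For the first pixel the channel values are 1, 2, 3, 4, so channel 0 becomes 1 / (1 + 4 + 9) = 1/14, and channel 3 becomes 4 / (4 + 9 + 16) = 4/29.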

LCN

"What is the best multi-stage architecture for object recognition?"