Whisper, the speech recognition model said to have reached human-level accuracy: is it really that good?

Hi, long time no see. I haven't written anything for a while, so today I'll give a quick introduction to the speech recognition model Whisper.

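Whisper is an open-source speech recognition and translation model that OpenAI released in September 2022. Its robustness and accuracy on English speech are very high, it supports 99 languages, and it is quick and simple to install and use. Below I walk through installing Whisper and some basic usage. I ran into a few problems along the way, and I include the fixes in the hope that they are useful to you.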

-

Environment:

Windows, Python 3.8

-

Installation:

1. Installing the whisper library

pip install git+https://github.com/openai/whisper.git

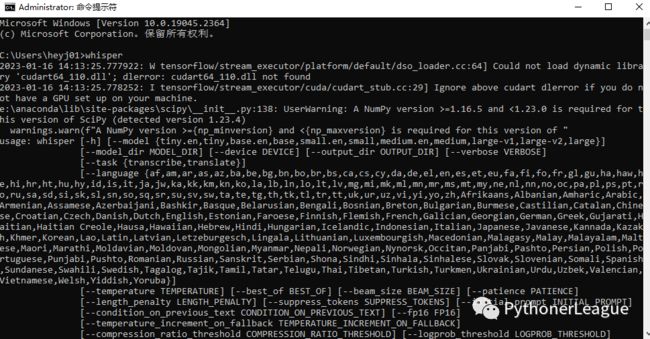

Once the command finishes, run whisper in a cmd window. Output like the following means the installation succeeded:

2. Installing ffmpeg

Whisper uses the ffmpeg tool to extract the audio data, so ffmpeg has to be downloaded and installed. Download page:

http://ffmpeg.org/download.html#build-windows

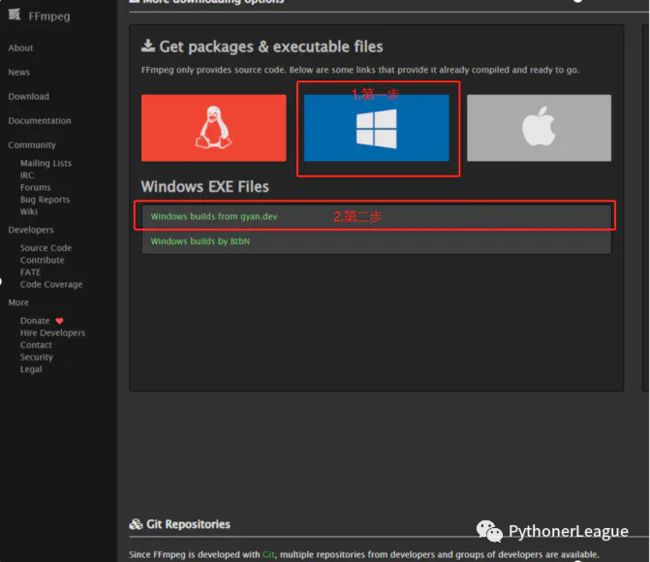

On the download page, click through in the order shown in the figure below:

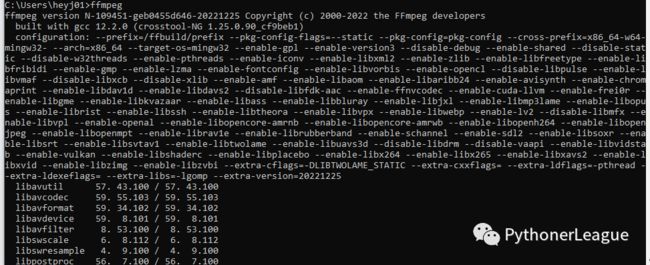

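Steps 1 and 2 in the figure above download the ffmpeg archive. Extract it anywhere on your computer and add its bin folder to the PATH environment variable. In my case the folder I added was C:\Users\heyj01\Desktop\ffmpeg-master-latest-win64-gpl-shared\bin. Then type ffmpeg in a cmd window; output like the following means it worked: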
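If you would rather confirm the PATH change from Python than by eye, the standard library can report where it finds the executable. This is only a minimal sketch: `find_tool` is a hypothetical helper name of my own, not part of Whisper or ffmpeg.

```python
import shutil
from typing import Optional


def find_tool(name: str = "ffmpeg") -> Optional[str]:
    """Return the full path of an executable found on PATH, or None if it is absent."""
    return shutil.which(name)


if __name__ == "__main__":
    path = find_tool("ffmpeg")
    if path is None:
        # A cmd window opened before PATH was edited will not see the change.
        print("ffmpeg not found: check that its bin folder is on PATH, then reopen cmd")
    else:
        print(f"ffmpeg found at {path}")
```

A result of None usually means the bin folder is missing from PATH or the terminal was opened before the variable was changed.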

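3. Other Python dependencies

Whisper also depends on PyTorch, transformers, and a few other libraries. If any are missing, the error you get when you first run a Whisper transcription names the module, and you can install each one with pip install followed by the module name.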
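Instead of waiting for an import error at run time, you can check up front which modules are importable. The sketch below uses only the standard library. The module list is an assumption taken from this article (the exact dependency set may differ between Whisper versions), and `missing_modules` is a hypothetical helper name. Note that the import name can differ from the name you pass to pip: PyTorch, for example, is imported as `torch`.

```python
import importlib.util
from typing import Iterable, List


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumed list, based on the libraries mentioned in this article.
    wanted = ["whisper", "torch", "transformers"]
    missing = missing_modules(wanted)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All listed modules are importable")
```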

-

Usage:

Using Whisper is very simple:

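# Import the whisper module

import whisper

# Load the large model

model = whisper.load_model("large")

# Transcribe the speech in the video, with Chinese as the output language

result = model.transcribe("test.mp4", language='Chinese')

# Whisper works on a 30-second window by default: the audio is cut into 30-second slices, and the result is a list of short segments (roughly 2 seconds each)

for i in result["segments"]:
    print(i['text'])

First, model size. Whisper comes in several sizes (tiny, base, small, medium, and large), and they differ in memory use, compute time, and quality. A smaller model runs faster, but its results get worse when the output language differs from the language spoken in the video. A larger model is slower and needs more memory, but gives better results.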
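Coming back to the loop over `result["segments"]`: each segment is a dictionary that carries `start` and `end` times in seconds alongside `text`. The sketch below shows one way to print each segment with its time range. The helper names are my own, and the `demo` list is a hand-written stand-in for real model output, so the block runs without loading a model.

```python
from typing import Dict, Iterable, List


def format_timestamp(seconds: float) -> str:
    """Format a time in seconds as HH:MM:SS,mmm (the style used by .srt subtitles)."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_lines(segments: Iterable[Dict]) -> List[str]:
    """Turn segment dictionaries into '[start --> end] text' lines."""
    lines = []
    for seg in segments:
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        lines.append(f"[{start} --> {end}] {seg['text'].strip()}")
    return lines


# Hand-written stand-in for result["segments"]; not real Whisper output.
demo = [
    {"start": 0.0, "end": 2.0, "text": " hello"},
    {"start": 2.0, "end": 3.5, "text": " world"},
]

for line in segments_to_lines(demo):
    print(line)
# [00:00:00,000 --> 00:00:02,000] hello
# [00:00:02,000 --> 00:00:03,500] world
```

With a real transcription you would pass `result["segments"]` in place of `demo`.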

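The load_model function takes two more parameters worth knowing: device and download_root.

device is the compute device: cpu or cuda (that is, the GPU). If you leave it out, Whisper uses cuda when PyTorch can see a CUDA GPU and falls back to cpu otherwise. With a graphics card whose memory is large enough for the chosen model it runs normally; otherwise you get an out-of-memory error.

download_root is the folder where model files are saved and loaded. If you leave it out, it defaults to a cache folder under the current user's home directory, in my case C:\Users\heyj01\.cache\whisper. The first time a model is loaded and is not found there, it is downloaded into download_root.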
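To make the two parameters concrete, here is a small sketch that passes both explicitly. `default_download_root` mirrors the default cache location as I understand it from the Whisper source (`~/.cache/whisper`, or under `XDG_CACHE_HOME` when that variable is set), so treat it as an assumption and not a guaranteed contract. Both function names are my own. whisper and torch are imported inside the function so the file still runs on a machine where they are not installed.

```python
import os
from pathlib import Path
from typing import Optional


def default_download_root() -> Path:
    """Best-guess mirror of Whisper's default model folder (an assumption, see above)."""
    cache_home = os.getenv("XDG_CACHE_HOME", os.path.join(os.path.expanduser("~"), ".cache"))
    return Path(cache_home) / "whisper"


def load_whisper(name: str = "base",
                 device: Optional[str] = None,
                 download_root: Optional[str] = None):
    """Load a Whisper model with an explicit device and model folder."""
    # Imported lazily: both packages are heavy and may not be installed.
    import torch
    import whisper

    if device is None:
        # Same fallback order as described above: GPU if available, else CPU.
        device = "cuda" if torch.cuda.is_available() else "cpu"
    if download_root is None:
        download_root = str(default_download_root())
    return whisper.load_model(name, device=device, download_root=download_root)


print(default_download_root())

# Example call (downloads the model on first use):
# model = load_whisper("base", device="cpu")
```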

The language parameter of transcribe currently supports 99 languages, listed here as code and name:

"en": "english","zh": "chinese","de": "german","es": "spanish","ru": "russian","ko": "korean","fr": "french","ja": "japanese","pt": "portuguese","tr": "turkish","pl": "polish","ca": "catalan","nl": "dutch","ar": "arabic","sv": "swedish","it": "italian","id": "indonesian","hi": "hindi","fi": "finnish","vi": "vietnamese","he": "hebrew","uk": "ukrainian","el": "greek","ms": "malay","cs": "czech","ro": "romanian","da": "danish","hu": "hungarian","ta": "tamil","no": "norwegian","th": "thai","ur": "urdu","hr": "croatian","bg": "bulgarian","lt": "lithuanian","la": "latin","mi": "maori","ml": "malayalam","cy": "welsh","sk": "slovak","te": "telugu","fa": "persian","lv": "latvian","bn": "bengali","sr": "serbian","az": "azerbaijani","sl": "slovenian","kn": "kannada","et": "estonian","mk": "macedonian","br": "breton","eu": "basque","is": "icelandic","hy": "armenian","ne": "nepali","mn": "mongolian","bs": "bosnian","kk": "kazakh","sq": "albanian","sw": "swahili","gl": "galician","mr": "marathi","pa": "punjabi","si": "sinhala","km": "khmer","sn": "shona","yo": "yoruba","so": "somali","af": "afrikaans","oc": "occitan","ka": "georgian","be": "belarusian","tg": "tajik","sd": "sindhi","gu": "gujarati","am": "amharic","yi": "yiddish","lo": "lao","uz": "uzbek","fo": "faroese","ht": "haitian creole","ps": "pashto","tk": "turkmen","nn": "nynorsk","mt": "maltese","sa": "sanskrit","lb": "luxembourgish","my": "myanmar","bo": "tibetan","tl": "tagalog","mg": "malagasy","as": "assamese","tt": "tatar","haw": "hawaiian","ln": "lingala","ha": "hausa","ba": "bashkir","jw": "javanese","su": "sundanese",

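The calls in this article pass the language both as a full name ('Chinese') and as a code ('zh'). The sketch below illustrates how a table like the one above can map either form to a code. It uses only a five-entry excerpt of the table, and `normalize_language` is a hypothetical helper of my own; Whisper does its own lookup internally, so you do not need this to call it.

```python
# Excerpt of the code -> name table above; the full table has 99 entries.
LANGUAGES = {
    "en": "english",
    "zh": "chinese",
    "de": "german",
    "ja": "japanese",
    "fr": "french",
}

# Reverse lookup: name -> code.
NAME_TO_CODE = {name: code for code, name in LANGUAGES.items()}


def normalize_language(value: str) -> str:
    """Accept a language code or name in any letter case and return the code."""
    key = value.strip().lower()
    if key in LANGUAGES:
        return key
    if key in NAME_TO_CODE:
        return NAME_TO_CODE[key]
    raise ValueError(f"Unsupported language: {value!r}")


print(normalize_language("Chinese"))  # zh
print(normalize_language("zh"))       # zh
```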
The official README also shows a second, lower-level way to call the model:

import whisper

model = whisper.load_model("base")

# load audio and pad/trim it to fit 30 seconds

audio = whisper.load_audio("audio.mp3")

audio = whisper.pad_or_trim(audio)

# make log-Mel spectrogram and move to the same device as the model

mel = whisper.log_mel_spectrogram(audio).to(model.device)

# detect the spoken language

_, probs = model.detect_language(mel)

print(f"Detected language: {max(probs, key=probs.get)}")

# decode the audio

options = whisper.DecodingOptions(language='Chinese')

result = whisper.decode(model, mel, options)

# print the recognized text

print(result.text)

This approach raised an error on my machine because it has no GPU, so I changed this line:

options = whisper.DecodingOptions(language='Chinese')

to:

options = whisper.DecodingOptions(language='Chinese', fp16=False)

because fp16 is not supported on the CPU.
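To avoid editing that line by hand every time you switch machines, the fp16 flag can be derived from the device the model is on. This is a minimal sketch: `decoding_kwargs` is a hypothetical helper of my own that only builds the keyword arguments, so it runs without Whisper installed.

```python
def decoding_kwargs(device_type: str, language: str = "Chinese") -> dict:
    """Build keyword arguments for whisper.DecodingOptions: fp16 only on a CUDA device."""
    return {"language": language, "fp16": device_type == "cuda"}


print(decoding_kwargs("cpu"))   # {'language': 'Chinese', 'fp16': False}
print(decoding_kwargs("cuda"))  # {'language': 'Chinese', 'fp16': True}

# With a loaded model (model.device is a torch.device, so .type is "cpu" or "cuda"):
# options = whisper.DecodingOptions(**decoding_kwargs(model.device.type))
# result = whisper.decode(model, mel, options)
```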

-

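Summary

In my tests, Whisper's recognition of English is very good. For some less common languages you need a large model to get good recognition and translation, but it is still much stronger than some other recognition and translation models. It is also open source, so I expect it to keep improving. Finally, here is Whisper's GitHub address: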

https://github.com/openai/whisper

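That wraps up installing and using Whisper. I hope you can use this open-source model to build some interesting tools. In my next article I will use whisper + PyQt5 to build a tool that recognizes and translates speech into subtitles, adds subtitles to videos automatically, and generates subtitles from live microphone input. Stay tuned if that interests you.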