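CIFAR-10 image classification from Kaggle with PyTorch (runs on GPU or CPU)

The main code comes from Dive into Deep Learning (动手学深度学习).

The code has two parts. The first is the main script, kaggle_CIFAR-10.py. The second, d2l.py, holds the class and function definitions. It is long because many of its functions are not used here, but they all come from the book and are handy: most of the book's helpers are included, so if you are studying from the book you can take the file as-is.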

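Running the code downloads the dataset automatically, but it is only a 1,000-image sample (the full training set has 50,000 images), so the trained model looks pretty poor. If you want the full dataset, contact me and I will upload it to CSDN for free.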
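The small sample also means a small validation set. The main script holds out the same number of images from every class, computed from the size of the rarest class and `valid_ratio`. Below is a minimal sketch of that rule using made-up labels; `valid_per_label` and `toy_labels` are hypothetical names used only for illustration and do not appear in the original code.

```python
import collections
import math


def valid_per_label(labels, valid_ratio):
    """Number of validation images held out from each class."""
    # Size of the rarest class: most_common() sorts from most to least
    # frequent, so the last entry holds the smallest count.
    n = collections.Counter(labels.values()).most_common()[-1][1]
    # Always keep at least one validation image per class.
    return max(1, math.floor(n * valid_ratio))


# Made-up labels: 30 birds and 20 cats (not the real CIFAR-10 data).
toy_labels = {str(i): "bird" for i in range(30)}
toy_labels.update({str(i): "cat" for i in range(30, 50)})

print(valid_per_label(toy_labels, valid_ratio=0.1))
```

Because the count is derived from the rarest class, every class contributes the same number of validation images even when the classes are unbalanced.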

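The code carries plenty of comments to make it easier to follow. The prediction step is not implemented; if you are interested, feel free to message me.

The network is ResNet-18 (a residual network), the loss is cross-entropy, and the optimizer is stochastic gradient descent (SGD).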
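The training code pairs SGD with a step learning-rate schedule (`StepLR`, called with `lr=2e-4`, `lr_period=4` and `lr_decay=0.9` over 20 epochs). As a rough illustration of what that schedule does, here is a minimal pure-Python sketch. `step_lr` is a hypothetical helper, not part of d2l or PyTorch, and it assumes the scheduler is stepped once at the end of every epoch, as the `train` function does.

```python
def step_lr(base_lr, epoch, period, decay):
    """Learning rate in effect during the 0-based `epoch` under step decay."""
    # The rate is multiplied by `decay` once every `period` epochs.
    return base_lr * decay ** (epoch // period)


# Hyperparameters taken from the main script.
base_lr, period, decay, num_epochs = 2e-4, 4, 0.9, 20

for first in range(0, num_epochs, period):
    lr = step_lr(base_lr, first, period, decay)
    print(f"epochs {first + 1}-{first + period}: lr = {lr:.3e}")
```

With these settings the learning rate only drops four times in 20 epochs, so it stays close to its initial value throughout training.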

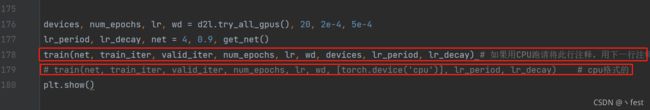

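There are both CPU and GPU versions because my PyTorch and CUDA versions did not match at the time, which made the GPU unusable, so I switched the code to run on the CPU. The change is simple, and only two lines of the main script are involved: the `nn.DataParallel` line inside `train` and the final `train(...)` call, both marked with comments.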
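As an alternative to editing those lines by hand, the choice could be made at run time. The following is only a sketch under stated assumptions: `pick_devices` and `place_model` are hypothetical helpers that are not part of the original code, and the `nn.Linear` layer is a stand-in for the real network.

```python
import torch
from torch import nn


def pick_devices():
    """Return every visible CUDA device, or the CPU as a fallback."""
    count = torch.cuda.device_count()
    if count > 0:
        return [torch.device(f"cuda:{i}") for i in range(count)]
    return [torch.device("cpu")]


def place_model(net, devices):
    """Wrap the model in DataParallel only when running on CUDA devices."""
    if devices[0].type == "cuda":
        return nn.DataParallel(net, device_ids=devices).to(devices[0])
    return net.to(devices[0])


devices = pick_devices()
net = place_model(nn.Linear(4, 2), devices)  # stand-in model, not ResNet-18
print(devices, next(net.parameters()).device)
```

With helpers like these, the same script could run on either kind of machine without commenting lines in and out.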

Part 1: main script kaggle_CIFAR-10.py

import collections
import math
import os
import shutil
import pandas as pd
import torch
import torchvision
from torch import nn
import d2l
from d2l import *

d2l.DATA_HUB['cifar10_tiny'] = (d2l.DATA_URL + 'kaggle_cifar10_tiny.zip',
                                '2068874e4b9a9f0fb07ebe0ad2b29754449ccacd')

# If you use the full Kaggle competition dataset, set `demo` to False.
# The demo sample has only 1,000 images, so the trained model looks pretty poor.
# The full dataset has to be downloaded manually from https://www.kaggle.com/c/cifar-10
demo = True

if demo:
    data_dir = d2l.download_extract('cifar10_tiny')
else:
    data_dir = '../data/cifar-10/'

def read_csv_labels(fname):
    """Read `fname` and return a dictionary mapping file names to labels."""
    with open(fname, 'r') as f:
        # Skip the header line (column names)
        lines = f.readlines()[1:]
    # rstrip() strips trailing characters; by default it removes whitespace
    # (spaces, newlines, carriage returns and tabs)
    tokens = [l.rstrip().split(',') for l in lines]
    return dict(((name, label) for name, label in tokens))

# `labels` is a dictionary: the key is the image id and the value is the class, e.g. '1': 'bird'
labels = read_csv_labels(os.path.join(data_dir, 'trainLabels.csv'))
# print('# training examples:', len(labels))
# print('# classes:', len(set(labels.values())))

def copyfile(filename, target_dir):
    """Copy a file into the target directory."""
    os.makedirs(target_dir, exist_ok=True)
    shutil.copy(filename, target_dir)

# Split the validation set out of the original training set
def reorg_train_valid(data_dir, labels, valid_ratio):
    # valid_ratio: ratio of validation examples to examples in the original training set
    # Number of examples in the class with the fewest examples.
    # most_common(n) takes an optional n (return only the n most common elements);
    # without it, all results are returned as a list of (element, count) tuples
    # sorted from most to least common, e.g. [('c', 3), ('b', 2), ('a', 1)].
    # Elements with equal counts keep the order in which they were first seen.
    n = collections.Counter(labels.values()).most_common()[-1][1]
    # Number of validation examples per class
    n_valid_per_label = max(1, math.floor(n * valid_ratio))
    label_count = {}
    for train_file in os.listdir(os.path.join(data_dir, 'train')):
        label = labels[train_file.split('.')[0]]
        fname = os.path.join(data_dir, 'train', train_file)
        copyfile(fname, os.path.join(data_dir, 'train_valid_test',
                                     'train_valid', label))
        if label not in label_count or label_count[label] < n_valid_per_label:
            copyfile(fname, os.path.join(data_dir, 'train_valid_test',
                                         'valid', label))
            label_count[label] = label_count.get(label, 0) + 1
        else:
            copyfile(fname, os.path.join(data_dir, 'train_valid_test',
                                         'train', label))
    return n_valid_per_label

def reorg_test(data_dir):
    # Organize the test set so it is easy to read during prediction
    for test_file in os.listdir(os.path.join(data_dir, 'test')):
        copyfile(os.path.join(data_dir, 'test', test_file),
                 os.path.join(data_dir, 'train_valid_test', 'test',
                              'unknown'))

def reorg_cifar10_data(data_dir, valid_ratio):
    # Call read_csv_labels, reorg_train_valid and reorg_test defined above
    labels = read_csv_labels(os.path.join(data_dir, 'trainLabels.csv'))
    reorg_train_valid(data_dir, labels, valid_ratio)
    reorg_test(data_dir)

# Call the functions to build the validation and test sets
batch_size = 32 if demo else 128
valid_ratio = 0.1
reorg_cifar10_data(data_dir, valid_ratio)

# Data augmentation
transform_train = torchvision.transforms.Compose([
    # Scale the image up to a 40 x 40 pixel square
    torchvision.transforms.Resize(40),
    # Randomly crop a square covering 0.64 to 1 times the area of the
    # 40 x 40 image, then scale it to a 32 x 32 pixel square
    torchvision.transforms.RandomResizedCrop(32, scale=(0.64, 1.0),
                                             ratio=(1.0, 1.0)),
    # Random horizontal flip
    torchvision.transforms.RandomHorizontalFlip(),
    torchvision.transforms.ToTensor(),
    # Standardize each channel of the image
    torchvision.transforms.Normalize([0.4914, 0.4822, 0.4465],
                                     [0.2023, 0.1994, 0.2010])])

transform_test = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor(),
    torchvision.transforms.Normalize([0.4914, 0.4822, 0.4465],
                                     [0.2023, 0.1994, 0.2010])])

# Read the datasets made of raw images; each example is an image and a label
train_ds, train_valid_ds = [torchvision.datasets.ImageFolder(os.path.join(data_dir, 'train_valid_test', folder),
                                                             transform=transform_train) for folder in ['train', 'train_valid']]

# Before the final prediction, the model is trained on the combined training
# and validation set (train_valid) to make full use of all labelled data.
# The validation and test sets use the deterministic test transform.
valid_ds, test_ds = [torchvision.datasets.ImageFolder(
    os.path.join(data_dir, 'train_valid_test', folder),
    transform=transform_test) for folder in ['valid', 'test']]

train_iter, train_valid_iter = [torch.utils.data.DataLoader(dataset, batch_size, shuffle=True, drop_last=True)
                                for dataset in (train_ds, train_valid_ds)]
valid_iter = torch.utils.data.DataLoader(valid_ds, batch_size, shuffle=False, drop_last=True)
test_iter = torch.utils.data.DataLoader(test_ds, batch_size, shuffle=False, drop_last=False)

# Define the model
def get_net():
    num_classes = 10
    net = d2l.resnet18(num_classes, 3)  # The network is ResNet-18 (a residual network)
    return net

# Loss function: cross-entropy
loss = nn.CrossEntropyLoss(reduction="none")

# Training function
def train(net, train_iter, valid_iter, num_epochs, lr, wd, devices, lr_period, lr_decay):
    trainer = torch.optim.SGD(net.parameters(), lr=lr, momentum=0.9, weight_decay=wd)  # Stochastic gradient descent
    # StepLR multiplies the learning rate by lr_decay every lr_period epochs
    scheduler = torch.optim.lr_scheduler.StepLR(trainer, lr_period, lr_decay)
    num_batches, timer = len(train_iter), d2l.Timer()
    legend = ['train loss', 'train acc']
    if valid_iter is not None:
        legend.append('valid acc')
    animator = d2l.Animator(xlabel='epoch', xlim=[1, num_epochs], legend=legend)
    # To run on CPU, comment out the next line and use the commented-out line below it
    net = nn.DataParallel(net, device_ids=devices).to(devices[0])
    # net = net.to(devices[0])  # CPU version
    for epoch in range(num_epochs):
        net.train()
        metric = d2l.Accumulator(3)
        for i, (features, labels) in enumerate(train_iter):
            timer.start()
            l, acc = d2l.train_batch_ch13(net, features, labels, loss, trainer, devices)
            metric.add(l, acc, labels.shape[0])
            timer.stop()
            if (i + 1) % (num_batches // 5) == 0 or i == num_batches - 1:
                animator.add(epoch + (i + 1) / num_batches,
                             (metric[0] / metric[2], metric[1] / metric[2],
                              None))
        if valid_iter is not None:
            valid_acc = d2l.evaluate_accuracy_gpu(net, valid_iter)
            animator.add(epoch + 1, (None, None, valid_acc))
        scheduler.step()
    measures = (f'train loss {metric[0] / metric[2]:.3f}, '
                f'train acc {metric[1] / metric[2]:.3f}')
    if valid_iter is not None:
        measures += f', valid acc {valid_acc:.3f}'
    print(measures + f'\n{metric[2] * num_epochs / timer.sum():.1f}'
          f' examples/sec on {str(devices)}')

devices, num_epochs, lr, wd = d2l.try_all_gpus(), 20, 2e-4, 5e-4
lr_period, lr_decay, net = 4, 0.9, get_net()
# To run on CPU, comment out the next line and use the commented-out call below it
train(net, train_iter, valid_iter, num_epochs, lr, wd, devices, lr_period, lr_decay)
# train(net, train_iter, valid_iter, num_epochs, lr, wd, [torch.device('cpu')], lr_period, lr_decay)  # CPU version
plt.show()

Part 2: d2l.py

import collections
import math
import os
import random
import re
import hashlib
import sys
import tarfile
import time
import requests
import zipfile
from tqdm import tqdm
from IPython import display
from matplotlib import pyplot as plt
import torch
from torch import nn
from torch.utils import data
import torch.nn.functional as F
import torchvision
import torchvision.transforms as transforms
import numpy as np

VOC_CLASSES = ['background', 'aeroplane', 'bicycle', 'bird', 'boat',
               'bottle', 'bus', 'car', 'cat', 'chair', 'cow',
               'diningtable', 'dog', 'horse', 'motorbike', 'person',
               'potted plant', 'sheep', 'sofa', 'train', 'tv/monitor']

VOC_COLORMAP = [[0, 0, 0], [128, 0, 0], [0, 128, 0], [128, 128, 0],
                [0, 0, 128], [128, 0, 128], [0, 128, 128], [128, 128, 128],
                [64, 0, 0], [192, 0, 0], [64, 128, 0], [192, 128, 0],
                [64, 0, 128], [192, 0, 128], [64, 128, 128], [192, 128, 128],
                [0, 64, 0], [128, 64, 0], [0, 192, 0], [128, 192, 0],
                [0, 64, 128]]

# ###################### 3.2 ############################
def set_figsize(figsize=(3.5, 2.5)):
    use_svg_display()
    # Set the figure size
    plt.rcParams['figure.figsize'] = figsize

def use_svg_display():
    """Use svg format to display plot in jupyter"""
    display.set_matplotlib_formats('svg')

def data_iter(batch_size, features, labels):
    num_examples = len(features)
    indices = list(range(num_examples))
    random.shuffle(indices)  # Examples are read in random order
    for i in range(0, num_examples, batch_size):
        # The last batch may hold fewer than batch_size examples
        j = torch.LongTensor(indices[i: min(i + batch_size, num_examples)])
        yield features.index_select(0, j), labels.index_select(0, j)

def linreg(X, w, b):
    return torch.mm(X, w) + b

def squared_loss(y_hat, y):
    # Note: this returns a vector; also, PyTorch's MSELoss does not divide by 2
    return ((y_hat - y.view(y_hat.size())) ** 2) / 2

def sgd(params, lr, batch_size):
    # To stay consistent with the book, the gradient is divided by batch_size here.
    # That should not be needed, because PyTorch losses usually already average
    # over the batch dimension by default.
    for param in params:
        param.data -= lr * param.grad / batch_size  # Note: param.data is used to update param

# ########################### 3.5 #############################
def get_fashion_mnist_labels(labels):
    text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
                   'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
    return [text_labels[int(i)] for i in labels]

def show_fashion_mnist(images, labels):
    use_svg_display()
    # The underscore `_` marks a variable we ignore (do not use)
    _, figs = plt.subplots(1, len(images), figsize=(12, 12))
    for f, img, lbl in zip(figs, images, labels):
        f.imshow(img.view((28, 28)).numpy())
        f.set_title(lbl)
        f.axes.get_xaxis().set_visible(False)
        f.axes.get_yaxis().set_visible(False)
    # plt.show()

# Modified in section 5.6
# def load_data_fashion_mnist(batch_size, root='~/Datasets/FashionMNIST'):
#     """Download the fashion mnist dataset and then load into memory."""
#     transform = transforms.ToTensor()
#     mnist_train = torchvision.datasets.FashionMNIST(root=root, train=True, download=True, transform=transform)
#     mnist_test = torchvision.datasets.FashionMNIST(root=root, train=False, download=True, transform=transform)
#     if sys.platform.startswith('win'):
#         num_workers = 0  # 0 means no extra processes are used to speed up data loading
#     else:
#         num_workers = 4
#     train_iter = torch.utils.data.DataLoader(mnist_train, batch_size=batch_size, shuffle=True, num_workers=num_workers)
#     test_iter = torch.utils.data.DataLoader(mnist_test, batch_size=batch_size, shuffle=False, num_workers=num_workers)
#     return train_iter, test_iter

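# ########################### 3.6 ###############################
# (Modified in section 3.13)
def evaluate_accuracy(data_iter, net, device=None):
    if device is None and isinstance(net, torch.nn.Module):
        # Default to the device that holds the model's parameters, so that calls
        # made after net.to(device) (as in train_ch5) do not mix CPU and GPU tensors
        first = next(net.parameters(), None)
        device = first.device if first is not None else None
    acc_sum, n = 0.0, 0
    for X, y in data_iter:
        if device is not None:
            X, y = X.to(device), y.to(device)
        acc_sum = acc_sum + (net(X).argmax(dim=1) == y).float().sum().item()
        n = n + y.shape[0]
    return acc_sum / n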

def train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size,
              params=None, lr=None, optimizer=None):
    for epoch in range(num_epochs):
        train_l_sum, train_acc_sum, n = 0.0, 0.0, 0
        for X, y in train_iter:
            y_hat = net(X)
            l = loss(y_hat, y).sum()

            # Zero the gradients
            if optimizer is not None:
                optimizer.zero_grad()
            elif params is not None and params[0].grad is not None:
                for param in params:
                    param.grad.data.zero_()

            l.backward()
            if optimizer is None:
                sgd(params, lr, batch_size)
            else:
                optimizer.step()  # Used in the section "Concise Implementation of Softmax Regression"

            train_l_sum = train_l_sum + l.item()
            train_acc_sum = train_acc_sum + (y_hat.argmax(dim=1) == y).sum().item()
            n = n + y.shape[0]
        test_acc = evaluate_accuracy(test_iter, net)
        print('epoch %d, loss %.4f, train acc %.3f, test acc %.3f'
              % (epoch + 1, train_l_sum / n, train_acc_sum / n, test_acc))

# ########################### 3.7 #####################################
class FlattenLayer(torch.nn.Module):
    def __init__(self):
        super(FlattenLayer, self).__init__()

    def forward(self, x):  # x shape: (batch, *, *, ...)
        return x.view(x.shape[0], -1)

# ########################### 3.11 ###############################
def semilogy(x_vals, y_vals, x_label, y_label, x2_vals=None, y2_vals=None,
             legend=None, figsize=(3.5, 2.5)):
    set_figsize(figsize)
    plt.xlabel(x_label)
    plt.ylabel(y_label)
    plt.semilogy(x_vals, y_vals)
    if x2_vals and y2_vals:
        plt.semilogy(x2_vals, y2_vals, linestyle=':')
        plt.legend(legend)
    # plt.show()

# ############################# 3.13 ##############################
# Modified in section 5.5
# def evaluate_accuracy(data_iter, net):
#     acc_sum, n = 0.0, 0
#     for X, y in data_iter:
#         if isinstance(net, torch.nn.Module):
#             net.eval()  # Evaluation mode, which turns off dropout
#             acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
#             net.train()  # Switch back to training mode
#         else:  # Custom model
#             if('is_training' in net.__code__.co_varnames):  # If it has an is_training parameter
#                 # Set is_training to False
#                 acc_sum += (net(X, is_training=False).argmax(dim=1) == y).float().sum().item()
#             else:
#                 acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
#         n += y.shape[0]
#     return acc_sum / n

# ########################### 5.1 #########################
# def corr2d(X, K):
#     h, w = K.shape
#     Y = torch.zeros((X.shape[0] - h + 1, X.shape[1] - w + 1))
#     for i in range(Y.shape[0]):
#         for j in range(Y.shape[1]):
#             Y[i, j] = (X[i: i + h, j: j + w] * K).sum()
#     return Y

# ############################ 5.5 #########################
# def evaluate_accuracy(data_iter, net,
#                       device=torch.device('cuda' if torch.cuda.is_available() else 'cpu')):
#     acc_sum, n = 0.0, 0
#     with torch.no_grad():
#         for X, y in data_iter:
#             if isinstance(net, torch.nn.Module):
#                 net.eval()  # Evaluation mode, which turns off dropout
#                 acc_sum += (net(X.to(device)).argmax(dim=1) == y.to(device)).float().sum().cpu().item()
#                 net.train()  # Switch back to training mode
#             else:  # Custom model; not used after section 3.13, so the GPU is not considered
#                 if ('is_training' in net.__code__.co_varnames):  # If it has an is_training parameter
#                     # Set is_training to False
#                     acc_sum += (net(X, is_training=False).argmax(dim=1) == y).float().sum().item()
#                 else:
#                     acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
#             n += y.shape[0]
#     return acc_sum / n

def train_ch5(net, train_iter, test_iter, batch_size, optimizer, device, num_epochs):
    net = net.to(device)
    print("training on ", device)
    loss = torch.nn.CrossEntropyLoss()
    for epoch in range(num_epochs):
        # batch_count is reset every epoch so that the printed loss is the
        # mean over this epoch's batches only
        train_l_sum, train_acc_sum, n, batch_count, start = 0.0, 0.0, 0, 0, time.time()
        for X, y in train_iter:
            X = X.to(device)
            y = y.to(device)
            y_hat = net(X)
            l = loss(y_hat, y)
            optimizer.zero_grad()
            l.backward()
            optimizer.step()
            train_l_sum = train_l_sum + l.cpu().item()
            train_acc_sum = train_acc_sum + (y_hat.argmax(dim=1) == y).sum().cpu().item()
            n = n + y.shape[0]
            batch_count = batch_count + 1
        test_acc = evaluate_accuracy(test_iter, net)
        print('epoch %d, loss %.4f, train acc %.3f, test acc %.3f, time %.1f sec'
              % (epoch + 1, train_l_sum / batch_count, train_acc_sum / n, test_acc, time.time() - start))

# ########################## 5.6 #########################
# def load_data_fashion_mnist(batch_size, resize=None, root='~/Datasets/FashionMNIST'):
#     """Download the fashion mnist dataset and then load into memory."""
#     trans = []
#     if resize:
#         trans.append(torchvision.transforms.Resize(size=resize))
#     trans.append(torchvision.transforms.ToTensor())
#
#     transform = torchvision.transforms.Compose(trans)
#     mnist_train = torchvision.datasets.FashionMNIST(root=root, train=True, download=True, transform=transform)
#     mnist_test = torchvision.datasets.FashionMNIST(root=root, train=False, download=True, transform=transform)
#     if sys.platform.startswith('win'):
#         num_workers = 0  # 0 means no extra processes are used to speed up data loading
#     else:
#         num_workers = 4
#     train_iter = torch.utils.data.DataLoader(mnist_train, batch_size=batch_size, shuffle=True, num_workers=num_workers)
#     test_iter = torch.utils.data.DataLoader(mnist_test, batch_size=batch_size, shuffle=False, num_workers=num_workers)
#
#     return train_iter, test_iter

############################# 5.8 ##############################
class GlobalAvgPool2d(nn.Module):
    # Global average pooling can be implemented by setting the pooling
    # window to the height and width of the input
    def __init__(self):
        super(GlobalAvgPool2d, self).__init__()

    def forward(self, x):
        return F.avg_pool2d(x, kernel_size=x.size()[2:])

# ########################### 5.11 ################################
# class Residual(nn.Module):
#     def __init__(self, in_channels, out_channels, use_1x1conv=False, stride=1):
#         super(Residual, self).__init__()
#         self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1, stride=stride)
#         self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, padding=1)
#         if use_1x1conv:
#             self.conv3 = nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=stride)
#         else:
#             self.conv3 = None
#         self.bn1 = nn.BatchNorm2d(out_channels)
#         self.bn2 = nn.BatchNorm2d(out_channels)
#
#     def forward(self, X):
#         Y = F.relu(self.bn1(self.conv1(X)))
#         Y = self.bn2(self.conv2(Y))
#         if self.conv3:
#             X = self.conv3(X)
#         return F.relu(Y + X)

def resnet_block(in_channels, out_channels, num_residuals, first_block=False):
    if first_block:
        assert in_channels == out_channels  # The first block keeps the channel count equal to its input
    blk = []
    for i in range(num_residuals):
        if i == 0 and not first_block:
            blk.append(Residual(in_channels, out_channels, use_1x1conv=True, stride=2))
        else:
            blk.append(Residual(out_channels, out_channels))
    return nn.Sequential(*blk)

def resnet18(output=10, in_channels=3):
    net = nn.Sequential(
        nn.Conv2d(in_channels, 64, kernel_size=7, stride=2, padding=3),
        nn.BatchNorm2d(64),
        nn.ReLU(),
        nn.MaxPool2d(kernel_size=3, stride=2, padding=1))
    net.add_module("resnet_block1", resnet_block(64, 64, 2, first_block=True))
    net.add_module("resnet_block2", resnet_block(64, 128, 2))
    net.add_module("resnet_block3", resnet_block(128, 256, 2))
    net.add_module("resnet_block4", resnet_block(256, 512, 2))
    net.add_module("global_avg_pool", GlobalAvgPool2d())  # Output of GlobalAvgPool2d: (Batch, 512, 1, 1)
    net.add_module("fc", nn.Sequential(FlattenLayer(), nn.Linear(512, output)))
    return net

# ############################## 6.3 ##################################
def load_data_jay_lyrics():
    """Load the Jay Chou lyrics dataset."""
    with zipfile.ZipFile('../../data/jaychou_lyrics.txt.zip') as zin:
        with zin.open('jaychou_lyrics.txt') as f:
            corpus_chars = f.read().decode('utf-8')
    corpus_chars = corpus_chars.replace('\n', ' ').replace('\r', ' ')
    corpus_chars = corpus_chars[0:10000]
    idx_to_char = list(set(corpus_chars))
    char_to_idx = dict([(char, i) for i, char in enumerate(idx_to_char)])
    vocab_size = len(char_to_idx)
    corpus_indices = [char_to_idx[char] for char in corpus_chars]
    return corpus_indices, char_to_idx, idx_to_char, vocab_size

def data_iter_random(corpus_indices, batch_size, num_steps, device=None):
    # Subtract 1 because the index of each output (label) y is the index of
    # the corresponding input x plus 1
    num_examples = (len(corpus_indices) - 1) // num_steps
    epoch_size = num_examples // batch_size
    example_indices = list(range(num_examples))
    random.shuffle(example_indices)

    # Return the sequence of length num_steps that starts at pos
    def _data(pos):
        return corpus_indices[pos: pos + num_steps]

    if device is None:
        device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    for i in range(epoch_size):
        # Read batch_size random examples each time
        i = i * batch_size
        batch_indices = example_indices[i: i + batch_size]
        X = [_data(j * num_steps) for j in batch_indices]
        Y = [_data(j * num_steps + 1) for j in batch_indices]
        yield torch.tensor(X, dtype=torch.float32, device=device), torch.tensor(Y, dtype=torch.float32, device=device)

def data_iter_consecutive(corpus_indices, batch_size, num_steps, device=None):
    if device is None:
        device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    corpus_indices = torch.tensor(corpus_indices, dtype=torch.float32, device=device)
    data_len = len(corpus_indices)
    batch_len = data_len // batch_size
    indices = corpus_indices[0: batch_size * batch_len].view(batch_size, batch_len)
    epoch_size = (batch_len - 1) // num_steps
    for i in range(epoch_size):
        i = i * num_steps
        X = indices[:, i: i + num_steps]
        Y = indices[:, i + 1: i + num_steps + 1]
        yield X, Y

# ###################################### 6.4 ######################################
def one_hot(x, n_class, dtype=torch.float32):
    # X shape: (batch), output shape: (batch, n_class)
    x = x.long()
    res = torch.zeros(x.shape[0], n_class, dtype=dtype, device=x.device)
    res.scatter_(1, x.view(-1, 1), 1)
    return res

def to_onehot(X, n_class):
    # X shape: (batch, seq_len), output: seq_len elements of (batch, n_class)
    return [one_hot(X[:, i], n_class) for i in range(X.shape[1])]

def predict_rnn(prefix, num_chars, rnn, params, init_rnn_state,
                num_hiddens, vocab_size, device, idx_to_char, char_to_idx):
    state = init_rnn_state(1, num_hiddens, device)
    output = [char_to_idx[prefix[0]]]
    for t in range(num_chars + len(prefix) - 1):
        # Use the output of the previous time step as the input of the current one
        X = to_onehot(torch.tensor([[output[-1]]], device=device), vocab_size)
        # Compute the output and update the hidden state
        (Y, state) = rnn(X, state, params)
        # The next input is either a character from prefix or the current best prediction
        if t < len(prefix) - 1:
            output.append(char_to_idx[prefix[t + 1]])
        else:
            output.append(int(Y[0].argmax(dim=1).item()))
    return ''.join([idx_to_char[i] for i in output])

# def grad_clipping(params, theta, device):
#     norm = torch.tensor([0.0], device=device)
#     for param in params:
#         norm += (param.grad.data ** 2).sum()
#     norm = norm.sqrt().item()
#     if norm > theta:
#         for param in params:
#             param.grad.data *= (theta / norm)

# def train_and_predict_rnn(rnn, get_params, init_rnn_state, num_hiddens,
#                           vocab_size, device, corpus_indices, idx_to_char,
#                           char_to_idx, is_random_iter, num_epochs, num_steps,
#                           lr, clipping_theta, batch_size, pred_period,
#                           pred_len, prefixes):
#     if is_random_iter:
#         data_iter_fn = data_iter_random
#     else:
#         data_iter_fn = data_iter_consecutive
#     params = get_params()
#     loss = nn.CrossEntropyLoss()
#
#     for epoch in range(num_epochs):
#         if not is_random_iter:  # With consecutive sampling, initialize the hidden state at the start of each epoch
#             state = init_rnn_state(batch_size, num_hiddens, device)
#         l_sum, n, start = 0.0, 0, time.time()
#         data_iter = data_iter_fn(corpus_indices, batch_size, num_steps, device)
#         for X, Y in data_iter:
#             if is_random_iter:  # With random sampling, initialize the hidden state before every minibatch update
#                 state = init_rnn_state(batch_size, num_hiddens, device)
#             else:
#                 # Otherwise detach the hidden state from the computation graph, so that
#                 # the gradients depend only on the minibatch read in this iteration
#                 # (this keeps the gradient computation from becoming too expensive)
#                 for s in state:
#                     s.detach_()
#
#             inputs = to_onehot(X, vocab_size)
#             # outputs holds num_steps matrices of shape (batch_size, vocab_size)
#             (outputs, state) = rnn(inputs, state, params)
#             # After concatenation the shape is (num_steps * batch_size, vocab_size)
#             outputs = torch.cat(outputs, dim=0)
#             # Y has shape (batch_size, num_steps); transpose it and flatten it into a vector
#             # of length batch * num_steps so that it lines up with the rows of outputs
#             y = torch.transpose(Y, 0, 1).contiguous().view(-1)
#             # Compute the mean classification error with the cross-entropy loss
#             l = loss(outputs, y.long())
#
#             # Zero the gradients
#             if params[0].grad is not None:
#                 for param in params:
#                     param.grad.data.zero_()
#             l.backward()
#             grad_clipping(params, clipping_theta, device)  # Clip the gradients
#             sgd(params, lr, 1)  # The loss is already a mean, so the gradients need no further averaging
#             l_sum += l.item() * y.shape[0]
#             n += y.shape[0]
#
#         if (epoch + 1) % pred_period == 0:
#             print('epoch %d, perplexity %f, time %.2f sec' % (
#                 epoch + 1, math.exp(l_sum / n), time.time() - start))
#             for prefix in prefixes:
#                 print(' -', predict_rnn(prefix, pred_len, rnn, params, init_rnn_state,
#                                         num_hiddens, vocab_size, device, idx_to_char, char_to_idx))

# ################################### 6.5 ################################################
# class RNNModel(nn.Module):
#     def __init__(self, rnn_layer, vocab_size):
#         super(RNNModel, self).__init__()
#         self.rnn = rnn_layer
#         self.hidden_size = rnn_layer.hidden_size * (2 if rnn_layer.bidirectional else 1)
#         self.vocab_size = vocab_size
#         self.dense = nn.Linear(self.hidden_size, vocab_size)
#         self.state = None
#
#     def forward(self, inputs, state):  # inputs: (batch, seq_len)
#         # Get the one-hot vector representation
#         X = to_onehot(inputs, self.vocab_size)  # X is a list
#         Y, self.state = self.rnn(torch.stack(X), state)
#         # The fully connected layer first reshapes Y to (num_steps * batch_size, num_hiddens);
#         # its output has shape (num_steps * batch_size, vocab_size)
#         output = self.dense(Y.view(-1, Y.shape[-1]))
#         return output, self.state
#
#
# def predict_rnn_pytorch(prefix, num_chars, model, vocab_size, device, idx_to_char,
#                         char_to_idx):
#     state = None
#     output = [char_to_idx[prefix[0]]]  # output records prefix plus the generated output
#     for t in range(num_chars + len(prefix) - 1):
#         X = torch.tensor([output[-1]], device=device).view(1, 1)
#         if state is not None:
#             if isinstance(state, tuple):  # LSTM, state:(h, c)
#                 state = (state[0].to(device), state[1].to(device))
#             else:
#                 state = state.to(device)
#
#         (Y, state) = model(X, state)  # The forward pass does not need the model parameters passed in
#         if t < len(prefix) - 1:
#             output.append(char_to_idx[prefix[t + 1]])
#         else:
#             output.append(int(Y.argmax(dim=1).item()))
#     return ''.join([idx_to_char[i] for i in output])
#
#
# def train_and_predict_rnn_pytorch(model, num_hiddens, vocab_size, device,
#                                   corpus_indices, idx_to_char, char_to_idx,
#                                   num_epochs, num_steps, lr, clipping_theta,
#                                   batch_size, pred_period, pred_len, prefixes):
#     loss = nn.CrossEntropyLoss()
#     optimizer = torch.optim.Adam(model.parameters(), lr=lr)
#     model.to(device)
#     state = None
#     for epoch in range(num_epochs):
#         l_sum, n, start = 0.0, 0, time.time()
#         data_iter = data_iter_consecutive(corpus_indices, batch_size, num_steps, device)  # Consecutive sampling
#         for X, Y in data_iter:
#             if state is not None:
#                 # Detach the hidden state from the computation graph, so that the
#                 # gradients depend only on the minibatch read in this iteration
#                 # (this keeps the gradient computation from becoming too expensive)
#                 if isinstance(state, tuple):  # LSTM, state:(h, c)
#                     state = (state[0].detach(), state[1].detach())
#                 else:
#                     state = state.detach()
#
#             (output, state) = model(X, state)  # output shape: (num_steps * batch_size, vocab_size)
#
#             # Y has shape (batch_size, num_steps); transpose it and flatten it into a vector
#             # of length batch * num_steps so that it lines up with the rows of output
#             y = torch.transpose(Y, 0, 1).contiguous().view(-1)
#             l = loss(output, y.long())
#
#             optimizer.zero_grad()
#             l.backward()
#             # Gradient clipping
#             grad_clipping(model.parameters(), clipping_theta, device)
#             optimizer.step()
#             l_sum += l.item() * y.shape[0]
#             n += y.shape[0]
#
#         try:
#             perplexity = math.exp(l_sum / n)
#         except OverflowError:
#             perplexity = float('inf')
#         if (epoch + 1) % pred_period == 0:
#             print('epoch %d, perplexity %f, time %.2f sec' % (
#                 epoch + 1, perplexity, time.time() - start))
#             for prefix in prefixes:
#                 print(' -', predict_rnn_pytorch(
#                     prefix, pred_len, model, vocab_size, device, idx_to_char,
#                     char_to_idx))

# ######################################## 7.2 ###############################################
def train_2d(trainer):
    x1, x2, s1, s2 = -5, -2, 0, 0  # s1 and s2 are state variables, used in later sections of this chapter
    results = [(x1, x2)]
    for i in range(20):
        x1, x2, s1, s2 = trainer(x1, x2, s1, s2)
        results.append((x1, x2))
    print('epoch %d, x1 %f, x2 %f' % (i + 1, x1, x2))
    return results

def show_trace_2d(f, results):
    plt.plot(*zip(*results), '-o', color='#ff7f0e')
    x1, x2 = np.meshgrid(np.arange(-5.5, 1.0, 0.1), np.arange(-3.0, 1.0, 0.1))
    plt.contour(x1, x2, f(x1, x2), colors='#1f77b4')
    plt.xlabel('x1')
    plt.ylabel('x2')

# ######################################## 7.3 ###############################################
def get_data_ch7():
    data = np.genfromtxt('../../data/airfoil_self_noise.dat', delimiter='\t')
    data = (data - data.mean(axis=0)) / data.std(axis=0)
    return torch.tensor(data[:1500, :-1], dtype=torch.float32), \
        torch.tensor(data[:1500, -1], dtype=torch.float32)  # First 1,500 examples (5 features each)

def train_ch7(optimizer_fn, states, hyperparams, features, labels,
              batch_size=10, num_epochs=2):
    # Initialize the model
    net, loss = linreg, squared_loss
    w = torch.nn.Parameter(torch.tensor(np.random.normal(0, 0.01, size=(features.shape[1], 1)), dtype=torch.float32),
                           requires_grad=True)
    b = torch.nn.Parameter(torch.zeros(1, dtype=torch.float32), requires_grad=True)

    def eval_loss():
        return loss(net(features, w, b), labels).mean().item()

    ls = [eval_loss()]
    data_iter = torch.utils.data.DataLoader(
        torch.utils.data.TensorDataset(features, labels), batch_size, shuffle=True)

    for _ in range(num_epochs):
        start = time.time()
        for batch_i, (X, y) in enumerate(data_iter):
            l = loss(net(X, w, b), y).mean()  # Use the mean loss
            # Zero the gradients
            if w.grad is not None:
                w.grad.data.zero_()
                b.grad.data.zero_()
            l.backward()
            optimizer_fn([w, b], states, hyperparams)  # Update the model parameters
            if (batch_i + 1) * batch_size % 100 == 0:
                ls.append(eval_loss())  # Record the current training loss every 100 examples
    # Print the result and plot
    print('loss: %f, %f sec per epoch' % (ls[-1], time.time() - start))
    set_figsize()
    plt.plot(np.linspace(0, num_epochs, len(ls)), ls)
    plt.xlabel('epoch')
    plt.ylabel('loss')

# Unlike the book, the first argument here is the optimizer function rather than the optimizer's name
# Example: optimizer_fn=torch.optim.SGD, optimizer_hyperparams={"lr": 0.05}
def train_pytorch_ch7(optimizer_fn, optimizer_hyperparams, features, labels,
                      batch_size=10, num_epochs=2):
    # Initialize the model
    net = nn.Sequential(
        nn.Linear(features.shape[-1], 1)
    )
    loss = nn.MSELoss()
    optimizer = optimizer_fn(net.parameters(), **optimizer_hyperparams)

    def eval_loss():
        return loss(net(features).view(-1), labels).item() / 2

    ls = [eval_loss()]
    data_iter = torch.utils.data.DataLoader(
        torch.utils.data.TensorDataset(features, labels), batch_size, shuffle=True)

    for _ in range(num_epochs):
        start = time.time()
        for batch_i, (X, y) in enumerate(data_iter):
            # Divide by 2 to stay consistent with train_ch7, because squared_loss divides by 2
            l = loss(net(X).view(-1), y) / 2
            optimizer.zero_grad()
            l.backward()
            optimizer.step()
            if (batch_i + 1) * batch_size % 100 == 0:
                ls.append(eval_loss())
    # Print the result and plot
    print('loss: %f, %f sec per epoch' % (ls[-1], time.time() - start))
    set_figsize()
    plt.plot(np.linspace(0, num_epochs, len(ls)), ls)
    plt.xlabel('epoch')
    plt.ylabel('loss')

############################## 8.3 ##################################
class Benchmark():
    def __init__(self, prefix=None):
        self.prefix = prefix + ' ' if prefix else ''

    def __enter__(self):
        self.start = time.time()

    def __exit__(self, *args):
        print('%stime: %.4f sec' % (self.prefix, time.time() - self.start))

# ########################### 9.1 ########################################
def show_images(imgs, num_rows, num_cols, titles=None, scale=1.5):
    # Plot a list of images
    figsize = (num_cols * scale, num_rows * scale)
    _, axes = plt.subplots(num_rows, num_cols, figsize=figsize)
    axes = axes.flatten()
    for i, (ax, img) in enumerate(zip(axes, imgs)):
        if torch.is_tensor(img):
            # Image tensor
            ax.imshow(img.numpy())
        else:
            # PIL image
            ax.imshow(img)
        ax.axes.get_xaxis().set_visible(False)
        ax.axes.get_yaxis().set_visible(False)
        if titles:
            ax.set_title(titles[i])
    # plt.show()
    return axes

def train(train_iter, test_iter, net, loss, optimizer, device, num_epochs):
    net = net.to(device)
    print("training on ", device)
    for epoch in range(num_epochs):
        # batch_count is reset every epoch so that the printed loss is the
        # mean over this epoch's batches only
        train_l_sum, train_acc_sum, n, batch_count, start = 0.0, 0.0, 0, 0, time.time()
        for X, y in train_iter:
            X = X.to(device)
            y = y.to(device)
            y_hat = net(X)
            l = loss(y_hat, y)
            optimizer.zero_grad()
            l.backward()
            optimizer.step()
            train_l_sum = train_l_sum + l.cpu().item()
            train_acc_sum = train_acc_sum + (y_hat.argmax(dim=1) == y).sum().cpu().item()
            n = n + y.shape[0]
            batch_count = batch_count + 1
        test_acc = evaluate_accuracy(test_iter, net)
        print('epoch %d, loss %.4f, train acc %.3f, test acc %.3f, time %.1f sec'
              % (epoch + 1, train_l_sum / batch_count, train_acc_sum / n, test_acc, time.time() - start))

############################## 9.3 #####################
def bbox_to_rect(bbox, color):
    # Convert a bounding box from (top-left x, top-left y, bottom-right x, bottom-right y)
    # to the matplotlib format: ((top-left x, top-left y), width, height)
    return plt.Rectangle(
        xy=(bbox[0], bbox[1]), width=bbox[2] - bbox[0], height=bbox[3] - bbox[1],
        fill=False, edgecolor=color, linewidth=2)

# ############################# 10.7 ##########################
def read_imdb(folder='train', data_root="/S1/CSCL/tangss/Datasets/aclImdb"):
    data = []
    for label in ['pos', 'neg']:
        folder_name = os.path.join(data_root, folder, label)
        for file in tqdm(os.listdir(folder_name)):
            with open(os.path.join(folder_name, file), 'rb') as f:
                review = f.read().decode('utf-8').replace('\n', '').lower()
                data.append([review, 1 if label == 'pos' else 0])
    random.shuffle(data)
    return data

def get_tokenized_imdb(data):
    """
    data: list of [string, label]
    """
    def tokenizer(text):
        return [tok.lower() for tok in text.split(' ')]
    return [tokenizer(review) for review, _ in data]

def get_vocab_imdb(data):
    # torchtext is not imported at the top of this file, so import it here.
    # The Vocab(counter, min_freq=...) call matches older torchtext releases.
    import torchtext
    tokenized_data = get_tokenized_imdb(data)
    counter = collections.Counter([tk for st in tokenized_data for tk in st])
    return torchtext.vocab.Vocab(counter, min_freq=5)

def preprocess_imdb(data, vocab):
    max_l = 500  # Truncate or zero-pad every review to a length of 500

    def pad(x):
        return x[:max_l] if len(x) > max_l else x + [0] * (max_l - len(x))

    tokenized_data = get_tokenized_imdb(data)
    features = torch.tensor([pad([vocab.stoi[word] for word in words]) for words in tokenized_data])
    labels = torch.tensor([score for _, score in data])
    return features, labels

def load_pretrained_embedding(words, pretrained_vocab):

"""Extract the word vectors for `words` from a pretrained vocab."""

embed = torch.zeros(len(words), pretrained_vocab.vectors[0].shape[0]) # initialize to zeros

oov_count = 0 # out of vocabulary

for i, word in enumerate(words):

try:

idx = pretrained_vocab.stoi[word]

embed[i, :] = pretrained_vocab.vectors[idx]

except KeyError:

oov_count = oov_count + 1

if oov_count > 0:

print("There are %d oov words." % oov_count)

return embed

def predict_sentiment(net, vocab, sentence):

"""`sentence` is a list of words."""

device = list(net.parameters())[0].device

sentence = torch.tensor([vocab.stoi[word] for word in sentence], device=device)

label = torch.argmax(net(sentence.view((1, -1))), dim=1)

return 'positive' if label.item() == 1 else 'negative'

d2l = sys.modules[__name__]

class Timer:

def __init__(self):

self.times = []

self.start()

def start(self):

# Start the timer

self.tik = time.time()

def stop(self):

# Stop the timer and record the elapsed time in the list

self.times.append(time.time() - self.tik)

return self.times[-1]

def avg(self):

# Return the average time

return sum(self.times) / len(self.times)

def sum(self):

# Return the total time

return sum(self.times)

def cumsum(self):

# Return the cumulative times

return np.array(self.times).cumsum().tolist()

def synthetic_data(w, b, num_examples):

# Generate y = Xw + b + noise

X = torch.normal(0, 1, (num_examples, len(w)))

y = torch.matmul(X, w) + b

y =y + torch.normal(0, 0.01, y.shape)

return X, y.reshape((-1, 1))

def load_array(data_arrays, batch_size, is_train=True):

# Construct a PyTorch data iterator

dataset = data.TensorDataset(*data_arrays)

return data.DataLoader(dataset, batch_size, shuffle=is_train)

def get_dataloader_workers():

# Use 4 worker processes to read the data

return 4

def load_data_fashion_mnist(batch_size, resize=None):

# Download the Fashion-MNIST dataset and load it into memory

trans = [transforms.ToTensor()]

if resize:

trans.insert(0, transforms.Resize(resize))

trans = transforms.Compose(trans)

mnist_train = torchvision.datasets.FashionMNIST(root="data", train=True, transform=trans, download=True)

mnist_test = torchvision.datasets.FashionMNIST(root="data", train=False, transform=trans, download=True)

return (data.DataLoader(mnist_train, batch_size, shuffle=True, num_workers=get_dataloader_workers()),

data.DataLoader(mnist_test, batch_size, shuffle=False, num_workers=get_dataloader_workers()))

def accuracy(y_hat, y):

# Count the number of correct predictions

if len(y_hat.shape) > 1 and y_hat.shape[1] > 1:

y_hat = y_hat.argmax(axis=1)

cmp = y_hat.type(y.dtype) == y

return float(cmp.type(y.dtype).sum())

def evaluate_accracy(net, data_iter):

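# Compute the accuracy of the model on the given dataset

if isinstance(net, torch.nn.Module):

net.eval() # set the model to evaluation mode

metric = Accumulator(2) # number of correct predictions, total number of predictions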

for X, y in data_iter:

metric.add(accuracy(net(X), y), y.numel())

return metric[0] / metric[1]

class Accumulator:

# Accumulate sums over `n` variables

def __init__(self, n):

self.data = [0.0] * n

def add(self, *args):

self.data = [a + float(b) for a, b in zip(self.data, args)]

def reset(self):

self.data = [0.0] * len(self.data)

def __getitem__(self, idx):

return self.data[idx]
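To see how `Accumulator` is meant to be used, here is a minimal, dependency-free sketch. It repeats the class with one slot per tracked quantity and feeds it two made-up batches of (correct predictions, batch size). The batch numbers are illustrative assumptions, not results from this post.

```python
class Accumulator:
    """Accumulate running sums over n variables."""

    def __init__(self, n):
        self.data = [0.0] * n

    def add(self, *args):
        # Add each argument to the matching running sum
        self.data = [a + float(b) for a, b in zip(self.data, args)]

    def reset(self):
        self.data = [0.0] * len(self.data)

    def __getitem__(self, idx):
        return self.data[idx]


metric = Accumulator(2)   # correct predictions, total predictions
metric.add(7, 10)         # hypothetical batch 1: 7 of 10 correct
metric.add(9, 10)         # hypothetical batch 2: 9 of 10 correct
running_accuracy = metric[0] / metric[1]
print(running_accuracy)   # 0.8
```

Keeping raw sums and dividing only at the end gives the accuracy over all examples, which differs from averaging per-batch accuracies when batch sizes are unequal.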

def train_epoch_ch3(net, train_iter, loss, updater):

# Train the model for one epoch

# Set the model to training mode

if isinstance(net, torch.nn.Module):

net.train()

# Sum of training loss, sum of training accuracy, number of examples

metric = Accumulator(3)

for X, y in train_iter:

# Compute gradients and update the parameters

y_hat = net(X)

l = loss(y_hat, y)

if isinstance(updater, torch.optim.Optimizer):

# Use PyTorch's built-in optimizer and loss function

updater.zero_grad()

l.backward()

updater.step()

metric.add(float(l) * len(y), accuracy(y_hat, y), y.size().numel())

else:

# Use a custom optimizer and loss function

l.sum().backward()

updater(X.shape[0])

metric.add(float(l.sum()), accuracy(y_hat, y), y.numel())

# Return the training loss and training accuracy

return metric[0] / metric[2], metric[1] / metric[2]

class Animator: #@save

"""Plot data in an animation."""

def __init__(self, xlabel=None, ylabel=None, legend=None, xlim=None,

ylim=None, xscale='linear', yscale='linear',

fmts=('-', 'm--', 'g-.', 'r:'), nrows=1, ncols=1,

figsize=(3.5, 2.5)):

# Plot multiple lines incrementally

if legend is None:

legend = []

d2l.use_svg_display()

self.fig, self.axes = d2l.plt.subplots(nrows, ncols, figsize=figsize)

if nrows * ncols == 1:

self.axes = [self.axes, ]

# Use a lambda function to capture the arguments

self.config_axes = lambda: d2l.set_axes(

self.axes[0], xlabel, ylabel, xlim, ylim, xscale, yscale, legend)

self.X, self.Y, self.fmts = None, None, fmts

def add(self, x, y):

# Add multiple data points to the figure

if not hasattr(y, "__len__"):

y = [y]

n = len(y)

if not hasattr(x, "__len__"):

x = [x] * n

if not self.X:

self.X = [[] for _ in range(n)]

if not self.Y:

self.Y = [[] for _ in range(n)]

for i, (a, b) in enumerate(zip(x, y)):

if a is not None and b is not None:

self.X[i].append(a)

self.Y[i].append(b)

self.axes[0].cla()

for x, y, fmt in zip(self.X, self.Y, self.fmts):

self.axes[0].plot(x, y, fmt)

self.config_axes()

display.display(self.fig)

display.clear_output(wait=True)

def set_axes(axes, xlabel, ylabel, xlim, ylim, xscale, yscale, legend):

# Set the axes for matplotlib

axes.set_xlabel(xlabel)

axes.set_ylabel(ylabel)

axes.set_xscale(xscale)

axes.set_yscale(yscale)

axes.set_xlim(xlim)

axes.set_ylim(ylim)

if legend:

axes.legend(legend)

axes.grid()

def plot(X, Y=None, xlabel=None, ylabel=None, legend=None, xlim=None, ylim=None, xscale='linear', yscale='linear',

fmts=('-', 'm--', 'g-.', 'r:'), figsize=(3.5, 2.5), axes=None):

# Plot data points

if legend is None:

legend = []

set_figsize(figsize)

axes = axes if axes else plt.gca()

# Return True if `X` (a tensor or a list) has one axis

def has_one_axis(X):

return hasattr(X, "ndim") and X.ndim == 1 or isinstance(X, list)

if has_one_axis(X):

X = [X]

if Y is None:

X, Y = [[]] * len(X), X

elif has_one_axis(Y):

Y = [Y]

if len(X) != len(Y):

X = X * len(Y)

axes.cla() # clear the current active axes; other axes are not affected

for x, y, fmt in zip(X, Y, fmts):

if len(x):

axes.plot(x, y, fmt)

else:

axes.plot(y, fmt)

set_axes(axes, xlabel, ylabel, xlim, ylim, xscale, yscale, legend)

def try_gpu(i=0):

# Return gpu(i) if it exists, otherwise return cpu()

if torch.cuda.device_count() >= i + 1:

return torch.device(f'cuda:{i}')

return torch.device('cpu')

def try_all_gpus():

# Return all available GPUs, or [cpu()] if there is no GPU

devices = [torch.device(f'cuda:{i}') for i in range(torch.cuda.device_count())]

return devices if devices else [torch.device('cpu')]

def corr2d(X, K):

# Compute the 2D cross-correlation

h, w = K.shape

Y = torch.zeros((X.shape[0] - h + 1, X.shape[1] - w + 1))

for i in range(Y.shape[0]):

for j in range(Y.shape[1]):

Y[i, j] = (X[i:i + h, j:j + w] * K).sum()

return Y
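The cross-correlation above slides the kernel over the input and sums the element-wise products at each position. The sketch below works through one small case by hand. It uses plain nested lists instead of tensors so it runs without PyTorch, and the name `corr2d_lists` is made up for this illustration.

```python
def corr2d_lists(X, K):
    """2D cross-correlation on nested lists (no padding, stride 1)."""
    h, w = len(K), len(K[0])
    out_h, out_w = len(X) - h + 1, len(X[0]) - w + 1
    Y = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):          # rows of the output
        for j in range(out_w):      # columns of the output
            Y[i][j] = sum(X[i + a][j + b] * K[a][b]
                          for a in range(h) for b in range(w))
    return Y


X = [[0.0, 1.0, 2.0], [3.0, 4.0, 5.0], [6.0, 7.0, 8.0]]
K = [[0.0, 1.0], [2.0, 3.0]]
result = corr2d_lists(X, K)
print(result)  # [[19.0, 25.0], [37.0, 43.0]]
```

The top-left output, for instance, is 0*0 + 1*1 + 3*2 + 4*3 = 19. Note that the outer loop must run over the output rows and the inner loop over the output columns, which matters as soon as the output is not square.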

def evaluate_accuracy_gpu(net, data_iter, device=None):

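# Compute the accuracy of the model on a dataset using a GPU

if isinstance(net, torch.nn.Module):

net.eval() # set to evaluation mode

if not device:

device = next(iter(net.parameters())).device

# Number of correct predictions, total number of predictions

metric = Accumulator(2)

for X, y in data_iter:

if isinstance(X, list):

# Required for BERT fine-tuning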

X = [x.to(device) for x in X]

else:

X = X.to(device)

y = y.to(device)

metric.add(accuracy(net(X), y), y.numel())

return metric[0] / metric[1]

def train_ch6(net, train_iter, test_iter, num_epochs, lr, device):

# Train the model on a GPU

def init_weights(m):

if type(m) == nn.Linear or type(m) == nn.Conv2d:

nn.init.xavier_uniform_(m.weight)

net.apply(init_weights)

print('training on', device)

net.to(device)

optimizer = torch.optim.SGD(net.parameters(), lr=lr)

loss = nn.CrossEntropyLoss()

animator = Animator(xlabel='epoch', xlim=[1, num_epochs], legend=['train loss', 'train acc', 'test acc'])

timer, num_batches = Timer(), len(train_iter)

for epoch in range(num_epochs):

# Sum of training loss, sum of training accuracy, number of examples

metric = Accumulator(3)

net.train()

for i, (X, y) in enumerate(train_iter): # enumerate() yields (index, item) pairs from an iterable

timer.start()

optimizer.zero_grad()

X, y = X.to(device), y.to(device)

y_hat = net(X)

l = loss(y_hat, y)

l.backward()

optimizer.step()

with torch.no_grad(): # torch.no_grad() is a context manager; code wrapped in it does not track gradients

metric.add(l * X.shape[0], accuracy(y_hat, y), X.shape[0])

timer.stop()

train_l = metric[0] / metric[2]

train_acc = metric[1] / metric[2]

if (i + 1) % (num_batches // 5) == 0 or i == num_batches - 1:

animator.add(epoch + (i + 1) / num_batches, (train_l, train_acc, None))

test_acc = evaluate_accuracy_gpu(net, test_iter)

animator.add(epoch + 1, (None, None, test_acc))

print(f'loss {train_l:.3f}, train acc {train_acc:.3f}, 'f'test acc {test_acc:.3f}')

print(f'{metric[2] * num_epochs / timer.sum():.1f} examples/sec 'f'on {str(device)}')

# Residual block

class Residual(nn.Module):

def __init__(self, input_channels, num_channels, use_1x1conv=False, stride=1):

super().__init__()

self.conv1 = nn.Conv2d(input_channels, num_channels, kernel_size=3, padding=1, stride=stride)

self.conv2 = nn.Conv2d(num_channels, num_channels, kernel_size=3, padding=1)

if use_1x1conv:

self.conv3 = nn.Conv2d(input_channels, num_channels, kernel_size=1, stride=stride)

else:

self.conv3 = None

self.bn1 = nn.BatchNorm2d(num_channels)

self.bn2 = nn.BatchNorm2d(num_channels)

self.relu = nn.ReLU(inplace=False)

def forward(self, X):

Y = F.relu(self.bn1(self.conv1(X)))

Y = self.bn2(self.conv2(Y))

if self.conv3:

X = self.conv3(X)

Y =Y + X

return F.relu(Y)

DATA_HUB = dict() # dict() creates an empty dictionary

DATA_URL = 'http://d2l-data.s3-accelerate.amazonaws.com/'

def download(name, cache_dir=os.path.join('data')):

# Download a file listed in DATA_HUB and return the local filename

assert name in DATA_HUB, f"{name} does not exist in {DATA_HUB}."

url, sha1_hash = DATA_HUB[name]

os.makedirs(cache_dir, exist_ok=True)

fname = os.path.join(cache_dir, url.split('/')[-1])

if os.path.exists(fname):

sha1 = hashlib.sha1()

with open(fname, 'rb') as f:

while True:

data = f.read(1048576)

if not data:

break

sha1.update(data)

if sha1.hexdigest() == sha1_hash:

return fname # Hit cache

print(f'Downloading {fname} from {url}...')

r = requests.get(url, stream=True, verify=True)

with open(fname, 'wb') as f:

f.write(r.content)

return fname
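The cache check inside `download` hashes the local file in 1 MiB chunks and compares the digest with the expected SHA-1, so large archives are never read into memory at once. Here is a standalone sketch of just that step, using only the standard library. The helper names `sha1_of_file` and `is_cached` and the temporary demo file are assumptions made for this illustration.

```python
import hashlib
import os
import tempfile


def sha1_of_file(fname, chunk_size=1048576):
    """Return the SHA-1 hex digest of a file, reading it chunk by chunk."""
    sha1 = hashlib.sha1()
    with open(fname, 'rb') as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:
                break
            sha1.update(chunk)
    return sha1.hexdigest()


def is_cached(fname, expected_sha1):
    """True only if the file exists and its digest matches."""
    return os.path.exists(fname) and sha1_of_file(fname) == expected_sha1


with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = os.path.join(tmp_dir, 'demo.bin')
    with open(demo_path, 'wb') as f:
        f.write(b'hello')
    expected = hashlib.sha1(b'hello').hexdigest()
    cache_hit = is_cached(demo_path, expected)    # digest matches
    cache_miss = is_cached(demo_path, '0' * 40)   # digest differs
```

A mismatch simply falls through to a fresh download, which is also how a partially downloaded file gets repaired.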

def download_extract(name, folder=None):

# Download and extract a zip/tar file

fname = download(name)

base_dir = os.path.dirname(fname)

data_dir, ext = os.path.splitext(fname)

if ext == '.zip':

fp = zipfile.ZipFile(fname, 'r')

elif ext in ('.tar', '.gz'):

fp = tarfile.open(fname, 'r')

else:

assert False # only zip/tar files can be extracted

fp.extractall(base_dir)

return os.path.join(base_dir, folder) if folder else data_dir

def load_array(data_arrays, batch_size, is_train=True):

# Construct a PyTorch data iterator

dataset = torch.utils.data.TensorDataset(*data_arrays)

return torch.utils.data.DataLoader(dataset, batch_size, shuffle=is_train)

def download_all():

# Download all the files in DATA_HUB

for name in DATA_HUB:

download(name)

def read_time_machine():

# Load the time machine dataset into a list of text lines

with open(download('timemachine'), 'r') as f:

lines = f.readlines()

return [re.sub('[^A-Za-z]+', ' ', line).strip().lower() for line in lines]

def tokenize(lines, token='word'):

# Split text lines into word or character tokens

if token == 'word':

return [line.split() for line in lines]

elif token == 'char':

return [list(line) for line in lines]

else:

print("Error: unknown token type: " + token)

class Vocab:

# Vocabulary for text

def __init__(self, tokens=None, min_freq=0, reserved_tokens=None):

if tokens is None:

tokens = []

if reserved_tokens is None:

reserved_tokens = []

# Sort by frequency

counter = count_corpus(tokens)

self.token_freqs = sorted(counter.items(), key=lambda x: x[1], reverse=True)

# The unknown token has index 0

self.unk, uniq_tokens = 0, ['<unk>'] + reserved_tokens

uniq_tokens = uniq_tokens + [token for token, freq in self.token_freqs if freq >= min_freq and token not in uniq_tokens]

self.idx_to_token, self.token_to_idx = [], dict()

for token in uniq_tokens:

self.idx_to_token.append(token)

self.token_to_idx[token] = len(self.idx_to_token) - 1

def __len__(self):

return len(self.idx_to_token)

def __getitem__(self, tokens):

if not isinstance(tokens, (list, tuple)):

return self.token_to_idx.get(tokens, self.unk)

return [self.__getitem__(token) for token in tokens]

def to_tokens(self, indices):

if not isinstance(indices, (list, tuple)):

return self.idx_to_token[indices]

return [self.idx_to_token[index] for index in indices]

def count_corpus(tokens):

# Count token frequencies

# Here `tokens` is a 1D list or a 2D list

if len(tokens) == 0 or isinstance(tokens[0], list):

# Flatten a list of token lists into a single list of tokens

tokens = [token for line in tokens for token in line]

return collections.Counter(tokens)
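The vocabulary logic boils down to three steps: count tokens, sort by frequency, and assign indices with the unknown token at index 0. The function below is a compact sketch of that idea, not the `Vocab` class itself. `build_vocab` and the sample sentence are made up for illustration, and `'<unk>'` is assumed as the unknown-token string.

```python
import collections


def build_vocab(tokens, min_freq=0, reserved_tokens=()):
    """Map tokens to indices, most frequent first, with '<unk>' at index 0."""
    counter = collections.Counter(tokens)
    token_freqs = sorted(counter.items(), key=lambda x: x[1], reverse=True)
    idx_to_token = ['<unk>'] + list(reserved_tokens)
    for token, freq in token_freqs:
        # Drop rare tokens and avoid duplicating reserved ones
        if freq >= min_freq and token not in idx_to_token:
            idx_to_token.append(token)
    token_to_idx = {token: idx for idx, token in enumerate(idx_to_token)}
    return idx_to_token, token_to_idx


tokens = 'the time machine by the time traveller the end'.split()
idx_to_token, token_to_idx = build_vocab(tokens, min_freq=2)
encoded = [token_to_idx.get(t, 0) for t in ['the', 'time', 'machine']]
print(idx_to_token)  # ['<unk>', 'the', 'time']
print(encoded)       # [1, 2, 0]
```

Tokens below `min_freq` (here `machine`) fall back to index 0, which is why lookups use `.get(token, 0)` rather than plain indexing.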

def load_corpus_time_machine(max_tokens=-1):

# Return the token indices and the vocabulary of the time machine dataset

lines = read_time_machine()

tokens = tokenize(lines, 'char')

vocab = Vocab(tokens)

# Lines in the time machine dataset are not necessarily sentences or paragraphs, so flatten all text lines into a single list

corpus = [vocab[token] for line in tokens for token in line]

if max_tokens > 0:

corpus = corpus[:max_tokens]

return corpus, vocab

def seq_data_iter_random(corpus, batch_size, num_steps):

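# Generate a minibatch of subsequences using random sampling

# Partition the sequence starting from a random offset; the offset range includes `num_steps - 1`

corpus = corpus[random.randint(0, num_steps - 1):]

# Subtract 1 because we need to account for the labels

num_subseqs = (len(corpus) - 1) // num_steps

# Starting indices of the subsequences of length `num_steps`

initial_indices = list(range(0, num_subseqs * num_steps, num_steps))

# In random sampling, subsequences from two adjacent random minibatches are not necessarily adjacent in the original sequence

random.shuffle(initial_indices)

def data(pos):

# Return a sequence of length `num_steps` starting at `pos`

return corpus[pos:pos + num_steps]

num_batches = num_subseqs // batch_size

for i in range(0, batch_size * num_batches, batch_size):

# Here, `initial_indices` contains the random starting indices of the subsequences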

initial_indices_per_batch = initial_indices[i:i + batch_size]

X = [data(j) for j in initial_indices_per_batch]

Y = [data(j + 1) for j in initial_indices_per_batch]

yield torch.tensor(X), torch.tensor(Y)
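A quick way to check the random sampler is to run it on a toy corpus of consecutive integers, where every label must equal its input plus one. The sketch below mirrors the logic above with plain lists instead of tensors. The name `seq_batches_random` and the toy corpus `range(35)` are assumptions for this demonstration.

```python
import random


def seq_batches_random(corpus, batch_size, num_steps):
    """Yield (X, Y) minibatches of randomly ordered subsequences."""
    corpus = corpus[random.randint(0, num_steps - 1):]
    num_subseqs = (len(corpus) - 1) // num_steps   # -1 leaves room for labels
    starts = list(range(0, num_subseqs * num_steps, num_steps))
    random.shuffle(starts)
    num_batches = num_subseqs // batch_size
    for i in range(0, batch_size * num_batches, batch_size):
        batch_starts = starts[i:i + batch_size]
        X = [corpus[s:s + num_steps] for s in batch_starts]
        Y = [corpus[s + 1:s + 1 + num_steps] for s in batch_starts]
        yield X, Y


random.seed(0)
batches = list(seq_batches_random(list(range(35)), batch_size=2, num_steps=5))
for X, Y in batches:
    print('X:', X, 'Y:', Y)
```

With 35 tokens, `num_steps=5` and `batch_size=2`, any offset leaves 6 subsequences, so the generator yields 3 minibatches.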

def seq_data_iter_sequential(corpus, batch_size, num_steps):

# Generate a minibatch of subsequences using sequential partitioning

# Split the sequence starting from a random offset

offset = random.randint(0, num_steps)

num_tokens = ((len(corpus) - offset - 1) // batch_size) * batch_size

Xs = torch.tensor(corpus[offset:offset + num_tokens])

Ys = torch.tensor(corpus[offset + 1:offset + 1 + num_tokens])

Xs, Ys = Xs.reshape(batch_size, -1), Ys.reshape(batch_size, -1)

num_batches = Xs.shape[1] // num_steps

for i in range(0, num_steps * num_batches, num_steps):

X = Xs[:, i:i + num_steps]

Y = Ys[:, i:i + num_steps]

yield X,Y

class SeqDataLoader:

# An iterator for loading sequence data

def __init__(self, batch_size, num_steps, use_random_iter, max_tokens):

if use_random_iter:

self.data_iter_fn = seq_data_iter_random

else:

self.data_iter_fn = seq_data_iter_sequential

self.corpus, self.vocab = load_corpus_time_machine(max_tokens)

self.batch_size, self.num_steps = batch_size, num_steps

def __iter__(self):

return self.data_iter_fn(self.corpus, self.batch_size, self.num_steps)

def load_data_time_machine(batch_size, num_steps, use_random_iter=False, max_tokens=10000):

# Return the iterator and the vocabulary of the time machine dataset

data_iter = SeqDataLoader(batch_size, num_steps, use_random_iter, max_tokens)

return data_iter, data_iter.vocab

class RNNModelScratch:

# An RNN model implemented from scratch

def __init__(self, vocab_size, num_hiddens, device, get_params, init_state, forward_fn):

self.vocab_size, self.num_hiddens = vocab_size, num_hiddens

self.params = get_params(vocab_size, num_hiddens, device)

self.init_state, self.forward_fn = init_state, forward_fn

def __call__(self, X, state):

X = F.one_hot(X.T, self.vocab_size).type(torch.float32)

return self.forward_fn(X, state, self.params)

def begin_state(self, batch_size, device):

return self.init_state(batch_size, self.num_hiddens, device)

def predict_ch8(prefix, num_preds, net, vocab, device):

# Generate new characters following `prefix`

state = net.begin_state(batch_size=1, device=device)

outputs = [vocab[prefix[0]]]

get_input = lambda: torch.tensor([outputs[-1]], device=device).reshape((1, 1))

for y in prefix[1:]: # warm-up period

_, state = net(get_input(), state)

outputs.append(vocab[y])

for _ in range(num_preds): # predict `num_preds` steps

y, state = net(get_input(), state)

outputs.append(int(y.argmax(dim=1).reshape(1)))

return ''.join([vocab.idx_to_token[i] for i in outputs])

def grad_clipping(net, theta):

# Clip the gradients

if isinstance(net, nn.Module):

params = [p for p in net.parameters() if p.requires_grad]

else:

params = net.params

norm = torch.sqrt(sum(torch.sum((p.grad ** 2)) for p in params))

if norm > theta:

for param in params:

param.grad[:] *= theta / norm
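Gradient clipping rescales all gradients by the same factor `theta / norm` whenever their global L2 norm exceeds `theta`, so the direction is kept and only the length shrinks. Below is a small numeric sketch of that rule on plain lists. `clip_by_global_norm` and the example gradients are hypothetical and stand in for the `p.grad` tensors.

```python
import math


def clip_by_global_norm(grads, theta):
    """Scale all gradient vectors so their joint L2 norm is at most theta."""
    norm = math.sqrt(sum(g * g for vec in grads for g in vec))
    if norm > theta:
        scale = theta / norm
        grads = [[g * scale for g in vec] for vec in grads]
    return grads, norm


grads = [[3.0, 0.0], [0.0, 4.0]]   # two "parameters"; global norm is 5
clipped, norm_before = clip_by_global_norm(grads, theta=1.0)
norm_after = math.sqrt(sum(g * g for vec in clipped for g in vec))
print(norm_before, clipped, norm_after)
```

Here the norm goes from 5 down to (approximately) 1, and the ratio between the two gradient entries stays 3:4.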

def train_epoch_ch8(net, train_iter, loss, updater, device, use_random_iter):

# Train the model for one epoch

state, timer = None, Timer()

metric = Accumulator(2) # sum of training loss, number of tokens

for X, Y in train_iter:

if state is None or use_random_iter:

# Initialize `state` on the first iteration or when using random sampling

state = net.begin_state(batch_size=X.shape[0], device=device)

else:

if isinstance(net, nn.Module) and not isinstance(state, tuple):

# `state` is a tensor for `nn.GRU`

state.detach_()

else:

# `state` is a tuple of tensors for `nn.LSTM` and for our from-scratch model

for s in state:

s.detach_()

y = Y.T.reshape(-1)

X, y = X.to(device), y.to(device)

y_hat, state = net(X, state)

l = loss(y_hat, y.long()).mean()

if isinstance(updater, torch.optim.Optimizer):

updater.zero_grad()

l.backward()

grad_clipping(net, 1)

updater.step()

else:

l.backward()

grad_clipping(net, 1)

# Because the `mean` function has already been applied

updater(batch_size=1)

metric.add(l * y.numel(), y.numel())

return math.exp(metric[0] / metric[1]), metric[1] / timer.stop()

def train_ch8(net, train_iter, vocab, lr, num_epochs, device, use_random_iter=False):

# Train the model

loss = nn.CrossEntropyLoss()

animator = Animator(xlabel='epoch', ylabel='perplexity', legend=['train'], xlim=[10, num_epochs])

# Initialize

if isinstance(net, nn.Module):

updater = torch.optim.SGD(net.parameters(), lr)

else:

updater = lambda batch_size: sgd(net.params, lr, batch_size)

predict = lambda prefix: predict_ch8(prefix, 50, net, vocab, device)

# Train and predict

for epoch in range(num_epochs):

ppl, speed = train_epoch_ch8(net, train_iter, loss, updater, device, use_random_iter)

if (epoch + 1) % 10 == 0:

print(predict('time traveller'))

animator.add(epoch + 1, [ppl])

print(f'perplexity {ppl:.1f}, {speed:.1f} tokens/sec on {str(device)}')

print(predict('time traveller'))

print(predict('traveller'))

class RNNModel(nn.Module):

# Recurrent neural network model

def __init__(self, rnn_layer, vocab_size, **kwargs):

super(RNNModel, self).__init__(**kwargs)

self.rnn = rnn_layer

self.vocab_size = vocab_size

self.num_hiddens = self.rnn.hidden_size

# If the RNN is bidirectional, `num_directions` should be 2, otherwise 1

if not self.rnn.bidirectional:

self.num_directions = 1

self.liner = nn.Linear(self.num_hiddens, self.vocab_size)

else:

self.num_directions = 2

self.liner = nn.Linear(self.num_hiddens * 2, self.vocab_size)

def forward(self, inputs, state):

X = F.one_hot(inputs.T.long(), self.vocab_size)

X = X.to(torch.float32)

Y, state = self.rnn(X, state)

# The fully connected layer first reshapes `Y` to (num_steps * batch_size, num_hiddens)

# Its output shape is (num_steps * batch_size, vocab_size)

output = self.liner(Y.reshape((-1, Y.shape[-1])))

return output, state

def begin_state(self, device, batch_size=1):

if not isinstance(self.rnn, nn.LSTM):

# `nn.GRU` takes a tensor as its hidden state

return torch.zeros((self.num_directions * self.rnn.num_layers, batch_size, self.num_hiddens), device=device)

else:

# `nn.LSTM` takes a tuple of tensors as its hidden state

return (torch.zeros((self.num_directions * self.rnn.num_layers, batch_size, self.num_hiddens), device=device),

torch.zeros((self.num_directions * self.rnn.num_layers, batch_size, self.num_hiddens), device=device))

DATA_HUB['fra-eng'] = (DATA_URL + 'fra-eng.zip', '94646ad1522d915e7b0f9296181140edcf86a4f5')

def read_data_nmt():

# Load the English-French dataset

data_dir = download_extract('fra-eng')

with open(os.path.join(data_dir, 'fra.txt'), 'r', encoding='utf-8') as f:

return f.read()

# raw_text = read_data_nmt()

# print(raw_text[:75])

def preprocess_nmt(text):

# Preprocess the English-French dataset

def no_space(char, prev_char):

return char in set(',.!?') and prev_char != ' '

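# Replace non-breaking spaces with regular spaces

# Replace uppercase letters with lowercase letters

text = text.replace('\u202f', ' ').replace('\xa0', ' ').lower()

# Insert a space between words and punctuation marks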

out = [' ' + char if i > 0 and no_space(char, text[i - 1]) else char for i, char in enumerate(text)]

return ''.join(out)
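Since `preprocess_nmt` is pure string handling, it is easy to try on a tiny input. The sketch below repeats the function so that it runs on its own and applies it to a made-up two-line sample in the tab-separated format of `fra.txt`. The sample text is an assumption, not taken from the dataset.

```python
def preprocess_nmt(text):
    """Lowercase, normalise spaces and separate punctuation from words."""
    def no_space(char, prev_char):
        return char in set(',.!?') and prev_char != ' '

    # Replace non-breaking spaces with regular spaces, then lowercase
    text = text.replace('\u202f', ' ').replace('\xa0', ' ').lower()
    # Insert a space before punctuation that directly follows a word
    out = [' ' + char if i > 0 and no_space(char, text[i - 1]) else char
           for i, char in enumerate(text)]
    return ''.join(out)


sample = 'Go.\tVa\u202f!\nHi there!\tSalut\xa0!'
cleaned = preprocess_nmt(sample)
print(cleaned)
```

After this step, splitting on spaces treats `.` and `!` as separate tokens, which is what `tokenize_nmt` relies on.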

def tokenize_nmt(text, num_examples=None):

# Tokenize the English-French dataset

source, target = [], []

for i, line in enumerate(text.split('\n')):

if num_examples and i > num_examples:

break

parts = line.split('\t')

if len(parts) == 2:

source.append(parts[0].split(' '))

target.append(parts[1].split(' '))

return source, target

def truncate_pad(line, num_steps, padding_token):

# Truncate or pad a text sequence

if len(line) > num_steps:

return line[:num_steps] # truncate

return line + [padding_token] * (num_steps - len(line)) # pad
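As a small usage illustration, the snippet below pads one short sequence and truncates one long sequence to `num_steps = 5`, then recovers the valid length by counting non-padding entries. The token indices and the padding index `PAD = 1` are hypothetical values chosen for the demonstration.

```python
def truncate_pad(line, num_steps, padding_token):
    """Truncate or pad a sequence to exactly num_steps items."""
    if len(line) > num_steps:
        return line[:num_steps]                                  # truncate
    return line + [padding_token] * (num_steps - len(line))     # pad


PAD = 1  # hypothetical index of the padding token
short = truncate_pad([47, 4], 5, PAD)
long = truncate_pad([9, 8, 7, 6, 5, 4, 3], 5, PAD)
valid_len = sum(1 for idx in short if idx != PAD)
print(short, long, valid_len)  # [47, 4, 1, 1, 1] [9, 8, 7, 6, 5] 2
```

Fixed-length rows are what allow a list of sentences to be stacked into a single tensor, and the valid length is later used to mask out the padding.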

def build_array_nmt(lines, vocab, num_steps):

# Convert machine-translation text sequences into minibatches

lines = [vocab[l] for l in lines]

lines = [l + [vocab['<eos>']] for l in lines]

array = torch.tensor([truncate_pad(l, num_steps, vocab['<pad>']) for l in lines])

valid_len = (array != vocab['<pad>']).type(torch.int32).sum(1)

return array, valid_len

def load_data_nmt(batch_size, num_steps, num_examples=600):

# Return the iterator and the vocabularies of the translation dataset

text = preprocess_nmt(read_data_nmt())

source, target = tokenize_nmt(text, num_examples)

src_vocab = Vocab(source, min_freq=2, reserved_tokens=['<pad>', '<bos>', '<eos>'])

tgt_vocab = Vocab(target, min_freq=2, reserved_tokens=['<pad>', '<bos>', '<eos>'])

src_array, src_valid_len = build_array_nmt(source, src_vocab, num_steps)

tgt_array, tgt_valid_len = build_array_nmt(target, tgt_vocab, num_steps)

data_arrays = (src_array, src_valid_len, tgt_array, tgt_valid_len)

data_iter = load_array(data_arrays, batch_size)

return data_iter, src_vocab, tgt_vocab

class Encoder(nn.Module):

# Base encoder interface for the encoder-decoder architecture

def __init__(self, **kwargs):

super(Encoder, self).__init__(**kwargs)

def forward(self, X, *args):

raise NotImplementedError

class Decoder(nn.Module):

# Base decoder interface for the encoder-decoder architecture

def __init__(self, **kwargs):

super(Decoder, self).__init__(**kwargs)

def init_state(self, enc_outputs, *args):

raise NotImplementedError

def forward(self, X, state):

raise NotImplementedError

class EncoderDecoder(nn.Module):

# Base class for the encoder-decoder architecture

def __init__(self, encoder, decoder, **kwargs):

super(EncoderDecoder, self).__init__(**kwargs)

self.encoder = encoder

self.decoder = decoder

def forward(self, enc_X, dec_X, *args):

enc_output =self.encoder(enc_X, *args)

dec_state = self.decoder.init_state(enc_output, *args)

return self.decoder(dec_X, dec_state)

class Seq2seqEncoder(Encoder):

# RNN encoder for sequence-to-sequence learning

def __init__(self, vocab_size, embed_size, num_hiddens, num_layers, dropout=0, **kwargs):

super(Seq2seqEncoder, self).__init__(**kwargs)

# Embedding layer

self.embedding = nn.Embedding(vocab_size, embed_size)

self.rnn = nn.GRU(embed_size, num_hiddens, num_layers, dropout=dropout)

def forward(self, X, *args):

# Shape of X after embedding: (batch_size, num_steps, embed_size)

X = self.embedding(X)

# In RNN models, the first axis corresponds to time steps

X = X.permute(1, 0, 2)

# If the state is not given, it defaults to zeros

output, state = self.rnn(X)

# Shape of output: (num_steps, batch_size, num_hiddens)

# Shape of state: (num_layers, batch_size, num_hiddens)

return output, state

def sequence_mask(X, valid_len, value=0):

# Mask irrelevant entries in sequences

maxlen = X.size(1)

mask = torch.arange((maxlen), dtype=torch.float32, device=X.device)[None, :] < valid_len[:, None]

X[~mask] = value

return X

class MaskedSoftmaxCELoss(nn.CrossEntropyLoss):

# Softmax cross-entropy loss with masking

# Shape of pred: (batch_size, num_steps, vocab_size)

# Shape of label: (batch_size, num_steps)

# Shape of valid_len: (batch_size,)

def forward(self, pred, label, valid_len):

weights = torch.ones_like(label)

weights = sequence_mask(weights, valid_len)

self.reduction='none'

unweighted_loss = super(MaskedSoftmaxCELoss, self).forward(pred.permute(0, 2, 1), label)

weighted_loss = (unweighted_loss * weights).mean(dim=1)

return weighted_loss

def train_seq2seq(net, data_iter, lr, num_epochs, tgt_vocab, device):

# Train a sequence-to-sequence model

def xavier_init_weights(m):

if type(m) == nn.Linear:

nn.init.xavier_uniform_(m.weight)

if type(m) == nn.GRU:

for param in m._flat_weights_names:

if "weight" in param:

nn.init.xavier_uniform_(m._parameters[param])

net.apply(xavier_init_weights)

net.to(device)

optimizer = torch.optim.Adam(net.parameters(), lr=lr)

loss =MaskedSoftmaxCELoss()

net.train()

animator = Animator(xlabel='epoch', ylabel='loss', xlim=[10, num_epochs])

for epoch in range(num_epochs):

timer = Timer()

metric = Accumulator(2) # sum of training loss, number of tokens

for batch in data_iter:

optimizer.zero_grad()

X, X_valid_len, Y, Y_valid_len = [x.to(device) for x in batch]

bos = torch.tensor([tgt_vocab['<bos>']] * Y.shape[0], device=device).reshape(-1, 1)

dec_input = torch.cat([bos, Y[:, :-1]], 1) # teacher forcing

Y_hat, _ = net(X, dec_input, X_valid_len)

l = loss(Y_hat, Y, Y_valid_len)

l.sum().backward() # backpropagate the scalar loss

grad_clipping(net, 1)

num_tokens = Y_valid_len.sum()

optimizer.step()

with torch.no_grad():

metric.add(l.sum(), num_tokens)

if (epoch + 1) % 10 == 0:

animator.add(epoch + 1, (metric[0] / metric[1],))

print(f'loss {metric[0] / metric[1]:.3f}, {metric[1] / timer.stop():.1f} ' f'tokens/sec on {str(device)}')

def predict_seq2seq(net, src_sentence, src_vocab, tgt_vocab, num_steps, device, save_attention_weights=False):

# Prediction with a sequence-to-sequence model

# Set `net` to evaluation mode for prediction

net.eval()

src_tokens = src_vocab[src_sentence.lower().split(' ')] + [src_vocab['<eos>']]

enc_valid_len = torch.tensor([len(src_tokens)], device=device)

src_tokens = truncate_pad(src_tokens, num_steps, src_vocab['<pad>'])

# Add the batch axis

enc_X = torch.unsqueeze(torch.tensor(src_tokens, dtype=torch.long, device=device), dim=0)

enc_outputs = net.encoder(enc_X, enc_valid_len)

dec_state = net.decoder.init_state(enc_outputs, enc_valid_len)

# Add the batch axis

dec_X = torch.unsqueeze(torch.tensor([tgt_vocab['<bos>']], dtype=torch.long, device=device), dim=0)

output_seq, attention_weight_seq = [], []

for _ in range(num_steps):

Y, dec_state = net.decoder(dec_X, dec_state)

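# Use the token with the highest predicted probability as the decoder input at the next time step

dec_X = Y.argmax(dim=2)

pred = dec_X.squeeze(dim=0).type(torch.int32).item()

# Save the attention weights

if save_attention_weights:

attention_weight_seq.append(net.decoder.attention_weights)

# Once the end-of-sequence token is predicted, generation of the output sequence is complete

if pred == tgt_vocab['<eos>']: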

break

output_seq.append(pred)

return ' '.join(tgt_vocab.to_tokens(output_seq)), attention_weight_seq

def bleu(pred_seq, label_seq, k):

# Compute BLEU

pred_tokens, label_tokens = pred_seq.split(' '), label_seq.split(' ')

len_pred, len_label = len(pred_tokens), len(label_tokens)

score = math.exp(min(0, 1 - len_label / len_pred))

for n in range(1, k + 1):

num_matches, label_subs = 0, collections.defaultdict(int)

for i in range(len_label - n + 1):

label_subs[' '.join(label_tokens[i: i + n])] += 1

for i in range(len_pred - n + 1):

if label_subs[' '.join(pred_tokens[i: i + n])] > 0:

num_matches = num_matches + 1

label_subs[' '.join(pred_tokens[i: i + n])] -= 1

score *= math.pow(num_matches / (len_pred - n + 1), math.pow(0.5, n))

return score
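The score computed here is a brevity penalty `exp(min(0, 1 - len_label / len_pred))` multiplied by `p_n ** (0.5 ** n)` for n = 1..k, where `p_n` is the clipped n-gram precision. The self-contained sketch below repeats the function and evaluates two toy sentence pairs. It joins n-grams with a space so that different token sequences cannot collide, and the sentences are made-up examples.

```python
import collections
import math


def bleu(pred_seq, label_seq, k):
    """BLEU with n-grams up to length k, as described above."""
    pred_tokens, label_tokens = pred_seq.split(' '), label_seq.split(' ')
    len_pred, len_label = len(pred_tokens), len(label_tokens)
    score = math.exp(min(0, 1 - len_label / len_pred))   # brevity penalty
    for n in range(1, k + 1):
        num_matches, label_subs = 0, collections.defaultdict(int)
        for i in range(len_label - n + 1):
            label_subs[' '.join(label_tokens[i:i + n])] += 1
        for i in range(len_pred - n + 1):
            ngram = ' '.join(pred_tokens[i:i + n])
            if label_subs[ngram] > 0:        # each label n-gram matches once
                num_matches += 1
                label_subs[ngram] -= 1
        score *= math.pow(num_matches / (len_pred - n + 1), math.pow(0.5, n))
    return score


perfect = bleu('a b c d', 'a b c d', k=2)
partial = bleu('a b x d', 'a b c d', k=2)
print(perfect, partial)
```

For the second pair, 3 of 4 unigrams and 1 of 3 bigrams match, so the score is `0.75 ** 0.5 * (1 / 3) ** 0.25`, roughly 0.66. Longer n-grams get a smaller exponent and therefore weigh more heavily when they are missed.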

def show_heatmaps(matrices, xlabel, ylabel, titles=None, figsize=(2.5, 2.5), cmap='Reds'):

use_svg_display()

num_rows, num_cols = matrices.shape[0], matrices.shape[1]

fig, axes = plt.subplots(num_rows, num_cols, figsize=figsize, sharex=True, sharey=True, squeeze=False)

for i, (row_axes, row_matrices) in enumerate(zip(axes, matrices)):

for j, (ax, matrix) in enumerate(zip(row_axes, row_matrices)):

pcm = ax.imshow(matrix.detach().numpy(), cmap=cmap)

if i == num_rows - 1:

ax.set_xlabel(xlabel)

if j == 0:

ax.set_ylabel(ylabel)

if titles:

ax.set_title(titles[j])

fig.colorbar(pcm, ax=axes, shrink=0.6 )

def maskd_softmax(X, valid_lens):

# Perform softmax by masking elements on the last axis

# X: 3D tensor, valid_lens: 1D or 2D tensor

if valid_lens is None:

return nn.functional.softmax(X, dim=-1)

else:

shape = X.shape

if valid_lens.dim() == 1:

valid_lens = torch.repeat_interleave(valid_lens, shape[1])

else:

valid_lens = valid_lens.reshape(-1)

# On the last axis, replace masked elements with a very large negative value so that their softmax (exponential) output is 0

X = sequence_mask(X.reshape(-1, shape[-1]), valid_lens, value=-1e6)

return nn.functional.softmax(X.reshape(shape), dim=-1)
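To make the masking trick concrete: positions at or beyond the valid length are overwritten with `-1e6` before the softmax, so their exponentials underflow to zero and the remaining weights still sum to one. Here is a single-row sketch in plain Python. `masked_softmax_row` is a hypothetical helper, not part of the listing.

```python
import math


def masked_softmax_row(scores, valid_len, masked_value=-1e6):
    """Softmax over one row, ignoring positions >= valid_len."""
    masked = [s if i < valid_len else masked_value
              for i, s in enumerate(scores)]
    peak = max(masked)                          # subtract the max for stability
    exps = [math.exp(s - peak) for s in masked]
    total = sum(exps)
    return [e / total for e in exps]


weights = masked_softmax_row([1.0, 2.0, 3.0, 4.0], valid_len=2)
print(weights)
```

Only the first two scores take part, so the weights are `1 / (1 + e)` and `e / (1 + e)`, followed by two zeros.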

class AdditiveAttention(nn.Module):

# Additive attention

def __init__(self, key_size, query_size, num_hiddens, dropout, **kwargs):

super(AdditiveAttention, self).__init__(**kwargs)

self.W_k = nn.Linear(key_size, num_hiddens, bias=False)

self.W_q = nn.Linear(query_size, num_hiddens, bias=False)

self.w_v = nn.Linear(num_hiddens, 1, bias=False)

self.dropout = nn.Dropout(dropout)

def forward(self, queries, keys, values, valid_lens):

queries, keys = self.W_q(queries), self.W_k(keys)

# After dimension expansion:

# Shape of queries: (batch_size, number of queries, 1, num_hiddens)

# Shape of keys: (batch_size, 1, number of key-value pairs, num_hiddens)

# Sum them using broadcasting

features = queries.unsqueeze(2) + keys.unsqueeze(1)

features = torch.tanh(features)

# self.w_v has a single output, so remove the last dimension from the shape

# Shape of scores: (batch_size, number of queries, number of key-value pairs)

scores = self.w_v(features).squeeze(-1)

self.attention_weights = maskd_softmax(scores, valid_lens)

# Shape of values: (batch_size, number of key-value pairs, value dimension)

return torch.bmm(self.dropout(self.attention_weights), values)

class DotProductAttention(nn.Module):

# Scaled dot-product attention

def __init__(self, dropout, **kwargs):

super(DotProductAttention, self).__init__(**kwargs)

self.dropout = nn.Dropout(dropout)

# Shape of queries: (batch_size, number of queries, d)

# Shape of keys: (batch_size, number of key-value pairs, d)

# Shape of values: (batch_size, number of key-value pairs, value dimension)

# Shape of valid_lens: (batch_size,) or (batch_size, number of queries)

def forward(self, queries, keys, values, valid_lens=None):

d = queries.shape[-1]

# Swap the last two dimensions of `keys` with `transpose(1, 2)`

scores = torch.bmm(queries, keys.transpose(1, 2)) / math.sqrt(d)

self.attention_weights = maskd_softmax(scores, valid_lens)

return torch.bmm(self.dropout(self.attention_weights), values)
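Stripped of batching, masking and dropout, scaled dot-product attention is `softmax(q · k / sqrt(d))` used as weights over the values. The following sketch spells that out for a single unbatched example using plain lists. The function names and the toy numbers are assumptions for illustration only.

```python
import math


def softmax(row):
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(queries, keys, values):
    """Unbatched scaled dot-product attention on nested lists."""
    d = len(queries[0])
    outputs = []
    for q in queries:
        # One score per key: dot product scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs


queries = [[0.0, 0.0]]                 # one query with d = 2
keys = [[1.0, 0.0], [0.0, 1.0]]        # two keys
values = [[10.0, 0.0], [0.0, 20.0]]    # two values
out = dot_product_attention(queries, keys, values)
print(out)  # [[5.0, 10.0]]
```

A zero query scores every key equally, so the output is the plain average of the two values. Dividing by `sqrt(d)` keeps the scores from growing with the feature dimension.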

class AttentionDecoder(Decoder):

# Base interface for decoders with an attention mechanism

def __init__(self, **kwargs):

super(AttentionDecoder, self).__init__(**kwargs)

@property

def attention_weights(self):

raise NotImplementedError

class MultiHeadAttention(nn.Module):

def __init__(self, key_size, query_size, value_size, num_hiddens,

num_heads, dropout, bias=False, **kwargs):

super(MultiHeadAttention, self).__init__(**kwargs)

self.num_heads = num_heads

self.attention = d2l.DotProductAttention(dropout)

self.W_q = nn.Linear(query_size, num_hiddens, bias=bias)

self.W_k = nn.Linear(key_size, num_hiddens, bias=bias)

self.W_v = nn.Linear(value_size, num_hiddens, bias=bias)

self.W_o = nn.Linear(num_hiddens, num_hiddens, bias=bias)

def forward(self, queries, keys, values, valid_lens):

# Shape of `queries`, `keys`, or `values`:

# (`batch_size`, number of queries or key-value pairs, `num_hiddens`)

# Shape of `valid_lens`:

# (`batch_size`,) or (`batch_size`, number of queries)

# After the transformation, shape of the output `queries`, `keys`, or `values`:

# (`batch_size` * `num_heads`, number of queries or key-value pairs,

# `num_hiddens` / `num_heads`)

queries = transpose_qkv(self.W_q(queries), self.num_heads)

keys = transpose_qkv(self.W_k(keys), self.num_heads)

values = transpose_qkv(self.W_v(values), self.num_heads)

if valid_lens is not None:

# On axis 0, copy the first item (scalar or vector) `num_heads` times,

# then copy the second item in the same way, and so on.

valid_lens = torch.repeat_interleave(

valid_lens, repeats=self.num_heads, dim=0)

# Shape of `output`: (`batch_size` * `num_heads`, number of queries,

# `num_hiddens` / `num_heads`)

output = self.attention(queries, keys, values, valid_lens)

# Shape of `output_concat`: (`batch_size`, number of queries, `num_hiddens`)

output_concat = transpose_output(output, self.num_heads)

return self.W_o(output_concat)

def transpose_qkv(X, num_heads):

# Shape of input `X`: (`batch_size`, number of queries or key-value pairs, `num_hiddens`).

# Shape of output `X`: (`batch_size`, number of queries or key-value pairs, `num_heads`,

# `num_hiddens` / `num_heads`)

X = X.reshape(X.shape[0], X.shape[1], num_heads, -1)

# Shape of output `X`: (`batch_size`, `num_heads`, number of queries or key-value pairs,

# `num_hiddens` / `num_heads`)

X = X.permute(0, 2, 1, 3)

# Shape of `output`: (`batch_size` * `num_heads`, number of queries or key-value pairs,

# `num_hiddens` / `num_heads`)

return X.reshape(-1, X.shape[2], X.shape[3])

#@save

def transpose_output(X, num_heads):

"""Reverse the operation of `transpose_qkv`."""

X = X.reshape(-1, num_heads, X.shape[1], X.shape[2])

X = X.permute(0, 2, 1, 3)

return X.reshape(X.shape[0], X.shape[1], -1)

class PositionalEncoding(nn.Module):

def __init__(self, num_hiddens, dropout, max_len=1000):

super(PositionalEncoding, self).__init__()

self.dropout = nn.Dropout(dropout)

# Create a long enough `P`

self.P = torch.zeros((1, max_len, num_hiddens))

X = torch.arange(max_len, dtype=torch.float32).reshape(

-1, 1) / torch.pow(10000, torch.arange(

0, num_hiddens, 2, dtype=torch.float32) / num_hiddens)

self.P[:, :, 0::2] = torch.sin(X)

self.P[:, :, 1::2] = torch.cos(X)

def forward(self, X):

X = X + self.P[:, :X.shape[1], :].to(X.device)

return self.dropout(X)
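The table `P` built in `__init__` follows the sinusoidal scheme: for position `pos` and even column `2j`, `P[pos][2j] = sin(pos / 10000^(2j / num_hiddens))`, and the next odd column holds the matching cosine. The sketch below computes the same table with the `math` module for a tiny size so the values can be inspected directly. `positional_encoding` is a hypothetical helper, and `max_len=4`, `num_hiddens=4` are arbitrary demo sizes.

```python
import math


def positional_encoding(max_len, num_hiddens):
    """Sinusoidal position table as a max_len x num_hiddens nested list."""
    P = [[0.0] * num_hiddens for _ in range(max_len)]
    for pos in range(max_len):
        for j in range(0, num_hiddens, 2):
            angle = pos / math.pow(10000, j / num_hiddens)
            P[pos][j] = math.sin(angle)          # even columns
            if j + 1 < num_hiddens:
                P[pos][j + 1] = math.cos(angle)  # odd columns
    return P


P = positional_encoding(max_len=4, num_hiddens=4)
for row in P:
    print([round(v, 4) for v in row])
```

Position 0 is always `[0, 1, 0, 1, ...]`, and later columns oscillate more slowly, which is what lets the model tell positions apart at different scales.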

class PositionWiseFFN(nn.Module):
    """Position-wise feed-forward network."""

    def __init__(self, ffn_num_input, ffn_num_hiddens, ffn_num_outputs,
                 **kwargs):
        super(PositionWiseFFN, self).__init__(**kwargs)
        self.dense1 = nn.Linear(ffn_num_input, ffn_num_hiddens)
        self.relu = nn.ReLU()
        self.dense2 = nn.Linear(ffn_num_hiddens, ffn_num_outputs)

    def forward(self, X):
        return self.dense2(self.relu(self.dense1(X)))
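
"Position-wise" means the same two-layer MLP is applied to every position of the sequence independently. The following pure-Python sketch illustrates that with hand-picked toy weights. All names and numbers here (`linear`, `W1`, `W2`, and so on) are made up for the example and are not taken from the book's code.

```python
def linear(x, W, b):
    """y = W x + b, with W stored as one row of weights per output unit."""
    return [sum(xi * wi for xi, wi in zip(x, row)) + bj for row, bj in zip(W, b)]


def relu(v):
    return [max(0.0, a) for a in v]


# Toy weights: 2 inputs -> 3 hidden units -> 2 outputs.
W1, b1 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]], [0.0, 0.0, 0.0]
W2, b2 = [[1.0, 1.0, 1.0], [1.0, 0.0, -1.0]], [0.0, 0.0]


def position_wise_ffn(X):
    # The same weights are reused for every position (row) in the sequence.
    return [linear(relu(linear(x, W1, b1)), W2, b2) for x in X]


seq = [[1.0, 2.0], [3.0, 0.0], [1.0, 2.0]]
out = position_wise_ffn(seq)
print(out)  # [[2.5, -1.0], [4.5, 3.0], [2.5, -1.0]]
```

Positions 0 and 2 carry identical inputs, so they get identical outputs regardless of what sits between them.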

class AddNorm(nn.Module):
    """Residual connection followed by layer normalization."""

    def __init__(self, normalized_shape, dropout, **kwargs):
        super(AddNorm, self).__init__(**kwargs)
        self.dropout = nn.Dropout(dropout)
        self.ln = nn.LayerNorm(normalized_shape)

    def forward(self, X, Y):
        # `X` is the sublayer input (residual), `Y` is the sublayer output
        return self.ln(self.dropout(Y) + X)
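
As a rough illustration of what `AddNorm` computes for a single feature vector, here is a pure-Python sketch. It makes several simplifying assumptions: dropout is inactive (as in evaluation mode), the LayerNorm scale and shift are still at their initial values of 1 and 0, and `eps` is `1e-5`. The helper names are hypothetical.

```python
import math


def layer_norm(v, eps=1e-5):
    """Normalize one vector to zero mean and (almost) unit variance."""
    mean = sum(v) / len(v)
    var = sum((a - mean) ** 2 for a in v) / len(v)  # biased variance
    return [(a - mean) / math.sqrt(var + eps) for a in v]


def add_norm(x, y):
    """Residual add followed by layer normalization (no dropout here)."""
    return layer_norm([a + b for a, b in zip(x, y)])


x = [1.0, 2.0, 3.0]   # sublayer input (example values)
y = [0.5, 0.5, 0.5]   # sublayer output (example values)
out = add_norm(x, y)
print([round(v, 4) for v in out])
```

The result has zero mean, and its variance is just under 1 because of the `eps` term in the denominator.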

class EncoderBlock(nn.Module):
    """Transformer encoder block: self-attention and FFN, each wrapped in AddNorm."""

    def __init__(self, key_size, query_size, value_size, num_hiddens,
                 norm_shape, ffn_num_input, ffn_num_hiddens, num_heads,
                 dropout, use_bias=False, **kwargs):
        super(EncoderBlock, self).__init__(**kwargs)
        self.attention = d2l.MultiHeadAttention(
            key_size, query_size, value_size, num_hiddens, num_heads, dropout,
            use_bias)
        self.addnorm1 = AddNorm(norm_shape, dropout)
        self.ffn = PositionWiseFFN(
            ffn_num_input, ffn_num_hiddens, num_hiddens)
        self.addnorm2 = AddNorm(norm_shape, dropout)

    def forward(self, X, valid_lens):
        # Self-attention: queries, keys, and values are all `X`
        Y = self.addnorm1(X, self.attention(X, X, X, valid_lens))
        return self.addnorm2(Y, self.ffn(Y))

class TransformerEncoder(d2l.Encoder):
    """Transformer encoder: embedding, positional encoding, and stacked encoder blocks."""

    def __init__(self, vocab_size, key_size, query_size, value_size,
                 num_hiddens, norm_shape, ffn_num_input, ffn_num_hiddens,
                 num_heads, num_layers, dropout, use_bias=False, **kwargs):
        super(TransformerEncoder, self).__init__(**kwargs)
        self.num_hiddens = num_hiddens
        self.embedding = nn.Embedding(vocab_size, num_hiddens)
        self.pos_encoding = d2l.PositionalEncoding(num_hiddens, dropout)
        self.blks = nn.Sequential()
        for i in range(num_layers):
            self.blks.add_module("block"+str(i),
                EncoderBlock(key_size, query_size, value_size, num_hiddens,
                             norm_shape, ffn_num_input, ffn_num_hiddens,
                             num_heads, dropout, use_bias))

    def forward(self, X, valid_lens, *args):
        # The positional encoding values lie between -1 and 1, so the
        # embeddings are scaled by the square root of the embedding dimension
        # before the positional encoding is added.
        X = self.pos_encoding(self.embedding(X) * math.sqrt(self.num_hiddens))
        # Keep the attention weights of every block for later inspection
        self.attention_weights = [None] * len(self.blks)
        for i, blk in enumerate(self.blks):
            X = blk(X, valid_lens)
            self.attention_weights[
                i] = blk.attention.attention.attention_weights
        return X

def box_corner_to_center(boxes):
    """Convert from (upper-left, lower-right) to (center, width, height)."""
    x1, y1, x2, y2 = boxes[:, 0], boxes[:, 1], boxes[:, 2], boxes[:, 3]
    cx = (x1 + x2) / 2
    cy = (y1 + y2) / 2
    w = x2 - x1
    h = y2 - y1
    boxes = torch.stack((cx, cy, w, h), dim=-1)
    return boxes

def box_center_to_corner(boxes):
    """Convert from (center, width, height) to (upper-left, lower-right)."""
    cx, cy, w, h = boxes[:, 0], boxes[:, 1], boxes[:, 2], boxes[:, 3]
    x1 = cx - 0.5 * w
    y1 = cy - 0.5 * h
    x2 = cx + 0.5 * w
    y2 = cy + 0.5 * h
    boxes = torch.stack((x1, y1, x2, y2), dim=-1)
    return boxes
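
The two box conversions are inverses of each other. A quick pure-Python check on a single box, using plain tuples instead of tensors, could look like the sketch below. The helper names and the sample coordinates are chosen only for this illustration.

```python
def corner_to_center(box):
    """(x1, y1, x2, y2) -> (cx, cy, w, h) for one box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1)


def center_to_corner(box):
    """(cx, cy, w, h) -> (x1, y1, x2, y2) for one box."""
    cx, cy, w, h = box
    return (cx - 0.5 * w, cy - 0.5 * h, cx + 0.5 * w, cy + 0.5 * h)


corner = (60.0, 45.0, 378.0, 516.0)   # arbitrary example box
center = corner_to_center(corner)
print(center)                          # (219.0, 280.5, 318.0, 471.0)
print(center_to_corner(center))        # (60.0, 45.0, 378.0, 516.0)
```

The tensor versions above do the same arithmetic column by column for a whole batch of boxes.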

def bbox_to_rect(bbox, color):
    # Convert a bounding box in (upper-left x, upper-left y, lower-right x,
    # lower-right y) format to the matplotlib format:
    # ((upper-left x, upper-left y), width, height)
    return d2l.plt.Rectangle(
        xy=(bbox[0], bbox[1]), width=bbox[2]-bbox[0], height=bbox[3]-bbox[1],
        fill=False, edgecolor=color, linewidth=2)

def train_batch_ch13(net, X, y, loss, trainer, devices):
    """Train on one mini-batch and return the summed loss and the number of correct predictions."""
    if isinstance(X, list):
        # Needed for BERT fine-tuning, where `X` is a list of tensors
        X = [x.to(devices[0]) for x in X]
    else:
        X = X.to(devices[0])
    y = y.to(devices[0])
    net.train()
    trainer.zero_grad()
    pred = net(X)
    l = loss(pred, y)
    l.sum().backward()
    trainer.step()
    train_loss_sum = l.sum()
    train_acc_sum = d2l.accuracy(pred, y)
    return train_loss_sum, train_acc_sum

def train_ch13(net, train_iter, test_iter, loss, trainer, num_epochs,
               devices=d2l.try_all_gpus()):
    """Train a model on one or more devices and plot the progress."""
    timer, num_batches = d2l.Timer(), len(train_iter)
    animator = d2l.Animator(xlabel='epoch', xlim=[1, num_epochs], ylim=[0, 1],
                            legend=['train loss', 'train acc', 'test acc'])
    net = nn.DataParallel(net, device_ids=devices).to(devices[0])
    for epoch in range(num_epochs):
        # Four accumulators: sum of training loss, number of correct
        # predictions, number of examples, number of label elements
        metric = d2l.Accumulator(4)
        for i, (features, labels) in enumerate(train_iter):
            timer.start()
            l, acc = train_batch_ch13(
                net, features, labels, loss, trainer, devices)
            metric.add(l, acc, labels.shape[0], labels.numel())
            timer.stop()
            # Plot about five points per epoch plus the last batch;
            # max(1, ...) avoids a modulo by zero with fewer than 5 batches
            if (i + 1) % max(1, num_batches // 5) == 0 or i == num_batches - 1:
                animator.add(epoch + (i + 1) / num_batches,
                             (metric[0] / metric[2], metric[1] / metric[3],
                              None))
        test_acc = d2l.evaluate_accuracy_gpu(net, test_iter)
        animator.add(epoch + 1, (None, None, test_acc))
    print(f'loss {metric[0] / metric[2]:.3f}, train acc '
          f'{metric[1] / metric[3]:.3f}, test acc {test_acc:.3f}')
    print(f'{metric[2] * num_epochs / timer.sum():.1f} examples/sec on '
          f'{str(devices)}')
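
The training loop plots a point roughly five times per epoch, plus once more at the last batch. The pure-Python sketch below lists which batch numbers get logged for a given loader length. The function `logging_steps` and its parameter `points_per_epoch` are hypothetical names for this note. The interval is clamped to at least 1, since a plain `num_batches // 5` would be 0 for loaders with fewer than five batches and the modulo would then raise `ZeroDivisionError`.

```python
def logging_steps(num_batches, points_per_epoch=5):
    """Return the 1-based batch numbers at which a point would be plotted."""
    # Clamp so that tiny loaders (fewer batches than points) still work.
    interval = max(1, num_batches // points_per_epoch)
    return [i + 1 for i in range(num_batches)
            if (i + 1) % interval == 0 or i == num_batches - 1]


print(logging_steps(28))  # [5, 10, 15, 20, 25, 28]
print(logging_steps(3))   # [1, 2, 3]
```

With the 1000-image demo dataset the number of batches per epoch is small, so it is worth checking this interval if you shrink the dataset or raise the batch size further.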