神经网络:卷积层、池化层、非线性激活函数

文章目录

-

-

- 前置:卷积操作

- 一、基本骨架nn.Module的使用(对其继承)

- 二、卷积层(Convolution Layers)

- 三、池化层(Pooling Layers)

-

- ceil mode/floor mode

- 四、非线性激活函数

-

前置:卷积操作

stride = 1

输出一个3维矩阵

stride = 2

卷积后输出一个2维矩阵

#卷积操作

import torch

import torch.nn.functional as F

#二维张量的输入,开始有多少个中括号就是几维张量

#此处input和kernel是2维张量,因此torch.Size([5,5])括号里是一个长度为2的列表

#表示一个5*5的矩阵

input = torch.tensor([[1,2,0,3,1],

[0,1,2,3,1],

[1,2,1,0,0],

[5,2,3,1,1],

[2,1,0,1,1]])

#卷积核

#表示一个3*3的矩阵

kernel = torch.tensor([[1,2,1],

[0,1,0],

[2,1,0]])

#输入矩阵和卷积核的尺寸

print(input.shape)

print(kernel.shape)

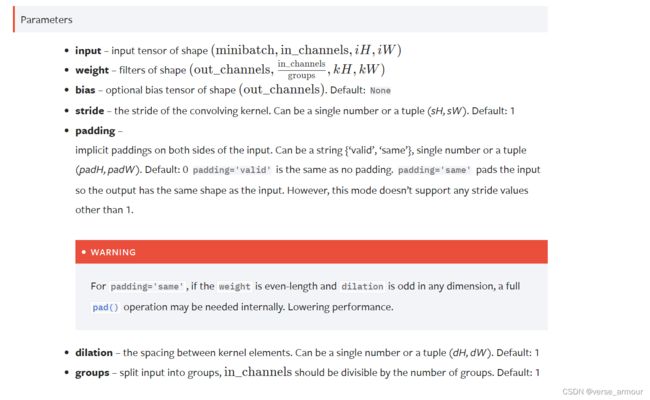

#torch.nn.functional.conv2d(input, weight, bias=None, stride=1, padding=0, dilation=1, groups=1) → Tensor

#input – input tensor of shape(minibatch,in_channels,iH,iW)

input = torch.reshape(input,(1,1,5,5))

kernel = torch.reshape(kernel,(1,1,3,3))

print(input.shape)

print(kernel.shape)

output = F.conv2d(input,kernel,stride=1)

print(output)#输出3维矩阵

output2 = F.conv2d(input,kernel,stride=2)

print(output2)#输出2维矩阵

output3 = F.conv2d(input,kernel,stride=1,padding=1)

print(output3)#padding=1是在输入矩阵边缘补一圈0的操作,保证了输入输出维度一致。

一、基本骨架nn.Module的使用(对其继承)

import torch

from torch import nn

#一、基本骨架nn.Module的使用

#定义神经网络的模板(对nn.module类的继承)

class zhiyuan(nn.Module):

def __init__(self) -> None:

super().__init__()

def forward(self,input):

output = input + 1

return output

#用zhiyuan模块创建出来的神经网络xuzhiyuan

xuzhiyuan = zhiyuan()#类的实例化

#定义输入x

x = torch.tensor(1.0)

#输出

output = xuzhiyuan(x)

print(output)

二、卷积层(Convolution Layers)

https://github.com/vdumoulin/conv_arithmetic/blob/master/README.md

github上的这个动画很好地阐释了torch.nn.functional.conv2d函数的参数padding和stride的含义。

参数kernel_size的值取多少都无所谓,因为训练神经网络的过程中就是对卷积核的参数进行不断调整。

两个最重要的参数:

- in_channels(输入图片的信道数)

- out_channels(输出图片的信道数)

如果输出的channel数是2,就会生成两个卷积核,对应生成两个矩阵,最终这两个矩阵的叠加作为输出。

代码示例:

import torch

import torchvision

from torch import nn

from torch.nn import Conv2d

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("../data",train=False,transform=torchvision.transforms.ToTensor()

,download=True)

dataloader = DataLoader(dataset,batch_size=64)

class Verse(nn.Module):

def __init__(self) -> None:

super().__init__()

self.conv1 = Conv2d(in_channels=3,out_channels=6,kernel_size=3,stride=1,padding=0)

def forward(self,x):

x = self.conv1(x)

return x

verse = Verse()

# print(verse)

writer = SummaryWriter("logs")

step = 0

for data in dataloader:

imgs,targets = data

output = verse(imgs)

print(imgs.shape)

print(output.shape)

#torch.Size([64, 3, 32, 32])

writer.add_images("input",imgs,step)

#torch.Size([64, 6, 30, 30])

output = torch.reshape(output,(-1,3,30,30))#转3通道,只有3通道的RGB可以可视化输出

writer.add_images("output",output,step)

step = step + 1

writer.close()

三、池化层(Pooling Layers)

ceil mode/floor mode

ceil:向上取整

floor:向下取整

是否保留右边不足一个池化核大小的数据取决于前面设定的参数ceil_mode

如果True,则向上取整,保留;

如果False,则向下取整,舍弃。

import torch

import torchvision

from torch import nn

from torch.nn import MaxPool2d

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("../data",train=True,transform=torchvision.transforms.ToTensor())

dataloader = DataLoader(dataset,batch_size=64)

# input = torch.tensor([[1,2,0,3,1],

# [0,1,2,3,1],

# [1,2,1,0,0],

# [5,2,3,1,1],

# [2,1,0,1,1]],dtype=torch.float32)

# input = torch.reshape(input,(-1,1,5,5))#一个batch_size(后期会调整),一个channel,每个channel是一个5*5的矩阵

# print(input.shape)

#继承父类(nn.Module)

class Verse(nn.Module):

def __init__(self) -> None:

super(Verse,self).__init__()

self.maxpool1 = MaxPool2d(kernel_size=3,ceil_mode=False)

def forward(self,input):

output = self.maxpool1(input)

return output

#实例化

verse = Verse()

writer = SummaryWriter("logs_maxpoold2")

step = 0

for data in dataloader:

imgs,targets = data

writer.add_images("input1",imgs,step)

writer.add_images("output1",verse(imgs),step)

step = step + 1

writer.close()

四、非线性激活函数

import torch

from torch import nn

from torch.nn import ReLU

input = torch.tensor([[1,0.5],

[-1,3]])

input = torch.reshape(input,(-1,1,2,2))

# print(input.shape)

class Verse(nn.Module):

def __init__(self):

super(Verse,self).__init__()

self.relu1 = ReLU()

def forward(self,input):

output = self.relu1(input)

return output

verse = Verse()

output = verse(input)

print(output)

#tensor([[[[1.0000, 0.5000],

# [0.0000, 3.0000]]]])