23、OpenMV使用tensorflow 1.15.0训练模型mobilenet_v1_1.0_224进行车辆识别

基本思想:希望使用OpenMV调用Tensorflow 的tflite进行目标检测

一、下载window的Openmv的开发工具,软件下载

https://singtown.com/openmv/安装软件之后,进行链接和运行测试即可

测试的画面

帧率还是蛮快的

46.6321

46.6132

46.6284

46.6431

46.6249

46.6392

46.6216

46.6356

46.6185

46.6321

46.6156

46.6289

46.6418

46.6258三、本想使用49、TensorFlow训练模型转成tfilte,进行Android端进行车辆检测、跟踪、部署_sxj731533730-CSDN博客 训练的v3模型生成的tflite直接挪过来,测试,发现跑不通,

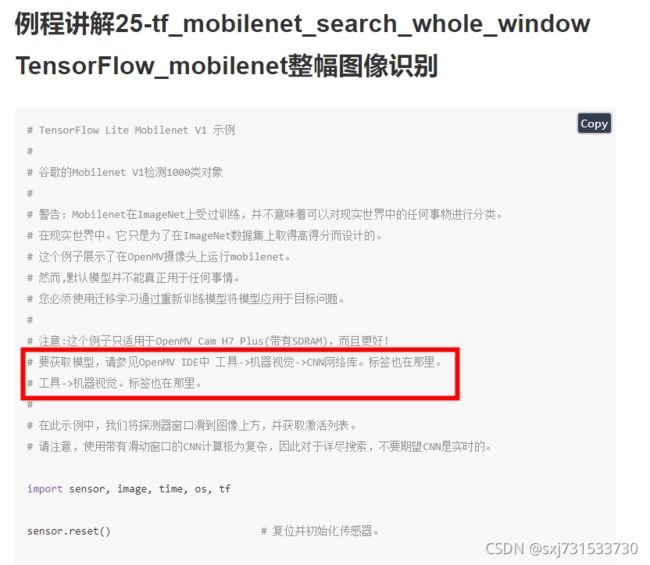

又想把18、R329开发板模拟仿真_sxj731533730-CSDN博客 训练的v2模型移植过来,发现也跑不动~~ 仔细查看官方的历程发现这几句注释tf_mobilenet_search_whole_window TensorFlow_mobilenet整幅图像识别 · OpenMV中文入门教程

发现里面全是mobileNet_v1的模型,因此我去tensroflow官网找了一个v1的模型加载openMV试了一下,看看可不可以运行

测试了https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet_v1.md 模型是可以运行的

四、开始训练我们自己的模型吧,没有使用它提供的在线训练工具 Edge Impulse~ 原因为啥,数据保密~~~

利用之前的生成的record模型,进行v1的训练,其中搭建环境可以具体具体参考下列两篇博客

18、R329开发板模拟仿真_sxj731533730-CSDN博客 49、TensorFlow训练模型转成tfilte,进行Android端进行车辆检测、跟踪、部署_sxj731533730-CSDN博客

首先检查一下数据集是否标注一一对应

import os

path1 = "G:\\B"

def file_name(file_dir):

jpg_list = []

json_list = []

for root, dirs, files in os.walk(file_dir):

for file in files:

if os.path.splitext(file)[1] == '.jpg':

jpg_list.append(os.path.splitext(file)[0])

elif os.path.splitext(file)[1] == '.json':

json_list.append(os.path.splitext(file)[0])

diff = set(json_list).difference(set(jpg_list))

print("picture ",len(jpg_list))

print(len(diff))

for name in diff:

print("no jpg", name + ".json")

os.remove(path1 + "/" + name + ".json")

diff2 = set(jpg_list).difference(set(json_list))

print(len(diff2))

for name in diff2:

print("no json", name + ".jpg")

os.remove(path1 + "/" + name + ".jpg")

return jpg_list, json_list

file_name(path1)测试结果 (1915 张图片) 按1915*80%算=1532张图片

"C:\Program Files\Python36\python.exe" F:/TFLite_detection_stream.py

picture 1915

0

5

no json 02a43a441f5c71ab9ff8ecab1f33ca49.jpg

no json e8a6241633597e1b7430e0cf5eb06222.jpg

no json 4d3de6244250e35355bd45d83d615379.jpg

no json 2009_000354.jpg

no json 01ab0c4e74e5cefbc25e78e8b2b4b30d.jpg

Process finished with exit code 0然后进行标签文文件对应生成处理

import os

import xml.etree.ElementTree as ET

import shutil

import io

import json

dir = "G:\\B"

for file in os.listdir(dir):

if file.endswith(".json"):

file_json = io.open(os.path.join(dir, file), 'r', encoding='utf-8')

json_data = file_json.read()

data = json.loads(json_data)

m_filename = data['shapes'][0]['label']

newDir = os.path.join(dir, m_filename)

if not os.path.isdir(newDir):

os.mkdir(newDir)

(filename, extension) = os.path.splitext(file)

if not os.path.isfile(os.path.join(newDir, ".".join([filename, "jpg"]))):

shutil.copy(os.path.join(dir, ".".join([filename, "jpg"])), newDir)

elif file.endswith(".xml"):

# with open(os.path.join(root,file), 'r') as f:

tree = ET.parse(os.path.join(dir, file))

root = tree.getroot()

for obj in root.iter('object'): # 多个元素

cls = obj.find('name').text

newDir = os.path.join(dir, cls)

print(newDir)

if not os.path.isdir(newDir):

os.mkdir(newDir)

(filename, extension) = os.path.splitext(file)

if not os.path.isfile(os.path.join(newDir, ".".join([filename, "jpg"]))):

shutil.copy(os.path.join(dir, ".".join([filename, "jpg"])), newDir)

elif file.endswith(".jpg"):

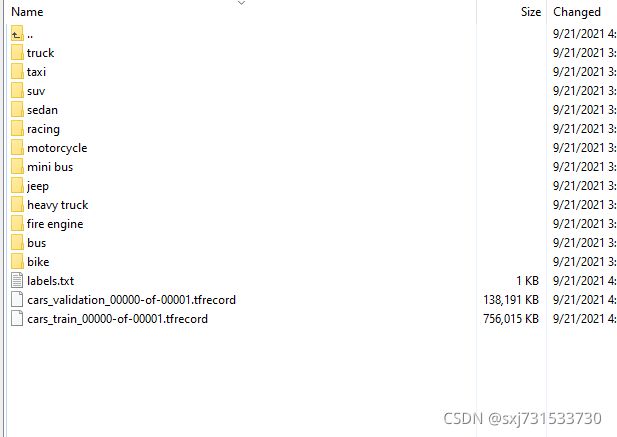

print(os.path.join(dir, file))生成结果(外侧的图片和标注文件可以移走了)

定义描述文件,其实和我之前玩的R329训练方法是一样的18、R329开发板模拟仿真_sxj731533730-CSDN博客

ubuntuu@ubuntu:~/ubuntu/models$ sudo vim research/slim/datasets/car.pycar.py文件内容

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import os

import tensorflow.compat.v1 as tf

import tf_slim as slim

from datasets import dataset_utils

_FILE_PATTERN = 'cars_%s_*.tfrecord'

SPLITS_TO_SIZES = {'train': int(1915*0.8), 'validation': int(1915*0.2)}

_NUM_CLASSES = 12

_ITEMS_TO_DESCRIPTIONS = {

'image': 'A color image of varying size.',

'label': 'A single integer between 0 and 4',

}

def get_split(split_name, dataset_dir, file_pattern=None, reader=None):

"""Gets a dataset tuple with instructions for reading flowers.

Args:

split_name: A train/validation split name.

dataset_dir: The base directory of the dataset sources.

file_pattern: The file pattern to use when matching the dataset sources.

It is assumed that the pattern contains a '%s' string so that the split

name can be inserted.

reader: The TensorFlow reader type.

Returns:

A `Dataset` namedtuple.

Raises:

ValueError: if `split_name` is not a valid train/validation split.

"""

if split_name not in SPLITS_TO_SIZES:

raise ValueError('split name %s was not recognized.' % split_name)

if not file_pattern:

file_pattern = _FILE_PATTERN

file_pattern = os.path.join(dataset_dir, file_pattern % split_name)

# Allowing None in the signature so that dataset_factory can use the default.

if reader is None:

reader = tf.TFRecordReader

keys_to_features = {

'image/encoded': tf.FixedLenFeature((), tf.string, default_value=''),

'image/format': tf.FixedLenFeature((), tf.string, default_value='png'),

'image/class/label': tf.FixedLenFeature(

[], tf.int64, default_value=tf.zeros([], dtype=tf.int64)),

}

items_to_handlers = {

'image': slim.tfexample_decoder.Image(),

'label': slim.tfexample_decoder.Tensor('image/class/label'),

}

decoder = slim.tfexample_decoder.TFExampleDecoder(

keys_to_features, items_to_handlers)

labels_to_names = None

if dataset_utils.has_labels(dataset_dir):

labels_to_names = dataset_utils.read_label_file(dataset_dir)

return slim.dataset.Dataset(

data_sources=file_pattern,

reader=reader,

decoder=decoder,

num_samples=SPLITS_TO_SIZES[split_name],

items_to_descriptions=_ITEMS_TO_DESCRIPTIONS,

num_classes=_NUM_CLASSES,

labels_to_names=labels_to_names)同时向同在一个目录下的dataset_factory.py填入对应dataset_map

from datasets import car

....

datasets_map = {

'cifar10': cifar10,

'flowers': flowers,

'imagenet': imagenet,

'mnist': mnist,

'visualwakewords': visualwakewords,

'car':car,

}

五、将处理过的数据集使用winSCAP拖到

/home/ubuntu/models/research/slim/datasets/car修改一下

sudo vim /home/ubuntu/models/research/slim/datasets/convert_dataset_car.py文件内容

# Copyright 2016 The TensorFlow Authors. All Rights Reserved.

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import math

import os

import random

import sys

from six.moves import range

from six.moves import zip

import tensorflow.compat.v1 as tf

from datasets import dataset_utils

# The URL where the Flowers data can be downloaded.

# The number of images in the validation set.

_NUM_VALIDATION = 350

# Seed for repeatability.

_RANDOM_SEED = 0

# The number of shards per dataset split.

_NUM_SHARDS = 1

class ImageReader(object):

"""Helper class that provides TensorFlow image coding utilities."""

def __init__(self):

# Initializes function that decodes RGB JPEG data.

self._decode_jpeg_data = tf.placeholder(dtype=tf.string)

self._decode_jpeg = tf.image.decode_jpeg(self._decode_jpeg_data, channels=3)

def read_image_dims(self, sess, image_data):

image = self.decode_jpeg(sess, image_data)

return image.shape[0], image.shape[1]

def decode_jpeg(self, sess, image_data):

image = sess.run(self._decode_jpeg,

feed_dict={self._decode_jpeg_data: image_data})

assert len(image.shape) == 3

assert image.shape[2] == 3

return image

def _get_filenames_and_classes(dataset_dir):

"""Returns a list of filenames and inferred class names.

Args:

dataset_dir: A directory containing a set of subdirectories representing

class names. Each subdirectory should contain PNG or JPG encoded images.

Returns:

A list of image file paths, relative to `dataset_dir` and the list of

subdirectories, representing class names.

"""

flower_root = os.path.join(dataset_dir)

directories = []

class_names = []

for filename in os.listdir(flower_root):

path = os.path.join(flower_root, filename)

if os.path.isdir(path):

directories.append(path)

class_names.append(filename)

photo_filenames = []

for directory in directories:

for filename in os.listdir(directory):

path = os.path.join(directory, filename)

photo_filenames.append(path)

return photo_filenames, sorted(class_names)

def _get_dataset_filename(dataset_dir, split_name, shard_id):

output_filename = 'cars_%s_%05d-of-%05d.tfrecord' % (

split_name, shard_id, _NUM_SHARDS)

return os.path.join(dataset_dir, output_filename)

def _convert_dataset(split_name, filenames, class_names_to_ids, dataset_dir):

"""Converts the given filenames to a TFRecord dataset.

Args:

split_name: The name of the dataset, either 'train' or 'validation'.

filenames: A list of absolute paths to png or jpg images.

class_names_to_ids: A dictionary from class names (strings) to ids

(integers).

dataset_dir: The directory where the converted datasets are stored.

"""

assert split_name in ['train', 'validation']

num_per_shard = int(math.ceil(len(filenames) / float(_NUM_SHARDS)))

with tf.Graph().as_default():

image_reader = ImageReader()

with tf.Session('') as sess:

for shard_id in range(_NUM_SHARDS):

output_filename = _get_dataset_filename(

dataset_dir, split_name, shard_id)

with tf.python_io.TFRecordWriter(output_filename) as tfrecord_writer:

start_ndx = shard_id * num_per_shard

end_ndx = min((shard_id+1) * num_per_shard, len(filenames))

for i in range(start_ndx, end_ndx):

sys.stdout.write('\r>> Converting image %d/%d shard %d' % (

i+1, len(filenames), shard_id))

sys.stdout.flush()

# Read the filename:

image_data = tf.gfile.GFile(filenames[i], 'rb').read()

height, width = image_reader.read_image_dims(sess, image_data)

class_name = os.path.basename(os.path.dirname(filenames[i]))

class_id = class_names_to_ids[class_name]

example = dataset_utils.image_to_tfexample(

image_data, b'jpg', height, width, class_id)

tfrecord_writer.write(example.SerializeToString())

sys.stdout.write('\n')

sys.stdout.flush()

def _dataset_exists(dataset_dir):

for split_name in ['train', 'validation']:

for shard_id in range(_NUM_SHARDS):

output_filename = _get_dataset_filename(

dataset_dir, split_name, shard_id)

if not tf.gfile.Exists(output_filename):

return False

return True

def run(dataset_dir):

"""Runs the download and conversion operation.

Args:

dataset_dir: The dataset directory where the dataset is stored.

"""

if not tf.gfile.Exists(dataset_dir):

tf.gfile.MakeDirs(dataset_dir)

if _dataset_exists(dataset_dir):

print('Dataset files already exist. Exiting without re-creating them.')

return

photo_filenames, class_names = _get_filenames_and_classes(dataset_dir)

class_names_to_ids = dict(

list(zip(class_names, list(range(len(class_names))))))

# Divide into train and test:

random.seed(_RANDOM_SEED)

random.shuffle(photo_filenames)

training_filenames = photo_filenames[_NUM_VALIDATION:]

validation_filenames = photo_filenames[:_NUM_VALIDATION]

# First, convert the training and validation sets.

_convert_dataset('train', training_filenames, class_names_to_ids,

dataset_dir)

_convert_dataset('validation', validation_filenames, class_names_to_ids,

dataset_dir)

# Finally, write the labels file:

labels_to_class_names = dict(

list(zip(list(range(len(class_names))), class_names)))

dataset_utils.write_label_file(labels_to_class_names, dataset_dir)

print('\nFinished converting the car dataset!')

dir="/home/ubuntu/models/research/slim/datasets/car"

run(dir)执行命令

ubuntu@ubuntu:~/models$ python3 research/slim/datasets/convert_dataset_car.py生成的目录结果

六、将下载 https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet_v1.md的mobilenet_v1_1.0_224 文件夹移动到文件夹

/home/ubuntu/models/research/slim/datasets训练命令train

ubutnu@ubuntu:~/models$ python3 research/slim/train_image_classifier.py --train_dir=./train_dir --dataset_dir=research/slim/datasets/car --dataset_name=car --dataset_spilt_name=train --model_name=mobilenet_v1 --train_image_size=224 --checkpoint_path=research/slim/datasets/mobilenet_v1_1.0_224/mobilenet_v1_1.0_224.ckpt --max_number_of_steps=30000 --checkpoint_exclude_scopes=MobilenetV1/Logits训练完成

I0921 17:27:12.002618 140244355847936 learning.py:512] global step 30000: loss = 0.0850 (0.183 sec/step)

INFO:tensorflow:Stopping Training.

I0921 17:27:12.012650 140244355847936 learning.py:769] Stopping Training.

INFO:tensorflow:Finished training! Saving model to disk.

I0921 17:27:12.013312 140244355847936 learning.py:777] Finished training! Saving model to disk.

/home/ps/anaconda3/envs/tensorflow/lib/python3.6/site-packages/tensorflow_core/python/summary/writer/writer.py:386: UserWarning: Attempting to use a closed FileWriter. The operation will be a noop unless the FileWriter is explicitly reopened.

warnings.warn("Attempting to use a closed FileWriter. "验证命令vaild

ubuntu@ubuntu:~/models$ python3 research/slim/eval_image_classifier.py --alsologtostderr ---checkpoint=train_dir/model.ckpt-30000 --dataset_dir=research/slim/datasets/car --dataset_name=car --dataset_split_name=validation --model_name=mobilenet_v1 --train_image_size=224 --eval_dir=train_dir/eval验证结果

W0922 08:42:00.008753 139924728133376 deprecation.py:323] From /home/ps/anaconda3/envs/tensorflow/lib/python3.6/site-packages/tensorflow_core/python/training/monitored_session.py:882: start_queue_runners (from tensorflow.python.training.queue_runner_impl) is deprecated and will be removed in a future version.

Instructions for updating:

To construct input pipelines, use the `tf.data` module.

2021-09-22 08:42:01.020176: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcudnn.so.7

2021-09-22 08:42:02.386257: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcublas.so.10.0

INFO:tensorflow:Evaluation [1/4]

I0922 08:42:03.114004 139924728133376 evaluation.py:167] Evaluation [1/4]

INFO:tensorflow:Evaluation [2/4]

I0922 08:42:03.205864 139924728133376 evaluation.py:167] Evaluation [2/4]

INFO:tensorflow:Evaluation [3/4]

I0922 08:42:03.469731 139924728133376 evaluation.py:167] Evaluation [3/4]

INFO:tensorflow:Evaluation [4/4]

I0922 08:42:03.855772 139924728133376 evaluation.py:167] Evaluation [4/4]

eval/Accuracy[0.825]

eval/Recall_5[0.7175]

INFO:tensorflow:Finished evaluation at 2021-09-22-08:42:04

I0922 08:42:04.115464 139924728133376 evaluation.py:275] Finished evaluation at 2021-09-22-08:42:04七、生成pb的过程先要生成前导图,然后再给前导图导入参数即可

(1)、创建推理图 需要下载 GitHub - tensorflow/tensorflow: An Open Source Machine Learning Framework for Everyone 代码

ubuntu@ubuntu:~/models$ python3 research/slim/export_inference_graph.py alsologtostderr --model_name=mobilenet_v1 --output_file=./inference_graph_mobilenet.pb --dataset_name=car生成模型

ubuntu@ubuntu:~/models$ python3 ../tensorflow/tensorflow/python/tools/freeze_graph.py --input_graph=./inference_graph_mobilenet.pb --input_binary=true --input_checkpoint=./train_dir/model.ckpt-30000 --output_graph=./frozen_mobilenet.pb --output_node_names=MobilenetV1/Predictions/Reshape_1优化模型

ubuntu@ubuntu:~/models$ python3 ../tensorflow/tensorflow/python/tools/optimize_for_inference.py --input=./frozen_mobilenet.pb --output=./opt_frozen_mobilenet.pb --input_names=input --output_names=MobilenetV1/Predictions/Reshape_1然后使用脚本生成tflite模型

import tensorflow as tf

# 需要配置

in_path = r"frozen_mobilenet.pb"

# 模型输入节点

input_tensor_name = ["input"]

input_tensor_shape = {"input": [1, 224, 224, 3]}

# 模型输出节点

classes_tensor_name = ["MobilenetV1/Predictions/Reshape_1"]

converter = tf.lite.TFLiteConverter.from_frozen_graph(in_path,

input_tensor_name, classes_tensor_name,

input_tensor_shape)

converter.allow_custom_ops = True

converter.post_training_quantize = True

tflite_model = converter.convert()

open("output_detect.tflite", "wb").write(tflite_model)

print("done")测试openMV

我数据集图片是网上爬虫爬的 不是太好 ,有点慢 待调查原因

附录:训练一个轻量级模型 mobilenet_v1_0.25_128

ubuntu@ubuntu:~/models$ python3 research/slim/train_image_classifier.py --train_dir=./train_dir --dataset_dir=research/slim/datasets/car --dataset_name=car --dataset_spilt_name=train --model_name=mobilenet_v1 --train_image_size=128 --max_number_of_steps=30000 --checkpoint_exclude_scopes=MobilenetV1/Logits生成模型 优化模型 生成tflite 命令参数一样