OpenCV-Based Exam Paper Detection and Recognition

If you have an image processing or image recognition task, feel free to comment below or message the author!

Demo video:

20211212

Main window:

After selecting an image:

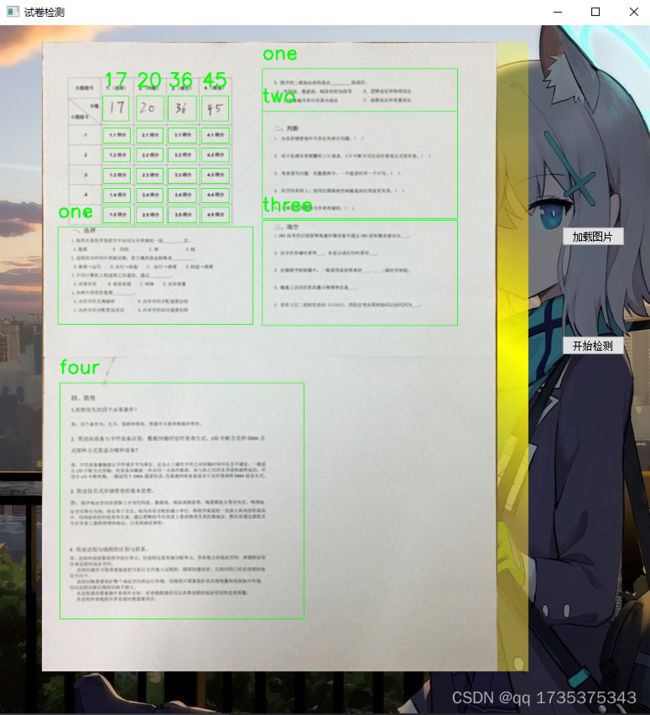

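After recognition:

Once recognition finishes, the program automatically crops each question and saves the crops to separate folders. The scores are saved separately to a txt file.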
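
The score file described above can be sketched as follows. This is a minimal illustration, assuming each section score arrives as a digit string, an empty string, or `None` when nothing was recognized; `total_score` and `format_score_line` are hypothetical helper names, not functions from the project.

```python
from typing import Iterable, Optional


def total_score(parts: Iterable[Optional[str]]) -> int:
    """Sum the section scores, skipping entries that are not valid integers."""
    total = 0
    for part in parts:
        try:
            total += int(part)
        except (TypeError, ValueError):
            # None or '' means no digit was recognized in that score box.
            continue
    return total


def format_score_line(parts: Iterable[Optional[str]]) -> str:
    """Build one line of the score file: the section scores, then the total."""
    parts = list(parts)
    fields = [str(p) for p in parts] + [str(total_score(parts))]
    return " ".join(fields) + "\n"
```

Appending `format_score_line([...])` to a text file opened in `'a'` mode gives one line per processed sheet.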

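Handwritten digit dataset:

For the handwritten digit recognition code, I recommend not using the MNIST handwritten digit dataset directly. In my own experience, a network trained on it could not recognize the digits I wrote myself. So I had no choice but to build my own handwritten dataset, which is actually quite simple. The dataset looks like this:

The rest look much the same, so I won't show them all. Note that only the digits in each image should be black, which makes them easy to extract. If you write a digit wrong, use an image editor to paint the mistake white, as in the image above.

Handwritten digit recognition code: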
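
Why black-only digits are easy to extract can be shown with a small NumPy sketch. It assumes an 8-bit grayscale image and borrows the threshold of 150 used in get_data.py; `ink_mask` and `erase_region` are hypothetical names used only for illustration.

```python
import numpy as np


def ink_mask(gray: np.ndarray, threshold: int = 150) -> np.ndarray:
    """Return a uint8 mask that is 255 on dark (ink) pixels and 0 on light paper."""
    return np.where(gray > threshold, 0, 255).astype(np.uint8)


def erase_region(gray: np.ndarray, top: int, bottom: int, left: int, right: int) -> np.ndarray:
    """Paint a rectangle white, like fixing a mistake in an image editor."""
    out = gray.copy()
    out[top:bottom, left:right] = 255
    return out


# Tiny 2x2 example: two dark pixels (30 and 149) and two light ones.
sample = np.array([[255, 30], [200, 149]], dtype=np.uint8)
mask = ink_mask(sample)
cleaned_mask = ink_mask(erase_region(sample, 0, 1, 1, 2))
```

Anything painted white falls above the threshold and drops out of the mask, so it never becomes a contour.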

    import tensorflow as tf
    import cv2 as cv
    import numpy as np
    from get_data import *  # provides x_train and y_train

    # Small fully connected classifier for the 40x40 digit images
    model = tf.keras.models.Sequential([
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dense(10, activation='softmax')
    ])

    model.compile(optimizer='adam',
                  loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
                  metrics=['sparse_categorical_accuracy'])

    model.fit(x_train, y_train, batch_size=32, epochs=10, validation_split=0.1, validation_freq=1)

    model.save('mode_2.h5')

Simple, isn't it? Note the fourth line:

    from get_data import *

The image processing is done in get_data.py; its code is below.

get_data.py

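    import numpy as np
    import cv2 as cv

    x_train = []
    y_train = []

    # Read data/0.jpg ... data/9.jpg; the file name is the label
    for aa in range(10):
        src = cv.imread('data/{}.jpg'.format(aa))
        gray = cv.cvtColor(src, cv.COLOR_BGR2GRAY)
        # Binarize, then invert so the dark strokes become white (255) on black
        thred = np.where(gray > 150, 255, 0).astype('uint8')
        thred = 255 - thred
        # Morphological closing to fill small gaps in the strokes
        k = np.ones((3, 3), np.uint8)
        # thred = cv.dilate(thred, k)
        thred = cv.morphologyEx(thred, cv.MORPH_CLOSE, k)
        # With OpenCV 4.x, element [0] of the result is the contour list
        cnts = cv.findContours(thred, cv.RETR_EXTERNAL, cv.CHAIN_APPROX_SIMPLE)[0]
        print(len(cnts))
        for i in cnts:
            area = cv.contourArea(i)
            # Minimum contour area; the digit 1 gets a lower threshold
            if aa != 1:
                b = 20
            else:
                b = 15
            if area >= b:
                x, y, w, h = cv.boundingRect(i)
                cv.rectangle(src, (x, y), (x + w, y + h), (0, 0, 255), 2)
                lkuo = thred[y:y + h, x:x + w]
                # Scale the longer side to 40 px, keeping the aspect ratio
                da = max(h, w)
                rate = da / 40
                # max(1, ...) guards against a zero-sized target for very thin contours
                ro = cv.resize(lkuo, (max(1, int(w / rate)), max(1, int(h / rate))))
                h, w = ro.shape
                # Pad to a 43x43 square with black, then resize to 40x40
                t, b = int((43 - h) / 2), 43 - h - int((43 - h) / 2)
                l, r = int((43 - w) / 2), 43 - w - int((43 - w) / 2)
                ro = cv.copyMakeBorder(ro, t, b, l, r, cv.BORDER_CONSTANT, value=0)
                ro = cv.resize(ro, (40, 40))
                ro = np.where(ro > 0, 255, 0).astype('float32')
                ro = ro / 255
                x_train.append(ro)
                y_train.append(aa)

    x_train = np.array(x_train).astype('float32')
    y_train = np.array(y_train).astype('float32')
    y_train = np.reshape(y_train, (y_train.shape[0], 1))

    # Reset the same seed before each shuffle so images and labels stay aligned
    np.random.seed(1)
    np.random.shuffle(x_train)
    np.random.seed(1)
    np.random.shuffle(y_train)
    print(y_train)

    if __name__ == '__main__':
        pass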
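
get_data.py keeps images and labels aligned by resetting the random seed before each shuffle. An alternative sketch, not the author's method, is to draw a single permutation and index both arrays with it. `shuffle_together` is a hypothetical helper name, and the use of `np.random.default_rng` is an assumption about the available NumPy version (1.17 or later).

```python
import numpy as np


def shuffle_together(x: np.ndarray, y: np.ndarray, seed: int = 1):
    """Shuffle samples and labels with one shared permutation of the first axis."""
    if len(x) != len(y):
        raise ValueError("x and y must contain the same number of samples")
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(x))
    return x[order], y[order]
```

Because both arrays are indexed by the same `order`, the pairing cannot drift even if the two arrays have different shapes or dtypes.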

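That completes the handwritten digit recognition part. From here on we can simply load the trained network to recognize digits. Below is the exam paper detection code: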

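    import time
    import cv2 as cv
    import numpy as np
    from tensorflow.keras.models import load_model
    import os

    # Load the trained digit classifier. The training script above saves
    # 'mode_2.h5', so make sure this file name matches your saved model.
    model = load_model('mode.h5')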

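    def zb(img, a):
        """Perspective-correct img using the four corner points in a (shape (4, 2))."""
        # Sort the points by x: the first two are the left edge, the last two the right edge
        idx = np.argsort(a, axis=0)
        aa = a[idx[:, 0]]
        # Within each pair, sort by y (top first)
        idx12 = np.argsort(aa[:2], axis=0)
        idx34 = np.argsort(aa[2:], axis=0)
        aa[:2] = aa[:2][idx12[:, 1]]
        aa[2:] = aa[2:][idx34[:, 1]]
        p1 = aa[0]  # top-left
        p2 = aa[1]  # bottom-left
        p3 = aa[3]  # bottom-right
        p4 = aa[2]  # top-right
        # Output size: the longer of each pair of opposite sides
        w = max(np.sqrt(np.sum(np.square(p4 - p1))), np.sqrt(np.sum(np.square(p3 - p2))))
        h = max(np.sqrt(np.sum(np.square(p2 - p1))), np.sqrt(np.sum(np.square(p3 - p4))))
        dst = np.array([[0, 0],
                        [w - 1, 0],
                        [w - 1, h - 1],
                        [0, h - 1]], dtype='float32')
        # Source corners in the same order as dst: TL, TR, BR, BL
        xx = [p1, p4, p3, p2]
        aa = np.array(xx).astype('float32')
        M = cv.getPerspectiveTransform(aa, dst)
        warped = cv.warpPerspective(img, M, (int(w), int(h)))
        return warped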

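    def draw(img, x1, y1, x2, y2, text=None, dr=True):
        """Optionally draw a labeled box on img; return the same region cropped from the clean copy."""
        if dr:
            cv.rectangle(img, (x1, y1), (x2, y2), (0, 255, 0), 1)
            if text:
                cv.putText(img, text, (x1, y1 - 15), cv.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 2)
        # warped_copy is a global set in mmain(): the warped sheet without any drawings
        src = warped_copy[y1:y2, x1:x2]
        return src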

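    def chang_pic(ro):
        """Normalize one digit crop to 40x40 (same steps as get_data.py) and classify it."""
        h, w = ro.shape
        da = max(h, w)
        rate = da / 40
        # max(1, ...) guards against a zero-sized target for very thin contours
        ro = cv.resize(ro, (max(1, int(w / rate)), max(1, int(h / rate))))
        h, w = ro.shape
        t, b = int((43 - h) / 2), 43 - h - int((43 - h) / 2)
        l, r = int((43 - w) / 2), 43 - w - int((43 - w) / 2)
        ro = cv.copyMakeBorder(ro, t, b, l, r, cv.BORDER_CONSTANT, value=0)
        ro = cv.resize(ro, (40, 40))
        # cv.imshow('ro1', ro)
        ro = np.where(ro > 0, 255, 0).astype('float32')
        ro = ro / 255
        print('ro=', ro.shape)
        ro = np.reshape(ro, (1, 40, 40))
        pre = model.predict(ro)[0]
        true = np.argmax(pre)
        return str(true)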

    def shuzi(imgs):
        """Recognize the digits in one score box; return them as a string (None if nothing is found)."""
        imgs = imgs[4:-4, 4:-4]  # trim 4 px on each side (presumably the box border)
        cc = max(imgs.shape[0], imgs.shape[1])
        imgs = cv.resize(imgs, (cc, cc))
        imgs = cv.cvtColor(imgs, cv.COLOR_BGR2GRAY)
        # cv.imshow('gray', imgs)
        thred = np.where(imgs > 215, 0, 255).astype('uint8')
        # cv.imshow('a11', thred)
        contours, hierarchy = cv.findContours(thred, cv.RETR_EXTERNAL, cv.CHAIN_APPROX_NONE)
        lis = []
        if len(contours) == 0:
            return None
        # Keep contours that are large enough and remember their x position
        contours_2 = []
        for i in contours:
            x, y, w, h = cv.boundingRect(i)
            area = cv.contourArea(i)
            if area > 10:
                lis.append(x)
                contours_2.append(i)
                print(area)
        # Sort the contours left to right so the digits are read in order
        lis = np.array(lis)
        idx = np.argsort(lis)
        print(idx)
        contours_3 = []
        for ii in idx:
            contours_3.append(contours_2[ii])
        ll = ''
        for j in contours_3:
            x1, y1, w1, h1 = cv.boundingRect(j)
            lunkuo = thred[y1:y1 + h1, x1:x1 + w1]
            # cv.imshow('lh45', lunkuo)
            number = chang_pic(lunkuo)
            ll = ll + number
        return ll

    # Default input file; its base name is also used when naming the saved crops
    nam = '3.jpg'
    image = cv.imread('./pic/' + nam)

    def mmain(image):
        global warped_copy
        a = 1000
        ratio = image.shape[0] / a
        orig = image.copy()
        image = cv.resize(image, (int(image.shape[1] / ratio), a))
        gray = cv.cvtColor(image, cv.COLOR_BGR2GRAY)
        gray = cv.GaussianBlur(gray, (5, 5), 0)
        # Edge detection
        edged = cv.Canny(gray, 75, 200)
        cv.imwrite('lk.jpg', edged)
        # Contour detection: keep only the contour with the largest area
        cnts = cv.findContours(edged.copy(), cv.RETR_LIST, cv.CHAIN_APPROX_SIMPLE)[0]
        cnts = sorted(cnts, key=cv.contourArea, reverse=True)[0]
        # Approximate the contour with a polygon
        # cnts = np.reshape(cnts, (cnts.shape[0], cnts.shape[2]))
        # print(cnts.shape)
        peri = cv.arcLength(cnts, True)
        approx = cv.approxPolyDP(cnts, 0.02 * peri, True)
        if len(approx) == 4:
            screenCnt = approx
            cv.drawContours(image, [screenCnt], -1, (0, 255, 0), 2)
            # Map the corners back to the original resolution and rectify the sheet
            warped = zb(orig, screenCnt.reshape(4, 2) * ratio)
            warped = cv.resize(warped, (724, 1000))
            warped_copy = warped.copy()
            # cv.imwrite('pic/sjuan.jpg', warped)
            print(warped.shape)
            score1 = draw(warped, 96, 85, 138, 125)
            score2 = draw(warped, 150, 85, 193, 125)
            score3 = draw(warped, 200, 85, 245, 125)
            score4 = draw(warped, 254, 85, 296, 125)
            # Recognize the scores
            num1 = shuzi(score1)
            print('num1=', num1)
            num2 = shuzi(score2)
            print(num2)
            num3 = shuzi(score3)
            print('num3=', num3)
            num4 = shuzi(score4)
            print('num4=', num4)
            s1 = draw(warped, 96, 85, 138, 125, text=num1)
            s2 = draw(warped, 150, 85, 193, 125, text=num2)
            s3 = draw(warped, 200, 85, 245, 125, text=num3)
            s4 = draw(warped, 254, 85, 296, 125, text=num4)
            dati1_1 = draw(warped, 25, 293, 335, 448, text='one')
            dati1_2 = draw(warped, 350, 42, 660, 110, text='one')
            dati1 = np.concatenate((dati1_1, dati1_2), axis=0)
            dati2 = draw(warped, 350, 110, 660, 280, text='two')
            dati3 = draw(warped, 350, 283, 660, 450, text='three')
            dati4 = draw(warped, 28, 541, 416, 916, text='four')
            da1_1 = draw(warped_copy, 37, 294, 323, 336, dr=False)
            da1_2 = draw(warped_copy, 37, 336, 323, 368, dr=False)
            da1_3 = draw(warped_copy, 37, 368, 323, 390, dr=False)
            da1_4 = draw(warped_copy, 37, 390, 323, 442, dr=False)
            da1_5 = draw(warped_copy, 353, 46, 651, 111, dr=False)
            ddaa1 = [da1_1, da1_2, da1_3, da1_4, da1_5]
            ddaa1_name = ['da1_1', 'da1_2', 'da1_3', 'da1_4', 'da1_5']
            da2_1 = draw(warped_copy, 353, 120, 651, 166, dr=False)
            da2_2 = draw(warped_copy, 353, 166, 651, 192, dr=False)
            da2_3 = draw(warped_copy, 353, 195, 651, 218, dr=False)
            da2_4 = draw(warped_copy, 353, 222, 651, 252, dr=False)
            da2_5 = draw(warped_copy, 353, 250, 651, 282, dr=False)
            ddaa2 = [da2_1, da2_2, da2_3, da2_4, da2_5]
            ddaa2_name = ['da2_1', 'da2_2', 'da2_3', 'da2_4', 'da2_5']
            da3_1 = draw(warped_copy, 353, 281, 634, 322, dr=False)
            da3_2 = draw(warped_copy, 353, 322, 634, 346, dr=False)
            da3_3 = draw(warped_copy, 353, 346, 634, 378, dr=False)
            da3_4 = draw(warped_copy, 353, 378, 634, 400, dr=False)
            da3_5 = draw(warped_copy, 353, 400, 634, 442, dr=False)
            ddaa3 = [da3_1, da3_2, da3_3, da3_4, da3_5]
            ddaa3_name = ['da3_1', 'da3_2', 'da3_3', 'da3_4', 'da3_5']
            da4_1 = draw(warped_copy, 35, 551, 381, 617, dr=False)
            da4_2 = draw(warped_copy, 35, 617, 381, 700, dr=False)
            da4_3 = draw(warped_copy, 35, 700, 381, 786, dr=False)
            da4_4 = draw(warped_copy, 35, 786, 381, 912, dr=False)
            ddaa4 = [da4_1, da4_2, da4_3, da4_4]
            ddaa4_name = ['da4_1', 'da4_2', 'da4_3', 'da4_4']
            t1_1 = draw(warped, 97, 136, 140, 160)
            t1_2 = draw(warped, 97, 168, 140, 190)
            t1_3 = draw(warped, 97, 200, 140, 224)
            t1_4 = draw(warped, 97, 232, 140, 255)
            t1_5 = draw(warped, 97, 262, 140, 287)
            t2_1 = draw(warped, 150, 136, 192, 160)
            t2_2 = draw(warped, 150, 168, 192, 190)
            t2_3 = draw(warped, 150, 200, 192, 224)
            t2_4 = draw(warped, 150, 232, 192, 255)
            t2_5 = draw(warped, 150, 262, 192, 287)
            t3_1 = draw(warped, 200, 136, 245, 160)
            t3_2 = draw(warped, 200, 168, 245, 190)
            t3_3 = draw(warped, 200, 200, 245, 224)
            t3_4 = draw(warped, 200, 232, 245, 255)
            t3_5 = draw(warped, 200, 262, 245, 287)
            t4_1 = draw(warped, 253, 136, 297, 160)
            t4_2 = draw(warped, 253, 168, 297, 190)
            t4_3 = draw(warped, 253, 200, 297, 224)
            t4_4 = draw(warped, 253, 232, 297, 255)
            t4_5 = draw(warped, 253, 262, 297, 287)
            fen = [t1_1, t1_2, t1_3, t1_4, t1_5,
                   t2_1, t2_2, t2_3, t2_4, t2_5,
                   t3_1, t3_2, t3_3, t3_4, t3_5,
                   t4_1, t4_2, t4_3, t4_4, t4_5]
            name = ['t1_1', 't1_2', 't1_3', 't1_4', 't1_5',
                    't2_1', 't2_2', 't2_3', 't2_4', 't2_5',
                    't3_1', 't3_2', 't3_3', 't3_4', 't3_5',
                    't4_1', 't4_2', 't4_3', 't4_4', 't4_5']
            # Total score; boxes where nothing was recognized (None or '') are skipped
            ss = [num1, num2, num3, num4]
            fensu = 0
            for iii in ss:
                try:
                    fensu += int(iii)
                except (TypeError, ValueError):
                    pass
            print(fensu)
            # Create the output folders
            for folder in ("timu", "timu/score", "timu/one", "timu/two", "timu/three", "timu/four"):
                os.makedirs(folder, exist_ok=True)
            na = nam.split('.')[0]
            # Save the answer crops of each major question
            for sce, wq in zip(ddaa1, ddaa1_name):
                cv.imwrite('timu/one/{}_{}.jpg'.format(na, wq), sce)
            for sce, wq in zip(ddaa2, ddaa2_name):
                cv.imwrite('timu/two/{}_{}.jpg'.format(na, wq), sce)
            for sce, wq in zip(ddaa3, ddaa3_name):
                cv.imwrite('timu/three/{}_{}.jpg'.format(na, wq), sce)
            for sce, wq in zip(ddaa4, ddaa4_name):
                cv.imwrite('timu/four/{}_{}.jpg'.format(na, wq), sce)
            # cv.imwrite('timu/{}_one.jpg'.format(na), dati1)
            # cv.imwrite('timu/{}_two.jpg'.format(na), dati2)
            # cv.imwrite('timu/{}_three.jpg'.format(na), dati3)
            # cv.imwrite('timu/{}_four.jpg'.format(na), dati4)
            # Save the score regions
            for ax, nna in zip(fen, name):
                cv.imwrite('timu/score/{}_{}.jpg'.format(na, nna), ax)
            # Save the whole sheet
            # cv.imwrite('warp.jpg', warped_copy)
            with open('score.txt', 'a') as f:
                f.write(str(num1) + ' ' + str(num2) + ' ' + str(num3) + ' ' + str(num4) + ' ' + str(fensu) + '\n')
            return warped, image
        else:
            # No four-cornered outline was found
            return None

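    if __name__ == '__main__':
        img = cv.imread('./pic/2.jpg')
        result = mmain(img)
        if result is None:
            print('No four-cornered sheet outline was found')
        else:
            warped, img = result
            cv.imshow('q12', img)
            cv.waitKey(0)
            cv.destroyAllWindows()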
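
The corner ordering that `zb` performs before calling `cv.getPerspectiveTransform` can be looked at in isolation. The sketch below uses only NumPy and follows the same idea (split the points into a left and a right pair by x, then order each pair by y). `order_corners` and `output_size` are hypothetical names, and the approach assumes the sheet is not rotated so far that the two leftmost points no longer form its left edge.

```python
import numpy as np


def order_corners(pts) -> np.ndarray:
    """Order four (x, y) points as top-left, top-right, bottom-right, bottom-left."""
    pts = np.asarray(pts, dtype=np.float32).reshape(4, 2)
    by_x = pts[np.argsort(pts[:, 0])]        # two leftmost points come first
    left, right = by_x[:2], by_x[2:]
    left = left[np.argsort(left[:, 1])]      # top-left, then bottom-left
    right = right[np.argsort(right[:, 1])]   # top-right, then bottom-right
    return np.array([left[0], right[0], right[1], left[1]], dtype=np.float32)


def output_size(corners: np.ndarray):
    """Width and height of the rectified image: the longer of each pair of opposite sides."""
    tl, tr, br, bl = corners
    width = max(np.linalg.norm(tr - tl), np.linalg.norm(br - bl))
    height = max(np.linalg.norm(bl - tl), np.linalg.norm(br - tr))
    return int(width), int(height)
```

The ordered corners line up one-to-one with a destination rectangle listed in the same TL, TR, BR, BL order, which is what the perspective transform needs.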

Below is part of the GUI code:

    import sys, cv2
    from PyQt5.QtGui import *
    from PyQt5.QtWidgets import *
    from PyQt5.QtCore import *
    from untitled import Ui_Dialog
    from t1 import *  # the detection script above; provides mmain and cv


    class My(QMainWindow, Ui_Dialog):
        def __init__(self):
            super(My, self).__init__()
            self.setupUi(self)
            self.pushButton.clicked.connect(self.pic)
            self.setWindowTitle('Exam Paper Detection')
            self.pushButton_2.clicked.connect(self.dis)
            self.setIcon()

        def setIcon(self):
            palette1 = QPalette()
            # palette1.setColor(self.backgroundRole(), QColor(192,253,123))  # set the background color
            palette1.setBrush(self.backgroundRole(), QBrush(QPixmap('22.png')))  # set the background image
            self.setPalette(palette1)
            # self.setAutoFillBackground(True)  # optional
            # self.setGeometry(300, 300, 250, 150)
            # self.setWindowIcon(QIcon('22.jpg'))

        def pic(self):
            imgName, imgType = QFileDialog.getOpenFileName(self,
                                                           "Open image",
                                                           "",
                                                           " *.jpg;;*.png;;*.jpeg;;*.bmp;;All Files (*)")
            if not imgName:
                return  # the dialog was cancelled
            img = cv2.imread(imgName)
            if img is None:
                return  # the file could not be read as an image
            result = mmain(img)
            if result is None:
                return  # no sheet outline was found
            self.warped, self.img = result
            h1, w1 = self.warped.shape[0], self.warped.shape[1]
            self.warped = cv.resize(self.warped, (int(w1 / (h1 / 750)), 750))
            print(self.warped.shape)
            self.img = cv.resize(self.img, (int(w1 / (h1 / 750)), 750))
            try:
                self.warped = self.cv_qt(self.warped)
                self.img = self.cv_qt(self.img)
                self.label.setPixmap(QPixmap.fromImage(self.img))
            except Exception as e:
                print(e)

        def cv_qt(self, src):
            """Convert a BGR OpenCV image to a QImage."""
            h, w, d = src.shape
            bytesperline = d * w
            # self.src = cv.cvtColor(self.src, cv.COLOR_BGR2RGB)
            qt_image = QImage(src.data, w, h, bytesperline, QImage.Format_RGB888).rgbSwapped()
            return qt_image

        def dis(self):
            # Show the rectified sheet, if an image has been processed
            if hasattr(self, 'warped'):
                self.label.setPixmap(QPixmap.fromImage(self.warped))


    if __name__ == '__main__':
        app = QApplication(sys.argv)
        # Create the GUI window
        win = My()
        win.show()
        sys.exit(app.exec_())
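
`cv_qt` hands BGR data to `QImage` and then swaps the channels with `rgbSwapped()`. An alternative sketch, not the author's approach, is to reorder the channels in NumPy first and pass a contiguous RGB buffer. `bgr_to_rgb_buffer` is a hypothetical helper name, and the sketch assumes an 8-bit, 3-channel image.

```python
import numpy as np


def bgr_to_rgb_buffer(bgr: np.ndarray):
    """Return (rgb, width, height, bytes_per_line) for an 8-bit BGR image."""
    if bgr.ndim != 3 or bgr.shape[2] != 3:
        raise ValueError("expected an image of shape (height, width, 3)")
    h, w, channels = bgr.shape
    # Reverse the channel axis, then copy so the rows are contiguous in memory.
    rgb = np.ascontiguousarray(bgr[:, :, ::-1])
    bytes_per_line = channels * w
    return rgb, w, h, bytes_per_line
```

The returned values match the arguments of `QImage(rgb.data, w, h, bytes_per_line, QImage.Format_RGB888)`. The `rgb` array has to stay alive for as long as that `QImage` uses its buffer.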

Download link: full project download