【KAPAO】《Rethinking Keypoint Representations:Modeling Keypoints and Poses as Objects for XXX》

《Rethinking Keypoint Representations: Modeling Keypoints and Poses as Objects for Multi-Person Human Pose Estimation》

ECCV-2022

文章目录

- 1 Background and Motivation

- 2 Related Work

- 3 Advantages / Contributions

- 4 KAPAO:Keypoints and Poses as Objects

-

- 4.1 Architectural Details

- 4.2 Loss Function

- 4.3 Inference

- 4.4 Limitations

- 5 Experiments

-

- 5.1 Datasets and Metrics

- 5.2 Microsoft COCO Keypoints

- 5.3 CrowdPose

- 5.4 Ablation Studies

- A Supplementary Material

-

- A.1 Hyperparameters

- A.2 Influence of input size on accuracy and speed

- A.3 Video Inference Demos

- A.4 Error Analysis

- A.5 Qualitative Comparisons

- 7 Conclusion(own)

1 Background and Motivation

主流的人体姿态估计多基于 heatmap-based regression 方法

缺点

- suffer from quantization error

- require excessive computation to generate and post-process

- when two keypoints of the same type appear in close proximity to one another, the overlapping heatmap signals may be mistaken for a single keypoint.

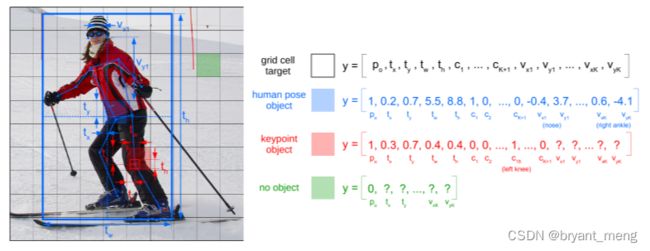

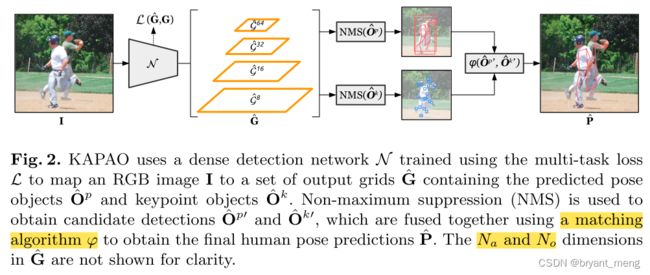

本文作者直接提出了 single-stage multi-person human pose estimation by simultaneously detecting human pose and keypoint objects and fusing the detections to exploit the strengths of both object representations——Keypoints And Poses As Objects

-

pose objects,上图蓝色部分,也即人体框和人体关键点(这个就能预测关键点了)

-

keypoint objects,上图红色部分,也即关键点框(作者本文提出来的,同 pose objects 一起预测出来,框的中心点会和 pose objects 的关键点按一定策略进行融合)

2 Related Work

- Heatmap-free keypoint detection

- Single-stage human pose estimation

generally less accurate, but usually perform better in crowded scenes - Extending object detectors for human pose estimation

3 Advantages / Contributions

提出 KAPAO 人体姿态估计方法(keypoint objects,pose objects),heatmap-free,significantly faster and more accurate than state-of-the-art heatmap-based methods when not using TTA

4 KAPAO:Keypoints and Poses as Objects

O ^ = O ^ k ∪ O ^ p \mathbf{\hat{O}} = \mathbf{\hat{O}}^k \cup \mathbf{\hat{O}}^p O^=O^k∪O^p

a set of keypoint objects { O k ^ ∈ O ^ k } \{\hat{\mathcal{O}^k} \in \mathbf{\hat{O}}^k\} {Ok^∈O^k}

a set of pose objects { O p ^ ∈ O ^ p } \{\hat{\mathcal{O}^p} \in \mathbf{\hat{O}}^p\} {Op^∈O^p}

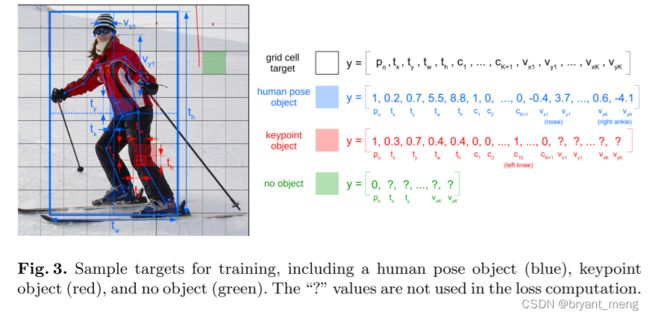

对于每个 keypoint object O k \mathcal{O}^k Ok,有 a small bounding box b = ( b x , b y , b w , b h ) \mathbf{b} = (b_x, b_y, b_w, b_h) b=(bx,by,bw,bh),超参数 b s = b w = b h b_s = b_w = b_h bs=bw=bh 控制 b \mathbf{b} b 的大小

- characterized by strong local features

- carry no information regarding the concept of a person or pose

- keypoint objects exist in a subspace of a pose objects

对于每个 pose object O p \mathcal{O}^p Op,有 a bounding box of class “person,” and a set of keypoints z = { ( x k , y k ) } k = 1 K \mathbf{z} = \{(x_k, y_k)\}_{k=1}^{K} z={(xk,yk)}k=1K,

4.1 Architectural Details

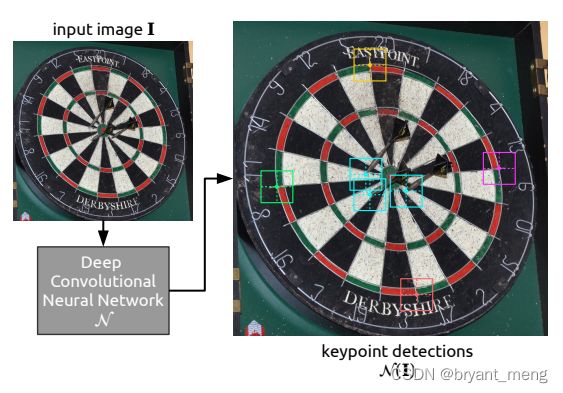

N ( I ) = G ^ \mathcal{N}(\mathbf{I}) = \mathbf{\hat{G}} N(I)=G^

input image I ∈ R h × w × 3 \mathbf{I} \in \mathbb{R}^{h \times w \times 3} I∈Rh×w×3

network N \mathcal{N} N

output grid G ^ = { G ^ s ∣ s ∈ { 8 , 16 , 32 , 64 } } \mathbf{\hat{G}} = \{\hat{\mathcal{G}}^s| s \in \{8, 16, 32, 64\}\} G^={G^s∣s∈{8,16,32,64}}, G ^ s ∈ R h s × w s × N a × N o \hat{\mathcal{G}}^s \in \mathbb{R}^{\frac{h}{s} \times \frac{w}{s} \times N_a \times N_o} G^s∈Rsh×sw×Na×No

-

N a N_a Na is the number of anchor channels

-

N o = 3 K + 6 N_o = 3K+6 No=3K+6 is the number of output channels for each object

- the objectness p ^ o \hat{p}_o p^o,1

- the intermediate bounding boxes t ^ ′ = ( t ^ x ′ , t ^ y ′ , t ^ w ′ , t ^ h ′ ) \hat{\mathbf{t}}'= (\hat{t}'_x, \hat{t}'_y, \hat{t}'_w, \hat{t}'_h) t^′=(t^x′,t^y′,t^w′,t^h′),4

- the object class scores c ^ = ( c ^ 1 , . . . , c ^ K + 1 ) \hat{\mathbf{c}}=(\hat{c}_1,...,\hat{c}_{K+1}) c^=(c^1,...,c^K+1),K+1

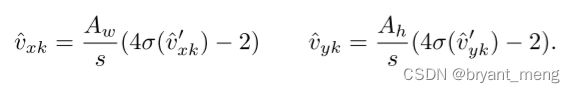

- the intermediate keypoints v ′ ^ = { v ^ x k ′ , v ^ y k ′ } k = 1 K \hat{\mathbf{v}'} = \{\hat{v}'_{xk}, \hat{v}'_{yk}\}_{k=1}^K v′^={v^xk′,v^yk′}k=1K,2K

Additional detection redundancy is provided by also allowing the neighbouring grid cells G ^ i ± 1 , j s \hat{\mathcal{G}}_{i\pm1, j}^s G^i±1,js and G ^ i , j ± 1 s \hat{\mathcal{G}}_{i, j\pm1}^s G^i,j±1s to detect an object in image patch I p = I s i : s ( i + 1 ) , s j : s ( j + 1 ) \mathbf{I}_p = \mathbf{I}_{si:s(i+1), sj:s(j+1)} Ip=Isi:s(i+1),sj:s(j+1)

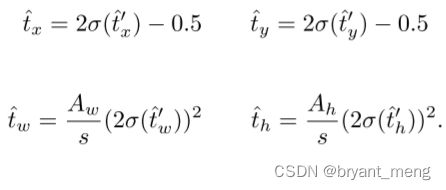

bbox 的计算(x,y 计算的是偏移量)

关键点的计算(偏移量)

其中 A w A_w Aw 和 A h A_h Ah 表示 anchor 的宽高

4.2 Loss Function

- w s w_s ws is grid weighting

- IoU 指的是 CIoU

- v k v_k vk 是 keypoint visibility flags

- N b N_b Nb 是 batch size

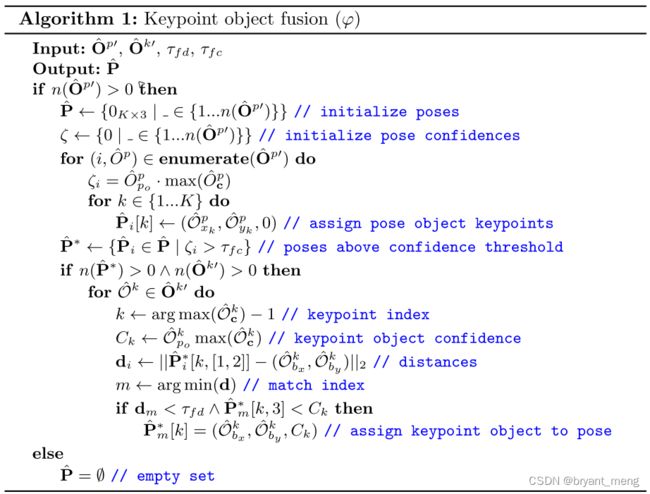

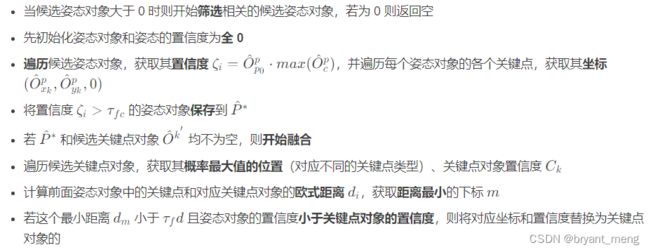

4.3 Inference

The predicted intermediate bounding boxes t ^ \hat{\mathbf{t}} t^ and keypoints v ^ \hat{\mathbf{v}} v^ are mapped back to the original image coordinates using the following transformation:

b ^ = s ( t ^ + [ i , j , 0 , 0 ] ) \hat{\mathbf{b}} = s(\hat{\mathbf{t}}+[i,j,0,0]) b^=s(t^+[i,j,0,0])

z ^ k = s ( v ^ k + [ i , j ] ) \hat{\mathbf{z}}_k = s(\hat{\mathbf{v}}_k+[i,j]) z^k=s(v^k+[i,j])

NMS 处理

O ^ p ′ = N M S ( O ^ p , τ b p ) \hat{\mathbf{O}}^{p'} = NMS(\hat{\mathbf{O}}^{p}, \tau_{bp}) O^p′=NMS(O^p,τbp)

O ^ k ′ = N M S ( O ^ k , τ b k ) \hat{\mathbf{O}}^{k'} = NMS(\hat{\mathbf{O}}^{k}, \tau_{bk}) O^k′=NMS(O^k,τbk)

τ b p \tau_{bp} τbp 和 τ b k \tau_{bk} τbk 为 IoU thresholds

结果进行融合

P ^ = φ ( O ^ p ′ , O ^ k ′ , τ f d , τ f c ) \hat{\mathbf{P}} = \varphi(\hat{\mathbf{O}}^{p'}, \hat{\mathbf{O}}^{k'}, \tau_{fd}, \tau_{fc}) P^=φ(O^p′,O^k′,τfd,τfc)

4.4 Limitations

-

pose objects do not include individual keypoint confidences,只有 keypoint objects 有

-

training requires a considerable amount of time and GPU memory due to the large input size used

5 Experiments

5.1 Datasets and Metrics

- COCO

- CrowdPose

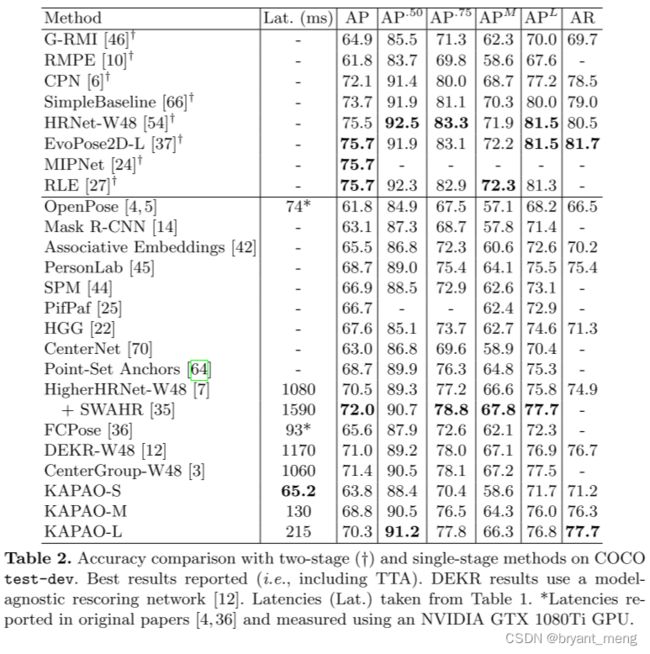

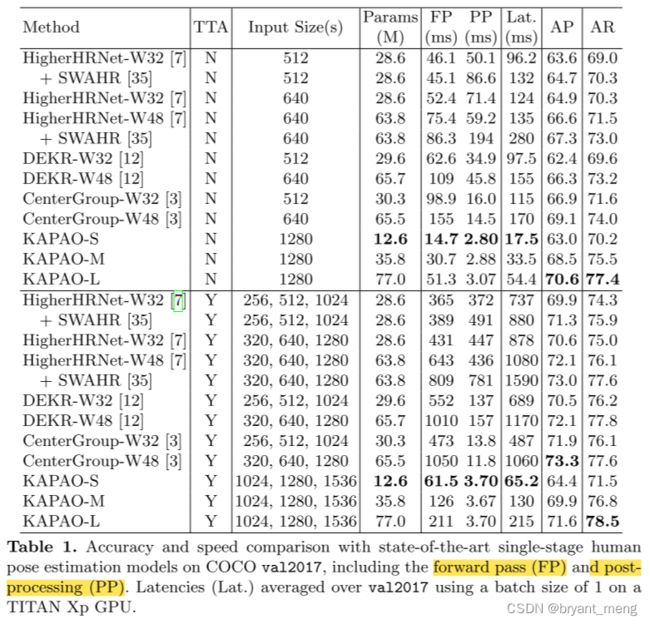

5.2 Microsoft COCO Keypoints

val 集上

The post-processing time of KAPAO depends less on the input size so it only increases by approximately 1 ms when using TTA.

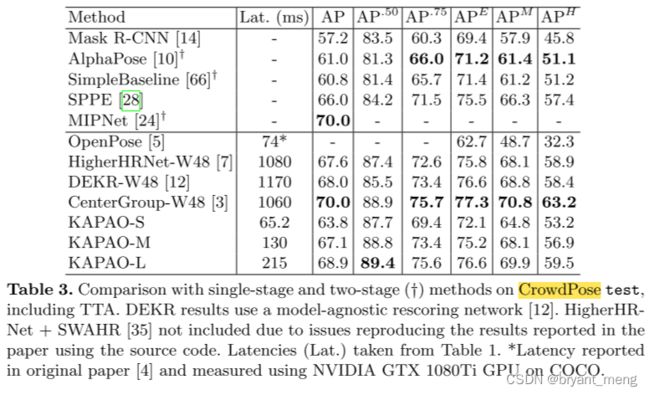

5.3 CrowdPose

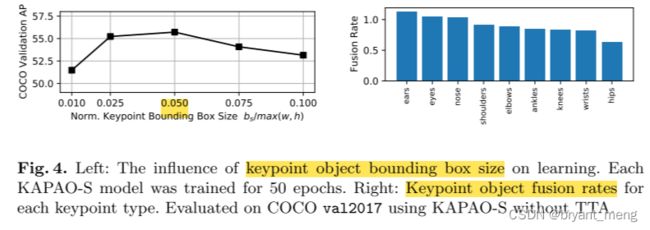

5.4 Ablation Studies

右图,不同关键点的融合率,distinct local image features (e.g., the eyes, ears, and nose) have higher fusion rates as they are detected more precisely as keypoint objects than as pose objects.

A Supplementary Material

A.1 Hyperparameters

A.2 Influence of input size on accuracy and speed

{640, 768, 896, 1024, 1152, 1280}

reducing the input size to 1152 had a negligible effect on the accuracy but provided a meaningful latency reduction.

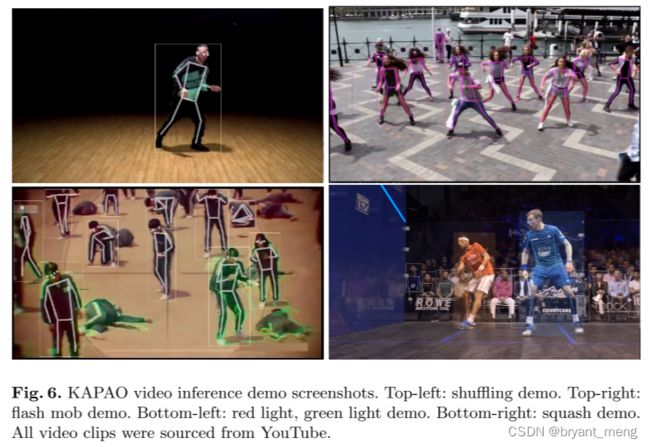

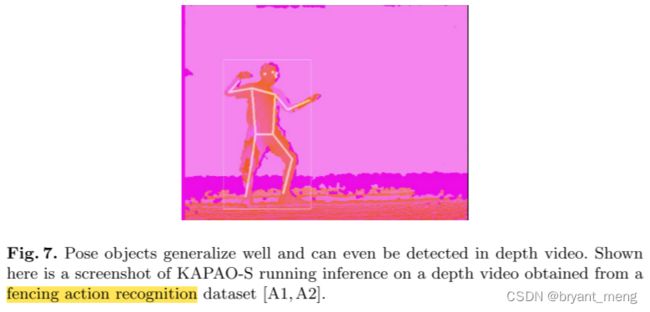

A.3 Video Inference Demos

A.4 Error Analysis

KAPAO consistently provides higher AR than previous single-stage methods and higher AP at lower OKS thresholds

four error categories:

-

Background False Positives

-

False Negatives

-

Scoring

due to sub-optimal confidence score assignment; they occur when two detections are in the proximity of a ground-truth annotation and the one with the highest confidence has the lowest OKS -

Localization

- Jitter: 0.5 ≤ e x p ( − d i 2 / 2 s 2 k i 2 ) < 0.85 0.5 \leq exp(-d_i^2 / 2s^2k_i^2)<0.85 0.5≤exp(−di2/2s2ki2)<0.85

- Miss: e x p ( − d i 2 / 2 s 2 k i 2 ) < 0.5 exp(-d_i^2 / 2s^2k_i^2)<0.5 exp(−di2/2s2ki2)<0.5

- Inversion:left-right keypoint flipping within an instance;

- Swap:keypoint swapping between instances

KAPAO-L is less prone to Swap and Inversion errors

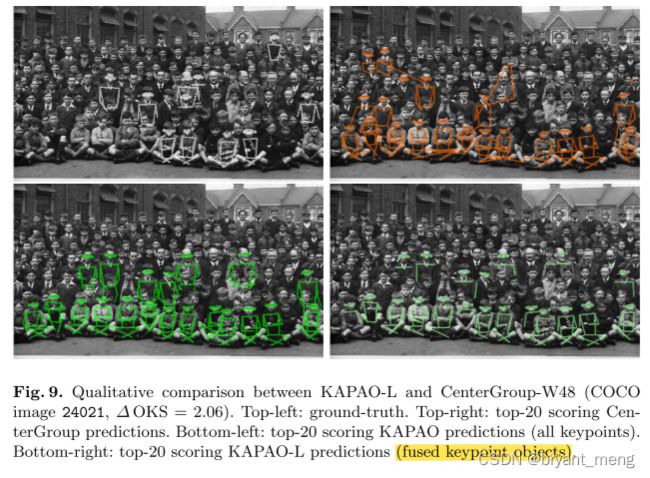

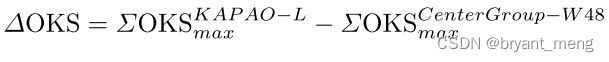

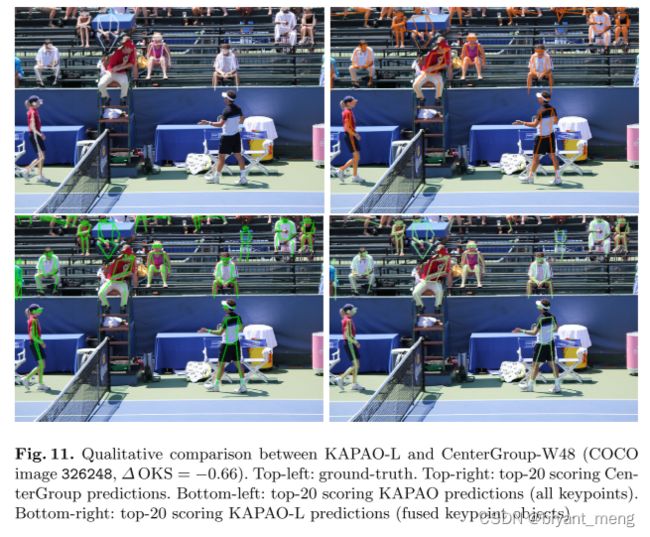

A.5 Qualitative Comparisons

the top-20 scoring pose detections for each model.

Because all the COCO keypoint metrics are computed using the 20 top-scoring detections

Swap error is a common failure case for CenterGroup but an uncommon failure case for KAPAO due to its detection of holistic pose objects

7 Conclusion(own)

-

灵感来自于:

《DeepDarts: Modeling Keypoints as Objects for Automatic Scorekeeping in Darts using a Single Camera》(CVPR-2021)

-

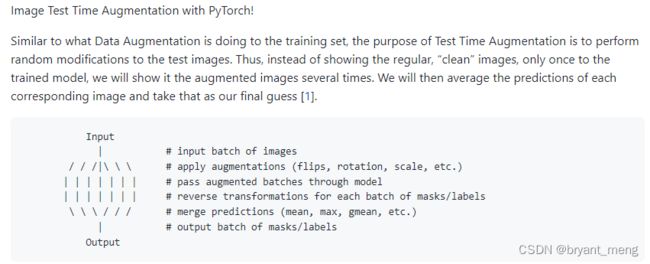

TTA( Test Time Augmentation)

测试时增强,指的是在推理(预测)阶段,将原始图片进行水平翻转、垂直翻转、对角线翻转、旋转角度等数据增强操作,得到多张图,分别进行推理,再对多个结果进行综合分析,得到最终输出结果。

测试时增强-TTA (Test time augmention)

qubvel / ttach

Test Time Augmentation (TTA) and how to perform it with Keras