Super-Resolution: SRCNN

Super-resolution reconstruction

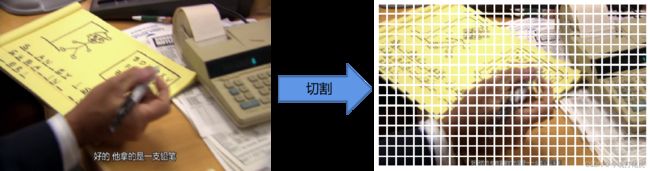

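A convolutional neural network can be used to raise the resolution of an image. This post uses a simple model to improve image quality, and the test data are stills from *The Office*. The original frames are large, so each frame is cut into patches, the resolution of each patch is enhanced, and the patches are then reassembled. Training is likewise done on patches cut from each image.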
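The patch-then-reassemble step can be sketched as below. This is a minimal illustration under stated assumptions, not the author's inference code. The function name `super_resolve_by_patches` is hypothetical, and so are two design choices: reflect padding at the borders, and stepping the tiles by the label size so the predicted centers tile the output without overlap. The input is assumed to be the low-resolution image already enlarged back to the target size, matching how the training inputs are produced later. `predict_fn` stands for any function that maps a batch of 51×51 patches to 25×25 centers, such as a trained model's `predict`.

```python
import numpy as np


def super_resolve_by_patches(image, predict_fn, input_dim=51, label_size=25):
    """Split `image` (H, W, C) into overlapping input patches, run `predict_fn`
    on them, and stitch the predicted center patches into a full-size image.

    Hypothetical helper: `predict_fn` maps (N, input_dim, input_dim, C) to
    (N, label_size, label_size, C).
    """
    pad = (input_dim - label_size) // 2
    height, width, channels = image.shape
    # Number of label-sized tiles needed to cover the image (ceiling division)
    rows = -(-height // label_size)
    cols = -(-width // label_size)
    # Assumption: reflect-pad so every tile has `pad` pixels of context on each side
    padded = np.pad(
        image,
        ((pad, rows * label_size - height + pad),
         (pad, cols * label_size - width + pad),
         (0, 0)),
        mode="reflect",
    )
    # Input windows start every `label_size` pixels, so their centers tile the image
    patches = np.stack([
        padded[r * label_size:r * label_size + input_dim,
               c * label_size:c * label_size + input_dim]
        for r in range(rows) for c in range(cols)
    ])
    predicted = predict_fn(patches)
    output = np.zeros((rows * label_size, cols * label_size, channels), dtype=predicted.dtype)
    for i, tile in enumerate(predicted):
        r, c = divmod(i, cols)
        output[r * label_size:(r + 1) * label_size,
               c * label_size:(c + 1) * label_size] = tile
    # Drop the overhang introduced by rounding up to whole tiles
    return output[:height, :width]
```

To sanity-check the stitching logic, pass a stand-in `predict_fn` that simply returns the center 25×25 crop of each patch. Reassembly should then reproduce the input exactly. With a real model, the float predictions would normally be clipped to [0, 255] and cast back to `uint8`.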

The training and data-preparation steps are as follows:

- Lower the resolution: shrink each image by the factor SCALE, then enlarge it back to its original size


- Cut the images into patches; neighboring patches overlap

- Train the model to learn the mapping from low resolution → high resolution

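The first two steps can be tried on a synthetic image before running the full pipeline. The helpers `degrade` and `overlapping_patches` are hypothetical names for this sketch, and the 100×100 checkerboard is made-up test data. Resizing uses Pillow's default resampling filter. The constants match the values used in the code below.

```python
import numpy as np
from PIL import Image

SCALE = 2.5     # scaling factor, as in the code below
INPUT_DIM = 51  # patch size
STRIDE = 15     # step between patch origins


def degrade(image, scale=SCALE):
    """Shrink an (H, W, C) uint8 image by `scale`, then enlarge it back to exactly (H, W)."""
    height, width = image.shape[:2]
    small = Image.fromarray(image).resize((int(width / scale), int(height / scale)))
    return np.asarray(small.resize((width, height)))


def overlapping_patches(image, size=INPUT_DIM, stride=STRIDE):
    """Cut an image into size x size patches whose top-left corners are `stride` pixels apart."""
    height, width = image.shape[:2]
    return np.stack([
        image[h:h + size, w:w + size]
        for h in range(0, height - size + 1, stride)
        for w in range(0, width - size + 1, stride)
    ])


# Hypothetical 100x100 RGB test image: a 1-pixel checkerboard (maximal fine detail)
checker = ((np.indices((100, 100)).sum(axis=0) % 2) * 255).astype(np.uint8)
image = np.stack([checker] * 3, axis=-1)

blurred = degrade(image)
patches = overlapping_patches(blurred)
print(blurred.shape, patches.shape)  # (100, 100, 3) (16, 51, 51, 3)
```

The degraded image keeps the original size but loses the fine pattern. Because STRIDE is smaller than INPUT_DIM, horizontally adjacent patches share 51 − 15 = 36 columns.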

The complete code is as follows:

```python
import pathlib
from glob import glob

import numpy as np
import matplotlib.pyplot as plt
from PIL import Image
from tqdm import tqdm
from tensorflow.keras import Model
from tensorflow.keras.layers import Conv2D, Input, ReLU
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.preprocessing.image import img_to_array, load_img


def build_srcnn(height, width, depth):
    """Stack of unpadded ('valid') stride-1 convolutions.

    The layers shrink each side by 26 pixels in total, so a 51x51 input patch
    yields a 25x25 output patch.
    """
    inputs = Input(shape=(height, width, depth))
    x = Conv2D(filters=64, kernel_size=(7, 7), strides=1, padding='valid')(inputs)
    x = ReLU()(x)
    x = Conv2D(filters=64, kernel_size=(5, 5), strides=1, padding='valid')(x)
    x = ReLU()(x)
    x = Conv2D(filters=32, kernel_size=(5, 5), strides=1, padding='valid')(x)
    x = ReLU()(x)
    x = Conv2D(filters=32, kernel_size=(5, 5), strides=1, padding='valid')(x)
    x = ReLU()(x)
    x = Conv2D(filters=32, kernel_size=(3, 3), strides=1, padding='valid')(x)
    x = ReLU()(x)
    x = Conv2D(filters=32, kernel_size=(3, 3), strides=1, padding='valid')(x)
    x = ReLU()(x)
    x = Conv2D(filters=32, kernel_size=(3, 3), strides=1, padding='valid')(x)
    x = ReLU()(x)
    # Linear output layer: one filter per image channel
    output = Conv2D(filters=depth, kernel_size=(3, 3), padding='valid')(x)
    return Model(inputs, output)
```
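The patch sizes used below follow directly from this architecture. It is deeper than the three-layer network (9×9, 1×1 and 5×5 kernels) of the original SRCNN paper. Each stride-1 convolution with `padding='valid'` and a k×k kernel removes k − 1 pixels from each side length. The short calculation below transcribes the kernel sizes from `build_srcnn`. The helper names are hypothetical.

```python
# Kernel sizes of the Conv2D layers in build_srcnn, in order (stride 1, padding='valid')
KERNELS = [7, 5, 5, 5, 3, 3, 3, 3]
INPUT_DIM = 51


def valid_output_size(input_size, kernels):
    """A stride-1 'valid' convolution with a k x k kernel shrinks each side by k - 1."""
    for k in kernels:
        input_size -= k - 1
    return input_size


def receptive_field(kernels):
    """Side of the input region that influences one output pixel (stride 1 everywhere)."""
    return 1 + sum(k - 1 for k in kernels)


out = valid_output_size(INPUT_DIM, KERNELS)
print(out, (INPUT_DIM - out) // 2, receptive_field(KERNELS))  # 25 13 27
```

This is why LABEL_SIZE is 25 and PAD is 13. Each output pixel depends on a 27×27 window centered on the corresponding input pixel, which is exactly the region that `crop_output` aligns the label with.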

Image scaling

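```python
SCALE = 2.5      # image scaling factor
INPUT_DIM = 51   # side length of an input patch
LABEL_SIZE = 25  # side length of a label patch (the network's output size)
PAD = (INPUT_DIM - LABEL_SIZE) // 2  # offset of the label patch inside the input patch (13)
STRIDE = 15      # step between neighboring patch origins


def resize_image(image_array, factor):
    """Resize an (H, W, C) uint8 array by `factor`, truncating the new size to whole pixels."""
    original_image = Image.fromarray(image_array)
    # PIL sizes are (width, height)
    new_size = tuple(int(side * factor) for side in original_image.size)
    resized = original_image.resize(new_size)
    return img_to_array(resized).astype(np.uint8)


def crop_image(image):
    """Trim the right/bottom edges by int(size % SCALE) pixels.

    For an integer SCALE this makes each side a multiple of SCALE.
    """
    height, width = image.shape[:2]
    width -= int(width % SCALE)
    height -= int(height % SCALE)
    return image[:height, :width]


def downsize_upsize_image(image):
    """Create the low-resolution input: shrink by SCALE, then enlarge back to the original size."""
    height, width = image.shape[:2]
    scaled = resize_image(image, 1.0 / SCALE)
    # Resize to the exact original (width, height). With a non-integer SCALE, calling
    # resize_image(scaled, SCALE) can come back one pixel short because of truncation.
    scaled = Image.fromarray(scaled).resize((width, height))
    return img_to_array(scaled).astype(np.uint8)


def crop_input(image, w, h):
    """INPUT_DIM x INPUT_DIM patch whose top-left corner is at row h, column w."""
    return image[h:h + INPUT_DIM, w:w + INPUT_DIM]


def crop_output(image, w, h):
    """LABEL_SIZE x LABEL_SIZE patch centered in the corresponding input patch."""
    return image[h + PAD:h + PAD + LABEL_SIZE, w + PAD:w + PAD + LABEL_SIZE]
```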
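The non-integer scaling factor needs some care. Shrinking by 2.5 and then enlarging by 2.5 truncates to whole pixels twice, so the round trip returns the original side length only when that length is a multiple of 5. `crop_image` trims `int(size % SCALE)` pixels, and that does not guarantee a multiple of 5. The sketch below reproduces this arithmetic on side lengths alone. The helper names are hypothetical and mirror the size computations in `resize_image` and `crop_image`.

```python
SCALE = 2.5


def round_trip_size(size, scale=SCALE):
    """Side length after resize_image(..., 1/scale) followed by resize_image(..., scale).

    Both steps truncate the new size to a whole number of pixels.
    """
    small = int(size * (1.0 / scale))
    return int(small * (scale / 1.0))


def crop_image_size(size, scale=SCALE):
    """Side length kept by crop_image: size - int(size % scale)."""
    return size - int(size % scale)


for side in (1080, 1918):
    kept = crop_image_size(side)
    print(side, kept, round_trip_size(kept))
# 1080 1080 1080
# 1918 1918 1917
```

A 1918-pixel side survives `crop_image` unchanged but comes back from the round trip as 1917 pixels. The low-resolution image would then be one column narrower than its label, and patches taken at the right edge would be truncated. Resizing the low-resolution image back to the exact original size avoids this.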

Preparing the training data

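```python
# Frames from the show, stored as PNG files under ~/DeepVision/frames
file_pattern = str(pathlib.Path.home() / 'DeepVision' / 'frames' / '*.png')
dataset_paths = glob(file_pattern)
# Randomly pick (up to) 2000 frames without picking any frame twice
dataset_paths = np.random.choice(dataset_paths, min(2000, len(dataset_paths)), replace=False)

data = []
labels = []

for image_path in tqdm(dataset_paths, ncols=80):
    image = img_to_array(load_img(image_path)).astype(np.uint8)
    image = crop_image(image)
    scaled = downsize_upsize_image(image)  # blurred copy, same size as `image`

    height, width = image.shape[:2]
    # Slide a window with step STRIDE; neighboring input patches overlap by INPUT_DIM - STRIDE pixels
    for h in range(0, height - INPUT_DIM + 1, STRIDE):
        for w in range(0, width - INPUT_DIM + 1, STRIDE):
            data.append(crop_input(scaled, w, h))    # low-resolution input patch
            labels.append(crop_output(image, w, h))  # high-resolution center patch

data = np.array(data)      # shape (N, INPUT_DIM, INPUT_DIM, 3), uint8
labels = np.array(labels)  # shape (N, LABEL_SIZE, LABEL_SIZE, 3), uint8
```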

100%|███████████████████████████████████████| 2000/2000 [00:28<00:00, 70.73it/s]
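The loop bounds determine how many patches are produced and how much memory the two `uint8` arrays need. The sketch below computes both. The frame size is not stated in this post, so the 360×640 frame is a hypothetical example, and the helper name `patch_stats` is also hypothetical. The figures cover only the final arrays and exclude temporary copies made while the lists are converted.

```python
def patch_stats(height, width, n_images, input_dim=51, label_size=25, stride=15, channels=3):
    """Patch count and array sizes (bytes) produced by the data-preparation loop."""
    per_column = len(range(0, height - input_dim + 1, stride))  # window positions vertically
    per_row = len(range(0, width - input_dim + 1, stride))      # window positions horizontally
    n_patches = per_column * per_row * n_images
    data_bytes = n_patches * input_dim * input_dim * channels    # uint8: 1 byte per value
    label_bytes = n_patches * label_size * label_size * channels
    return n_patches, data_bytes, label_bytes


n, data_bytes, label_bytes = patch_stats(360, 640, 2000)
print(f"{n} patches, data {data_bytes / 1e9:.2f} GB, labels {label_bytes / 1e9:.2f} GB")
# 1680000 patches, data 13.11 GB, labels 3.15 GB
```

Even modest frames therefore produce large arrays at this stride. Raising STRIDE or sampling fewer frames reduces the totals proportionally.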

Training the model

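```python
from tensorflow.keras.optimizers.schedules import InverseTimeDecay

EPOCHS = 20
BATCH_SIZE = 128

# Recent TensorFlow/Keras optimizers no longer accept `decay`. InverseTimeDecay with
# decay_steps=1 reproduces the old per-step decay: lr / (1 + decay * step).
lr_schedule = InverseTimeDecay(initial_learning_rate=1e-3, decay_steps=1, decay_rate=1e-3 / EPOCHS)
optimizer = Adam(learning_rate=lr_schedule)

model = build_srcnn(INPUT_DIM, INPUT_DIM, 3)
model.compile(loss='mse', optimizer=optimizer)

history = model.fit(data, labels, batch_size=BATCH_SIZE, epochs=EPOCHS)
```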
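The decay setting has more effect than `1e-3 / EPOCHS` suggests. In the legacy Keras optimizers, `decay` scales the learning rate as lr / (1 + decay · t), where t counts optimizer updates (batches), not epochs. In recent TensorFlow releases the `decay` argument is rejected, and `tf.keras.optimizers.schedules.InverseTimeDecay` with `decay_steps=1` computes the same values. The sketch below evaluates both formulas in plain Python. The number of training patches is hypothetical, taken from a 360×640-frame example, and the helper names are also hypothetical.

```python
import math

EPOCHS = 20
BATCH_SIZE = 128
INITIAL_LR = 1e-3
DECAY = 1e-3 / EPOCHS


def legacy_decay_lr(step, initial_lr=INITIAL_LR, decay=DECAY):
    """Learning rate applied by a legacy Keras optimizer with `decay` after `step` updates."""
    return initial_lr / (1.0 + decay * step)


def inverse_time_decay_lr(step, initial_lr=INITIAL_LR, decay_steps=1, decay_rate=DECAY):
    """Value of InverseTimeDecay(initial_lr, decay_steps, decay_rate) at `step` (staircase=False)."""
    return initial_lr / (1.0 + decay_rate * step / decay_steps)


n_samples = 1_680_000  # hypothetical number of training patches
steps_per_epoch = math.ceil(n_samples / BATCH_SIZE)
final_step = steps_per_epoch * EPOCHS
print(steps_per_epoch, final_step, f"{legacy_decay_lr(final_step):.2e}")  # 13125 262500 7.08e-05
```

Under these assumptions the learning rate ends about 14 times lower than it started, because the decay is applied once per batch. If a gentler per-epoch decay was intended, setting `decay_steps` to the number of steps per epoch would give that.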