YOLOv3

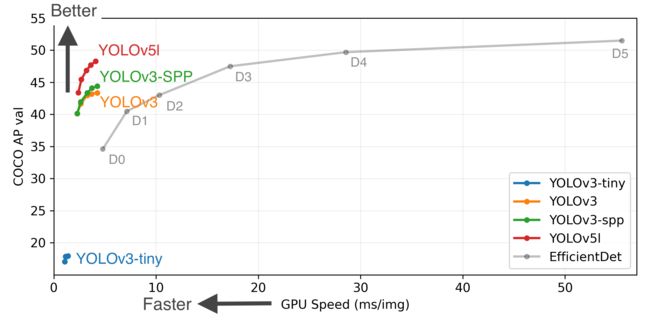

- GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS.

- EfficientDet data from google/automl at batch size 8.

- Reproduce by

python test.py --task study --data coco.yaml --iou 0.7 --weights yolov3.pt yolov3-spp.pt yolov3-tiny.pt yolov5l.pt
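The per-image speed described above is total wall-clock time divided by the number of images, with preprocessing, inference, postprocessing and NMS all inside the timed region. A minimal sketch of that bookkeeping follows; `process_batch` is a hypothetical stand-in for the whole pipeline and is not a function in this repository.

```python
import time


def mean_ms_per_image(process_batch, images, batch_size=32):
    """Return average end-to-end milliseconds per image.

    process_batch is a hypothetical callable standing in for
    preprocessing + inference + postprocessing + NMS on one batch.
    """
    if not images:
        raise ValueError("images must be non-empty")
    start = time.perf_counter()
    for i in range(0, len(images), batch_size):
        process_batch(images[i:i + batch_size])
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / len(images)


if __name__ == "__main__":
    fake_images = list(range(100))  # placeholders, not real images
    ms = mean_ms_per_image(lambda batch: time.sleep(0.001), fake_images)
    print(f"{ms:.3f} ms per image")
```

On a GPU, kernels run asynchronously, so a real measurement would also need to synchronize the device (for example with `torch.cuda.synchronize()`) before reading the clock.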

Branch Notice

The ultralytics/yolov3 repository is now divided into two branches:

- Master branch: Forward-compatible with all YOLOv5 models and methods (recommended ✅).

$ git clone https://github.com/ultralytics/yolov3 # master branch (default)

- Archive branch: Backwards-compatible with original darknet .cfg models (no longer maintained ⚠️).

$ git clone https://github.com/ultralytics/yolov3 -b archive # archive branch

Pretrained Checkpoints

| Model | size (pixels) | mAPval 0.5:0.95 | mAPtest 0.5:0.95 | mAPval 0.5 | Speed V100 (ms) | params (M) | FLOPS 640 (B) |
|---|---|---|---|---|---|---|---|
| YOLOv3-tiny | 640 | 17.6 | 17.6 | 34.8 | 1.2 | 8.8 | 13.2 |
| YOLOv3 | 640 | 43.3 | 43.3 | 63.0 | 4.1 | 61.9 | 156.3 |
| YOLOv3-SPP | 640 | 44.3 | 44.3 | 64.6 | 4.1 | 63.0 | 157.1 |
| YOLOv5l | 640 | 48.2 | 48.2 | 66.9 | 3.7 | 47.0 | 115.4 |

- APtest denotes COCO test-dev2017 server results; all other AP results denote val2017 accuracy.

- AP values are for single-model single-scale unless otherwise noted. Reproduce mAP by

python test.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65

- SpeedGPU is averaged over 5000 COCO val2017 images using a GCP n1-standard-16 V100 instance, and includes FP16 inference, postprocessing and NMS. Reproduce speed by

python test.py --data coco.yaml --img 640 --conf 0.25 --iou 0.45
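The `--conf` and `--iou` values in the commands above set the two thresholds used when filtering detections: boxes scoring below the confidence threshold are discarded, and non-maximum suppression (NMS) then drops any box that overlaps a higher-scoring box by more than the IoU threshold. The following is a minimal pure-Python sketch of greedy single-class NMS, not the repository's implementation; the function names and the `(x1, y1, x2, y2)` box format are assumptions made for illustration.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, conf_thres=0.25, iou_thres=0.45):
    """Greedy single-class NMS; returns indices of kept boxes, best first."""
    # Step 1: drop low-confidence boxes, then visit the rest by score.
    candidates = [i for i, s in enumerate(scores) if s >= conf_thres]
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep = []
    # Step 2: keep a box only if it does not overlap a kept box too much.
    for i in candidates:
        if all(iou(boxes[i], boxes[j]) <= iou_thres for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (0, 0, 5, 5)]
    scores = [0.9, 0.8, 0.7, 0.1]
    print(nms(boxes, scores))                                  # speed-style thresholds
    print(nms(boxes, scores, conf_thres=0.001, iou_thres=0.65))  # mAP-style thresholds
```

In this toy input, the low confidence threshold lets the 0.1-score box through, while the higher threshold removes it before NMS runs.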

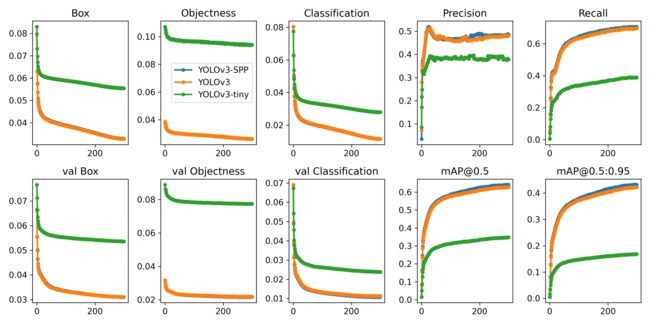

All checkpoints are trained to 300 epochs with default settings and hyperparameters (no autoaugmentation).

Requirements

Python 3.8 or later with all requirements.txt dependencies installed, including torch>=1.7. To install, run:

$ pip install -r requirements.txt
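It can help to confirm that the interpreter and any existing PyTorch install meet the minimums stated above. The sketch below uses only the standard library; `parse_version` and `meets_minimum` are illustrative helpers, not part of this repository, and the simple parser deliberately ignores local suffixes such as `+cu110`.

```python
import sys
from importlib import metadata  # standard library since Python 3.8


def parse_version(text):
    """Turn '1.7.1+cu110' into (1, 7, 1); non-numeric tails are ignored."""
    parts = []
    for piece in text.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Compare two dotted version strings numerically."""
    return parse_version(installed) >= parse_version(minimum)


if __name__ == "__main__":
    print("Python >= 3.8:", sys.version_info >= (3, 8))
    try:
        torch_version = metadata.version("torch")
        print("torch >= 1.7:", meets_minimum(torch_version, "1.7"))
    except metadata.PackageNotFoundError:
        print("torch is not installed")
```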

Tutorials

- Train Custom Data RECOMMENDED
- Tips for Best Training Results ☘️ RECOMMENDED
- Weights & Biases Logging NEW
- Supervisely Ecosystem NEW
- Multi-GPU Training
- PyTorch Hub ⭐ NEW
- TorchScript, ONNX, CoreML Export
- Test-Time Augmentation (TTA)
- Model Ensembling
- Model Pruning/Sparsity
- Hyperparameter Evolution
- Transfer Learning with Frozen Layers ⭐ NEW
- TensorRT Deployment

Environments

YOLOv3 may be run in any of the following up-to-date verified environments (with all dependencies including CUDA/CUDNN, Python and PyTorch preinstalled):

- Google Colab and Kaggle notebooks with free GPU
- Google Cloud Deep Learning VM. See GCP Quickstart Guide
- Amazon Deep Learning AMI. See AWS Quickstart Guide
- Docker Image. See Docker Quickstart Guide

Inference

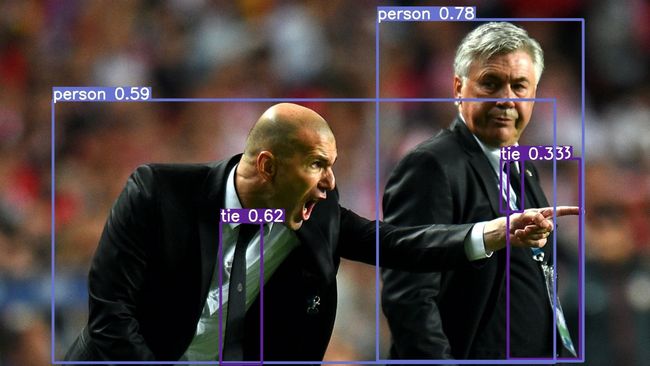

detect.py runs inference on a variety of sources, downloading models automatically from the latest YOLOv3 release and saving results to runs/detect.

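$ python detect.py --source 0 # webcam

$ python detect.py --source file.jpg # image

$ python detect.py --source file.mp4 # video

$ python detect.py --source path/ # directory

$ python detect.py --source path/*.jpg # glob

$ python detect.py --source 'https://youtu.be/NUsoVlDFqZg' # YouTube video

$ python detect.py --source 'rtsp://example.com/media.mp4' # RTSP, RTMP, HTTP stream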

To run inference on example images in data/images:

$ python detect.py --source data/images --weights yolov3.pt --conf 0.25

PyTorch Hub

To run batched inference with YOLOv3 and PyTorch Hub:

import torch

# Model

model = torch.hub.load('ultralytics/yolov3', 'yolov3') # or 'yolov3_spp', 'yolov3_tiny'

# Image

img = 'https://ultralytics.com/images/zidane.jpg'

# Inference

results = model(img)

results.print() # or .show(), .save()
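The snippet above passes a single image. In the related YOLOv5 project, the model wrapper returned by PyTorch Hub also accepts a list of images and runs them as one batch; the sketch below assumes this repository's hub model behaves the same way and exposes `results.xyxy`, which should be checked against the installed release. `run_batched_inference` and `count_by_class` are illustrative names, and calling the first one requires PyTorch plus network access to download the model and images.

```python
def run_batched_inference(image_sources, model_name="yolov3"):
    """Run one forward pass over a list of images; return detections per image.

    Assumes the hub model accepts a list of sources and that results.xyxy
    holds one tensor of [x1, y1, x2, y2, conf, cls] rows per image.
    """
    import torch  # imported lazily so count_by_class works without PyTorch

    model = torch.hub.load("ultralytics/yolov3", model_name)
    results = model(list(image_sources))  # list input is treated as one batch
    results.print()
    return [dets.tolist() for dets in results.xyxy]


def count_by_class(rows):
    """Count detections per class id from [x1, y1, x2, y2, conf, cls] rows."""
    counts = {}
    for row in rows:
        cls = int(row[5])
        counts[cls] = counts.get(cls, 0) + 1
    return counts


# Example call (downloads weights and images, so it is left commented out):
# img = "https://ultralytics.com/images/zidane.jpg"
# for rows in run_batched_inference([img, img]):
#     print(count_by_class(rows))
```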

Training

Run the commands below to reproduce results on the COCO dataset (the dataset auto-downloads on first use). Training times for YOLOv3/YOLOv3-SPP/YOLOv3-tiny are 6/6/2 days on a single V100 (multi-GPU training is faster). Use the largest --batch-size your GPU allows (batch sizes shown are for 16 GB devices).

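$ python train.py --data coco.yaml --cfg yolov3.yaml --weights '' --batch-size 24

$ python train.py --data coco.yaml --cfg yolov3-spp.yaml --weights '' --batch-size 24

$ python train.py --data coco.yaml --cfg yolov3-tiny.yaml --weights '' --batch-size 64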
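The three training runs differ only in the model config and the batch size. As a sketch, the same commands can be generated from a small table; `TRAIN_RUNS` and `build_train_command` are illustrative names, and the batch sizes are the 16 GB values quoted above, to be adjusted for other devices.

```python
import shlex

# (config file, batch size for a 16 GB GPU), taken from the commands above
TRAIN_RUNS = [
    ("yolov3.yaml", 24),
    ("yolov3-spp.yaml", 24),
    ("yolov3-tiny.yaml", 64),
]


def build_train_command(cfg, batch_size, data="coco.yaml"):
    """Return the argument list for one training run with empty --weights."""
    return [
        "python", "train.py",
        "--data", data,
        "--cfg", cfg,
        "--weights", "",  # empty string, as in the commands above
        "--batch-size", str(batch_size),
    ]


if __name__ == "__main__":
    for cfg, batch_size in TRAIN_RUNS:
        # shlex.join quotes the empty --weights value as '' for the shell
        print(shlex.join(build_train_command(cfg, batch_size)))
```

The argument lists can also be passed directly to `subprocess.run` from the repository root, which avoids shell quoting altogether.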