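Financial Anti-Fraud (Practice Project)

The Problem This Project Addresses

This project trains a machine learning model on historical credit card transactions to build a fraud prediction model that flags stolen-card transactions early.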

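Project Background

The dataset contains credit card transactions made by European cardholders in September 2013. It covers two days of transactions, of which 492 out of 284,807 are fraudulent. The dataset is highly imbalanced: the positive class (fraud) accounts for 0.172% of all transactions.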
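The imbalance figures can be checked with a few lines of arithmetic. The snippet below is only a sketch using the two counts quoted above; the variable names are ours. It also shows why accuracy alone is a weak yardstick here: a model that always answers "normal" is already right almost every time.

```python
# Figures quoted from the dataset description above.
n_total = 284_807   # all transactions
n_fraud = 492       # transactions labeled as fraud (Class == 1)

fraud_rate = n_fraud / n_total
n_normal = n_total - n_fraud

print(f"fraud share: {fraud_rate:.3%}")
print(f"normal transactions: {n_normal}")

# A model that always predicts "normal" is already this accurate,
# which is why accuracy alone says little on this dataset.
baseline_accuracy = n_normal / n_total
print(f"always-normal baseline accuracy: {baseline_accuracy:.3%}")
```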

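It contains only numerical input variables that are the result of a PCA transformation. Unfortunately, for confidentiality reasons, the original features and further background information about the data cannot be provided. Features V1, V2, ..., V28 are the principal components obtained with PCA; the only features not transformed with PCA are Time and Amount. Time holds the seconds elapsed between each transaction and the first transaction in the dataset. Amount is the transaction amount, which can be used for example-dependent cost-sensitive learning. Class is the response variable: 1 for fraud, 0 otherwise. The description above is taken from the Kaggle page for this dataset; for more details, see "Credit Card Fraud Detection".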

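Algorithm Selection

- First, the data we have is two days of cardholders' credit card transactions, with many dimensions, and the task is to predict whether a transaction is fraudulent. There are only two possible outcomes: fraud or no fraud. Because the data is already labeled (the Class field is the target column), this is a supervised learning setting. Detecting fraud is therefore a binary classification problem that can be tackled with binary classification algorithms; this project uses Logistic Regression.

- Data analysis: the data is structured, so no feature abstraction is needed. Features V1 to V28 have already been through PCA, while Time and Amount are on very different scales from the other features and need feature scaling to bring everything to a comparable range. As for data quality, there are no garbled or empty values. Class is the target column and all other columns are features.

- The data is fully labeled, so a model fitted on the training set can be evaluated on held-out data, for example 70% for training and 30% for prediction and evaluation. (The code in section 3 actually holds out 10% and uses cross-validation inside the grid search.)

To summarize the business scenario:

- Learn from historical records to predict whether a credit card transaction is fraudulent: a binary, supervised classification problem, solved here with Logistic Regression.

- The data is structured, so no feature abstraction is needed, but feature scaling is.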
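The plan summarized above (scale the features, fit logistic regression, evaluate on held-out rows) can be sketched end to end on synthetic data. This is a minimal illustration, not the project's actual pipeline: `make_classification` stands in for the real CSV, and the names `X_demo`, `y_demo` and `demo_model` are ours.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the real data: 5,000 rows, about 2% positives.
X_demo, y_demo = make_classification(
    n_samples=5000, n_features=10, n_informative=5,
    weights=[0.98, 0.02], random_state=0,
)

# stratify keeps the positive share the same in both splits.
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.3, stratify=y_demo, random_state=0
)

# Scale features, then fit logistic regression, in one estimator.
demo_model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
demo_model.fit(X_tr, y_tr)

# Probability of the positive class for the first few test rows.
demo_proba = demo_model.predict_proba(X_te)[:5, 1]
print(demo_proba)
```

Wrapping the scaler and the classifier in one pipeline means the scaler is fitted on the training rows only, which keeps test-set statistics out of training.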

1. Data Preprocessing

import seaborn as sns

import matplotlib.pyplot as plt

%matplotlib inline

import numpy as np

import pandas as pd

data = pd.read_csv('./creditcard.csv')

data.head()

| | Time | V1 | V2 | V3 | V4 | V5 | V6 | V7 | V8 | V9 | ... | V21 | V22 | V23 | V24 | V25 | V26 | V27 | V28 | Amount | Class |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.0 | -1.359807 | -0.072781 | 2.536347 | 1.378155 | -0.338321 | 0.462388 | 0.239599 | 0.098698 | 0.363787 | ... | -0.018307 | 0.277838 | -0.110474 | 0.066928 | 0.128539 | -0.189115 | 0.133558 | -0.021053 | 149.62 | 0 |

| 1 | 0.0 | 1.191857 | 0.266151 | 0.166480 | 0.448154 | 0.060018 | -0.082361 | -0.078803 | 0.085102 | -0.255425 | ... | -0.225775 | -0.638672 | 0.101288 | -0.339846 | 0.167170 | 0.125895 | -0.008983 | 0.014724 | 2.69 | 0 |

| 2 | 1.0 | -1.358354 | -1.340163 | 1.773209 | 0.379780 | -0.503198 | 1.800499 | 0.791461 | 0.247676 | -1.514654 | ... | 0.247998 | 0.771679 | 0.909412 | -0.689281 | -0.327642 | -0.139097 | -0.055353 | -0.059752 | 378.66 | 0 |

| 3 | 1.0 | -0.966272 | -0.185226 | 1.792993 | -0.863291 | -0.010309 | 1.247203 | 0.237609 | 0.377436 | -1.387024 | ... | -0.108300 | 0.005274 | -0.190321 | -1.175575 | 0.647376 | -0.221929 | 0.062723 | 0.061458 | 123.50 | 0 |

| 4 | 2.0 | -1.158233 | 0.877737 | 1.548718 | 0.403034 | -0.407193 | 0.095921 | 0.592941 | -0.270533 | 0.817739 | ... | -0.009431 | 0.798278 | -0.137458 | 0.141267 | -0.206010 | 0.502292 | 0.219422 | 0.215153 | 69.99 | 0 |

data.describe()

| | Time | V1 | V2 | V3 | V4 | V5 | V6 | V7 | V8 | V9 | ... | V21 | V22 | V23 | V24 | V25 | V26 | V27 | V28 | Amount | Class |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| count | 284807.000000 | 2.848070e+05 | 2.848070e+05 | 2.848070e+05 | 2.848070e+05 | 2.848070e+05 | 2.848070e+05 | 2.848070e+05 | 2.848070e+05 | 2.848070e+05 | ... | 2.848070e+05 | 2.848070e+05 | 2.848070e+05 | 2.848070e+05 | 2.848070e+05 | 2.848070e+05 | 2.848070e+05 | 2.848070e+05 | 284807.000000 | 284807.000000 |

| mean | 94813.859575 | 3.919560e-15 | 5.688174e-16 | -8.769071e-15 | 2.782312e-15 | -1.552563e-15 | 2.010663e-15 | -1.694249e-15 | -1.927028e-16 | -3.137024e-15 | ... | 1.537294e-16 | 7.959909e-16 | 5.367590e-16 | 4.458112e-15 | 1.453003e-15 | 1.699104e-15 | -3.660161e-16 | -1.206049e-16 | 88.349619 | 0.001727 |

| std | 47488.145955 | 1.958696e+00 | 1.651309e+00 | 1.516255e+00 | 1.415869e+00 | 1.380247e+00 | 1.332271e+00 | 1.237094e+00 | 1.194353e+00 | 1.098632e+00 | ... | 7.345240e-01 | 7.257016e-01 | 6.244603e-01 | 6.056471e-01 | 5.212781e-01 | 4.822270e-01 | 4.036325e-01 | 3.300833e-01 | 250.120109 | 0.041527 |

| min | 0.000000 | -5.640751e+01 | -7.271573e+01 | -4.832559e+01 | -5.683171e+00 | -1.137433e+02 | -2.616051e+01 | -4.355724e+01 | -7.321672e+01 | -1.343407e+01 | ... | -3.483038e+01 | -1.093314e+01 | -4.480774e+01 | -2.836627e+00 | -1.029540e+01 | -2.604551e+00 | -2.256568e+01 | -1.543008e+01 | 0.000000 | 0.000000 |

| 25% | 54201.500000 | -9.203734e-01 | -5.985499e-01 | -8.903648e-01 | -8.486401e-01 | -6.915971e-01 | -7.682956e-01 | -5.540759e-01 | -2.086297e-01 | -6.430976e-01 | ... | -2.283949e-01 | -5.423504e-01 | -1.618463e-01 | -3.545861e-01 | -3.171451e-01 | -3.269839e-01 | -7.083953e-02 | -5.295979e-02 | 5.600000 | 0.000000 |

| 50% | 84692.000000 | 1.810880e-02 | 6.548556e-02 | 1.798463e-01 | -1.984653e-02 | -5.433583e-02 | -2.741871e-01 | 4.010308e-02 | 2.235804e-02 | -5.142873e-02 | ... | -2.945017e-02 | 6.781943e-03 | -1.119293e-02 | 4.097606e-02 | 1.659350e-02 | -5.213911e-02 | 1.342146e-03 | 1.124383e-02 | 22.000000 | 0.000000 |

| 75% | 139320.500000 | 1.315642e+00 | 8.037239e-01 | 1.027196e+00 | 7.433413e-01 | 6.119264e-01 | 3.985649e-01 | 5.704361e-01 | 3.273459e-01 | 5.971390e-01 | ... | 1.863772e-01 | 5.285536e-01 | 1.476421e-01 | 4.395266e-01 | 3.507156e-01 | 2.409522e-01 | 9.104512e-02 | 7.827995e-02 | 77.165000 | 0.000000 |

| max | 172792.000000 | 2.454930e+00 | 2.205773e+01 | 9.382558e+00 | 1.687534e+01 | 3.480167e+01 | 7.330163e+01 | 1.205895e+02 | 2.000721e+01 | 1.559499e+01 | ... | 2.720284e+01 | 1.050309e+01 | 2.252841e+01 | 4.584549e+00 | 7.519589e+00 | 3.517346e+00 | 3.161220e+01 | 3.384781e+01 | 25691.160000 | 1.000000 |

data.info()

RangeIndex: 284807 entries, 0 to 284806

Data columns (total 31 columns):

Time 284807 non-null float64

V1 284807 non-null float64

V2 284807 non-null float64

V3 284807 non-null float64

V4 284807 non-null float64

V5 284807 non-null float64

V6 284807 non-null float64

V7 284807 non-null float64

V8 284807 non-null float64

V9 284807 non-null float64

V10 284807 non-null float64

V11 284807 non-null float64

V12 284807 non-null float64

V13 284807 non-null float64

V14 284807 non-null float64

V15 284807 non-null float64

V16 284807 non-null float64

V17 284807 non-null float64

V18 284807 non-null float64

V19 284807 non-null float64

V20 284807 non-null float64

V21 284807 non-null float64

V22 284807 non-null float64

V23 284807 non-null float64

V24 284807 non-null float64

V25 284807 non-null float64

V26 284807 non-null float64

V27 284807 non-null float64

V28 284807 non-null float64

Amount 284807 non-null float64

Class 284807 non-null int64

dtypes: float64(30), int64(1)

memory usage: 67.4 MB

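As the output above shows, the data is structured and needs no feature extraction, but Time and Amount are on a different scale from the other features and need feature scaling.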

from sklearn.preprocessing import StandardScaler

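standard = StandardScaler()  # z-score standardization

data['Amount'] = standard.fit_transform(data[['Amount']])  # standardize Amount

data['Time'] = data['Time'].map(lambda x : x//3600)  # originally in seconds, which spreads the values too widely; convert to whole hours (feature transformation)

data['Time'] = standard.fit_transform(data[['Time']])  # then standardize (the scaler is refitted on Time)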
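To make the two steps concrete, the sketch below applies them to a handful of made-up timestamps (the `seconds` array is hypothetical) and checks that `StandardScaler` gives the same result as subtracting the mean and dividing by the population standard deviation by hand.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical timestamps in seconds since the first transaction.
seconds = np.array([0.0, 1.0, 3599.0, 3600.0, 7250.0, 172792.0])

# Floor-divide by 3600 to bucket each timestamp into its hour index.
hours = seconds // 3600

# Standardize by hand: subtract the mean, divide by the population std (ddof=0).
manual = (hours - hours.mean()) / hours.std()

# StandardScaler expects a 2-D array, hence the reshape.
scaled = StandardScaler().fit_transform(hours.reshape(-1, 1)).ravel()

print(hours)
print(np.allclose(manual, scaled))
```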

2. Feature Engineering

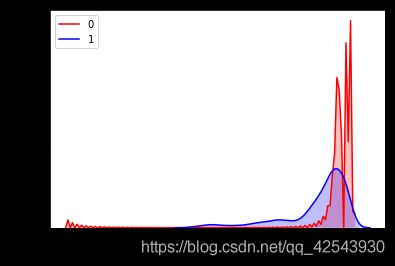

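Feature selection: among V1 to V28, find the features that do not separate classes 0 and 1 well. For example, plot the distribution of V1 for class 1 and for class 0; if the two areas overlap heavily, the feature contributes little to classification.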

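cond_0 = data['Class'] == 0  # normal transactions

cond_1 = data['Class'] == 1  # fraudulent transactions

# Plot the distribution of V1 for each class

g=sns.kdeplot(data['V1'][cond_0], color="Red", fill = True)  # fill replaces the deprecated shade argument (seaborn >= 0.11)

g=sns.kdeplot(data['V1'][cond_1], color="blue", fill = True)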

g.legend(["0","1"])

g.set_xlabel('V1')

g.set_ylabel("Frequency")

# Following the plot above, draw all features (V1-V28)

plt.figure(figsize=(30,60))

for i in range(1,29):

    ax = plt.subplot(8,4,i)

    # fill replaces the deprecated shade argument (seaborn >= 0.11)

    g=sns.kdeplot(data['V%d'%(i)][cond_0],color="Red", fill = True,ax=ax)

    g=sns.kdeplot(data['V%d'%(i)][cond_1], color="blue", fill = True,ax=ax)

    # ax.set_title('V%d'%(i))

    g.set_xlabel('V%d'%(i))

    g.set_ylabel('Frequency')

    g=g.legend(['0','1'])

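The figure above shows how each variable is distributed for fraudulent versus normal transactions. We keep the variables whose distributions differ clearly between the two classes. The candidates for removal are V8, V13, V15, V20, V21, V22, V23, V24, V25, V26, V27 and V28; note that the code below drops only ten of these and keeps V8 and V21.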
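Judging overlap by eye across 28 plots is subjective. One possible way to put a number on it, offered here as our own sketch and not as part of the original analysis, is a histogram overlap coefficient: the area shared by the two normalized histograms. The function name `overlap_coefficient` and the synthetic samples are hypothetical.

```python
import numpy as np

def overlap_coefficient(a, b, bins=50):
    """Shared area of two normalized histograms: 1.0 = identical, 0.0 = disjoint."""
    lo = min(a.min(), b.min())
    hi = max(a.max(), b.max())
    edges = np.linspace(lo, hi, bins + 1)
    # Convert counts to per-bin probabilities so class sizes do not matter.
    pa = np.histogram(a, bins=edges)[0] / len(a)
    pb = np.histogram(b, bins=edges)[0] / len(b)
    return float(np.minimum(pa, pb).sum())

rng = np.random.default_rng(0)
normal = rng.normal(0.0, 1.0, 20000)   # stand-in for Class == 0
shifted = rng.normal(-5.0, 2.0, 500)   # a feature that separates the classes
same = rng.normal(0.0, 1.0, 500)       # a feature that does not

separating_score = overlap_coefficient(normal, shifted)
useless_score = overlap_coefficient(normal, same)
print(separating_score, useless_score)
```

On the real data, the same function could be called as `overlap_coefficient(data['V1'][cond_0].to_numpy(), data['V1'][cond_1].to_numpy())`; features with a score close to 1 are the ones the plots show as heavily overlapping.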

# Drop V13, V15, V20, V22, V23, V24, V25, V26, V27, V28

droplabels= ['V13','V15','V20','V22','V23','V24','V25','V26','V27','V28']

data1 = data.drop(droplabels,axis = 1)

data1.head()

| | Time | V1 | V2 | V3 | V4 | V5 | V6 | V7 | V8 | V9 | ... | V11 | V12 | V14 | V16 | V17 | V18 | V19 | V21 | Amount | Class |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | -1.960264 | -1.359807 | -0.072781 | 2.536347 | 1.378155 | -0.338321 | 0.462388 | 0.239599 | 0.098698 | 0.363787 | ... | -0.551600 | -0.617801 | -0.311169 | -0.470401 | 0.207971 | 0.025791 | 0.403993 | -0.018307 | 0.244964 | 0 |

| 1 | -1.960264 | 1.191857 | 0.266151 | 0.166480 | 0.448154 | 0.060018 | -0.082361 | -0.078803 | 0.085102 | -0.255425 | ... | 1.612727 | 1.065235 | -0.143772 | 0.463917 | -0.114805 | -0.183361 | -0.145783 | -0.225775 | -0.342475 | 0 |

| 2 | -1.960264 | -1.358354 | -1.340163 | 1.773209 | 0.379780 | -0.503198 | 1.800499 | 0.791461 | 0.247676 | -1.514654 | ... | 0.624501 | 0.066084 | -0.165946 | -2.890083 | 1.109969 | -0.121359 | -2.261857 | 0.247998 | 1.160686 | 0 |

| 3 | -1.960264 | -0.966272 | -0.185226 | 1.792993 | -0.863291 | -0.010309 | 1.247203 | 0.237609 | 0.377436 | -1.387024 | ... | -0.226487 | 0.178228 | -0.287924 | -1.059647 | -0.684093 | 1.965775 | -1.232622 | -0.108300 | 0.140534 | 0 |

| 4 | -1.960264 | -1.158233 | 0.877737 | 1.548718 | 0.403034 | -0.407193 | 0.095921 | 0.592941 | -0.270533 | 0.817739 | ... | -0.822843 | 0.538196 | -1.119670 | -0.451449 | -0.237033 | -0.038195 | 0.803487 | -0.009431 | -0.073403 | 0 |

from sklearn.feature_selection import SelectKBest

from sklearn.feature_selection import chi2  # chi-squared test

X = data1.iloc[:,:-1]  # features

y = data1['Class']  # labels

X1 = SelectKBest(chi2, k=15).fit_transform(abs(X), y)  # chi2 rejects negative inputs, hence abs(); keep the 15 highest-scoring features (k=15)
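Taking `abs(X)` makes the chi-squared test runnable but discards the sign of each feature, and `fit_transform` returns a bare array with no column names. As a hedged alternative, the sketch below swaps in `f_classif` (the ANOVA F-test, a different scoring function from the one used above), which accepts negative values, and uses `get_support()` to recover which columns were kept. The toy DataFrame and its column names are invented for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import SelectKBest, f_classif

rng = np.random.default_rng(0)
n = 2000
y_fs = rng.integers(0, 2, n)

# Hypothetical features: "signal" shifts with the label, including into
# negative values; the two "noise" columns are unrelated to it.
X_fs = pd.DataFrame({
    "signal": rng.normal(0.0, 1.0, n) - 3.0 * y_fs,
    "noise_a": rng.normal(0.0, 1.0, n),
    "noise_b": rng.normal(0.0, 1.0, n),
})

# f_classif (ANOVA F-test) accepts negative inputs, so no abs() is needed.
selector = SelectKBest(f_classif, k=1).fit(X_fs, y_fs)

# get_support() returns a boolean mask over the input columns.
selected = X_fs.columns[selector.get_support()].tolist()
print(selected)
```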

plt.figure(figsize=(3,6))  # plot the label distribution

ax = plt.subplot(1,1,1)

s = y.value_counts()  # label 0: 284315 rows

# label 1: 492 rows

s.plot(kind = 'pie')

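The two classes of the target variable Class (normal and fraud) differ greatly in size, which hampers model training.

We use SMOTE oversampling to address the class imbalance.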

from imblearn.over_sampling import SMOTE

smote=SMOTE()

X2,y2 = smote.fit_resample(X1,y)

print((y2 == 1).sum())  # originally only 492; now 284315, the same as class 0
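For intuition, SMOTE creates each synthetic minority row by picking a real minority row, picking one of its nearest minority neighbors, and interpolating between the two. The NumPy sketch below is a simplified illustration of that idea only; it is not imbalanced-learn's implementation, and `smote_like_sample` is a hypothetical helper.

```python
import numpy as np

def smote_like_sample(X_min, k=3, n_new=5, seed=0):
    """Create n_new synthetic rows by interpolating toward a random near neighbor."""
    rng = np.random.default_rng(seed)
    synthetic = np.empty((n_new, X_min.shape[1]))
    for i in range(n_new):
        base = X_min[rng.integers(len(X_min))]
        # Euclidean distance from the base row to every minority row.
        dist = np.linalg.norm(X_min - base, axis=1)
        # Skip index 0 of the sort, which is the base row itself.
        neighbors = np.argsort(dist)[1:k + 1]
        target = X_min[rng.choice(neighbors)]
        # New point lies on the segment between base and target.
        lam = rng.random()
        synthetic[i] = base + lam * (target - base)
    return synthetic

X_min = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]])
new_rows = smote_like_sample(X_min)
print(new_rows)
```

Because every synthetic row is built from real rows, oversampling before the train/test split, as done here, lets information from rows that end up in the test set shape the training set. A common precaution is to split first and resample only the training portion, so the test set keeps the original class balance.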

3. Model Training

from sklearn.model_selection import train_test_split,GridSearchCV

X_train,X_test,y_train,y_test = train_test_split(X2,y2,test_size = 0.1)

from sklearn.linear_model import LogisticRegression

lg = LogisticRegression()

clf1 = GridSearchCV(lg,param_grid={'C':[0.1,0.5,1.0,10,100]})  # C is the inverse of the regularization strength (smaller C = stronger regularization)

clf1.fit(X_train,y_train)
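After fitting, the grid search object records which value of `C` won and how each candidate scored. The sketch below shows how to read that back on synthetic stand-in data. Setting `scoring="recall"` is our assumption (missed fraud is taken to be the costlier error); the notebook above leaves the default, which is accuracy for a classifier.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV

# Synthetic stand-in data; the real notebook would pass X_train, y_train.
X_gs, y_gs = make_classification(n_samples=1000, n_features=8, random_state=0)

search = GridSearchCV(
    LogisticRegression(max_iter=1000),
    param_grid={"C": [0.1, 0.5, 1.0, 10, 100]},
    scoring="recall",   # assumption: missed fraud is the costlier error
    cv=5,
)
search.fit(X_gs, y_gs)

best_C = search.best_params_["C"]
print(best_C, search.best_score_)
```

`search.cv_results_["mean_test_score"]` holds the cross-validated score of every candidate, which helps judge whether the best `C` sits at the edge of the grid and the grid should be widened.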

from sklearn import metrics

predicted=clf1.predict(X_test)

# scikit-learn metrics expect (y_true, y_pred) in that order; passing them reversed swaps precision and recall

print('Precision:',metrics.precision_score(y_test,predicted))

print('Recall:',metrics.recall_score(y_test,predicted))

print('F1:',metrics.f1_score(y_test,predicted))

print("Accuracy:",clf1.score(X_test,y_test))

Results:

Precision: 0.9755607115235886

Recall: 0.8888732295116624

F1: 0.9302016887282918

Accuracy: 0.9334189191565693
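To see how these four numbers relate, the sketch below computes them from a made-up confusion matrix (the counts are hypothetical) and then checks the reported F1 against the two other reported scores. F1 is the harmonic mean of precision and recall and is symmetric in the two, so the check works whichever score is which.

```python
# Hypothetical confusion-matrix counts, chosen only for illustration.
tp, fp, fn, tn = 80, 10, 20, 890

precision = tp / (tp + fp)   # of predicted frauds, how many were real
recall = tp / (tp + fn)      # of real frauds, how many were caught
f1 = 2 * precision * recall / (precision + recall)
accuracy = (tp + tn) / (tp + fp + fn + tn)
print(precision, recall, f1, accuracy)

# F1 is symmetric in its two inputs, so the reported F1 can be checked
# from the two reported scores regardless of which is which.
score_a, score_b = 0.8888732295116624, 0.9755607115235886
f1_reported = 2 * score_a * score_b / (score_a + score_b)
print(f1_reported)
```

These scores were measured on a test set drawn from the SMOTE-balanced data, so they describe performance at a 50/50 class mix and not at the original 0.172% fraud rate.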