Neural network model compression, optimization, and deployment

No matter how great your ML models are, if they take just milliseconds too long, users will click on something else.

-Model research/training and model inference pull in opposite directions

Model research & training: better, bigger, slower; needs more powerful hardware

Model inference: faster, smaller; runs on IoT/mobile/constrained hardware

=Make models faster

-Inference optimization

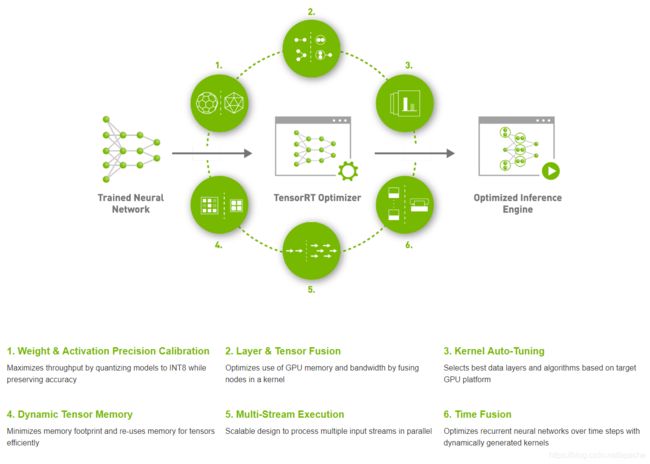

NVIDIA TensorRT

TensorRT is an SDK/C++ library that facilitates high-performance inference on GPUs.

Getting started: https://developer.nvidia.com/tensorrt-getting-started

Download: https://developer.nvidia.com/nvidia-tensorrt-download

Documentation: https://docs.nvidia.com/deeplearning/tensorrt/archives/index.html

GitHub: https://github.com/NVIDIA/TensorRT

Installing TensorRT 7 on Ubuntu 18.04 + CUDA 10.0

https://blog.csdn.net/u011681952/article/details/105973996

-Make models smaller

-Model Compression

-1. Quantization 2. Distillation 3. Pruning 4. Low-rank factorization

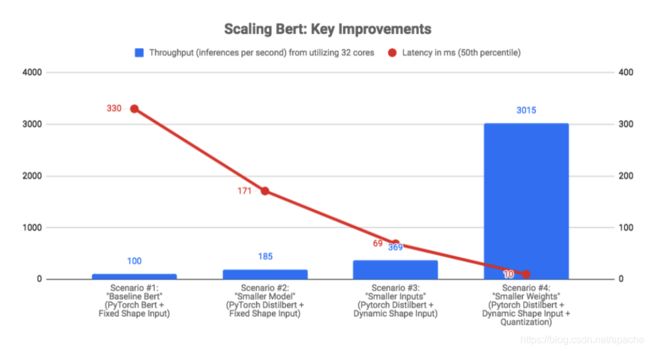

-Make models smaller: case study

How We Scaled Bert To Serve 1+ Billion Daily Requests on CPUs

https://robloxtechblog.com/how-we-scaled-bert-to-serve-1-billion-daily-requests-on-cpus-d99be090db26

https://blog.roblox.com/2020/05/scaled-bert-serve-1-billion-daily-requests-cpus/

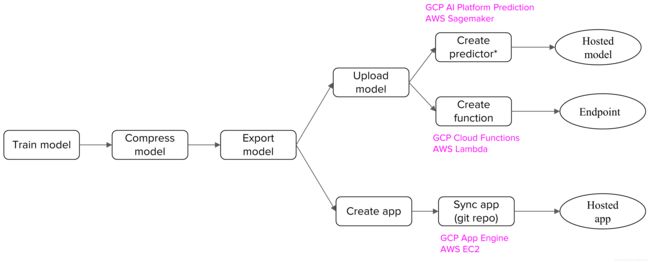

-Deploy a model on cloud

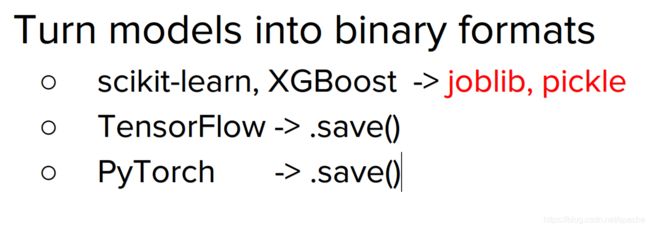

-Export a model

-Turn models into binary formats
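As a minimal sketch of turning a model into a binary format, the snippet below serializes a toy model with Python's standard `pickle` module and restores it again. The `model` dict, its field names, and the `predict` helper are hypothetical and only for illustration. Real frameworks have their own export formats, which are not shown here.

```python
import pickle

# Hypothetical toy "model": a linear layer stored as plain Python data.
model = {"weights": [0.5, -1.25, 2.0], "bias": 0.1}

def predict(m, x):
    """Dot product of weights and inputs, plus bias."""
    return sum(w * xi for w, xi in zip(m["weights"], x)) + m["bias"]

# Export: serialize the model to bytes (this is what would be written to disk).
blob = pickle.dumps(model)

# Import: restore the model from the binary blob, e.g. inside a serving process.
restored = pickle.loads(blob)
```

The restored object gives the same predictions as the original, which is the property an export format has to guarantee.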

-Deployment options:

Hosted model, app, endpoint

-Containerized deployment

-Creating a Docker image with Dockerfile

-Container registry

-Container: microservices

Kubernetes: container orchestration

=Test

Canary testing

A/B testing

Interleaved experiments

Shadow testing
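A rough sketch of how two of these strategies could route requests, assuming models are plain Python callables. The function names, the `canary_fraction` parameter, and the `shadow_log` list are hypothetical and not taken from any serving framework.

```python
import random

def canary_route(request, current_model, candidate_model,
                 canary_fraction=0.05, rng=random):
    """Canary testing: send a small fraction of traffic to the candidate model."""
    if rng.random() < canary_fraction:
        return "candidate", candidate_model(request)
    return "current", current_model(request)

def shadow_route(request, current_model, candidate_model, shadow_log):
    """Shadow testing: both models see the request, but only the
    current model's answer is served; the candidate's answer is logged."""
    served = current_model(request)
    shadow_log.append((request, served, candidate_model(request)))
    return served
```

In the canary case users actually see the candidate's output, so the fraction is kept small. In the shadow case users never see it, so the candidate can be compared offline at no user-facing risk.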

==================================================

Faster: inference optimization

NVIDIA TensorRT

https://developer.nvidia.com/tensorrt

Getting started: https://developer.nvidia.com/tensorrt-getting-started

Developer guide: https://docs.nvidia.com/deeplearning/tensorrt/developer-guide/index.html

GitHub: https://github.com/NVIDIA/TensorRT

TensorRT is a C++ library that facilitates high-performance inference on NVIDIA graphics processing units (GPUs). It is designed to work in a complementary fashion with training frameworks such as TensorFlow, Caffe, PyTorch, MXNet, etc. It focuses specifically on running an already-trained network quickly and efficiently on a GPU for the purpose of generating a result (a process that is referred to in various places as scoring, detecting, regression, or inference).

=================================================

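Smaller: model compression

-Deep Compression, published in 2015 by Dr. Song Han (Stanford/NVIDIA), is an important paper on model compression.

Deep Compression proposes three techniques for compressing a model: pruning, quantization, and Huffman coding.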
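Huffman coding gives shorter codes to more frequent symbols. A minimal standard-library sketch of the idea follows, assuming the weights have already been quantized to a handful of integer cluster indices. The `indices` list is made up for illustration and is not data from the paper.

```python
import heapq
from collections import Counter

def huffman_code_lengths(symbols):
    """Return {symbol: code length in bits} for a Huffman code over `symbols`."""
    counts = Counter(symbols)
    if len(counts) == 1:  # a single distinct symbol still needs one bit
        return {next(iter(counts)): 1}
    # Heap entries: (frequency, unique tie-breaker, {symbol: depth so far}).
    heap = [(c, i, {s: 0}) for i, (s, c) in enumerate(counts.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        c1, _, d1 = heapq.heappop(heap)
        c2, _, d2 = heapq.heappop(heap)
        # Merging two subtrees pushes every symbol in them one level deeper.
        merged = {s: depth + 1 for s, depth in {**d1, **d2}.items()}
        heapq.heappush(heap, (c1 + c2, next_id, merged))
        next_id += 1
    return heap[0][2]

# Hypothetical quantized weight indices: index 0 is by far the most common.
indices = [0] * 8 + [1] * 4 + [2] * 2 + [3] * 2
lengths = huffman_code_lengths(indices)
huffman_bits = sum(lengths[s] for s in indices)
fixed_bits = 2 * len(indices)  # 4 distinct symbols need 2 bits each
```

The saving comes entirely from the skewed distribution of indices. With a uniform distribution the Huffman code would be no shorter than the fixed-width one.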

==1.Quantization

A summary of papers on neural network quantization

https://blog.csdn.net/u012101561/article/details/80868352

TensorFlow model quantization: principles and implementation

https://zhuanlan.zhihu.com/p/79744430

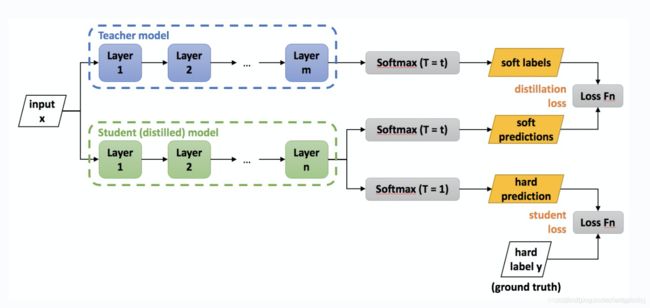
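To make quantization concrete, here is a minimal sketch of one common scheme, affine (asymmetric) 8-bit quantization, in plain Python. This is an assumption about which scheme to illustrate; the linked articles may describe other variants. The function names and sample weights are hypothetical.

```python
def quantize(values, num_bits=8):
    """Map floats to integers in [0, 2**num_bits - 1] using a scale and zero point."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # The representable range must contain 0 so that 0.0 maps to an exact integer.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(qmin - lo / scale)
    q = [min(qmax, max(qmin, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point

def dequantize(q, scale, zero_point):
    """Recover approximate floats from the quantized integers."""
    return [scale * (qi - zero_point) for qi in q]

weights = [-1.0, -0.5, 0.0, 0.25, 1.0]  # hypothetical float32 weights
q, scale, zero_point = quantize(weights)
approx = dequantize(q, scale, zero_point)
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of roughly one quantization step (`scale`) or less per weight.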

==2. Distillation

Taking DistilBERT as an example:

Distillation results: model size reduced by 40%, 97% of language understanding capability retained, 60% faster.

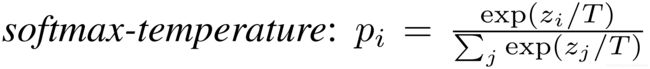

Modified softmax:
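Assuming the modification meant here is the softmax with temperature used in knowledge distillation, a minimal sketch looks like this. The function name and the example logits are hypothetical.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """p_i = exp(z_i / T) / sum_j exp(z_j / T). Higher T gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.0]                              # hypothetical teacher logits
sharp = softmax_with_temperature(logits, 1.0)         # ordinary softmax
soft = softmax_with_temperature(logits, 4.0)          # softened targets for the student
```

With a higher temperature the teacher's distribution keeps the same ranking but spreads probability onto the non-top classes, which gives the student more signal about how the teacher relates the classes.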

-Training procedure:

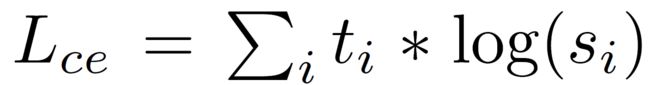

-Triple loss:

Distillation loss, language modeling loss, cosine distance loss
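A minimal sketch of how three such terms might be combined for a single token, assuming probabilities and hidden vectors have already been computed. The function names and the `weights` tuple are hypothetical; the actual weighting used by DistilBERT is not given in these notes.

```python
import math

def distillation_loss(teacher_probs, student_probs):
    """Cross-entropy of the student against the teacher's soft targets."""
    return -sum(t * math.log(s) for t, s in zip(teacher_probs, student_probs))

def language_model_loss(student_probs, target_index):
    """Negative log-likelihood of the true token under the student."""
    return -math.log(student_probs[target_index])

def cosine_distance_loss(teacher_hidden, student_hidden):
    """1 - cosine similarity between teacher and student hidden vectors."""
    dot = sum(a * b for a, b in zip(teacher_hidden, student_hidden))
    norm_t = math.sqrt(sum(a * a for a in teacher_hidden))
    norm_s = math.sqrt(sum(b * b for b in student_hidden))
    return 1.0 - dot / (norm_t * norm_s)

def triple_loss(teacher_probs, student_probs, target_index,
                teacher_hidden, student_hidden, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the three terms; the weights are illustrative only."""
    w_distill, w_lm, w_cos = weights
    return (w_distill * distillation_loss(teacher_probs, student_probs)
            + w_lm * language_model_loss(student_probs, target_index)
            + w_cos * cosine_distance_loss(teacher_hidden, student_hidden))
```

The cosine term only cares about the direction of the hidden vectors, so a student whose hidden state is a scaled copy of the teacher's incurs no penalty from it.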

BERT distillation: DistilBERT, Distil-LSTM, TinyBERT, FastBERT (papers + code)

https://blog.csdn.net/qq_38556984/article/details/109256544

TinyBERT distillation: with only 13% of the parameters of the original BERT, the small model runs inference 9x faster while reaching 96% of the original BERT's score on GLUE, the standard NLP benchmark.

TinyBERT: a knowledge distillation method for pre-trained language models

https://bbs.huaweicloud.com/blogs/195703

https://blog.csdn.net/fengzhou_/article/details/111433457

DistilBERT explained

https://blog.csdn.net/fengzhou_/article/details/107211090

Slimming down BERT: DistilBERT

https://zhuanlan.zhihu.com/p/126303499

Knowledge Distillation, Law-Yao

https://blog.csdn.net/nature553863/article/details/80568658

=================================================

GPT optimization

-

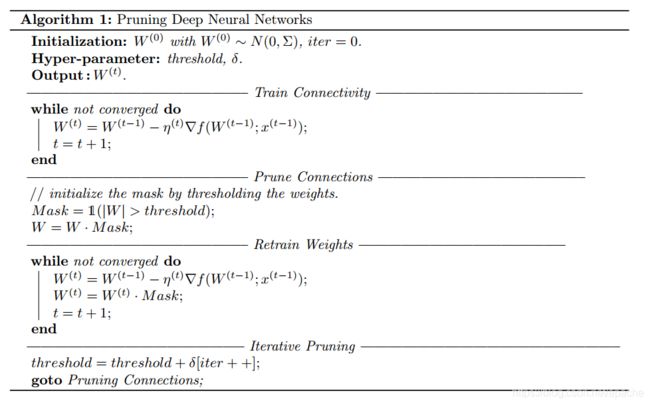

==3.Pruning

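Pruning corresponds to the paper Learning both Weights and Connections for Efficient Neural Networks.

Specifically, pruning sets the unimportant weights in a model to 0, turning the original dense model into a sparse model and thereby compressing it.

The paper prunes redundant connections with a three-step method:

First, train the network to learn which connections are important. Next, prune the unimportant connections. Finally, retrain the network to fine-tune the weights of the remaining connections.

On ImageNet, the method reduces the number of AlexNet parameters by 9x, from 61 million to 6.7 million, with no loss of accuracy.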

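-What problem it solves

How to prune (i.e. sparsify) a DNN without losing accuracy, and thereby compress the model.

-Why pruning works

Why can a model be compressed by pruning? Are the pruned connections really unimportant enough to drop? The authors point out that DNN models are widely over-parameterized and carry a great deal of redundancy.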

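-How to prune

The authors' method has three steps:

- Train Connectivity: train the initial model as usual. The authors take the magnitude of each weight in this model as a measure of its importance;

- Prune Connection: set the weights of the initial model that fall below a threshold to 0 (this is the pruning);

- Re-Train: retrain so that the remaining, unpruned weights can compensate for the accuracy lost to pruning.

To reach a satisfactory compression ratio and accuracy, the Prune Connection and Re-Train steps are repeated several times.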
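The prune and retrain loop above can be sketched in plain Python on a flat list of weights. This is an illustration under simplifying assumptions, not the paper's implementation: `grad_fn`, the thresholds, and the learning rate are all hypothetical stand-ins for a real training setup.

```python
def prune_by_magnitude(weights, threshold):
    """Zero out weights with |w| < threshold; return pruned weights and a keep-mask."""
    mask = [abs(w) >= threshold for w in weights]
    pruned = [w if keep else 0.0 for w, keep in zip(weights, mask)]
    return pruned, mask

def retrain_step(weights, grads, mask, lr=0.1):
    """One gradient step that updates surviving weights and keeps pruned ones at 0."""
    return [w - lr * g if keep else 0.0
            for w, g, keep in zip(weights, grads, mask)]

def iterative_prune(weights, thresholds, grad_fn, steps_per_round=10, lr=0.1):
    """Repeat prune + retrain once per threshold.

    grad_fn(weights) -> list of gradients is a hypothetical stand-in for
    backpropagation on real training data.
    """
    for threshold in thresholds:
        weights, mask = prune_by_magnitude(weights, threshold)
        for _ in range(steps_per_round):
            weights = retrain_step(weights, grad_fn(weights), mask, lr)
    return weights
```

Applying the mask inside every retraining step is what keeps the pruned connections at zero; without it, gradient updates would simply grow them back.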

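-Algorithm flow, taken from Dr. Song Han's paper

-Pruning neurons

After the connections between neurons have been pruned (i.e. the weights have been sparsified), neurons with zero input connections or zero output connections should be pruned as well. Starting from such a neuron, the connections attached to it can then be pruned too.

This step can happen automatically during training.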
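A small sketch of detecting and removing such dead neurons in one hidden layer, assuming weights are stored as nested lists and ignoring biases. The layout (`w_in[j]` holds the incoming weights of hidden neuron `j`, `w_out[k][j]` is the weight from neuron `j` to output `k`) and the function names are assumptions made for this example.

```python
def find_dead_neurons(w_in, w_out):
    """Return indices of hidden neurons with no incoming or no outgoing connections."""
    dead = []
    for j, incoming in enumerate(w_in):
        no_input = all(w == 0 for w in incoming)
        no_output = all(row[j] == 0 for row in w_out)
        if no_input or no_output:
            dead.append(j)
    return dead

def remove_neurons(w_in, w_out, dead):
    """Drop the dead neurons together with every connection attached to them."""
    dead_set = set(dead)
    keep = [j for j in range(len(w_in)) if j not in dead_set]
    new_in = [w_in[j] for j in keep]
    new_out = [[row[j] for j in keep] for row in w_out]
    return new_in, new_out

# Hypothetical sparsified layer: 2 inputs -> 3 hidden neurons -> 2 outputs.
w_in = [[0, 0], [1, 0], [0.5, 2]]   # neuron 0 has no inputs
w_out = [[3, 0, 1], [1, 0, 2]]      # neuron 1 has no outputs
dead = find_dead_neurons(w_in, w_out)
small_in, small_out = remove_neurons(w_in, w_out, dead)
```

Unlike zeroing individual weights, removing whole neurons shrinks the dense weight matrices themselves, so the saving does not depend on sparse storage.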

Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding

https://arxiv.org/abs/1510.00149

Learning both Weights and Connections for Efficient Neural Networks

https://www.groundai.com/project/learning-both-weights-and-connections-for-efficient-neural-networks/

https://xmfbit.github.io/2018/03/14/paper-network-prune-hansong/

==4.Low-rank factorization

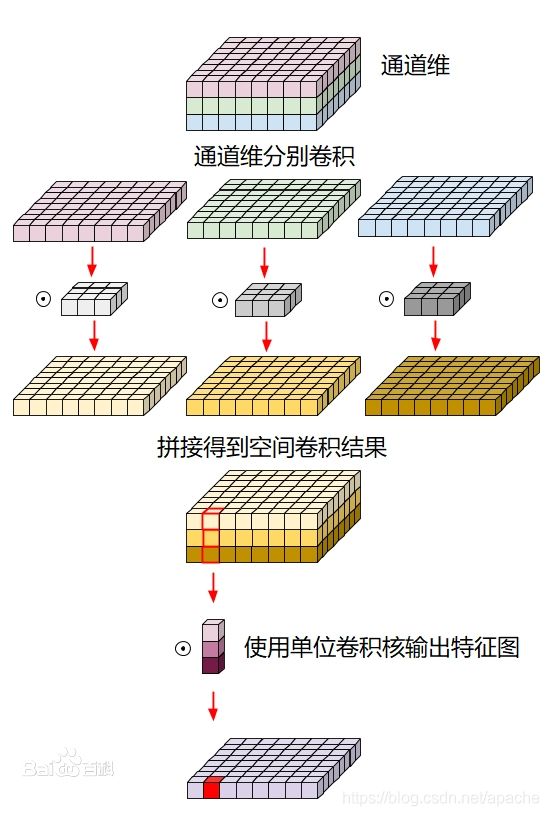

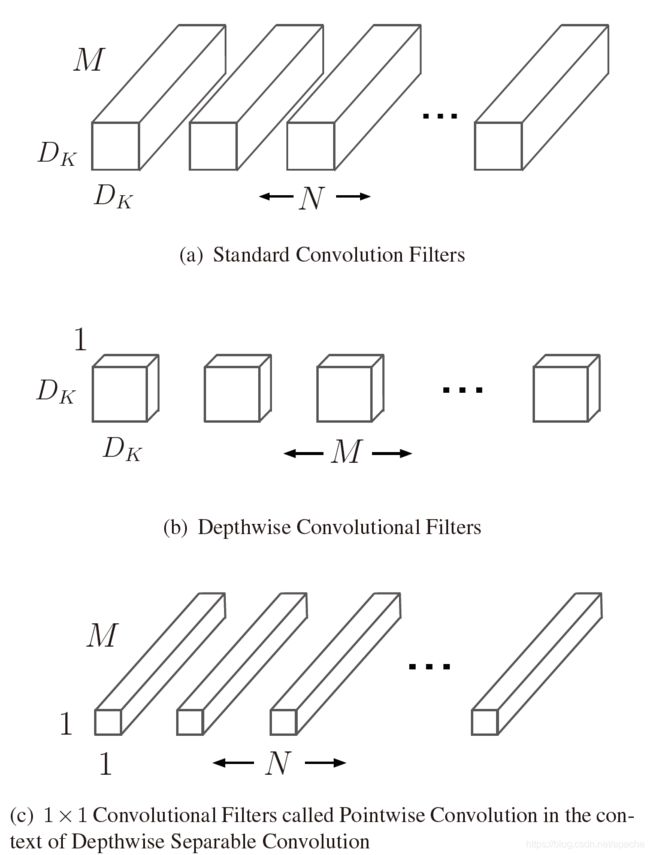
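As a generic illustration of low-rank factorization (not necessarily what the figures above show), an m × n weight matrix W can be approximated by a product U V with U of shape m × r and V of shape r × n. The sketch below compares parameter counts and applies the factors without forming W. All names and sizes are hypothetical.

```python
def dense_params(m, n):
    """Number of parameters in a full m x n weight matrix."""
    return m * n

def low_rank_params(m, n, r):
    """Number of parameters in the factors U (m x r) and V (r x n)."""
    return r * (m + n)

def matvec(matrix, vec):
    """Plain matrix-vector product on nested lists."""
    return [sum(a * b for a, b in zip(row, vec)) for row in matrix]

def low_rank_matvec(u, v, x):
    """Compute U (V x) without ever materializing the m x n matrix U V."""
    return matvec(u, matvec(v, x))

# A 1024 x 1024 layer factorized at rank 64 uses 8x fewer parameters.
ratio = dense_params(1024, 1024) / low_rank_params(1024, 1024, 64)
```

The saving only holds when r is much smaller than m and n, and the approximation is only good when W is close to low rank in the first place.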

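-Taking MobileNets as an example:

-MobileNets are a family of lightweight deep neural networks proposed by Google for mobile phones and other embedded devices; the focus is on compressing the model while preserving accuracy.

Depthwise separable convolution is a modification of the standard convolution used in convolutional neural networks.

By decoupling the correlations along the spatial dimensions from those along the channel (depth) dimension, it reduces the number of parameters the convolution needs, and some studies have shown that it makes more efficient use of the kernel parameters.

A depthwise separable convolution has two parts. First, each channel is convolved separately with a kernel of the given size and the results are stacked; this part is called the depthwise convolution.

Then a standard convolution with a 1 × 1 kernel produces the output feature map; this part is called the pointwise convolution.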

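- Depthwise separable convolution: depthwise convolution + pointwise convolution

MobileNets are built on a streamlined architecture that uses depthwise separable convolutions to construct lightweight deep neural networks.

A depthwise separable convolution is a factorized convolution: it factorizes a standard convolution into a depthwise convolution and a pointwise convolution.

Loosely speaking, it is like factorizing a matrix. The depthwise convolution applies a single kernel to each input channel, and the 1 × 1 convolution then combines the outputs of the depthwise convolution. This factorization substantially reduces computation and model size.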
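The saving can be checked with a quick parameter count, ignoring biases. For a k × k kernel with c_in input channels and c_out output channels, a standard convolution has k·k·c_in·c_out weights, while the depthwise plus pointwise pair has k·k·c_in + c_in·c_out. The function names and the example layer sizes below are hypothetical.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """Weights in a depthwise (k x k per channel) plus pointwise (1 x 1) pair."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise

# Hypothetical layer: 3 x 3 kernels, 32 input channels, 64 output channels.
standard = standard_conv_params(3, 32, 64)
separable = depthwise_separable_params(3, 32, 64)
reduction = separable / standard   # equals 1/c_out + 1/k**2
```

For 3 × 3 kernels the ratio is dominated by the 1/k² term, so the separable version needs roughly 8 to 9 times fewer weights.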

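- The kernel in figure (a) is the ordinary 3D convolution. It is factorized depthwise into a 2D convolution applied channel by channel (figure b) and a 3D 1 × 1 convolution (figure c)

-One way to think about it: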

-

MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications

https://arxiv.org/abs/1704.04861

https://blog.csdn.net/u010349092/article/details/81607819

Network Analysis (2): MobileNets explained

https://cuijiahua.com/blog/2018/02/dl_6.html

Lightweight networks: MobileNets

https://blog.csdn.net/u011995719/article/details/78850275

[End]

====================================================================

====================================================================

Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding

https://arxiv.org/abs/1510.00149

A detailed walkthrough of Dr. Song Han's PhD thesis, Efficient Methods and Hardware for Deep Learning

https://blog.csdn.net/weixin_36474809/article/details/85613013

All The Ways You Can Compress BERT

http://mitchgordon.me/machine/learning/2019/11/18/all-the-ways-to-compress-BERT.html

A Survey of Methods for Model Compression in NLP

https://www.pragmatic.ml/a-survey-of-methods-for-model-compression-in-nlp/