Image Feature Point Matching (Video Quality Diagnosis, Picture Jitter Detection)

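Video quality diagnosis usually includes detecting picture jitter. To do so, we sample one frame out of every N frames of the video, find feature points in two of the sampled frames, and match them against each other.

Of course, many problems come up along the way, such as mismatched feature points.

This article focuses on feature point matching and on methods for removing mismatches.

The task: two photos are taken of the same object, the second differing from the first only by a rotation and a change of scale. We extract feature points from each image and then match them so that they correspond to each other as closely as possible.

Below, we use SURF features and match the feature points with both the brute-force matcher and the FLANN-based matcher.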

1. BFMatcher

The first listing is taken from the tutorial on the official OpenCV website:

#include "stdafx.h"

#include <vector>

#include "opencv2/core/core.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/nonfree/features2d.hpp"

#include "opencv2/calib3d/calib3d.hpp"

#include "opencv2/imgproc/imgproc.hpp"

using namespace cv;

using namespace std;

int _tmain(int argc, _TCHAR* argv[])

{

Mat img_1 = imread( "haha1.jpg", CV_LOAD_IMAGE_GRAYSCALE );

Mat img_2 = imread( "haha2.jpg", CV_LOAD_IMAGE_GRAYSCALE );

if( !img_1.data || !img_2.data )

{ return -1; }

//-- Step 1: Detect the keypoints using SURF Detector

//Threshold for hessian keypoint detector used in SURF

int minHessian = 15000;

SurfFeatureDetector detector( minHessian );

std::vector<KeyPoint> keypoints_1, keypoints_2;

detector.detect( img_1, keypoints_1 );

detector.detect( img_2, keypoints_2 );

//-- Step 2: Calculate descriptors (feature vectors)

SurfDescriptorExtractor extractor;

Mat descriptors_1, descriptors_2;

extractor.compute( img_1, keypoints_1, descriptors_1 );

extractor.compute( img_2, keypoints_2, descriptors_2 );

//-- Step 3: Matching descriptor vectors with a brute force matcher

BFMatcher matcher(NORM_L2,false);

vector< DMatch > matches;

matcher.match( descriptors_1, descriptors_2, matches );

//-- Draw matches

Mat img_matches;

drawMatches( img_1, keypoints_1, img_2, keypoints_2, matches, img_matches );

//-- Show detected matches

imshow("Matches", img_matches );

waitKey(0);

return 0;

}

Brute-force descriptor matcher. For each descriptor in the first set, this matcher finds the closest descriptor in the second set by trying each one. This descriptor matcher supports masking permissible matches of descriptor sets.
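To make the description concrete, here is a minimal sketch of brute-force matching written against plain `std::vector<float>` descriptors rather than OpenCV types. The names `Match`, `Descriptor`, `l2Distance` and `bruteForceMatch` are hypothetical and exist only for this illustration; the sketch mirrors the idea of trying every candidate, not OpenCV's actual implementation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-in for a match record: query index, train index, distance.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

typedef std::vector<float> Descriptor;

// Euclidean (L2) distance between two descriptors of equal length.
float l2Distance(const Descriptor& a, const Descriptor& b) {
    assert(a.size() == b.size());
    float sum = 0.f;
    for (std::size_t k = 0; k < a.size(); ++k) {
        const float d = a[k] - b[k];
        sum += d * d;
    }
    return std::sqrt(sum);
}

// For each query descriptor, try every train descriptor and keep the closest one.
std::vector<Match> bruteForceMatch(const std::vector<Descriptor>& query,
                                   const std::vector<Descriptor>& train) {
    std::vector<Match> matches;
    if (train.empty()) return matches;  // nothing to match against
    for (std::size_t i = 0; i < query.size(); ++i) {
        Match best = { static_cast<int>(i), -1, std::numeric_limits<float>::max() };
        for (std::size_t j = 0; j < train.size(); ++j) {
            const float d = l2Distance(query[i], train[j]);
            if (d < best.distance) {
                best.trainIdx = static_cast<int>(j);
                best.distance = d;
            }
        }
        matches.push_back(best);
    }
    return matches;
}
```

Every query descriptor always receives a match here, even when its true counterpart is not in the train set, which is one reason a plain brute-force result contains wrong correspondences.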

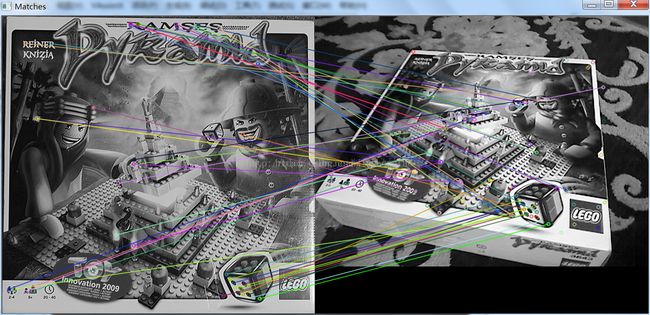

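The English paragraph above is the documentation's description of BFMatcher. In the code above I deliberately set the SURF threshold very high (15000); otherwise the picture is covered in match lines and becomes unreadable. The result of running the code above:

As the figure shows, there are many wrong matches. The book defines two kinds of matching errors:

False-positive matches: the feature points themselves are sound, but the correspondence between them is wrong;

False-negative matches: a feature point is missing, so the correspondence comes out wrong;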

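We only care about the first kind. There are two ways to deal with it. One is to set the second parameter of the BFMatcher constructor (crossCheck) to true, which acts as a cross-match filter.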

BFMatcher matcher(NORM_L2,true);

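The idea is "to match train descriptors with the query set and vice versa. Only common matches for these two matches are returned. Such techniques usually produce best results with minimal number of outliers when there are enough matches."

In other words, the query set is matched against the train set and the train set against the query set, and a pair is kept only if both directions agree. When there are enough matches, this usually gives the best results with the fewest outliers.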
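The cross-match idea can be sketched without OpenCV as a mutual nearest-neighbour test. The helper names `nearestIndex` and `crossCheckMatch` are hypothetical, and squared L2 distance is assumed as the metric; this illustrates the filtering rule only and is not how `BFMatcher` is implemented internally.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

typedef std::vector<float> Descriptor;

// Index of the descriptor in `set` closest to `d` (squared L2), or -1 if `set` is empty.
int nearestIndex(const Descriptor& d, const std::vector<Descriptor>& set) {
    int best = -1;
    float bestDist = std::numeric_limits<float>::max();
    for (std::size_t j = 0; j < set.size(); ++j) {
        assert(set[j].size() == d.size());
        float dist = 0.f;
        for (std::size_t k = 0; k < d.size(); ++k) {
            const float diff = d[k] - set[j][k];
            dist += diff * diff;
        }
        if (dist < bestDist) {
            bestDist = dist;
            best = static_cast<int>(j);
        }
    }
    return best;
}

// Keep (queryIdx, trainIdx) only when each descriptor is the other's nearest neighbour.
std::vector<std::pair<int, int> > crossCheckMatch(const std::vector<Descriptor>& query,
                                                  const std::vector<Descriptor>& train) {
    std::vector<std::pair<int, int> > kept;
    for (std::size_t i = 0; i < query.size(); ++i) {
        const int j = nearestIndex(query[i], train);            // query -> train
        if (j < 0) continue;
        const int back = nearestIndex(train[j], query);         // train -> query
        if (back == static_cast<int>(i)) {
            kept.push_back(std::make_pair(static_cast<int>(i), j));
        }
    }
    return kept;
}
```

Because a one-directional match is dropped whenever the reverse search picks a different partner, the output can be much smaller than the input, which is why the technique works best when plenty of matches are available.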

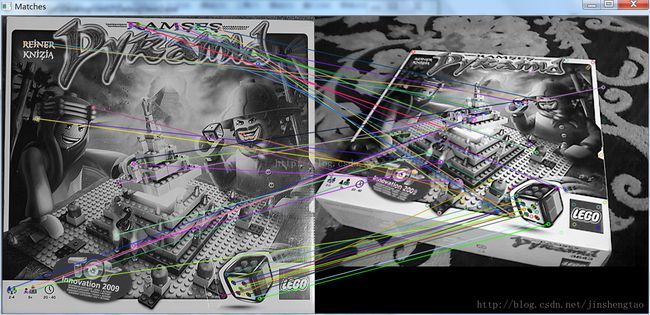

Result:

The figure has fewer wrong match lines than the first one.

2. Flann-based matcher

It uses the fast approximate nearest neighbor search algorithm to find correspondences (it uses the fast third-party FLANN library for approximate nearest neighbors for this).

Usage:

FlannBasedMatcher matcher1;

matcher1.match(descriptors_1, descriptors_2, matches );

Result:

Next comes the second way of removing wrong matches: KNN matching.

We perform KNN-matching first with K=2. Two nearest descriptors are returned for each match. The match is returned only if the distance ratio between the first and second matches is big enough (the ratio threshold is usually near two).
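As a rough illustration of this filter, the sketch below applies a ratio test to rows of two nearest neighbours. `Match` and `ratioTest` are hypothetical names, and the test is written as "best distance below `maxRatio` times the second-best distance", which is the same criterion expressed with the inverse ratio (for example 1/1.5) and avoids a division by zero.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a match record: query index, train index, distance.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// knnMatches[i] holds the nearest neighbours of query descriptor i, closest first.
// The best match is kept only when it is clearly closer than the runner-up.
std::vector<Match> ratioTest(const std::vector<std::vector<Match> >& knnMatches,
                             float maxRatio) {
    assert(maxRatio > 0.f && maxRatio <= 1.f);
    std::vector<Match> kept;
    for (std::size_t i = 0; i < knnMatches.size(); ++i) {
        const std::vector<Match>& row = knnMatches[i];
        if (row.size() < 2) continue;  // no second neighbour to compare against
        const Match& best = row[0];
        const Match& second = row[1];
        if (best.distance < maxRatio * second.distance) {
            kept.push_back(best);
        }
    }
    return kept;
}
```

A match whose two nearest neighbours are almost equally far away is ambiguous, so discarding it removes many false positives at the cost of some correct matches.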

#include "stdafx.h"

#include <vector>

#include "opencv2/core/core.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/nonfree/features2d.hpp"

#include "opencv2/calib3d/calib3d.hpp"

#include "opencv2/imgproc/imgproc.hpp"

using namespace cv;

using namespace std;

int _tmain(int argc, _TCHAR* argv[])

{

Mat img_1 = imread( "test.jpg", CV_LOAD_IMAGE_GRAYSCALE );

Mat img_2 = imread( "test1.jpg", CV_LOAD_IMAGE_GRAYSCALE );

if( !img_1.data || !img_2.data )

{ return -1; }

//-- Step 1: Detect the keypoints using SURF Detector

//Threshold for hessian keypoint detector used in SURF

int minHessian = 1500;

SurfFeatureDetector detector( minHessian );

std::vector<KeyPoint> keypoints_1, keypoints_2;

detector.detect( img_1, keypoints_1 );

detector.detect( img_2, keypoints_2 );

//-- Step 2: Calculate descriptors (feature vectors)

SurfDescriptorExtractor extractor;

Mat descriptors_1, descriptors_2;

extractor.compute( img_1, keypoints_1, descriptors_1 );

extractor.compute( img_2, keypoints_2, descriptors_2 );

//-- Step 3: Matching descriptor vectors with a brute force matcher

BFMatcher matcher(NORM_L2,false);

//FlannBasedMatcher matcher1;

vector< DMatch > matches;

vector< vector<DMatch> > matches2;

matcher.match( descriptors_1, descriptors_2, matches );

//matcher1.match(descriptors_1, descriptors_2, matches );

const float minRatio = 1.f / 1.5f;

matches.clear();

matcher.knnMatch(descriptors_1, descriptors_2,matches2,2);

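for (size_t i = 0; i < matches2.size(); i++)

{

// NOTE: the loop body was lost from the source listing; the ratio test below is an assumed reconstruction

if (matches2[i].size() < 2) continue;

const DMatch& bestMatch = matches2[i][0];

const DMatch& betterMatch = matches2[i][1];

// Keep the best match only when it is clearly closer than the second-best one

if (bestMatch.distance < minRatio * betterMatch.distance)

matches.push_back(bestMatch);

}

Here I set the SURF threshold to 1500. Result: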

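A homography transform is used to refine the result further:

findHomography computes the optimal homography matrix H (3 rows x 3 columns) between sets of corresponding 2D points, using either a least-squares fit or the RANSAC method.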
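To show what the resulting inlier mask means, here is a minimal sketch of the inlier test for a known 3x3 matrix H: each source point is mapped through H and compared with its matched destination point. It does not estimate H (that is the RANSAC part done by findHomography); `Point2`, `applyHomography` and `inlierMask` are hypothetical names, and H is assumed to be stored row-major.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point2 {
    double x;
    double y;
};

// Map a 2D point through a row-major 3x3 homography using homogeneous coordinates.
Point2 applyHomography(const double H[9], const Point2& p) {
    const double w = H[6] * p.x + H[7] * p.y + H[8];
    assert(w != 0.0);  // a point mapped to infinity cannot be compared in pixels
    Point2 q = { (H[0] * p.x + H[1] * p.y + H[2]) / w,
                 (H[3] * p.x + H[4] * p.y + H[5]) / w };
    return q;
}

// mask[i] is 1 when H maps src[i] to within `threshold` pixels of dst[i], else 0.
std::vector<unsigned char> inlierMask(const double H[9],
                                      const std::vector<Point2>& src,
                                      const std::vector<Point2>& dst,
                                      double threshold) {
    assert(src.size() == dst.size());
    std::vector<unsigned char> mask(src.size(), 0);
    for (std::size_t i = 0; i < src.size(); ++i) {
        const Point2 q = applyHomography(H, src[i]);
        const double dx = q.x - dst[i].x;
        const double dy = q.y - dst[i].y;
        if (std::sqrt(dx * dx + dy * dy) <= threshold) {
            mask[i] = 1;
        }
    }
    return mask;
}
```

Matches whose mask entry is 0 disagree with the single geometric transform that explains most of the other matches, so they are treated as outliers and dropped.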

//refine

const int minNumberMatchesAllowed = 8;

if (matches.size() < minNumberMatchesAllowed)

return -1;

// Prepare data for cv::findHomography

std::vector<Point2f> srcPoints(matches.size());

std::vector<Point2f> dstPoints(matches.size());

for (size_t i = 0; i < matches.size(); i++)

{

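// NOTE: the loop body was lost from the source listing; this point assignment is an assumed reconstruction

srcPoints[i] = keypoints_1[matches[i].queryIdx].pt;

dstPoints[i] = keypoints_2[matches[i].trainIdx].pt;

}

// Find the homography matrix and get the inliers mask

std::vector<unsigned char> inliersMask(srcPoints.size());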

Mat homography = findHomography(srcPoints, dstPoints, CV_FM_RANSAC, 3.0f, inliersMask);

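std::vector<DMatch> inliers;

for (size_t i = 0; i < inliersMask.size(); i++)

{

// NOTE: reconstructed loop body (lost in the source): keep only the matches marked as inliers

if (inliersMask[i])

inliers.push_back(matches[i]);

}

matches.swap(inliers);

This snippet directly continues the previous listing. Result: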