8-点击流数据分析项目-Hive分析

文章目录

- 8-点击流数据分析项目-Hive分析

-

- 一、环境准备与数据导入

-

- 1.开启hadoop

- 2.导入数据

- 二、创建hive表

-

- 创建 原始数据表(clickstreamdata-pre):

- 创建点击流pageview表clickstreamdata-pageview

-

- 创建点击流visit表clickstreamdata-visits

- 三、数据导入Hive

- 四、生成统计指标

-

- 生成统计数据指标的明细表

- 导入数据(2021-09-18的数据)

- 按小时统计pvs

- 按天统计pvs

- 统计页面pvs值

- 统计2021-09-18这个分区里面的受访页面的top1

- 统计访问表数据

-

- 回头/单次访客统计

- 查询今日所有回头访客及其访问次数。

- 人均访问频次

- 人均页面浏览量

- 五、创建hive的数据临时表

-

- 每天的pvs值

- 指定日期的pvs值

- 每天的page的pvs值

- 六、导入mysql数据库表

-

- 查看sqoop安装目录

- 创建Mysql数据库

- 使用sqoop导出到mysql

- 总结

8-点击流数据分析项目-Hive分析

一、环境准备与数据导入

1.开启hadoop

如果在lsn等虚拟环境中开启需要先执行格式化,如果已经格式化的就不要二次格式化了

hadoop namenode -format

启动Hadoop

start-dfs.sh

start-yarn.sh

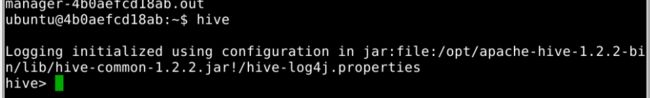

启动Hive

hive

查看是否启动

jps

2.导入数据

备注:本步骤不用重复执行,第7部分已经执行完成了,重复一遍,是为了回顾文件的具体位置

将数据上传到hadoop集群所在节点

备注,MR清洗后的数据集见:https://download.csdn.net/download/m0_38139250/75060549

创建hdfs目录

hadoop fs -mkdir -p /sx/clickstream

hadoop fs -mkdir /sx/clickstream

hadoop fs -put /home/ubuntu/Code/clickstreamdata-pre /sx/clickstream

hadoop fs -put /home/ubuntu/Code/clickstreamdata-pageviews /sx/clickstream

hadoop fs -put /home/ubuntu/Code/clickstreamdata-visits /sx/clickstream

二、创建hive表

进入hive创建数据表

在终端输入hive 进入hive界面

hive

创建 原始数据表(clickstreamdata-pre):

对应mr清洗完之后的数据clickstreamdata-pre,而不是原始日志数据

drop table if exists ods_weblog_origin;

create table ods_weblog_origin(

valid string,

remote_addr string,

remote_user string,

time_local string,

request string,

status string,

body_bytes_sent string,

http_referer string,

http_user_agent string)

partitioned by (datestr string)

row format delimited

fields terminated by '\001';

创建点击流pageview表clickstreamdata-pageview

drop table if exists ods_click_pageviews;

create table ods_click_pageviews(

session string,

remote_addr string,

remote_user string,

time_local string,

request string,

visit_step string,

page_staylong string,

http_referer string,

http_user_agent string,

body_bytes_sent string,

status string)

partitioned by (datestr string)

row format delimited

fields terminated by '\001';

创建点击流visit表clickstreamdata-visits

drop table if exists ods_click_stream_visit;

create table ods_click_stream_visit(

session string,

remote_addr string,

inTime string,

outTime string,

inPage string,

outPage string,

referal string,

pageVisits int)

partitioned by (datestr string)

row format delimited

fields terminated by '\001';

三、数据导入Hive

load data inpath '/sx/clickstream/clickstreamdata-pre' into table ods_weblog_origin partition(datestr='2021-09-18');

load data inpath '/sx/clickstream/clickstreamdata-pageviews' into table ods_click_pageviews partition(datestr='2021-09-18');

load data inpath '/sx/clickstream/clickstreamdata-visits' into table ods_click_stream_visit partition(datestr='2021-09-18');

四、生成统计指标

生成统计数据指标的明细表

drop table ods_weblog_detail;

create table ods_weblog_detail(

valid string, --有效标识

remote_addr string, --来源IP

remote_user string, --用户标识

time_local string, --访问完整时间

daystr string, --访问日期

timestr string, --访问时间

month string, --访问月

day string, --访问日

hour string, --访问时

request string, --请求的url

status string, --响应码

body_bytes_sent string, --传输字节数

http_referer string, --来源url

ref_host string, --来源的host

ref_path string, --来源的路径

ref_query string, --来源参数query

ref_query_id string, --来源参数query的值

http_user_agent string --客户终端标识

)

partitioned by(datestr string);

导入数据(2021-09-18的数据)

insert into table ods_weblog_detail partition(datestr='2021-09-18')

select c.valid,c.remote_addr,c.remote_user,c.time_local,

substring(c.time_local,0,10) as daystr,

substring(c.time_local,12) as tmstr,

substring(c.time_local,6,2) as month,

substring(c.time_local,9,2) as day,

substring(c.time_local,11,3) as hour,

c.request,c.status,c.body_bytes_sent,c.http_referer,c.ref_host,c.ref_path,c.ref_query,c.ref_query_id,c.http_user_agent

from

(SELECT

a.valid,a.remote_addr,a.remote_user,a.time_local,

a.request,a.status,a.body_bytes_sent,a.http_referer,a.http_user_agent,b.ref_host,b.ref_path,b.ref_query,b.ref_query_id

FROM ods_weblog_origin a LATERAL VIEW parse_url_tuple(regexp_replace(http_referer, "\"", ""), 'HOST', 'PATH','QUERY', 'QUERY:id') b as

ref_host, ref_path, ref_query, ref_query_id) c;

按小时统计pvs

select count(*) as pvs,month,day,hour from ods_weblog_detail group by month,day,hour;

按天统计pvs

select count(*) as pvs,a.month as month,a.day as day from ods_weblog_detail a

group by a.month,a.day;

统计页面pvs值

select count(*) as pvs,a.month as month,a.day as day,a.request request from ods_weblog_detail a

group by a.month,a.day,a.request;

统计2021-09-18这个分区里面的受访页面的top1

select '2021-09-18',a.request,a.request_counts from

(

select request as request,count(request) as request_counts

from ods_weblog_detail

where datestr='2021-09-18'

group by request

having request is not null

) a

order by a.request_counts desc limit 1;

统计访问表数据

回头/单次访客统计

select remote_addr ,count(remote_addr) ipcount

from ods_click_stream_visit

group by remote_addr

having ipcount > 1

查询今日所有回头访客及其访问次数。

select tmp.day,tmp.remote_addr,tmp.acc_cnt

from

(select '2021-09-18' as day,remote_addr,count(session) as acc_cnt from ods_click_stream_visit

group by remote_addr) tmp

where tmp.acc_cnt>1

人均访问频次

使用所有的独立访问的人,即独立的session个数除以所有的去重IP即可

select count(session)/count(distinct remote_addr) from ods_click_stream_visit where datestr='2021-09-18';

人均页面浏览量

所有的页面点击次数累加除以所有的独立去重IP总和即可

select sum(pagevisits)/count(distinct remote_addr) from ods_click_stream_visit where datestr='2021-09-18';

五、创建hive的数据临时表

每天的pvs值

drop table dw_pvs_everyday;

create table dw_pvs_everyday(pvs bigint,month string,day string);

insert into table dw_pvs_everyday

select count(*) as pvs,a.month as month,a.day as day from ods_weblog_detail a

group by a.month,a.day;

指定日期的pvs值

drop table if exists dw_pvs_everyhour_oneday;

create table if not exists dw_pvs_everyhour_oneday(month string,day string,hour string,pvs bigint) partitioned by(datestr string);

insert into table dw_pvs_everyhour_oneday partition(datestr='2021-09-18')

select a.month as month,a.day as day,a.hour as hour,count(*) as pvs from ods_weblog_detail a

where a.datestr='2021-09-18' group by a.month,a.day,a.hour;

每天的page的pvs值

drop table if exists dw_pvs_request_everyday;

create table if not exists dw_pvs_request_everyday(pvs bigint,month string,day string,request string);

insert into table dw_pvs_request_everyday

select count(*) as pvs,a.month as month,a.day as day,a.request request from ods_weblog_detail a

group by a.month,a.day,a.request;

六、导入mysql数据库表

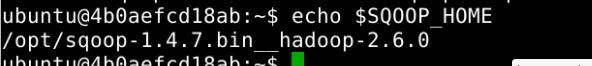

查看sqoop安装目录

echo $SQOOP_HOME

创建Mysql数据库

create database weblogs;

use weblogs;

drop table if exists weblogs.dw_pvs_everyday;

create table if not exists weblogs.dw_pvs_everyday

(

pvs int comment "每天浏览量",

month char(20) comment "月份",

day char(20) comment "日期"

);

drop table if exists weblogs.dw_pvs_everyhour_oneday;

create table if not exists weblogs.dw_pvs_everyhour_oneday

(

month char(20) comment "月份",

day char(20) comment "日期",

hour char(20) comment "小时",

pvs int comment "每天浏览量"

) ;

drop table if exists weblogs.dw_pvs_request_page;

create table if not exists weblogs.dw_pvs_request_page

(

pvs int comment "每天浏览量",

month char(20) comment "月份",

day char(20) comment "日期",

request char(200) comment "请求"

);

使用sqoop导出到mysql

### 从hive表导出到mysql数据库表

sqoop export --connect jdbc:mysql://localhost:3306/weblogs --username root --password 123456 --m 1 --export-dir /user/hive/warehouse/dw_pvs_everyday --table dw_pvs_everyday --input-fields-terminated-by '\001'

# 注意:需要修改为本机ip地址

sqoop export --connect jdbc:mysql://localhost:3306/weblogs --username root --password 123456 --m 1 --export-dir /user/hive/warehouse/dw_pvs_everyhour_oneday/datestr=2021-09-18 --table dw_pvs_everyhour_oneday --input-fields-terminated-by '\001'

# 注意:需要修改为本机ip地址

sqoop export --connect jdbc:mysql://localhost:3306/weblogs --username root --password 123456 --m 1 --export-dir /user/hive/warehouse/dw_pvs_request_everyday --table dw_pvs_request_page --input-fields-terminated-by '\001'

# 注意:需要修改为本机ip地址

备注:如果用lsn,需要打开mysql-workbench,导出的文件位于/home/ubuntu/dumps中

总结

本文完成了点击流分析项目的hive导入,hive分析与hive导出等三个部分。