Object Detection (3): How to Load a COCO-Style Dataset with PyTorch

1. How to load the COCO dataset with PyTorch

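- Open the PyTorch website and look up the COCO dataset classes in torchvision.

- You will see that the COCO dataset offers no download option, so the images and the annotation files have to be downloaded separately.

- Recall what the JSON file contains: each entry under "annotations" has a "bbox".

- Start by printing the first sample of the COCO dataset: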

import torchvision

from PIL import ImageDraw

COCO_dataset=torchvision.datasets.CocoDetection(root="D:\\目标检测数据集\\val2017\\val2017",

annFile="D:\\目标检测数据集\\annotations_trainval2017\\annotations\\instances_val2017.json",

)

print(COCO_dataset[0])

Output:

loading annotations into memory...

Done (t=0.46s)

creating index...

index created!

(<PIL.Image.Image image mode=RGB size=640x426 at 0x27E438A9B00>, [{'segmentation': [[240.86, 211.31, 240.16, 197.19, 236.98, 192.26, 237.34, 187.67, 245.8, 188.02, 243.33, 176.02, 250.39, 186.96, 251.8, 166.85, 255.33, 142.51, 253.21, 190.49, 261.68, 183.08, 258.86, 191.2, 260.98, 206.37, 254.63, 199.66, 252.51, 201.78, 251.8, 212.01]], 'area': 531.8071000000001, 'iscrowd': 0, 'image_id': 139, 'bbox': [236.98, 142.51, 24.7, 69.5], 'category_id': 64, 'id': 26547}, {'segmentation': [[9.66, 167.76, 156.35, 173.04, 153.71, 256.48, 82.56, 262.63, 7.03, 260.87]], 'area': 13244.657700000002, 'iscrowd': 0, 'image_id': 139, 'bbox': [7.03, 167.76, 149.32, 94.87], 'category_id': 72, 'id': 34646}, {'segmentation': [[563.33, 209.19, 637.69, 209.19, 638.56, 287.92, 557.21, 280.04]], 'area': 5833.117949999999, 'iscrowd': 0, 'image_id': 139, 'bbox': [557.21, 209.19, 81.35, 78.73], 'category_id': 72, 'id': 35802}, {'segmentation': [[368.16, 252.94, 383.77, 255.69, 384.69, 235.49, 389.28, 226.31, 392.03, 219.89, 413.15, 218.05, 411.31, 241.92, 411.31, 256.61, 412.23, 274.05, 414.98, 301.6, 414.98, 316.29, 412.23, 311.7, 406.72, 290.58, 405.8, 270.38, 389.28, 270.38, 381.01, 270.38, 383.77, 319.04, 377.34, 320.88, 377.34, 273.14, 358.98, 266.71, 358.98, 253.86, 370.91, 253.86]], 'area': 2245.34355, 'iscrowd': 0, 'image_id': 139, 'bbox': [358.98, 218.05, 56.0, 102.83], 'category_id': 62, 'id': 103487}, {'segmentation': [[319.32, 230.98, 317.41, 220.68, 296.03, 218.0, 296.03, 230.22, 297.18, 244.34, 296.03, 258.08, 299.47, 262.28, 296.03, 262.28, 298.32, 266.48, 295.27, 278.69, 291.45, 300.45, 290.69, 310.76, 292.21, 309.23, 294.89, 291.67, 298.32, 274.11, 300.61, 266.1, 323.9, 268.77, 328.09, 272.59, 326.57, 297.02, 323.9, 315.34, 327.71, 316.48, 329.62, 297.78, 329.62, 269.53, 341.84, 266.48, 345.27, 266.1, 350.23, 265.72, 343.74, 312.28, 346.8, 310.76, 351.76, 273.35, 352.52, 264.95, 350.23, 257.32, 326.19, 255.79, 323.51, 254.27, 321.61, 244.72, 320.46, 235.56, 320.08, 231.36]], 
'area': 1833.7840000000017, 'iscrowd': 0, 'image_id': 139, 'bbox': [290.69, 218.0, 61.83, 98.48], 'category_id': 62, 'id': 104368}, {'segmentation': [[436.06, 304.37, 443.37, 300.71, 436.97, 261.4, 439.71, 245.86, 437.88, 223.92, 415.94, 223.01, 413.2, 244.95, 415.03, 258.66, 416.86, 268.72, 415.03, 289.74, 419.6, 280.6, 423.26, 263.23, 434.23, 265.98]], 'area': 1289.3734500000014, 'iscrowd': 0, 'image_id': 139, 'bbox': [413.2, 223.01, 30.17, 81.36], 'category_id': 62, 'id': 105328}, {'segmentation': [[317.4, 219.24, 319.8, 230.83, 338.98, 230.03, 338.58, 222.44, 338.58, 221.24, 336.58, 219.64, 328.19, 220.44]], 'area': 210.14820000000023, 'iscrowd': 0, 'image_id': 139, 'bbox': [317.4, 219.24, 21.58, 11.59], 'category_id': 62, 'id': 110334}, {'segmentation': [[428.19, 219.47, 430.94, 209.57, 430.39, 210.12, 421.32, 216.17, 412.8, 217.27, 413.9, 214.24, 422.42, 211.22, 429.29, 201.6, 430.67, 181.8, 430.12, 175.2, 427.09, 168.06, 426.27, 164.21, 430.94, 159.26, 440.29, 157.61, 446.06, 163.93, 448.53, 168.06, 448.53, 173.01, 449.08, 174.93, 454.03, 185.1, 455.41, 188.4, 458.43, 195.0, 460.08, 210.94, 462.28, 226.61, 460.91, 233.76, 454.31, 234.04, 460.08, 256.85, 462.56, 268.13, 465.58, 290.67, 465.85, 293.14, 463.38, 295.62, 452.66, 295.34, 448.26, 294.52, 443.59, 282.7, 446.06, 235.14, 446.34, 230.19, 438.09, 232.39, 438.09, 221.67, 434.24, 221.12, 427.09, 219.74]], 'area': 2913.1103999999987, 'iscrowd': 0, 'image_id': 139, 'bbox': [412.8, 157.61, 53.05, 138.01], 'category_id': 1, 'id': 230831}, {'segmentation': [[384.98, 206.58, 384.43, 199.98, 385.25, 193.66, 385.25, 190.08, 387.18, 185.13, 387.18, 182.93, 386.08, 181.01, 385.25, 178.81, 385.25, 175.79, 388.0, 172.76, 394.88, 172.21, 398.72, 173.31, 399.27, 176.06, 399.55, 183.48, 397.9, 185.68, 395.15, 188.98, 396.8, 193.38, 398.45, 194.48, 399.0, 205.75, 395.43, 207.95, 388.83, 206.03]], 'area': 435.1449499999997, 'iscrowd': 0, 'image_id': 139, 'bbox': [384.43, 172.21, 15.12, 35.74], 'category_id': 1, 'id': 
233201}, {'segmentation': [[513.6, 205.75, 526.04, 206.52, 526.96, 208.82, 526.96, 221.72, 512.22, 221.72, 513.6, 205.9]], 'area': 217.71919999999997, 'iscrowd': 0, 'image_id': 139, 'bbox': [512.22, 205.75, 14.74, 15.97], 'category_id': 78, 'id': 1640282}, {'segmentation': [[493.1, 282.65, 493.73, 174.34, 498.72, 174.34, 504.34, 175.27, 508.09, 176.21, 511.83, 178.08, 513.39, 179.33, 513.08, 280.46, 500.28, 281.09, 494.35, 281.71]], 'area': 2089.9747999999986, 'iscrowd': 0, 'image_id': 139, 'bbox': [493.1, 174.34, 20.29, 108.31], 'category_id': 82, 'id': 1647285}, {'segmentation': [[611.35, 349.51, 604.77, 305.89, 613.14, 307.99, 619.11, 351.6]], 'area': 338.60884999999973, 'iscrowd': 0, 'image_id': 139, 'bbox': [604.77, 305.89, 14.34, 45.71], 'category_id': 84, 'id': 1648594}, {'segmentation': [[613.24, 308.24, 620.68, 308.79, 626.12, 354.68, 618.86, 352.32]], 'area': 322.5935999999982, 'iscrowd': 0, 'image_id': 139, 'bbox': [613.24, 308.24, 12.88, 46.44], 'category_id': 84, 'id': 1654394}, {'segmentation': [[454.29, 121.35, 448.71, 125.07, 447.77, 136.25, 451.5, 140.9, 455.92, 143.0, 461.04, 138.34, 461.74, 128.33, 456.39, 121.12]], 'area': 225.6642000000005, 'iscrowd': 0, 'image_id': 139, 'bbox': [447.77, 121.12, 13.97, 21.88], 'category_id': 85, 'id': 1666628}, {'segmentation': [[553.14, 392.76, 551.33, 370.57, 549.06, 360.15, 571.7, 314.42, 574.42, 309.43, 585.74, 316.23, 579.4, 323.93, 578.04, 331.17, 577.59, 334.34, 578.95, 342.49, 581.21, 351.55, 585.29, 370.57, 584.83, 389.13, 580.31, 399.1, 563.55, 399.1, 554.95, 394.57]], 'area': 2171.6188500000007, 'iscrowd': 0, 'image_id': 139, 'bbox': [549.06, 309.43, 36.68, 89.67], 'category_id': 86, 'id': 1667817}, {'segmentation': [[361.37, 229.69, 361.56, 226.09, 360.42, 220.02, 359.29, 213.39, 358.72, 208.84, 353.41, 209.22, 353.22, 211.31, 353.41, 216.23, 352.65, 220.21, 350.76, 228.55, 351.71, 231.39, 360.8, 231.39, 362.13, 228.74]], 'area': 178.18510000000012, 'iscrowd': 0, 'image_id': 139, 'bbox': [350.76, 
208.84, 11.37, 22.55], 'category_id': 86, 'id': 1669970}, {'segmentation': [[413.7, 220.47, 412.25, 231.06, 419.96, 231.54, 421.88, 219.02]], 'area': 90.98724999999988, 'iscrowd': 0, 'image_id': 139, 'bbox': [412.25, 219.02, 9.63, 12.52], 'category_id': 62, 'id': 1941808}, {'segmentation': [[242.95, 212.06, 241.24, 199.54, 254.32, 194.99, 255.46, 200.68, 253.18, 212.62]], 'area': 189.56010000000012, 'iscrowd': 0, 'image_id': 139, 'bbox': [241.24, 194.99, 14.22, 17.63], 'category_id': 86, 'id': 2146194}, {'segmentation': [[339.52, 201.72, 336.79, 216.23, 346.35, 214.69, 346.52, 199.5]], 'area': 120.23200000000004, 'iscrowd': 0, 'image_id': 139, 'bbox': [336.79, 199.5, 9.73, 16.73], 'category_id': 86, 'id': 2146548}, {'segmentation': [[321.21, 231.22, 387.48, 232.09, 385.95, 244.95, 362.85, 245.6, 361.32, 266.53, 361.98, 310.12, 362.41, 319.5, 353.47, 320.15, 350.2, 318.41, 350.86, 277.65, 350.64, 272.63, 351.29, 246.69, 322.96, 245.17], [411.46, 232.31, 418.87, 231.22, 415.82, 248.87, 409.93, 248.87], [436.96, 232.31, 446.77, 232.09, 446.77, 235.79, 446.12, 266.53, 445.46, 303.15, 441.54, 302.93, 440.23, 296.17, 438.92, 289.85, 438.92, 244.95, 436.09, 244.29]], 'area': 2362.4897499999984, 'iscrowd': 0, 'image_id': 139, 'bbox': [321.21, 231.22, 125.56, 88.93], 'category_id': 67, 'id': 2204286}])

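- Each sample is returned as a tuple:

- (image, annotations)

Set a breakpoint to inspect the sample. The field we care about here is bbox.

- Once the bbox is extracted from each annotation, it can be drawn on the image.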
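To make the tuple structure concrete, here is a minimal sketch that stands in for the second tuple element (the annotation list). The two entries are copied from the printed output above with `segmentation` left out for brevity. The helper name `summarize_annotations` is made up for illustration and is not part of torchvision.

```python
# Stand-in for the annotation list returned as the second tuple element.
# Values are copied from the printed output; 'segmentation' is omitted for brevity.
annotations = [
    {"area": 531.8071000000001, "iscrowd": 0, "image_id": 139,
     "bbox": [236.98, 142.51, 24.7, 69.5], "category_id": 64, "id": 26547},
    {"area": 13244.657700000002, "iscrowd": 0, "image_id": 139,
     "bbox": [7.03, 167.76, 149.32, 94.87], "category_id": 72, "id": 34646},
]


def summarize_annotations(annotations):
    """Return (annotation id, category id, bbox) for every object in one image."""
    return [(ann["id"], ann["category_id"], ann["bbox"]) for ann in annotations]


for ann_id, category_id, bbox in summarize_annotations(annotations):
    print(f"annotation {ann_id}: category {category_id}, bbox {bbox}")
```

Every annotation is a plain dict, so the same loop should work on the list taken from a real sample.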

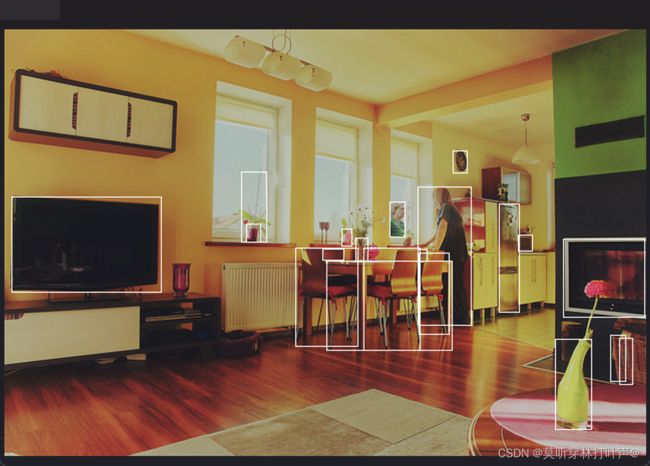
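A COCO bbox is stored as [x_min, y_min, width, height], while PIL's rectangle call takes two corner points. The conversion can be sketched as below. The function name `xywh_to_xyxy` is an illustrative choice, and the input values are the first bbox from the printed output.

```python
def xywh_to_xyxy(bbox):
    """Convert a COCO bbox [x_min, y_min, width, height] to (x_min, y_min, x_max, y_max)."""
    x_min, y_min, width, height = bbox
    return (x_min, y_min, x_min + width, y_min + height)


# First bbox from the printed output.
corners = xywh_to_xyxy([236.98, 142.51, 24.7, 69.5])
print(corners)
```

The bottom-right corner comes out at roughly (261.68, 212.01), which matches the largest x and y values in that object's segmentation polygon.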

Full code:

import torchvision

from PIL import ImageDraw

COCO_dataset=torchvision.datasets.CocoDetection(root="D:\\目标检测数据集\\val2017\\val2017",

annFile="D:\\目标检测数据集\\annotations_trainval2017\\annotations\\instances_val2017.json",

)

image,info=COCO_dataset[0]

image_handler=ImageDraw.Draw(image)

# Loop over info and draw every bbox; a COCO bbox is [x_min, y_min, width, height]

for annotation in info:

    x_min,y_min,width,height=annotation['bbox']

    image_handler.rectangle(((x_min,y_min),(x_min+width,y_min+height)))

image.show()
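The drawn boxes carry no class names. One way to add them is to map each `category_id` to a name using the "categories" list of the annotation file. The sketch below assumes a hand-written three-entry excerpt of that list (a real file lists every category), and the helper names `build_id_to_name` and `label_for` are made up for illustration.

```python
# Hand-written excerpt of the "categories" list of a COCO annotation file.
# A real file contains one entry per category.
categories = [
    {"id": 1, "name": "person"},
    {"id": 62, "name": "chair"},
    {"id": 72, "name": "tv"},
]


def build_id_to_name(categories):
    """Map category id -> category name."""
    return {cat["id"]: cat["name"] for cat in categories}


def label_for(annotation, id_to_name):
    """Return the category name of an annotation, or a fallback for unknown ids."""
    category_id = annotation["category_id"]
    return id_to_name.get(category_id, f"id {category_id}")


id_to_name = build_id_to_name(categories)
print(label_for({"category_id": 62, "bbox": [358.98, 218.05, 56.0, 102.83]}, id_to_name))
```

Inside the drawing loop, the label could then be written next to the box with `image_handler.text((x_min, y_min), label)`.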

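2. How to load your own COCO-style dataset with PyTorch

- The loading code is exactly the same as above; only the paths change.

- What PyTorch provides is just an empty shell for the COCO format, so you can point it at any dataset in that format.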

import torchvision

from PIL import ImageDraw

COCO_dataset=torchvision.datasets.CocoDetection(root="D:\\目标检测标注\\images_test",

annFile="D:\\目标检测标注\\coco1-4\\annotations\\instances_default.json",

)

image,info=COCO_dataset[1]

image_handler=ImageDraw.Draw(image)

# Loop over info and draw every bbox; a COCO bbox is [x_min, y_min, width, height]

for annotation in info:

    x_min,y_min,width,height=annotation['bbox']

    image_handler.rectangle(((x_min,y_min),(x_min+width,y_min+height)))

image.show()
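Before pointing the loader at your own annotation file, it can help to check that the file has the structure the COCO format expects. The sketch below is one possible sanity check, not an official validator. The function name `check_coco_file` and the demo data are made up for illustration, and only three top-level keys and two per-annotation fields are checked.

```python
import json
import os
import tempfile

REQUIRED_KEYS = ("images", "annotations", "categories")


def check_coco_file(path):
    """Return a list of problems found in a COCO-style annotation file (empty if none)."""
    with open(path, encoding="utf-8") as f:
        data = json.load(f)
    problems = [f"missing top-level key: {key}" for key in REQUIRED_KEYS if key not in data]
    image_ids = {img["id"] for img in data.get("images", [])}
    for ann in data.get("annotations", []):
        if ann.get("image_id") not in image_ids:
            problems.append(f"annotation {ann.get('id')} refers to an unknown image_id")
        if len(ann.get("bbox", [])) != 4:
            problems.append(f"annotation {ann.get('id')} has no 4-value bbox")
    return problems


# Tiny made-up annotation file, written to a temporary directory for the demo.
demo = {
    "images": [{"id": 1, "file_name": "0001.jpg", "width": 640, "height": 480}],
    "annotations": [{"id": 1, "image_id": 1, "category_id": 1,
                     "bbox": [10, 20, 30, 40], "area": 1200, "iscrowd": 0}],
    "categories": [{"id": 1, "name": "example"}],
}
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "instances_default.json")
    with open(demo_path, "w", encoding="utf-8") as f:
        json.dump(demo, f)
    demo_problems = check_coco_file(demo_path)

print(demo_problems)
```

An empty list means none of the checked problems were found; it does not guarantee that every image file exists under the `root` directory.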

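- See the previous post for how to build your own dataset.

If the code raises an error because pycocotools is missing, install it from the terminal: pip install pycocotools-windows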
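`CocoDetection` imports pycocotools when the dataset object is created, so a missing package only shows up at that point. A small check like the following, with the made-up helper name `pycocotools_available`, can report the problem earlier.

```python
import importlib.util


def pycocotools_available():
    """Return True if the pycocotools package can be found by the import system."""
    return importlib.util.find_spec("pycocotools") is not None


if not pycocotools_available():
    print("pycocotools is not installed; install it with pip before creating the dataset")
```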