TensorRT综述

TensorRT综述

- TensorRT综述

-

- 概述

- 环境搭建(基于docker环境)

- ONNX转TensorRT

- TensorRT 推理

-

- 加载EfficientNet TensorRT模型

- 分配GPU、将输入数据复制到GPU内存中、将输出数据从GPU内存复制到主机内存中

- 前处理

- 推理

- 后处理

- 完整代码:

TensorRT综述

概述

TensorRT是NVIDIA提供的用于深度学习推理的高性能推理引擎,它可以优化深度学习模型的推理速度和性能,以满足实时应用的需求。

TensorRT采用了多种优化技术,包括网络剪枝、量化、层融合等,可以自动优化网络结构以减少运行时间和内存占用。与常规的深度学习框架相比,TensorRT可以将模型的推理速度提高数倍,同时减少了计算和内存的开销。

TensorRT支持多种类型的神经网络,包括卷积神经网络、循环神经网络、生成对抗网络等,可以在多种平台上运行,包括GPU、CPU、嵌入式设备等。同时,TensorRT还提供了C++和Python API,可以方便地集成到现有的深度学习框架和应用程序中。

总之,TensorRT是一款优秀的深度学习推理引擎,可以大大提高深度学习模型的推理速度和性能,适用于许多实时应用场景。

环境搭建(基于docker环境)

Docker环境有以下几个优势:

- 跨平台:Docker容器可以在不同的操作系统上运行,包括Linux、Windows、Mac等,这使得应用程序可以更加容易地在不同的环境中部署和运行。

- 隔离性:Docker容器提供了一种隔离应用程序的方式,使得应用程序不会互相干扰,同时可以避免不同应用程序之间的依赖冲突。这样可以让应用程序更加稳定、可靠,也更容易管理。

- 可移植性:Docker容器是一种轻量级的虚拟化技术,可以将应用程序和其依赖项打包到一个镜像中,并在不同的环境中运行,这使得应用程序可以更加方便地进行部署、迁移和扩展。

- 快速部署:使用Docker容器可以极大地简化应用程序的部署过程,只需要将容器部署到目标环境中即可,无需安装依赖项、配置环境等繁琐的过程。

- 可伸缩性:Docker容器可以在多个主机上运行,并可以通过Docker Swarm等容器编排工具进行管理,从而实现应用程序的快速扩展和伸缩。

总之,Docker环境可以让应用程序更加轻量、可移植、可靠,同时也更容易部署和管理。这使得Docker成为了现代应用程序开发和部署的重要工具之一。

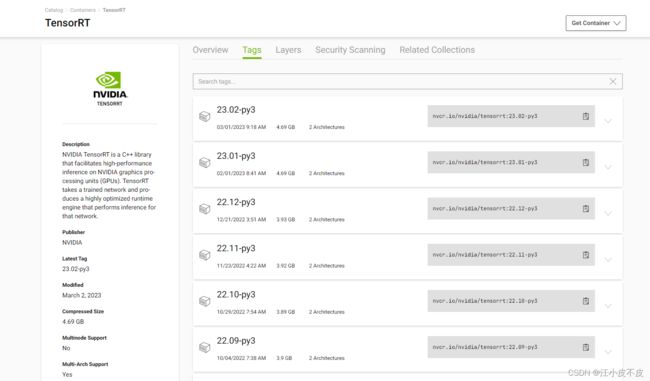

- 进入NVIDIA官网

https://catalog.ngc.nvidia.com/orgs/nvidia/containers/tensorrt/tags

- 选择需要的tensorrt版本

- 下载TensorRT docker镜像

docker pull nvcr.io/nvidia/tensorrt:21.07-py3

- 启动容器,并进入镜像

docker run --gpus all -it --rm -v /work/jxp/project/:/models nvcr.io/nvidia/tensorrt:21.07-py3

- 安装opencv

pip install opencv-python-headless -i https://pypi.doubanio.com/simple

注意:如果安装opencv-python,会报错ImportError: libGL.so.1: cannot open shared object file: No such file or directory

ONNX转TensorRT

- 全精度转换(FP32)

trtexec --onnx=efficientnet.onnx --explicitBatch --minShapes=input:1x3x224x224 --optShapes=input:16x3x224x224 --maxShapes=input:32x3x224x224 --saveEngine=efficientnet.plan --verbose

- 半精度转换(FP16)

trtexec --onnx=efficientnet.onnx --explicitBatch --minShapes=input:1x3x224x224 --optShapes=input:16x3x224x224 --maxShapes=input:32x3x224x224 --saveEngine=efficientnet.plan --fp16 --verbose

TensorRT 推理

TensorRT推理的流程一般包括以下几个步骤:

- 定义模型:首先需要定义深度学习模型,可以使用常见的深度学习框架(如TensorFlow、PyTorch等)或者使用C++或Python API直接定义模型。

- 优化模型:使用TensorRT进行模型优化,包括层融合、剪枝、量化、深度缩减等优化技术,以达到加速和减小模型大小的目的。

- 创建执行引擎:将优化后的模型转化为TensorRT执行引擎,以供后续推理使用。执行引擎包括计算图、内存分配、网络优化等信息,可以根据需要序列化到文件中。

- 加载数据:将输入数据加载到内存中,包括图像、音频、文本等数据。

- 前处理:对输入数据进行前处理,包括归一化、裁剪、缩放、格式转换等操作,以满足模型输入的要求。

- 推理计算:将前处理后的数据输入到TensorRT执行引擎中进行推理计算,得到输出结果。

- 后处理:对输出结果进行后处理,包括格式转换、解码、可视化等操作,以满足应用程序的需求。

- 输出结果:将后处理后的输出结果输出到文件、数据库、网络等存储介质中,以供后续应用程序使用。

总之,TensorRT推理的流程包括了模型定义、模型优化、执行引擎创建、数据加载、前处理、推理计算、后处理、输出结果等多个步骤,需要综合考虑各个步骤的性能、准确性、可靠性等因素,以满足应用程序的需求。

加载EfficientNet TensorRT模型

#---------------------------------------------------------------

# 加载EfficientNet TensorRT模型

#---------------------------------------------------------------

engine_file_path = "/path/to/efficientnet.engine"

with open(engine_file_path, "rb") as f, trt.Runtime(trt.Logger()) as runtime:

engine = runtime.deserialize_cuda_engine(f.read())

context = engine.create_execution_context()

分配GPU、将输入数据复制到GPU内存中、将输出数据从GPU内存复制到主机内存中

#---------------------------------------------------------------

# 分配GPU、将输入数据复制到GPU内存中、将输出数据从GPU内存复制到主机内存中

#---------------------------------------------------------------

def allocate_buffers(self, engine, max_batch_size=32):

inputs = []

outputs = []

bindings = []

stream = cuda.Stream()

for binding in engine:

dims = engine.get_binding_shape(binding)

# print(dims)

if dims[0] == -1:

assert(max_batch_size is not None)

dims[0] = max_batch_size # 动态batch_size适应

size = trt.volume(dims) * engine.max_batch_size

dtype = trt.nptype(engine.get_binding_dtype(binding))

host_mem = cuda.pagelocked_empty(size, dtype) # 开辟出一片显存

device_mem = cuda.mem_alloc(host_mem.nbytes)

bindings.append(int(device_mem))

if engine.binding_is_input(binding):

inputs.append(HostDeviceMem(host_mem, device_mem))

else:

outputs.append(HostDeviceMem(host_mem, device_mem))

return inputs, outputs, bindings, stream

前处理

#---------------------------------------------------------------

# 前处理

#---------------------------------------------------------------

def img_pre_process(self, img):

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

img = cv2.resize(img, (self.input_size[1], self.input_size[0]), interpolation=cv2.INTER_LINEAR)

try:

cv2.imwrite("test.jpg", img)

img = img.astype(np.float32)

img /= 255.0

img_mean = (0.485,0.456,0.406)

img_std = (0.229,0.224,0.225)

img = img - img_mean

img = (img/img_std)

except Exception as e:

print("img_pre_process===wrong!!!",e)

return img

def pre_process(self, img_list):

self.batch_size = len(img_list)

batch_img = []

self.batch_data = []

self.context.set_binding_shape(0, (self.batch_size, 3, self.input_size[0], self.input_size[1]))

self.inputs, self.outputs, self.bindings, self.stream = self.allocate_buffers(

self.engine, max_batch_size=self.batch_size) # 构建输入,输出,流指针

imgs_result = self.thread_pool.map(self.img_pre_process, img_list)

imgs_list_res = list(zip(imgs_result))

for r in imgs_list_res:

batch_img.append(r[0])

self.batch_data = np.stack(batch_img, axis=0)

return self.batch_data

推理

#---------------------------------------------------------------

# 推理

#---------------------------------------------------------------

def do_inference_v2(self, context, bindings, inputs, outputs, stream):

[cuda.memcpy_htod_async(inp.device, inp.host, stream) for inp in inputs]

context.execute_async_v2(bindings=bindings, stream_handle=stream.handle)

[cuda.memcpy_dtoh_async(out.host, out.device, stream) for out in outputs]

stream.synchronize()

return [out.host for out in outputs]

def model_predict(self):

np.copyto(self.inputs[0].host, self.batch_data.ravel())

result = self.do_inference_v2(self.context, self.bindings, self.inputs, self.outputs, self.stream)[0]

return result

后处理

#---------------------------------------------------------------

# 推理

#---------------------------------------------------------------

def post_process(self,result):

result = result.reshape(-1,4)

for r in result:

index = np.argmax(r, axis=0)

print("=======index===========",index)

完整代码:

import os

import time

import glob

import cv2

import numpy as np

import pycuda.autoinit

import pycuda.driver as cuda

import tensorrt as trt

import concurrent.futures

class HostDeviceMem(object):

def __init__(self, host_mem, device_mem):

self.host = host_mem

self.device = device_mem

def __str__(self):

return "Host:\n" + str(self.host) + "\nDevice:\n" + str(self.device)

def __repr__(self):

return self.__str__()

class TrtModel(object):

def __init__(self, engine_path, input_size):

try:

self.engine_path = engine_path

TRT_LOGGER = trt.Logger(trt.Logger.WARNING)

self.runtime = trt.Runtime(TRT_LOGGER)

self.engine = self.load_engine(self.runtime, self.engine_path)

self.context = self.engine.create_execution_context()

self.thread_pool = concurrent.futures.ThreadPoolExecutor()

self.input_size = input_size

except Exception as e:

print("TrtModel******init*********wrong")

# 加载EfficientNet TensorRT模型

def load_engine(self, trt_runtime, engine_path):

try:

with open(engine_path, "rb") as f:

engine_data = f.read()

engine = trt_runtime.deserialize_cuda_engine(engine_data)

except Exception as e:

print("load_engine filled")

return engine

# 分配GPU内存、将输入数据复制到GPU内存中、将输出数据从GPU内存复制到主机内存中

def allocate_buffers(self, engine, max_batch_size=32):

inputs = []

outputs = []

bindings = []

stream = cuda.Stream()

for binding in engine:

dims = engine.get_binding_shape(binding)

if dims[0] == -1:

assert(max_batch_size is not None)

dims[0] = max_batch_size # 动态batch_size适应

size = trt.volume(dims) * engine.max_batch_size

dtype = trt.nptype(engine.get_binding_dtype(binding))

host_mem = cuda.pagelocked_empty(size, dtype) # 开辟出一片显存

device_mem = cuda.mem_alloc(host_mem.nbytes)

bindings.append(int(device_mem))

if engine.binding_is_input(binding):

inputs.append(HostDeviceMem(host_mem, device_mem))

else:

outputs.append(HostDeviceMem(host_mem, device_mem))

return inputs, outputs, bindings, stream

#---------------------------------------------------------------

# 前处理

#---------------------------------------------------------------

def img_pre_process(self, img):

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

img = cv2.resize(img, (self.input_size[1], self.input_size[0]), interpolation=cv2.INTER_LINEAR)

try:

cv2.imwrite("test.jpg", img)

img = img.astype(np.float32)

img /= 255.0

img_mean = (0.485,0.456,0.406)

img_std = (0.229,0.224,0.225)

img = img - img_mean

img = (img/img_std)

except Exception as e:

print("img_pre_process===wrong!!!",e)

return img

def pre_process(self, img_list):

self.batch_size = len(img_list)

batch_img = []

self.batch_data = []

self.context.set_binding_shape(0, (self.batch_size, 3, self.input_size[0], self.input_size[1]))

self.inputs, self.outputs, self.bindings, self.stream = self.allocate_buffers(

self.engine, max_batch_size=self.batch_size) # 构建输入,输出,流指针

imgs_result = self.thread_pool.map(self.img_pre_process, img_list)

imgs_list_res = list(zip(imgs_result))

for r in imgs_list_res:

batch_img.append(r[0])

self.batch_data = np.stack(batch_img, axis=0)

return self.batch_data

#---------------------------------------------------------------

# 推理

#---------------------------------------------------------------

def do_inference_v2(self, context, bindings, inputs, outputs, stream):

[cuda.memcpy_htod_async(inp.device, inp.host, stream) for inp in inputs]

context.execute_async_v2(bindings=bindings, stream_handle=stream.handle)

[cuda.memcpy_dtoh_async(out.host, out.device, stream) for out in outputs]

stream.synchronize()

return [out.host for out in outputs]

def model_predict(self):

np.copyto(self.inputs[0].host, self.batch_data.ravel())

result = self.do_inference_v2(self.context, self.bindings, self.inputs, self.outputs, self.stream)[0]

return result

#---------------------------------------------------------------

# 后处理

#---------------------------------------------------------------

def post_process(self,result):

result = result.reshape(-1,4)

for r in result:

index = np.argmax(r, axis=0)

print("=======index===========",index)

if __name__ == "__main__":

img_path = 'data/'

engine_path = 'model.plan'

img_list = []

for img in os.listdir(img_path):

img_p = os.path.join(img_path,img)

img_ = cv2.imread(img_p)

img_list.append(img_)

input_size = [224,224]

trt_model = TrtModel(engine_path, input_size)

trt_model.pre_process(img_list)

result = trt_model.model_predict()

trt_model.post_process(result)