Hive: "cannot be cast to org.apache.hadoop.io.XXXWritable": fixing data type mismatches

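Importing data with Sqoop often leads to data type exceptions. They can also surface later in the ETL process, when queries fail because the column types do not match. To diagnose this, inspect the Parquet metadata and check whether the types stored in the Parquet files match the Hive table definition. Wherever they differ, fix the type mapping for the affected columns.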
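The comparison described above can be scripted. Below is a minimal Python sketch, assuming you have fetched the `schema.avsc` file that Sqoop writes next to the Parquet data (its location is shown in 2.1), for example with `hdfs dfs -cat`, and copied the column types from the Hive DDL by hand. `find_mismatches`, `avro_primitive` and the `AVRO_TO_HIVE` table are hypothetical helpers introduced for illustration, not part of Sqoop or Hive, and the table only covers primitive types.

```python
import json

# Assumed Avro-primitive -> Hive type table (primitive types only).
AVRO_TO_HIVE = {
    "int": "int",
    "long": "bigint",
    "float": "float",
    "double": "double",
    "boolean": "boolean",
    "string": "string",
}


def avro_primitive(field_type):
    """Unwrap a nullable union such as ["null", "int"] to "int"."""
    if isinstance(field_type, list):
        non_null = [t for t in field_type if t != "null"]
        return non_null[0] if len(non_null) == 1 else field_type
    return field_type


def find_mismatches(avsc_text, hive_columns):
    """List (column, avro_type, hive_type) where the Hive type does not match.

    avsc_text: contents of the schema.avsc written by Sqoop.
    hive_columns: {column_name: hive_type} copied from the Hive DDL.
    Columns missing from hive_columns are skipped.
    """
    schema = json.loads(avsc_text)
    mismatches = []
    for field in schema["fields"]:
        name = field["name"]
        if name not in hive_columns:
            continue
        avro_type = avro_primitive(field["type"])
        # Non-string types (multi-branch unions, logical types) count as unknown.
        expected = AVRO_TO_HIVE.get(avro_type) if isinstance(avro_type, str) else None
        declared = hive_columns[name].lower()
        if expected != declared:
            mismatches.append((name, avro_type, declared))
    return mismatches


# Excerpt of the schema from 2.1 and the matching Hive columns from 1.2.
avsc = """{"type": "record", "name": "AutoGeneratedSchema", "fields": [
  {"name": "count", "type": ["null", "long"]},
  {"name": "update_type", "type": ["null", "boolean"]},
  {"name": "status", "type": ["null", "boolean"]}]}"""
hive = {"count": "bigint", "update_type": "int", "status": "int"}

for column, avro_type, hive_type in find_mismatches(avsc, hive):
    print(f"{column}: file has {avro_type}, Hive declares {hive_type}")
```

Run against the full schema in 2.1 and the DDL in 1.2, this check would flag `update_type`, `system_recommend` and `status` (boolean in the file, int in Hive), while `count` (long vs bigint) matches.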

I. Importing data with Sqoop

1.1 MySQL table

```sql
CREATE TABLE `scrm_user_crowd` (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `zb_id` int(11) DEFAULT NULL COMMENT 'live stream ID',
  `user_id` varchar(50) DEFAULT NULL COMMENT 'user ID',
  `name` varchar(255) DEFAULT NULL COMMENT 'crowd name',
  `definition` varchar(255) DEFAULT NULL COMMENT 'crowd definition',
  `count` bigint(20) DEFAULT NULL COMMENT 'crowd size',
  `update_type` tinyint(4) DEFAULT NULL COMMENT 'update mode: 1 = manual, 2 = scheduled',
  `create_time` datetime DEFAULT NULL COMMENT 'creation time',
  `update_time` datetime DEFAULT NULL COMMENT 'update time',
  `tag_query_json` varchar(3000) DEFAULT NULL COMMENT 'tag query JSON',
  `cos_url` varchar(255) DEFAULT NULL COMMENT 'COS URL',
  `cos_key` varchar(255) DEFAULT NULL COMMENT 'key in COS',
  `system_recommend` tinyint(4) unsigned DEFAULT '1' COMMENT 'system-recommended: 1 = custom crowd, 2 = system-recommended',
  `status` tinyint(4) DEFAULT '1' COMMENT 'record status: 1 = active, 2 = deleted',
  `finished` tinyint(1) DEFAULT '1' COMMENT 'computation finished: 1 = in progress, 2 = done',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB AUTO_INCREMENT=434 DEFAULT CHARSET=utf8mb4 COMMENT='user crowd table';
```

1.2 Hive table

```sql
drop table if exists ods.ods_hive_mysql_scrm_scrm_user_crowd_di;

create external table if not exists ods.ods_hive_mysql_scrm_scrm_user_crowd_di(
  `id` int comment 'auto-increment primary key',
  `zb_id` int comment 'live stream ID',
  `user_id` string comment 'user ID',
  `name` string comment 'crowd name',
  `definition` string comment 'crowd definition',
  `count` bigint comment 'crowd size',
  `update_type` int comment 'update mode',
  `create_time` string comment 'creation time',
  `update_time` string comment 'update time',
  `tag_query_json` string comment 'tag query JSON',
  `cos_url` string comment 'COS URL',
  `cos_key` string comment 'key in COS',
  `system_recommend` int comment 'system-recommended: 1 = custom crowd, 2 = system-recommended',
  `status` int comment 'record status: 1 = active, 2 = deleted'
) comment 'user crowd table (incremental)'
partitioned by (dt string)
stored as parquet
location '/user/hive/warehouse/ods.db/ods_hive_mysql_scrm_scrm_user_crowd_di';
```

1.3 Import method

```bash
sqoop import \
  --connect jdbc:mysql://localhost:3306/scrm?zeroDateTimeBehavior=convertToNull\&dontTrackOpenResources=true\&defaultFetchSize=1000\&useCursorFetch=true \
  --username root \
  --password 123456 \
  --null-string '\\N' \
  --null-non-string '\\N' \
  --query '
    SELECT id,zb_id,user_id,name,definition,
           count,update_type,create_time,update_time,tag_query_json,
           cos_url,cos_key,system_recommend,status
    FROM scrm.scrm_user_crowd
    WHERE (create_time>="'$vDay'" or update_time>="'$vDay'") AND $CONDITIONS' \
  --target-dir /usr/hive/warehouse/ods.db/ods_hive_mysql_scrm_scrm_user_crowd_di/$vDay \
  --append \
  --num-mappers 1 \
  --split-by id \
  --as-parquetfile \
  --map-column-java create_time=String,update_time=String \
  --map-column-hive create_time=String,update_time=String
```

The parentheses around the OR condition matter because AND binds more tightly than OR in SQL: without them, the `$CONDITIONS` placeholder that Sqoop replaces with each mapper's split predicate would only apply to the `update_time` branch. With `--num-mappers 1` the effect is hidden, but it shows up as soon as more mappers are used.

1.4 Querying the data

```text
hive> SELECT * FROM ods.ods_hive_mysql_scrm_scrm_user_crowd_di WHERE dt = '2020-12-10';
OK
SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".
SLF4J: Defaulting to no-operation (NOP) logger implementation
SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.
Failed with exception java.io.IOException:org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.ClassCastException: org.apache.hadoop.io.LongWritable cannot be cast to org.apache.hadoop.io.IntWritable
Time taken: 2.654 seconds
hive> exit;
```

Note: the query fails with a type-cast exception (ClassCastException).
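The exception text says which side is which: in `X cannot be cast to Y`, X is the runtime class of the value Hive read from the Parquet file and Y is the class required by the column type declared in the table. A minimal Python sketch that turns such a message into Hive type names; `explain_cast_error` and the `WRITABLE_TO_HIVE` table are hypothetical helpers that only cover a few primitive Writable classes.

```python
import re

# Hive primitive type associated with each Hadoop Writable class (subset).
WRITABLE_TO_HIVE = {
    "IntWritable": "int",
    "LongWritable": "bigint",
    "BooleanWritable": "boolean",
    "FloatWritable": "float",
}

# Matches both "a.b.XWritable cannot be cast to a.b.YWritable" and the newer
# "class a.b.XWritable cannot be cast to class a.b.YWritable" wording.
CAST_RE = re.compile(r"(\w+Writable) cannot be cast to (?:class )?(?:\w+\.)*(\w+Writable)")


def explain_cast_error(message):
    """Return (type_in_file, type_declared_in_hive), or None if no match."""
    match = CAST_RE.search(message)
    if match is None:
        return None
    found, expected = match.groups()
    return (WRITABLE_TO_HIVE.get(found, found), WRITABLE_TO_HIVE.get(expected, expected))


msg = ("java.lang.ClassCastException: org.apache.hadoop.io.LongWritable "
       "cannot be cast to org.apache.hadoop.io.IntWritable")
found, declared = explain_cast_error(msg)
print(f"file holds {found}, table declares {declared}")
# file holds bigint, table declares int
```

For the error above, this points at a column that is stored as a 64-bit value in the file but declared `int` in Hive.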

II. Fix

2.1 Data types after the import

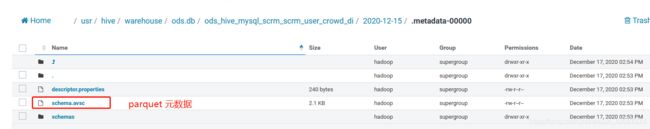

The schema that Sqoop generated is stored in /usr/hive/warehouse/ods.db/ods_hive_mysql_scrm_scrm_user_crowd_di/2020-12-15/.metadata-00000/schemas/schema.avsc:

```json
{
  "type" : "record",
  "name" : "AutoGeneratedSchema",
  "doc" : "Sqoop import of QueryResult",
  "fields" : [ {
    "name" : "id",
    "type" : [ "null", "int" ],
    "default" : null,
    "columnName" : "id",
    "sqlType" : "4"
  }, {
    "name" : "zb_id",
    "type" : [ "null", "int" ],
    "default" : null,
    "columnName" : "zb_id",
    "sqlType" : "4"
  }, {
    "name" : "user_id",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "user_id",
    "sqlType" : "12"
  }, {
    "name" : "name",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "name",
    "sqlType" : "12"
  }, {
    "name" : "definition",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "definition",
    "sqlType" : "12"
  }, {
    "name" : "count",
    "type" : [ "null", "long" ],
    "default" : null,
    "columnName" : "count",
    "sqlType" : "-5"
  }, {
    "name" : "update_type",
    "type" : [ "null", "boolean" ],
    "default" : null,
    "columnName" : "update_type",
    "sqlType" : "-7"
  }, {
    "name" : "create_time",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "create_time",
    "sqlType" : "93"
  }, {
    "name" : "update_time",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "update_time",
    "sqlType" : "93"
  }, {
    "name" : "tag_query_json",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "tag_query_json",
    "sqlType" : "-1"
  }, {
    "name" : "cos_url",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "cos_url",
    "sqlType" : "12"
  }, {
    "name" : "cos_key",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "cos_key",
    "sqlType" : "12"
  }, {
    "name" : "system_recommend",
    "type" : [ "null", "boolean" ],
    "default" : null,
    "columnName" : "system_recommend",
    "sqlType" : "-7"
  }, {
    "name" : "status",
    "type" : [ "null", "boolean" ],
    "default" : null,
    "columnName" : "status",
    "sqlType" : "-7"
  } ],
  "tableName" : "QueryResult"
}
```

2.2 Changing the type mapping

```bash
sqoop import \
  --connect jdbc:mysql://localhost:3306/scrm?zeroDateTimeBehavior=convertToNull\&dontTrackOpenResources=true\&defaultFetchSize=1000\&useCursorFetch=true \
  --username root \
  --password 123456 \
  --null-string '\\N' \
  --null-non-string '\\N' \
  --query '
    SELECT id,zb_id,user_id,name,definition,
           count,update_type,create_time,update_time,tag_query_json,
           cos_url,cos_key,system_recommend,status
    FROM scrm.scrm_user_crowd
    WHERE (create_time>="'$vDay'" or update_time>="'$vDay'") AND $CONDITIONS' \
  --target-dir /usr/hive/warehouse/ods.db/ods_hive_mysql_scrm_scrm_user_crowd_di/$vDay \
  --append \
  --num-mappers 1 \
  --split-by id \
  --as-parquetfile \
  --map-column-java create_time=String,update_time=String,count=Integer,update_type=Integer,system_recommend=Integer,status=Integer \
  --map-column-hive create_time=String,update_time=String,count=Integer,update_type=Integer,system_recommend=Integer,status=Integer
```

2.3 Checking the data types again

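Note: the problematic types have been converted. In the schema below, count, update_type, system_recommend and status are now int: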

```json
{
  "type" : "record",
  "name" : "AutoGeneratedSchema",
  "doc" : "Sqoop import of QueryResult",
  "fields" : [ {
    "name" : "id",
    "type" : [ "null", "int" ],
    "default" : null,
    "columnName" : "id",
    "sqlType" : "4"
  }, {
    "name" : "zb_id",
    "type" : [ "null", "int" ],
    "default" : null,
    "columnName" : "zb_id",
    "sqlType" : "4"
  }, {
    "name" : "user_id",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "user_id",
    "sqlType" : "12"
  }, {
    "name" : "name",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "name",
    "sqlType" : "12"
  }, {
    "name" : "definition",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "definition",
    "sqlType" : "12"
  }, {
    "name" : "count",
    "type" : [ "null", "int" ],
    "default" : null,
    "columnName" : "count",
    "sqlType" : "-5"
  }, {
    "name" : "update_type",
    "type" : [ "null", "int" ],
    "default" : null,
    "columnName" : "update_type",
    "sqlType" : "-7"
  }, {
    "name" : "create_time",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "create_time",
    "sqlType" : "93"
  }, {
    "name" : "update_time",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "update_time",
    "sqlType" : "93"
  }, {
    "name" : "tag_query_json",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "tag_query_json",
    "sqlType" : "-1"
  }, {
    "name" : "cos_url",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "cos_url",
    "sqlType" : "12"
  }, {
    "name" : "cos_key",
    "type" : [ "null", "string" ],
    "default" : null,
    "columnName" : "cos_key",
    "sqlType" : "12"
  }, {
    "name" : "system_recommend",
    "type" : [ "null", "int" ],
    "default" : null,
    "columnName" : "system_recommend",
    "sqlType" : "-7"
  }, {
    "name" : "status",
    "type" : [ "null", "int" ],
    "default" : null,
    "columnName" : "status",
    "sqlType" : "-7"
  } ],
  "tableName" : "QueryResult"
}
```

2.4 Querying again

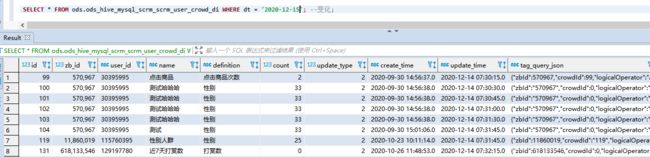
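The overrides in 2.2 have to be kept in step with the Hive DDL by hand. As an alternative sketch, assuming the Hive DDL is treated as the source of truth, the `--map-column-java` value can be derived from the Hive column types. `map_column_java` and `HIVE_TO_JAVA` are hypothetical helpers, and the Java type names assume Sqoop accepts `Long`, `Boolean`, `Float` and `Double` the same way it accepts the `String` and `Integer` used above.

```python
# Hypothetical Hive-type -> Sqoop --map-column-java table (primitive types only).
HIVE_TO_JAVA = {
    "int": "Integer",
    "bigint": "Long",
    "float": "Float",
    "double": "Double",
    "boolean": "Boolean",
    "string": "String",
}


def map_column_java(hive_columns, columns):
    """Build the --map-column-java value for `columns` from their Hive types."""
    parts = []
    for column in columns:
        hive_type = hive_columns[column].lower()
        if hive_type not in HIVE_TO_JAVA:
            raise ValueError(f"no Java type known for {column} ({hive_type})")
        parts.append(f"{column}={HIVE_TO_JAVA[hive_type]}")
    return ",".join(parts)


# Types copied from the Hive DDL in 1.2 for the columns overridden in 2.2.
hive = {
    "create_time": "string",
    "update_time": "string",
    "count": "bigint",
    "update_type": "int",
    "system_recommend": "int",
    "status": "int",
}
print("--map-column-java " + map_column_java(hive, list(hive)))
```

Derived this way, `count` becomes `Long`, matching its bigint column, rather than the `Integer` override used in 2.2; the other columns come out the same.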

III. Other notes

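1. MySQL BIGINT columns arrive as LongWritable in MapReduce, which is awkward to handle on the Hive side; I suggest converting them to String and converting back later. Receiving the bigint column with IntWritable, as done above, is also a problem: values above 2,147,483,647 do not fit in an int.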

2. MySQL TINYINT columns may be turned into BooleanWritable in MapReduce, so watch out for them as well.
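The second point can be checked in the schema files themselves: the `sqlType` attribute holds the `java.sql.Types` code the JDBC driver reported for each column. In the schema from 2.1, `update_type`, `system_recommend` and `status` all carry `-7` (BIT) and were written as boolean. A minimal Python sketch that decodes these codes; `JDBC_TYPES` is a subset of the `java.sql.Types` constants and `describe_fields` is a hypothetical helper.

```python
import json

# Subset of java.sql.Types constants, keyed by the code stored in "sqlType".
JDBC_TYPES = {
    -7: "BIT", -6: "TINYINT", -5: "BIGINT", -1: "LONGVARCHAR",
    4: "INTEGER", 5: "SMALLINT", 12: "VARCHAR", 16: "BOOLEAN",
    91: "DATE", 92: "TIME", 93: "TIMESTAMP",
}


def describe_fields(avsc_text):
    """Return (column, jdbc_type, avro_type) for every field of a Sqoop schema."""
    rows = []
    for field in json.loads(avsc_text)["fields"]:
        code = int(field["sqlType"])
        jdbc_type = JDBC_TYPES.get(code, f"UNKNOWN({code})")
        # Take the non-null branch of a ["null", T] union.
        avro_types = field["type"] if isinstance(field["type"], list) else [field["type"]]
        avro_type = next((t for t in avro_types if t != "null"), "null")
        rows.append((field["columnName"], jdbc_type, avro_type))
    return rows


# Two fields excerpted from the schema in 2.1.
avsc = """{"fields": [
  {"name": "count", "type": ["null", "long"], "columnName": "count", "sqlType": "-5"},
  {"name": "status", "type": ["null", "boolean"], "columnName": "status", "sqlType": "-7"}]}"""
for column, jdbc_type, avro_type in describe_fields(avsc):
    print(f"{column:<8}{jdbc_type:<8}-> {avro_type}")
```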