How a Complete Beginner Can Run Their First BERT Text Classification Code

诸神缄默不语 - personal CSDN blog index

This post was written on March 20, 2023. There is no guarantee that the code and steps below will still work in the future.

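This post focuses on Chinese short-text classification. For other scenarios, you only need to swap in a different pretrained model.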

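The overall training procedure: split the data into a training set, a validation set, and a test set. Train for 16 epochs on the training set, evaluating on the validation set after each epoch. Finally, take the checkpoint from the epoch with the highest validation score (macro-F1 in the code below), run it on the test set, and compare its predictions with the true labels to obtain the model's final metrics.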
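The model-selection rule described above (keep the checkpoint from the epoch with the best validation score) can be sketched without any deep-learning library. In this toy version, which only illustrates the idea and is not part of the script, a plain dict stands in for `model.state_dict()` and a list of made-up validation scores stands in for real evaluation; the function name `select_best_epoch` is hypothetical. The `deepcopy` matters because training keeps modifying the live weights in place, so storing a reference instead of a copy would silently track the latest epoch.

```python
from copy import deepcopy

def select_best_epoch(valid_scores):
    """Return (best_epoch, best_score, best_state) for a toy training run.

    valid_scores: one made-up validation score per epoch.
    The "model state" is a plain dict standing in for model.state_dict().
    """
    state = {"step": 0}          # toy stand-in for the model's weights
    best_score = float("-inf")   # any real score beats this
    best_epoch, best_state = None, None
    for epoch, score in enumerate(valid_scores):
        state["step"] += 1       # "training" mutates the live state in place
        if score > best_score:
            # snapshot a copy; keeping a reference would follow later epochs
            best_state = deepcopy(state)
            best_score, best_epoch = score, epoch
    return best_epoch, best_score, best_state

epoch, score, state = select_best_epoch([0.71, 0.78, 0.76, 0.80, 0.79])
print(epoch, score, state)  # 3 0.8 {'step': 4}
```

The final live state has `step == 5`, but the saved snapshot still holds the state from epoch 3, which is exactly what the script relies on when it reloads `best_model` before testing.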

Contents

- 1. Prepare the dataset

- 2. Install the environment and download the required files

- 3. Run the code

- 4. FAQ

1. Prepare the dataset

The data used here is https://raw.githubusercontent.com/SophonPlus/ChineseNlpCorpus/master/datasets/ChnSentiCorp_htl_all/ChnSentiCorp_htl_all.csv

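I won't go through the processing of this particular dataset here; for that, see my post "Fine-tuning pretrained models with huggingface.transformers on text classification tasks (single-task and multi-task settings)". My main goal here is to put the dataset into a clear, easy-to-read format so that readers can swap in their own data.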
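If your own data is a single labeled CSV file, one minimal way to produce the three files the script expects is a shuffled split. This is a sketch under stated assumptions: the input has a `label,review` header, and the 8:1:1 ratio, the seed, and the helper names `split_rows`/`write_csv` are illustrative choices, not something the original post specifies.

```python
import csv
import io
import random

def split_rows(rows, ratios=(0.8, 0.1, 0.1), seed=42):
    """Shuffle rows and cut them into (train, valid, test) lists by ratio."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)       # fixed seed -> same split every run
    n_train = int(len(rows) * ratios[0])
    n_valid = int(len(rows) * ratios[1])
    return (rows[:n_train],
            rows[n_train:n_train + n_valid],
            rows[n_train + n_valid:])       # whatever is left goes to test

def write_csv(path, header, rows):
    """Write a header row plus data rows as a UTF-8 CSV file."""
    with open(path, "w", encoding="utf-8", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)

if __name__ == "__main__":
    # Made-up in-memory data standing in for your own label,review CSV file
    source = io.StringIO("label,review\n" + "".join(f"{i % 2},text {i}\n" for i in range(10)))
    reader = csv.reader(source)
    header = next(reader)
    train, valid, test = split_rows(reader)
    print(len(train), len(valid), len(test))  # 8 1 1
```

With a real file, you would open it with `open(path, encoding='utf-8', newline='')` instead of the `StringIO`, then call `write_csv('chn_train.csv', header, train)` and likewise for the validation and test parts. The split is not stratified, so for small or imbalanced datasets you may prefer to split each class separately.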

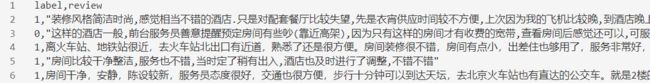

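All the datasets are CSV files. The label column holds the label (numeric here; if your labels are text, map them to numbers first), and the review column holds the text to be classified. The code reads the columns by position, so label must be the first column and review the second, and the first row is treated as a header and skipped.

Example: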
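If your labels are text, or your file uses a different delimiter, a small conversion step can produce the `label,review` layout with integer labels in the first column. This is a sketch: the tab-separated input, its `tag`/`text` column names, and the made-up rows are assumptions about a hypothetical source file, and `to_numeric_labels`/`write_label_review` are hypothetical helper names.

```python
import csv
import io

def to_numeric_labels(src, delimiter=","):
    """Read (text_label, text) rows and return (label_to_id, rows with int labels).

    src: any file-like object; pass delimiter='\t' for tab-separated files.
    Label ids are assigned in sorted order so they are stable across runs.
    """
    reader = csv.reader(src, delimiter=delimiter)
    next(reader)                                   # skip the header row
    raw = [(row[0], row[1]) for row in reader]
    label_to_id = {lab: i for i, lab in enumerate(sorted({lab for lab, _ in raw}))}
    return label_to_id, [[label_to_id[lab], text] for lab, text in raw]

def write_label_review(dst, rows):
    """Write rows in the label,review layout the training script reads."""
    writer = csv.writer(dst)
    writer.writerow(["label", "review"])           # header the script skips
    writer.writerows(rows)

# Made-up tab-separated input with text labels
src = io.StringIO("tag\ttext\npos\t房间很干净\nneg\t服务太差\npos\t早餐不错\n")
mapping, rows = to_numeric_labels(src, delimiter="\t")
out = io.StringIO()
write_label_review(out, rows)
print(mapping)  # {'neg': 0, 'pos': 1}
print(out.getvalue())
```

For real files, open them with `open(path, encoding=..., newline='')` (for example `encoding='gbk'` if the file is not UTF-8) and pass the file objects in place of the `StringIO` objects. Keep `mapping` around so you can translate predicted ids back to text labels later.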

2. Install the environment and download the required files

Linux is recommended, but Windows also works if you insist on using it.

If you use an integrated platform such as Google Colab, you can skip the installation steps below.

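- Install VSCode: Visual Studio Code - Code Editing. Redefined

- Install Python: I recommend simply installing Anaconda (Anaconda tutorial (continuously updated…))

(For the following steps, I recommend first creating a new virtual environment in Anaconda.)

- Install PyTorch: PyTorch installation tutorial

- Install transformers: huggingface.transformers installation tutorial

- Install sklearn (if you use Anaconda's base environment, you can most likely skip this one, since it usually comes preinstalled): Installing scikit-learn (scikit-learn 1.2.2 documentation)

- Download the BERT pretrained model: download all the files listed here: bert-base-chinese at main

  Then put them in a folder, and set pretrained_path in the code below to this folder's path.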
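Before running the script, you can avoid a confusing loading error by checking that the folder you will put into `pretrained_path` actually contains the downloaded files. This is a sketch, and the file list is an assumption: it checks for `config.json` and `vocab.txt` plus one weights file (`pytorch_model.bin` or `model.safetensors`); adjust `REQUIRED`/`WEIGHTS` to what you actually downloaded. `missing_model_files` is a hypothetical helper name.

```python
from pathlib import Path

# Files assumed necessary for AutoTokenizer / AutoModelForSequenceClassification
REQUIRED = ["config.json", "vocab.txt"]
WEIGHTS = ["pytorch_model.bin", "model.safetensors"]  # either one is enough

def missing_model_files(folder):
    """Return a list of problems with the pretrained-model folder (empty if it looks OK)."""
    path = Path(folder)
    if not path.is_dir():
        return [f"{folder} is not a directory"]
    problems = [name for name in REQUIRED if not (path / name).is_file()]
    if not any((path / name).is_file() for name in WEIGHTS):
        problems.append("one of " + " / ".join(WEIGHTS))
    return problems

if __name__ == "__main__":
    pretrained_path = "/data/pretrained_model/bert-base-chinese"  # same value as in the script
    problems = missing_model_files(pretrained_path)
    print("looks OK" if not problems else f"missing: {problems}")
```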

3. Run the code

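In VSCode, create a new text file named chn_run.py and paste the following code into it (if you copy it from CSDN, remember to delete the extra text CSDN appends to copied content):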

```python
import csv
from copy import deepcopy

from tqdm import tqdm
from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score

import torch
import torch.nn as nn
from torch.utils.data import DataLoader
from transformers import AutoTokenizer, AutoModelForSequenceClassification

"""Hyperparameters"""
pretrained_path = '/data/pretrained_model/bert-base-chinese'
dropout_rate = 0.1  # not used below; the model keeps the dropout rate from its own config.json
max_epoch_num = 16
cuda_device = 'cuda:2'
output_dim = 2

"""Load the datasets"""
# Training set: each row becomes [label, text] (label in column 0, text in column 1)
with open('chn_train.csv', encoding='utf-8', newline='') as f:
    reader = csv.reader(f)
    header = next(reader)  # header row
    train_data = [[int(row[0]), row[1]] for row in reader]

# Validation set
with open('chn_valid.csv', encoding='utf-8', newline='') as f:
    reader = csv.reader(f)
    header = next(reader)
    valid_data = [[int(row[0]), row[1]] for row in reader]

# Test set
with open('chn_test.csv', encoding='utf-8', newline='') as f:
    reader = csv.reader(f)
    header = next(reader)
    test_data = [[int(row[0]), row[1]] for row in reader]

tokenizer = AutoTokenizer.from_pretrained(pretrained_path)

def collate_fn(batch):
    # Tokenize the batch texts, padding to the longest one and truncating to BERT's 512-token limit
    pt_batch = tokenizer([x[1] for x in batch], padding=True, truncation=True,
                         max_length=512, return_tensors='pt')
    return {'input_ids': pt_batch['input_ids'],
            'token_type_ids': pt_batch['token_type_ids'],
            'attention_mask': pt_batch['attention_mask'],
            'label': torch.tensor([x[0] for x in batch])}

train_dataloader = DataLoader(train_data, batch_size=16, shuffle=True, collate_fn=collate_fn)
valid_dataloader = DataLoader(valid_data, batch_size=128, shuffle=False, collate_fn=collate_fn)
test_dataloader = DataLoader(test_data, batch_size=128, shuffle=False, collate_fn=collate_fn)

"""Model"""
# API docs: https://huggingface.co/docs/transformers/main/en/model_doc/bert#transformers.BertForSequenceClassification
model = AutoModelForSequenceClassification.from_pretrained(pretrained_path, num_labels=output_dim)
model.to(cuda_device)

"""Optimizer, loss function, etc."""
optimizer = torch.optim.Adam(params=model.parameters(), lr=1e-5)
loss_func = nn.CrossEntropyLoss()  # not used below: when labels= is passed, the model computes cross-entropy itself
max_valid_f1 = -1  # below any possible F1, so the first epoch is always kept
best_model = {}

"""Training and validation"""
for e in tqdm(range(max_epoch_num)):
    model.train()
    for batch in train_dataloader:
        optimizer.zero_grad()
        input_ids = batch['input_ids'].to(cuda_device)
        token_type_ids = batch['token_type_ids'].to(cuda_device)
        attention_mask = batch['attention_mask'].to(cuda_device)
        labels = batch['label'].to(cuda_device)
        # Use keyword arguments: forward()'s second positional parameter is attention_mask, not token_type_ids
        outputs = model(input_ids=input_ids, token_type_ids=token_type_ids,
                        attention_mask=attention_mask, labels=labels)
        outputs.loss.backward()
        optimizer.step()

    # Validate after every epoch
    with torch.no_grad():
        model.eval()
        labels = []
        predicts = []
        for batch in valid_dataloader:
            input_ids = batch['input_ids'].to(cuda_device)
            token_type_ids = batch['token_type_ids'].to(cuda_device)
            attention_mask = batch['attention_mask'].to(cuda_device)
            outputs = model(input_ids=input_ids, token_type_ids=token_type_ids,
                            attention_mask=attention_mask)
            labels.extend([i.item() for i in batch['label']])
            predicts.extend([i.item() for i in torch.argmax(outputs.logits, 1)])
        f1 = f1_score(labels, predicts, average='macro')
        if f1 > max_valid_f1:
            # Keep a copy of the weights from the best epoch so far
            best_model = deepcopy(model.state_dict())
            max_valid_f1 = f1

"""Test"""
model.load_state_dict(best_model)
with torch.no_grad():
    model.eval()
    labels = []
    predicts = []
    for batch in test_dataloader:
        input_ids = batch['input_ids'].to(cuda_device)
        token_type_ids = batch['token_type_ids'].to(cuda_device)
        attention_mask = batch['attention_mask'].to(cuda_device)
        outputs = model(input_ids=input_ids, token_type_ids=token_type_ids,
                        attention_mask=attention_mask)
        labels.extend([i.item() for i in batch['label']])
        predicts.extend([i.item() for i in torch.argmax(outputs.logits, 1)])

print(accuracy_score(labels, predicts))                    # accuracy
print(precision_score(labels, predicts, average='macro'))  # macro precision
print(recall_score(labels, predicts, average='macro'))     # macro recall
print(f1_score(labels, predicts, average='macro'))         # macro F1
```
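If you also want the predicted labels themselves, one possible approach is to reuse the `predicts` list that the test loop fills. Because `test_dataloader` uses `shuffle=False`, `predicts[i]` corresponds to `test_data[i]`, so the two can be written side by side. This is a sketch; the helper `write_predictions` and the output file name `chn_test_pred.csv` are hypothetical.

```python
import csv

def write_predictions(path, data, predicts):
    """Write one row per example: true label, predicted label, text.

    data: list of [label, text] pairs, e.g. test_data from the script
    predicts: predicted label ids in the same order (test_dataloader has shuffle=False)
    """
    if len(data) != len(predicts):
        raise ValueError("data and predicts must have the same length")
    with open(path, "w", encoding="utf-8", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["label", "predict", "review"])
        for (label, text), pred in zip(data, predicts):
            writer.writerow([label, pred, text])

# At the end of chn_run.py, after the test loop:
# write_predictions('chn_test_pred.csv', test_data, predicts)
```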

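Things you need to change:

- pretrained_path: the folder where you put the pretrained model

- cuda_device: a GPU you can use (for example `cuda:0` if you have only one GPU); if you are not using a GPU, set it to `cpu`

- output_dim: the total number of classes in your labels

- chn_*.csv: the paths to your CSV files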
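Instead of counting classes by hand for `output_dim`, you could derive it from the training file. This sketch assumes the labels are already the integers 0..K-1 in the first column (the layout used throughout this post) and that every class appears in the training set; `count_labels` is a hypothetical helper. It raises an error for ids outside 0..K-1, because the cross-entropy loss needs every label to be a valid class index.

```python
import csv
import io

def count_labels(f):
    """Return output_dim for a label,review CSV whose labels are the ints 0..K-1."""
    reader = csv.reader(f)
    next(reader)                                  # skip the header row
    labels = {int(row[0]) for row in reader}
    if not labels:
        raise ValueError("no data rows found")
    if labels != set(range(max(labels) + 1)):
        # e.g. labels {1, 2} would need remapping to {0, 1}
        raise ValueError(f"labels should be 0..{max(labels)}, found {sorted(labels)}")
    return len(labels)

demo = io.StringIO("label,review\n0,a\n1,b\n2,c\n1,d\n")
print(count_labels(demo))  # 3

# In chn_run.py, for example:
# with open('chn_train.csv', encoding='utf-8', newline='') as f:
#     output_dim = count_labels(f)
```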

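Then run `python chn_run.py` in a terminal. Since the script opens the CSV files by relative path, run it from the folder that contains them (or use absolute paths). The output looks similar to: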

```text
env_path/lib/python3.8/site-packages/scipy/__init__.py:146: UserWarning: A NumPy version >=1.16.5 and <1.23.0 is required for this version of SciPy (detected version 1.23.5
warnings.warn(f"A NumPy version >={np_minversion} and <{np_maxversion}"
Some weights of the model checkpoint at /data/pretrained_model/bert-base-chinese were not used when initializing BertForSequenceClassification: ['cls.predictions.transform.dense.bias', 'cls.seq_relationship.bias', 'cls.predictions.decoder.weight', 'cls.predictions.transform.dense.weight', 'cls.seq_relationship.weight', 'cls.predictions.transform.LayerNorm.weight', 'cls.predictions.bias', 'cls.predictions.transform.LayerNorm.bias']
- This IS expected if you are initializing BertForSequenceClassification from the checkpoint of a model trained on another task or with another architecture (e.g. initializing a BertForSequenceClassification model from a BertForPreTraining model).
- This IS NOT expected if you are initializing BertForSequenceClassification from the checkpoint of a model that you expect to be exactly identical (initializing a BertForSequenceClassification model from a BertForSequenceClassification model).
Some weights of BertForSequenceClassification were not initialized from the model checkpoint at /data/pretrained_model/bert-base-chinese and are newly initialized: ['classifier.weight', 'classifier.bias']
You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.
100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 16/16 [1:39:37<00:00, 373.62s/it]
0.8088803088803089
0.7998017541751772
0.7586588921282799
0.7722809550288214
```

You can ignore the warnings at the beginning.

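(Note: don't read too much into the specific numbers. I ran it twice in a row and the results differed by 10 percentage points… With another, similar implementation I got above 90%. In short, don't worry too much about these metrics; they are quite unstable. For that other implementation, see: Fine-tuning pretrained models with huggingface.transformers on text classification tasks (single-task and multi-task settings).)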
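The four numbers at the end of the output are, in order, accuracy and then macro-averaged precision, recall, and F1. "Macro" means each metric is computed separately for every class and then averaged with equal weight per class, so a small class counts as much as a large one. The stdlib sketch below recomputes those four values from two label lists to show what `average='macro'` does. It is an illustration, not scikit-learn's implementation; it scores a class with no predicted (or no true) examples as 0, which matches scikit-learn's default result apart from the warning scikit-learn emits. `macro_metrics` is a hypothetical name.

```python
def macro_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision/recall/F1 over labels seen in either list."""
    classes = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precisions, recalls, f1s = [], [], []
    for c in classes:
        tp = sum(t == c and p == c for t, p in pairs)   # predicted c, really c
        fp = sum(t != c and p == c for t, p in pairs)   # predicted c, really not c
        fn = sum(t == c and p != c for t, p in pairs)   # missed a real c
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    n = len(classes)
    # Macro average: plain mean over classes, regardless of class size
    return accuracy, sum(precisions) / n, sum(recalls) / n, sum(f1s) / n

labels = [1, 1, 1, 0, 0, 1]
predicts = [1, 0, 1, 0, 1, 1]
print(macro_metrics(labels, predicts))  # (0.6666666666666666, 0.625, 0.625, 0.625)
```

Here class 0 gets precision and recall 0.5 while class 1 gets 0.75, so each macro average is their mean, 0.625, even though accuracy is about 0.667.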

4. FAQ

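- If your CSV file does not use the default delimiter, quote character, or encoding, pass the corresponding arguments: `delimiter`/`quotechar` to `csv.reader()`, and `encoding` to `open()`.

- If your raw data is not a CSV file, or is not already split into training/validation/test sets, write a small script to convert it into this format.

- If the test set has no labels, delete the test part at the end of the code (everything after the training/validation loop), and adjust how chn_test.csv is loaded, because the loading code expects an integer label in the first column.

- To get the predicted labels on the data: to be added

- If you get the following error:

  - `RuntimeError: CUDA error: CUBLAS_STATUS_INTERNAL_ERROR when calling cublasSgemm( handle, opa, opb, m, n, k, &alpha, a, lda, b, ldb, &beta, c, ldc)`

    As far as I know, this is caused by mismatched versions of Python, PyTorch, and CUDA; I recommend reinstalling them.

- Other errors

  - You can post other error messages in the comments. If I see them and can solve them, I will add the solutions to this post.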

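Please copy the complete error message. Even a traditional Chinese medicine doctor has to look, listen, ask, and take your pulse before making a diagnosis. If you only give me the first chunk of the error message, you are asking me to take your pulse through a silk thread! How am I supposed to figure anything out from that?!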
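Along with the complete traceback, version numbers help with errors like the CUBLAS one above, which comes down to mismatched Python/PyTorch/CUDA versions. The stdlib sketch below prints the Python version and the installed versions of the packages this post uses via `importlib.metadata`, then imports torch (if it can) to show the CUDA version PyTorch was built against and whether a GPU is visible. The package list and the function name `environment_report` are assumptions you can change.

```python
import platform
from importlib import metadata

# Packages used in this post; extend the list as needed
PACKAGES = ["torch", "transformers", "scikit-learn", "tqdm"]

def environment_report():
    """Collect version information worth pasting together with an error message."""
    lines = [f"python: {platform.python_version()}"]
    for name in PACKAGES:
        try:
            lines.append(f"{name}: {metadata.version(name)}")
        except metadata.PackageNotFoundError:
            lines.append(f"{name}: not installed")
    try:
        import torch  # imported lazily so the report still works without PyTorch
    except Exception as exc:  # missing or broken installation
        lines.append(f"torch import failed: {exc!r}")
    else:
        # CUDA version this PyTorch build was compiled against (None for CPU-only builds)
        lines.append(f"torch CUDA build: {torch.version.cuda}")
        lines.append(f"GPU visible: {torch.cuda.is_available()}")
    return "\n".join(lines)

print(environment_report())
```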