A few OpenCL examples collected for testing on an AMD GPU platform (a hello example and hello kernel samples)

hello_opencl_world.cpp

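#include <CL/cl.h>
#include <iostream>
#include <string>
#include <vector>

// Return the CL_PLATFORM_NAME of a platform, or an empty string on error
std::string getPlatformName(const cl_platform_id pid){
    cl_int err_no = CL_SUCCESS;
    size_t param_value_size = 0;
    // First call: query the length of the name (including the terminating '\0')
    err_no = clGetPlatformInfo(pid, CL_PLATFORM_NAME, 0, NULL, &param_value_size);
    if(err_no != CL_SUCCESS)
    {
        std::cout << "Error get CL_PLATFORM_NAME" << std::endl;
        return "";
    }
    // Second call: fetch the name itself
    std::vector<char> platname(param_value_size);
    err_no = clGetPlatformInfo(pid, CL_PLATFORM_NAME, param_value_size, platname.data(), NULL);
    if(err_no != CL_SUCCESS)
    {
        std::cout << "Error get CL_PLATFORM_NAME" << std::endl;
        return "";
    }
    return std::string(platname.data());
}

int main(){
    cl_platform_id platform_id = NULL;
    cl_device_id device_id = NULL;
    cl_uint num_platform = 0;
    cl_uint num_device = 0;

    // Ask for one platform; num_platform receives the total number available
    clGetPlatformIDs(1, &platform_id, &num_platform);
    std::cout << "stab num_platform=" << num_platform << std::endl;

    // Fetch all platforms and look for the first one that exposes a GPU device
    cl_uint num_platforms = num_platform;
    std::vector<cl_platform_id> platforms(num_platforms);
    clGetPlatformIDs(num_platforms, platforms.data(), NULL);

    cl_uint index = 0;
    for(; index < num_platforms; index++){
        std::cout << index << " -> " << "Platform name is :" << getPlatformName(platforms[index]) << std::endl;
        cl_uint gpu_count = 0;
        if(clGetDeviceIDs(platforms[index], CL_DEVICE_TYPE_GPU, 0, NULL, &gpu_count) == CL_SUCCESS && gpu_count > 0) break;
    }

    if(index >= num_platforms) {
        std::cout << "not found GPU" << std::endl;
        return 1;
    } else {
        std::cout << "found GPU index=" << index << std::endl;
    }

    // Get the first GPU device of the platform found above
    clGetDeviceIDs(platforms[index], CL_DEVICE_TYPE_GPU, 1, &device_id, &num_device);
    std::cout << "stab num_device=" << num_device << std::endl;
    return 0;
}

CMakeLists.txt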

cmake_minimum_required(VERSION 3.17)
project(hello_opencl_world CXX)

set(CMAKE_BUILD_TYPE Debug)

add_executable(hello_opencl_world hello_opencl_world.cpp)

target_include_directories(hello_opencl_world PRIVATE /opt/rocm/include)

target_link_libraries(hello_opencl_world /opt/rocm/opencl/lib/libOpenCL.so)

Run:

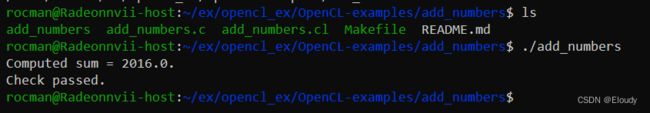

The second example is borrowed from here:

rocman@Radeonnvii-host:~/ex/opencl_ex/OpenCL-examples$ git remote -v

origin https://github.com/rsnemmen/OpenCL-examples.git (fetch)

origin https://github.com/rsnemmen/OpenCL-examples.git (push)

rocman@Radeonnvii-host:~/ex/opencl_ex/OpenCL-examples$

add_numbers.c

#define PROGRAM_FILE "add_numbers.cl"

#define KERNEL_FUNC "add_numbers"

#define ARRAY_SIZE 64

#include <math.h>
#include <stdio.h>
#include <stdlib.h>

#ifdef MAC
#include <OpenCL/cl.h>
#else
#include <CL/cl.h>
#endif

/* Find a GPU or CPU associated with the first available platform

The `platform` structure identifies the first platform identified by the

OpenCL runtime. A platform identifies a vendor's installation, so a system

may have an NVIDIA platform and an AMD platform.

The `device` structure corresponds to the first accessible device

associated with the platform. The code first asks for a `CL_DEVICE_TYPE_GPU`

device and falls back to `CL_DEVICE_TYPE_CPU` if no GPU is found.

*/

cl_device_id create_device() {

cl_platform_id platform;

cl_device_id dev;

int err;

/* Identify a platform */

err = clGetPlatformIDs(1, &platform, NULL);

if(err < 0) {

perror("Couldn't identify a platform");

exit(1);

}

// Access a device

// GPU

err = clGetDeviceIDs(platform, CL_DEVICE_TYPE_GPU, 1, &dev, NULL);

if(err == CL_DEVICE_NOT_FOUND) {

// CPU

err = clGetDeviceIDs(platform, CL_DEVICE_TYPE_CPU, 1, &dev, NULL);

}

if(err < 0) {

perror("Couldn't access any devices");

exit(1);

}

return dev;

}

/* Create program from a file and compile it */

cl_program build_program(cl_context ctx, cl_device_id dev, const char* filename) {

cl_program program;

FILE *program_handle;

char *program_buffer, *program_log;

size_t program_size, log_size;

int err;

/* Read program file and place content into buffer */

program_handle = fopen(filename, "r");

if(program_handle == NULL) {

perror("Couldn't find the program file");

exit(1);

}

fseek(program_handle, 0, SEEK_END);

program_size = ftell(program_handle);

rewind(program_handle);

program_buffer = (char*)malloc(program_size + 1);

program_buffer[program_size] = '\0';

fread(program_buffer, sizeof(char), program_size, program_handle);

fclose(program_handle);

/* Create program from file

Creates a program from the source code in the add_numbers.cl file.

Specifically, the code reads the file's content into a char array

called program_buffer, and then calls clCreateProgramWithSource.

*/

program = clCreateProgramWithSource(ctx, 1,

(const char**)&program_buffer, &program_size, &err);

if(err < 0) {

perror("Couldn't create the program");

exit(1);

}

free(program_buffer);

/* Build program

The fourth parameter accepts options that configure the compilation.

These are similar to the flags used by gcc. For example, you can

define a macro with the option -DMACRO=VALUE and turn off optimization

with -cl-opt-disable.

*/

err = clBuildProgram(program, 0, NULL, NULL, NULL, NULL);

if(err < 0) {

/* Find size of log and print to std output */

clGetProgramBuildInfo(program, dev, CL_PROGRAM_BUILD_LOG,

0, NULL, &log_size);

program_log = (char*) malloc(log_size + 1);

program_log[log_size] = '\0';

clGetProgramBuildInfo(program, dev, CL_PROGRAM_BUILD_LOG,

log_size + 1, program_log, NULL);

printf("%s\n", program_log);

free(program_log);

exit(1);

}

return program;

}

int main() {

/* OpenCL structures */

cl_device_id device;

cl_context context;

cl_program program;

cl_kernel kernel;

cl_command_queue queue;

cl_int i, j, err;

size_t local_size, global_size;

/* Data and buffers */

float data[ARRAY_SIZE];

float sum[2], total, actual_sum;

cl_mem input_buffer, sum_buffer;

cl_int num_groups;

/* Initialize data */

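for(i=0; i<ARRAY_SIZE; i++) {
data[i] = 1.0f*i;
}

/* Create device and context
   (a context containing only the device selected by create_device) */
device = create_device();
context = clCreateContext(NULL, 1, &device, NULL, NULL, &err);
if(err < 0) {
perror("Couldn't create a context");
exit(1);
}

/* Build program */
program = build_program(context, device, PROGRAM_FILE);

/* Create data buffers
   global_size: total number of work-items (8, each adds 8 of the 64 floats)
   local_size: work-items per work-group (4, so there are 2 work-groups) */
global_size = 8;
local_size = 4;
num_groups = global_size/local_size;
input_buffer = clCreateBuffer(context, CL_MEM_READ_ONLY |
CL_MEM_COPY_HOST_PTR, ARRAY_SIZE * sizeof(float), data, &err);
if(err < 0) {
perror("Couldn't create the input buffer");
exit(1);
}
sum_buffer = clCreateBuffer(context, CL_MEM_READ_WRITE,
num_groups * sizeof(float), NULL, &err);
if(err < 0) {
perror("Couldn't create the output buffer");
exit(1);
}

/* Create a command queue (in-order, no profiling) */
queue = clCreateCommandQueue(context, device, 0, &err);
if(err < 0) {
perror("Couldn't create a command queue");
exit(1);
}

/* Create a kernel */
kernel = clCreateKernel(program, KERNEL_FUNC, &err);
if(err < 0) {
perror("Couldn't create a kernel");
exit(1);
}

/* Set kernel arguments: input data, __local scratch space
   (one float per work-item), and one output float per work-group */
err = clSetKernelArg(kernel, 0, sizeof(cl_mem), &input_buffer);
err |= clSetKernelArg(kernel, 1, local_size * sizeof(float), NULL);
err |= clSetKernelArg(kernel, 2, sizeof(cl_mem), &sum_buffer);
if(err < 0) {
perror("Couldn't set a kernel argument");
exit(1);
}

/* Enqueue kernel: global_size work-items in groups of local_size */
err = clEnqueueNDRangeKernel(queue, kernel, 1, NULL, &global_size,
&local_size, 0, NULL, NULL);
if(err < 0) {
perror("Couldn't enqueue the kernel");
exit(1);
}

/* Read the kernel's output (blocking read) */
err = clEnqueueReadBuffer(queue, sum_buffer, CL_TRUE, 0,
sizeof(sum), sum, 0, NULL, NULL);
if(err < 0) {
perror("Couldn't read the buffer");
exit(1);
}

/* Check result: 0 + 1 + ... + 63 = 64*63/2 */
total = 0.0f;
for(j=0; j<num_groups; j++) {
total += sum[j];
}
actual_sum = 1.0f * ARRAY_SIZE/2*(ARRAY_SIZE-1);
printf("Computed sum = %.1f.\n", total);
if(fabs(total - actual_sum) > 0.01*fabs(actual_sum))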

printf("Check failed.\n");

else

printf("Check passed.\n");

/* Deallocate resources */

clReleaseKernel(kernel);

clReleaseMemObject(sum_buffer);

clReleaseMemObject(input_buffer);

clReleaseCommandQueue(queue);

clReleaseProgram(program);

clReleaseContext(context);

return 0;

}

Kernel code:

add_numbers.cl

/*

Code that contains kernels to run on accelerator in parallel. A kernel

represents the basic unit of executable code. Each kernel will be

executed on one work item ("pixel") of your parallel task:

1 work item = 1 "pixel" in your image

A practical application may generate thousands or even millions of

work-items, but for the simple task of adding 64 numbers,

eight work-items will suffice. The program file add_numbers.cl

contains a function called add_numbers that performs this operation.

Like all kernel functions, it returns void and its name is preceded by

the __kernel identifier.

The kernel has 64 values to add together and eight work-items with

which to add them. After each work-item computes its sum of eight

values, these partial results will be added together to form a sum for

the entire group. In the end, the kernel will return two sums — one for

each work-group executing the kernel.

data <===== input_buffer

group_result <===== sum_buffer

*/

__kernel void add_numbers(__global float4* data,

__local float* local_result, __global float* group_result) {

float sum;

float4 input1, input2, sum_vector; // array of 4 floats which support vectorization

uint global_addr, local_addr;

global_addr = get_global_id(0) * 2;

input1 = data[global_addr];

input2 = data[global_addr+1];

sum_vector = input1 + input2; // perform four floating-point additions simultaneously

local_addr = get_local_id(0);

local_result[local_addr] = sum_vector.s0 + sum_vector.s1 +

sum_vector.s2 + sum_vector.s3;

barrier(CLK_LOCAL_MEM_FENCE);

if(get_local_id(0) == 0) {

sum = 0.0f;

for(int i=0; i<get_local_size(0); i++) {
sum += local_result[i];
}
group_result[get_group_id(0)] = sum;
}
}

Makefile

PROJ=add_numbers

CC=gcc

CFLAGS=-std=c99 -Wall -DUNIX -g -DDEBUG

# No OpenCL

CFLAGSS=-std=c99 -Wall -DUNIX -g -DDEBUG

# Check for 32-bit vs 64-bit

PROC_TYPE = $(strip $(shell uname -m | grep 64))

# Check for Mac OS

OS = $(shell uname -s 2>/dev/null | tr [:lower:] [:upper:])

DARWIN = $(strip $(findstring DARWIN, $(OS)))

# MacOS System

ifneq ($(DARWIN),)

CFLAGS += -DMAC

LIBS=-framework OpenCL

ifeq ($(PROC_TYPE),)

CFLAGS+=-arch i386

else

CFLAGS+=-arch x86_64

endif

else

# Linux OS

LIBS=-lOpenCL

ifeq ($(PROC_TYPE),)

CFLAGS+=-m32

else

CFLAGS+=-m64

endif

# Check for Linux-AMD

ifdef AMDAPPSDKROOT

INC_DIRS=. $(AMDAPPSDKROOT)/include

ifeq ($(PROC_TYPE),)

LIB_DIRS=$(AMDAPPSDKROOT)/lib/x86

else

LIB_DIRS=$(AMDAPPSDKROOT)/lib/x86_64

endif

else

# Check for Linux-Nvidia

ifdef CUDA

INC_DIRS=. $(CUDA)/OpenCL/common/inc

endif

INC_DIRS=/opt/rocm-5.4.3/opencl/include

LIB_DIRS=/opt/rocm-5.4.3/opencl/lib

endif

endif

$(PROJ): $(PROJ).c

$(CC) $(CFLAGS) -o $@ $^ $(INC_DIRS:%=-I%) $(LIB_DIRS:%=-L%) $(LIBS)

.PHONY: clean

clean:

rm $(PROJ)

Run:

The third example, also borrowed:

hello_ocl.cpp

#include <CL/cl.h>
#include <cstdio>
#include <fstream>
#include <iostream>
#include <sstream>
#include <string>

const int ARRAY_SIZE = 1000;

// 1. Select an OpenCL platform and create a context

cl_context CreateContext()

{

cl_int errNum;

cl_uint numPlatforms;

cl_platform_id firstPlatformId;

cl_context context = NULL;

// Pick the first available platform

errNum = clGetPlatformIDs(1, &firstPlatformId, &numPlatforms);

if (errNum != CL_SUCCESS || numPlatforms <= 0)

{

std::cerr << "Failed to find any OpenCL platforms." << std::endl;

return NULL;

}

// Create an OpenCL context containing the platform's GPU devices

cl_context_properties contextProperties[] =

{

CL_CONTEXT_PLATFORM,

(cl_context_properties)firstPlatformId,

0

};

context = clCreateContextFromType(contextProperties, CL_DEVICE_TYPE_GPU,

NULL, NULL, &errNum);

return context;

}

// 2. Get a device and create a command queue

cl_command_queue CreateCommandQueue(cl_context context, cl_device_id *device)

{

cl_int errNum;

cl_device_id *devices;

cl_command_queue commandQueue = NULL;

size_t deviceBufferSize = 0;

// Query the size in bytes of the context's device list
errNum = clGetContextInfo(context, CL_CONTEXT_DEVICES, 0, NULL, &deviceBufferSize);
if (errNum != CL_SUCCESS || deviceBufferSize == 0)
{
std::cerr << "No devices available." << std::endl;
return NULL;
}

// Allocate space for the device list

devices = new cl_device_id[deviceBufferSize / sizeof(cl_device_id)];

errNum = clGetContextInfo(context, CL_CONTEXT_DEVICES, deviceBufferSize, devices, NULL);

// Use the first available device

commandQueue = clCreateCommandQueue(context, devices[0], 0, NULL);

*device = devices[0];

delete[] devices;

return commandQueue;

}

// 3. Create and build the program object

cl_program CreateProgram(cl_context context, cl_device_id device, const char* fileName)

{

cl_int errNum;

cl_program program;

std::ifstream kernelFile(fileName, std::ios::in);

if (!kernelFile.is_open())

{

std::cerr << "Failed to open file for reading: " << fileName << std::endl;

return NULL;

}

std::ostringstream oss;

oss << kernelFile.rdbuf();

std::string srcStdStr = oss.str();

const char *srcStr = srcStdStr.c_str();

program = clCreateProgramWithSource(context, 1,

(const char**)&srcStr,

NULL, NULL);

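errNum = clBuildProgram(program, 0, NULL, NULL, NULL, NULL);
if (errNum != CL_SUCCESS)
{
// Print the compiler log so that errors in the .cl file are visible
size_t logSize = 0;
clGetProgramBuildInfo(program, device, CL_PROGRAM_BUILD_LOG, 0, NULL, &logSize);
std::string buildLog(logSize, '\0');
clGetProgramBuildInfo(program, device, CL_PROGRAM_BUILD_LOG, logSize, &buildLog[0], NULL);
std::cerr << "Error in kernel:" << std::endl << buildLog << std::endl;
clReleaseProgram(program);
return NULL;
}

return program;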

}

// Create the memory objects: two read-only inputs (a, b) and one output buffer

bool CreateMemObjects(cl_context context, cl_mem memObjects[3],

float *a, float *b)

{

memObjects[0] = clCreateBuffer(context, CL_MEM_READ_ONLY | CL_MEM_COPY_HOST_PTR,

sizeof(float) * ARRAY_SIZE, a, NULL);

memObjects[1] = clCreateBuffer(context, CL_MEM_READ_ONLY | CL_MEM_COPY_HOST_PTR,

sizeof(float) * ARRAY_SIZE, b, NULL);

memObjects[2] = clCreateBuffer(context, CL_MEM_READ_WRITE,

sizeof(float) * ARRAY_SIZE, NULL, NULL);

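if (memObjects[0] == NULL || memObjects[1] == NULL || memObjects[2] == NULL)
{
std::cerr << "Error creating memory objects." << std::endl;
return false;
}

return true;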

}

// Release OpenCL resources

void Cleanup(cl_context context, cl_command_queue commandQueue,

cl_program program, cl_kernel kernel, cl_mem memObjects[3])

{

for (int i = 0; i < 3; i++)

{

if (memObjects[i] != 0)

clReleaseMemObject(memObjects[i]);

}

if (commandQueue != 0)

clReleaseCommandQueue(commandQueue);

if (kernel != 0)

clReleaseKernel(kernel);

if (program != 0)

clReleaseProgram(program);

if (context != 0)

clReleaseContext(context);

}

int main(int argc, char** argv)

{

cl_context context = 0;

cl_command_queue commandQueue = 0;

cl_program program = 0;

cl_device_id device = 0;

cl_kernel kernel = 0;

cl_mem memObjects[3] = { 0, 0, 0 };

cl_int errNum;

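// 1. Select an OpenCL platform and create a context
context = CreateContext();
if (context == NULL)
{
std::cerr << "Failed to create OpenCL context." << std::endl;
return 1;
}

// 2. Get a device and create a command queue
commandQueue = CreateCommandQueue(context, &device);
if (commandQueue == NULL)
{
Cleanup(context, commandQueue, program, kernel, memObjects);
return 1;
}

// 3. Create and build the program object
program = CreateProgram(context, device, "HelloWorld.cl");
if (program == NULL)
{
Cleanup(context, commandQueue, program, kernel, memObjects);
return 1;
}

// 4. Create the OpenCL kernel and allocate memory
kernel = clCreateKernel(program, "hello_kernel", NULL);
if (kernel == NULL)
{
std::cerr << "Failed to create kernel." << std::endl;
Cleanup(context, commandQueue, program, kernel, memObjects);
return 1;
}

// Create the input data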

float result[ARRAY_SIZE];

float a[ARRAY_SIZE];

float b[ARRAY_SIZE];

for (int i = 0; i < ARRAY_SIZE; i++)

{

a[i] = (float)i;

b[i] = (float)(ARRAY_SIZE - i);

}

// Create the memory objects

if (!CreateMemObjects(context, memObjects, a, b))

{

Cleanup(context, commandQueue, program, kernel, memObjects);

return 1;

}

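// 5. Set the kernel arguments and run the kernel
errNum = clSetKernelArg(kernel, 0, sizeof(cl_mem), &memObjects[0]);
errNum |= clSetKernelArg(kernel, 1, sizeof(cl_mem), &memObjects[1]);
errNum |= clSetKernelArg(kernel, 2, sizeof(cl_mem), &memObjects[2]);
if (errNum != CL_SUCCESS)
{
std::cerr << "Error setting kernel arguments." << std::endl;
Cleanup(context, commandQueue, program, kernel, memObjects);
return 1;
}

size_t globalWorkSize[1] = { ARRAY_SIZE };
size_t localWorkSize[1] = { 1 };

errNum = clEnqueueNDRangeKernel(commandQueue, kernel, 1, NULL,
globalWorkSize, localWorkSize,
0, NULL, NULL);
if (errNum != CL_SUCCESS)
{
std::cerr << "Error queuing kernel for execution." << std::endl;
Cleanup(context, commandQueue, program, kernel, memObjects);
return 1;
}

// 6. Read back the results and release OpenCL resources
errNum = clEnqueueReadBuffer(commandQueue, memObjects[2], CL_TRUE,
0, ARRAY_SIZE * sizeof(float), result,
0, NULL, NULL);
if (errNum != CL_SUCCESS)
{
std::cerr << "Error reading result buffer." << std::endl;
Cleanup(context, commandQueue, program, kernel, memObjects);
return 1;
}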

for (int i = 0; i < ARRAY_SIZE; i++)

{

std::cout << result[i] << " ";

}

std::cout << std::endl;

std::cout << "Executed program successfully." << std::endl;

getchar();

Cleanup(context, commandQueue, program, kernel, memObjects);

return 0;

}

HelloWorld.cl

__kernel void hello_kernel(__global const float *a,

__global const float *b,

__global float *result)

{

int gid = get_global_id(0);

// result[gid] = a[gid] + b[gid];

//for(int i=0; i<10; i++) result[gid] += a[gid] *0.1+ b[gid] *0.1;

result[gid] = a[gid]*0.1 + b[gid]*0.1;

result[gid] = a[gid]*0.22 + b[gid]*0.22;

}

Build:

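$ g++ hello_ocl.cpp -I /opt/rocm-5.4.3/opencl/include -L /opt/rocm-5.4.3/opencl/lib -lOpenCL -g

The kernel is JIT-compiled when the program runs (clBuildProgram reads HelloWorld.cl at run time), so you only need to run g++ once; after that you can keep editing the kernel code and simply rerun the program.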

Run:

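Example 4: testing fan (cooling) performance under load. The file names are the same as in Example 3; only the data size was changed and a for loop was added to the kernel.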

#include <CL/cl.h>
#include <cstdio>
#include <fstream>
#include <iostream>
#include <sstream>
#include <string>

const int ARRAY_SIZE =100000;// 483647;//100000;

// 1. Select an OpenCL platform and create a context

cl_context CreateContext()

{

cl_int errNum;

cl_uint numPlatforms;

cl_platform_id firstPlatformId;

cl_context context = NULL;

// Pick the first available platform

errNum = clGetPlatformIDs(1, &firstPlatformId, &numPlatforms);

if (errNum != CL_SUCCESS || numPlatforms <= 0)

{

std::cerr << "Failed to find any OpenCL platforms." << std::endl;

return NULL;

}

// Create an OpenCL context containing the platform's GPU devices

cl_context_properties contextProperties[] =

{

CL_CONTEXT_PLATFORM,

(cl_context_properties)firstPlatformId,

0

};

context = clCreateContextFromType(contextProperties, CL_DEVICE_TYPE_GPU,

NULL, NULL, &errNum);

return context;

}

// 2. Get a device and create a command queue

cl_command_queue CreateCommandQueue(cl_context context, cl_device_id *device)

{

cl_int errNum;

cl_device_id *devices;

cl_command_queue commandQueue = NULL;

size_t deviceBufferSize = 0;

// Query the size in bytes of the context's device list
errNum = clGetContextInfo(context, CL_CONTEXT_DEVICES, 0, NULL, &deviceBufferSize);
if (errNum != CL_SUCCESS || deviceBufferSize == 0)
{
std::cerr << "No devices available." << std::endl;
return NULL;
}

// Allocate space for the device list

devices = new cl_device_id[deviceBufferSize / sizeof(cl_device_id)];

errNum = clGetContextInfo(context, CL_CONTEXT_DEVICES, deviceBufferSize, devices, NULL);

// Use the first available device

commandQueue = clCreateCommandQueue(context, devices[0], 0, NULL);

*device = devices[0];

delete[] devices;

return commandQueue;

}

// 3. Create and build the program object

cl_program CreateProgram(cl_context context, cl_device_id device, const char* fileName)

{

cl_int errNum;

cl_program program;

std::ifstream kernelFile(fileName, std::ios::in);

if (!kernelFile.is_open())

{

std::cerr << "Failed to open file for reading: " << fileName << std::endl;

return NULL;

}

std::ostringstream oss;

oss << kernelFile.rdbuf();

std::string srcStdStr = oss.str();

const char *srcStr = srcStdStr.c_str();

program = clCreateProgramWithSource(context, 1,

(const char**)&srcStr,

NULL, NULL);

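errNum = clBuildProgram(program, 0, NULL, NULL, NULL, NULL);
if (errNum != CL_SUCCESS)
{
// Print the compiler log so that errors in the .cl file are visible
size_t logSize = 0;
clGetProgramBuildInfo(program, device, CL_PROGRAM_BUILD_LOG, 0, NULL, &logSize);
std::string buildLog(logSize, '\0');
clGetProgramBuildInfo(program, device, CL_PROGRAM_BUILD_LOG, logSize, &buildLog[0], NULL);
std::cerr << "Error in kernel:" << std::endl << buildLog << std::endl;
clReleaseProgram(program);
return NULL;
}

return program;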

}

// Create the memory objects: two read-only inputs (a, b) and one output buffer

bool CreateMemObjects(cl_context context, cl_mem memObjects[3],

float *a, float *b)

{

memObjects[0] = clCreateBuffer(context, CL_MEM_READ_ONLY | CL_MEM_COPY_HOST_PTR,

sizeof(float) * ARRAY_SIZE, a, NULL);

memObjects[1] = clCreateBuffer(context, CL_MEM_READ_ONLY | CL_MEM_COPY_HOST_PTR,

sizeof(float) * ARRAY_SIZE, b, NULL);

memObjects[2] = clCreateBuffer(context, CL_MEM_READ_WRITE,

sizeof(float) * ARRAY_SIZE, NULL, NULL);

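if (memObjects[0] == NULL || memObjects[1] == NULL || memObjects[2] == NULL)
{
std::cerr << "Error creating memory objects." << std::endl;
return false;
}

return true;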

}

// Release OpenCL resources

void Cleanup(cl_context context, cl_command_queue commandQueue,

cl_program program, cl_kernel kernel, cl_mem memObjects[3])

{

for (int i = 0; i < 3; i++)

{

if (memObjects[i] != 0)

clReleaseMemObject(memObjects[i]);

}

if (commandQueue != 0)

clReleaseCommandQueue(commandQueue);

if (kernel != 0)

clReleaseKernel(kernel);

if (program != 0)

clReleaseProgram(program);

if (context != 0)

clReleaseContext(context);

}

int main(int argc, char** argv)

{

cl_context context = 0;

cl_command_queue commandQueue = 0;

cl_program program = 0;

cl_device_id device = 0;

cl_kernel kernel = 0;

cl_mem memObjects[3] = { 0, 0, 0 };

cl_int errNum;

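// 1. Select an OpenCL platform and create a context
context = CreateContext();
if (context == NULL)
{
std::cerr << "Failed to create OpenCL context." << std::endl;
return 1;
}

// 2. Get a device and create a command queue
commandQueue = CreateCommandQueue(context, &device);
if (commandQueue == NULL)
{
Cleanup(context, commandQueue, program, kernel, memObjects);
return 1;
}

// 3. Create and build the program object
program = CreateProgram(context, device, "HelloWorld.cl");
if (program == NULL)
{
Cleanup(context, commandQueue, program, kernel, memObjects);
return 1;
}

// 4. Create the OpenCL kernel and allocate memory
kernel = clCreateKernel(program, "hello_kernel", NULL);
if (kernel == NULL)
{
std::cerr << "Failed to create kernel." << std::endl;
Cleanup(context, commandQueue, program, kernel, memObjects);
return 1;
}

// Create the input data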

float result[ARRAY_SIZE];

float a[ARRAY_SIZE];

float b[ARRAY_SIZE];

for (int i = 0; i < ARRAY_SIZE; i++)

{

a[i] = (float)i;

b[i] = (float)(ARRAY_SIZE - i);

}

// Create the memory objects

if (!CreateMemObjects(context, memObjects, a, b))

{

Cleanup(context, commandQueue, program, kernel, memObjects);

return 1;

}

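// 5. Set the kernel arguments and run the kernel
errNum = clSetKernelArg(kernel, 0, sizeof(cl_mem), &memObjects[0]);
errNum |= clSetKernelArg(kernel, 1, sizeof(cl_mem), &memObjects[1]);
errNum |= clSetKernelArg(kernel, 2, sizeof(cl_mem), &memObjects[2]);
if (errNum != CL_SUCCESS)
{
std::cerr << "Error setting kernel arguments." << std::endl;
Cleanup(context, commandQueue, program, kernel, memObjects);
return 1;
}

size_t globalWorkSize[1] = { ARRAY_SIZE };
size_t localWorkSize[1] = { 1 };

errNum = clEnqueueNDRangeKernel(commandQueue, kernel, 1, NULL,
globalWorkSize, localWorkSize,
0, NULL, NULL);
if (errNum != CL_SUCCESS)
{
std::cerr << "Error queuing kernel for execution." << std::endl;
Cleanup(context, commandQueue, program, kernel, memObjects);
return 1;
}

// 6. Read back the results and release OpenCL resources
errNum = clEnqueueReadBuffer(commandQueue, memObjects[2], CL_TRUE,
0, ARRAY_SIZE * sizeof(float), result,
0, NULL, NULL);
if (errNum != CL_SUCCESS)
{
std::cerr << "Error reading result buffer." << std::endl;
Cleanup(context, commandQueue, program, kernel, memObjects);
return 1;
}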

for (int i = ARRAY_SIZE-7; i < ARRAY_SIZE; i++)

{

std::cout << result[i] << " ";

}

std::cout << std::endl;

std::cout << "Executed program successfully." << std::endl;

getchar();

Cleanup(context, commandQueue, program, kernel, memObjects);

return 0;

}

HelloWorld.cl

__kernel void hello_kernel(__global const float *a,

__global const float *b,

__global float *result)

{

int gid = get_global_id(0);

result[gid] = 0.0f;

// result[gid] = a[gid] + b[gid];

//for(int i=0; i<10; i++) result[gid] += a[gid] *0.1+ b[gid] *0.1;

for(int jj=0; jj<1000000; jj++){

result[gid] += a[gid]*0.11 + b[gid]*0.11;

// result[gid] += (a[gid]*0.22 + b[gid]*0.22);

}

}

clrx: OpenCL GPU assembly on AMD (CSDN download):

https://download.csdn.net/download/weixin_42667269/26666041

The name is borrowed from:

CLRadeonExtender

https://github.com/CLRX/CLRX-mirror