[Big Data] Setting Up a Fully Distributed Hadoop Cluster

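Contents

I. Write the distribution script xsync

1. Create the distribution script xsync in /home/data/bin

2. Write the xsync script

3. Set the script's permissions

4. Distribute the script

II. Configure passwordless SSH login

1. Generate a key pair

2. Copy the public key to the local machine

3. Log in to every node once over SSH, then exit

4. Distribute the /home/data/.ssh directory to every node

III. Cluster configuration (3 env scripts, 4 XML files, 1 slaves file)

1. Configure hadoop-env.sh, yarn-env.sh, and mapred-env.sh

2. Configure core-site.xml (NameNode)

3. Configure hdfs-site.xml (SecondaryNameNode)

4. Configure yarn-site.xml (ResourceManager)

5. Configure mapred-site.xml

6. Configure the slaves file (DataNode)

7. Distribute the 8 files above (all under the hadoop directory)

IV. Start the whole cluster

1. First start: format the NameNode

2. Start HDFS

3. Start YARN

4. Check with jps

5. View the DataNodes in the web UI

6. Ways to start and stop the cluster

7. Cluster time configuration and synchronization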

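Note: all of the following steps are performed while logged in as the regular user data.

I. Write the distribution script xsync

1. Create the distribution script xsync in /home/data/bin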

[data@hadoop100 ~]$ cd ~

[data@hadoop100 ~]$ mkdir bin

[data@hadoop100 ~]$ cd bin/

[data@hadoop100 bin]$ touch xsync

[data@hadoop100 bin]$ vi xsync

2. Write the xsync script

#!/bin/bash

# The script is interpreted by /bin/bash

# 1. Get the number of arguments; exit if none were given

pcount=$#

if ((pcount==0)); then

echo "no args"

exit 1

fi

# 2. Get the file name

p1=$1

fname=$(basename "$p1")

echo "fname=$fname"

# 3. Resolve the parent directory to an absolute path

pdir=$(cd -P "$(dirname "$p1")" && pwd)

echo "pdir=$pdir"

# 4. Get the current user name

user=$(whoami)

# 5. Loop over hadoop101 and hadoop102 and sync to each

for ((host=101; host<103; host++)); do

echo "------------------- hadoop$host --------------"

rsync -av "$pdir/$fname" "$user@hadoop$host:$pdir"

done

3. Set the script's permissions

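Mode 777 gives the owner, the group, and all other users read, write, and execute permission on the xsync script.

[data@hadoop100 bin]$ chmod 777 xsync

4. Distribute the script

Distribute the bin directory (with the xsync script inside it) to the same path on the other two virtual machines, hadoop101 and hadoop102, so that the script is available on every node.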
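Before distributing, it can help to see which directory and file name xsync derives from its argument. The sketch below isolates steps 2 and 3 of the script in a small helper; the function name resolve_target is hypothetical and is not part of the original script.

```shell
#!/bin/bash
# Hypothetical helper (not in the original xsync): prints the physical parent
# directory and the file name that xsync would pass to rsync.
resolve_target() {
    local path=$1
    local fname pdir
    fname=$(basename "$path")
    # cd -P resolves symlinks, so pwd prints the physical directory
    pdir=$(cd -P "$(dirname "$path")" && pwd) || return 1
    printf '%s %s\n' "$pdir" "$fname"
}
```

Assuming /home/data is a real directory rather than a symlink, `resolve_target /home/data/bin` would print `/home/data bin`, which means rsync copies bin into /home/data on each target host.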

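[data@hadoop100 bin]$ xsync /home/data/bin

II. Configure passwordless SSH login

1. Generate a key pair

Generate an RSA public/private key pair in the hidden .ssh directory under /home/data.

The ssh-keygen command generates, manages, and converts authentication keys; -t selects the key algorithm, here rsa.

[data@hadoop100 ~]$ cd .ssh

[data@hadoop100 .ssh]$ ssh-keygen -t rsa

Press Enter at every prompt. ssh-keygen creates id_rsa (the private key) and id_rsa.pub (the public key). Together with authorized_keys (the public keys that are allowed to log in without a password) and known_hosts (the public keys of servers that have been accessed over ssh), the .ssh directory ends up holding four files.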
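OpenSSH is strict about permissions: the client refuses a private key that other users can read, and with the default StrictModes setting sshd ignores authorized_keys when the directory is writable by others. A minimal sketch of a check, assuming GNU stat (as shipped with CentOS) and the commonly recommended modes 700 for .ssh and 600 for id_rsa; the helper name check_ssh_perms is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: succeeds only if the .ssh directory is mode 700 and
# the private key is mode 600. Relies on GNU stat's -c option.
check_ssh_perms() {
    local dir=${1:-$HOME/.ssh}
    local key=$dir/id_rsa
    [ -d "$dir" ] || { echo "missing directory: $dir"; return 1; }
    [ -f "$key" ] || { echo "missing private key: $key"; return 1; }
    [ "$(stat -c %a "$dir")" = "700" ] || { echo "$dir should be 700"; return 1; }
    [ "$(stat -c %a "$key")" = "600" ] || { echo "$key should be 600"; return 1; }
    echo "ok"
}
```

Running `check_ssh_perms /home/data/.ssh` on each node after the keys are distributed is one way to rule out permission problems if a password prompt still appears.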

2. Copy the public key to the local machine

[data@hadoop101 .ssh]$ ssh-copy-id localhost

The authenticity of host 'localhost (::1)' can't be established.

RSA key fingerprint is 03:82:2e:9b:20:17:5e:2a:f4:37:d2:2a:16:7f:73:ff.

Are you sure you want to continue connecting (yes/no)?

3. Log in to every node once over SSH, then exit

[data@hadoop100 ~]$ ssh hadoop100

Last login: Mon Nov 19 02:26:41 2018 from 192.168.5.201

[data@hadoop100 ~]$ exit

logout

Connection to hadoop100 closed.

[data@hadoop100 ~]$ ssh hadoop101

Last login: Mon Nov 19 10:29:35 2018 from hadoop100

[data@hadoop101 ~]$ exit

logout

Connection to hadoop101 closed.

[data@hadoop100 ~]$ ssh hadoop102

Last login: Mon Nov 19 10:29:29 2018 from 192.168.5.201

[data@hadoop102 ~]$ exit

logout

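Connection to hadoop102 closed.

4. Distribute the /home/data/.ssh directory to every node

[data@hadoop100 ~]$ xsync /home/data/.ssh

Enter the password of each server when prompted; passwordless SSH login is then fully configured.

III. Cluster configuration (3 env scripts, 4 XML files, 1 slaves file)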

The HDFS roles (NameNode, DataNode, SecondaryNameNode) and the YARN roles (ResourceManager, NodeManager) are assigned to the three servers as follows:

| | hadoop100 | hadoop101 | hadoop102 |
| --- | --- | --- | --- |
| HDFS | NameNode, DataNode | DataNode | SecondaryNameNode, DataNode |
| YARN | NodeManager | ResourceManager, NodeManager | NodeManager |

The following 8 configuration files are all located in /opt/module/hadoop-2.7.2/etc/hadoop.

1. Configure hadoop-env.sh, yarn-env.sh, and mapred-env.sh

First, find the JDK installation path.

[data@hadoop100 jdk1.8.0_144]$ pwd

/opt/module/jdk1.8.0_144

Then set the JAVA_HOME variable to the JDK installation path in each of the three env files.

[data@hadoop100 hadoop]$ vim hadoop-env.sh

[data@hadoop100 hadoop]$ vim yarn-env.sh

[data@hadoop100 hadoop]$ vim mapred-env.sh

export JAVA_HOME=/opt/module/jdk1.8.0_144

The settings for the XML files that follow were copied directly from the official Hadoop site.

2. Configure core-site.xml (NameNode)

[data@hadoop100 hadoop]$ vim core-site.xml

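    <!-- Address of the HDFS NameNode -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop100:9000</value>
    </property>

    <!-- Directory for files Hadoop generates at runtime -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/opt/module/hadoop-2.7.2/data/tmp</value>
    </property>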
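After editing core-site.xml it is worth confirming that the value Hadoop will read is the one intended. On a node with Hadoop on the PATH, `hdfs getconf -confKey fs.defaultFS` prints the effective value. Where that is not available, the sketch below reads a property straight from the file. It is a naive line-based parser that assumes each `<name>` and `<value>` element sits on a single line; the function name get_prop is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: print the <value> that follows a given <name> in a
# Hadoop *-site.xml file. Not a real XML parser; assumes one tag per line.
get_prop() {
    local file=$1 name=$2
    awk -v want="$name" '
        /<name>/ {
            n = $0
            sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n)
            found = (n == want)
        }
        found && /<value>/ {
            v = $0
            sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
            print v
            exit
        }
    ' "$file"
}
```

With the configuration above, `get_prop /opt/module/hadoop-2.7.2/etc/hadoop/core-site.xml fs.defaultFS` should print `hdfs://hadoop100:9000`; an empty result means the property is missing or misspelled.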

3. Configure hdfs-site.xml (SecondaryNameNode)

[data@hadoop100 hadoop]$ vim hdfs-site.xml

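    <!-- Number of replicas kept for each block -->
    <property>
        <name>dfs.replication</name>
        <value>3</value>
    </property>

    <!-- HTTP address of the SecondaryNameNode -->
    <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>hadoop102:50090</value>
    </property>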

4. Configure yarn-site.xml (ResourceManager)

[data@hadoop100 hadoop]$ vim yarn-site.xml

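    <!-- Shuffle service used by MapReduce -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>

    <!-- Host that runs the ResourceManager -->
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>hadoop101</value>
    </property>

    <!-- Enable log aggregation -->
    <property>
        <name>yarn.log-aggregation-enable</name>
        <value>true</value>
    </property>

    <!-- Keep aggregated logs for 7 days (604800 seconds) -->
    <property>
        <name>yarn.log-aggregation.retain-seconds</name>
        <value>604800</value>
    </property>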
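The value 604800 for yarn.log-aggregation.retain-seconds is seven days expressed in seconds. A small sketch of the arithmetic, in case a different retention period is wanted; the function name days_to_seconds is only illustrative.

```shell
#!/bin/bash
# Convert a retention period in whole days to seconds
days_to_seconds() {
    echo $(( $1 * 24 * 60 * 60 ))
}

days_to_seconds 7   # prints 604800
```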

5. Configure mapred-site.xml

[data@hadoop100 hadoop]$ vim mapred-site.xml

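    <!-- Run MapReduce on YARN -->
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>

    <!-- JobHistory server address -->
    <property>
        <name>mapreduce.jobhistory.address</name>
        <value>hadoop102:10020</value>
    </property>

    <!-- JobHistory server web UI address -->
    <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>hadoop102:19888</value>
    </property>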

6. Configure the slaves file (DataNode)

[data@hadoop100 hadoop]$ vi slaves

hadoop100

hadoop101

hadoop102

Note: remember to delete localhost from this file; otherwise starting YARN and HDFS later reports an exception.
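Since a leftover localhost entry only shows up as a failure at start time, a quick check before distributing the file can save a round trip. A minimal sketch; the function name check_slaves is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: fail if the slaves file still lists localhost,
# otherwise report how many hosts it contains.
check_slaves() {
    local file=$1
    if grep -qx 'localhost' "$file"; then
        echo "localhost is still listed in $file"
        return 1
    fi
    echo "ok: $(grep -c . "$file") host(s) listed"
}
```

For the file shown above, `check_slaves slaves` run from the hadoop configuration directory should report three hosts.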

7. Distribute the 8 files above (all under the hadoop directory)

[data@hadoop100 hadoop]$ xsync /opt/module/hadoop-2.7.2/etc/hadoop

IV. Start the whole cluster

| | hadoop100 | hadoop101 | hadoop102 |
| --- | --- | --- | --- |
| HDFS | NameNode, DataNode | DataNode | SecondaryNameNode, DataNode |
| YARN | NodeManager | ResourceManager, NodeManager | NodeManager |

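1. First start: format the NameNode

The NameNode is configured on hadoop100, so run the format there:

[data@hadoop100 hadoop-2.7.2]$ hdfs namenode -format

2. Start HDFS

HDFS can be started from any virtual machine in the cluster; the session below was run on hadoop101.

Because the nodes can already log in to one another over SSH without a password, a single command starts the daemons on all of them.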

[data@hadoop101 hadoop-2.7.2]$ start-dfs.sh

Starting namenodes on [hadoop100]

hadoop100: starting namenode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-data-namenode-hadoop100.out

hadoop100: starting datanode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-data-datanode-hadoop100.out

hadoop101: starting datanode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-data-datanode-hadoop101.out

hadoop102: starting datanode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-data-datanode-hadoop102.out

Starting secondary namenodes [hadoop102]

hadoop102: starting secondarynamenode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-data-secondarynamenode-hadoop102.out

[data@hadoop101 hadoop-2.7.2]$

3. Start YARN

YARN must be started on the server that is configured as the ResourceManager (hadoop101).

[data@hadoop101 hadoop-2.7.2]$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-data-resourcemanager-hadoop101.out

hadoop101: starting nodemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-data-nodemanager-hadoop101.out

hadoop102: starting nodemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-data-nodemanager-hadoop102.out

hadoop100: starting nodemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-data-nodemanager-hadoop100.out

[data@hadoop101 hadoop-2.7.2]$

4. Check with jps

On each server, run jps to check that the running HDFS and YARN daemons match the planned layout.
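The comparison against the planned layout can also be scripted. The sketch below reads jps output on standard input and reports any expected daemon that is absent; it matches the name as a whole field, so SecondaryNameNode is not mistaken for NameNode. The function name check_daemons is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: read `jps` output on stdin and check that every
# daemon name given as an argument appears in it.
check_daemons() {
    local output missing=0 daemon
    output=$(cat)
    for daemon in "$@"; do
        # jps prints "<pid> <name>"; compare the name as a whole second field
        if ! printf '%s\n' "$output" |
            awk -v d="$daemon" '$2 == d { ok = 1 } END { exit !ok }'; then
            echo "missing: $daemon"
            missing=1
        fi
    done
    return $missing
}
```

On hadoop100, for example, `jps | check_daemons NameNode DataNode NodeManager` prints nothing and returns 0 when all three daemons are up.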

[data@hadoop100 hadoop-2.7.2]$ jps

5321 NameNode

5738 Jps

5406 DataNode

5566 NodeManager

[data@hadoop101 hadoop-2.7.2]$ jps

4131 DataNode

4883 Jps

4551 NodeManager

4430 ResourceManager

[data@hadoop102 hadoop-2.7.2]$ jps

4064 SecondaryNameNode

3956 DataNode

4360 Jps

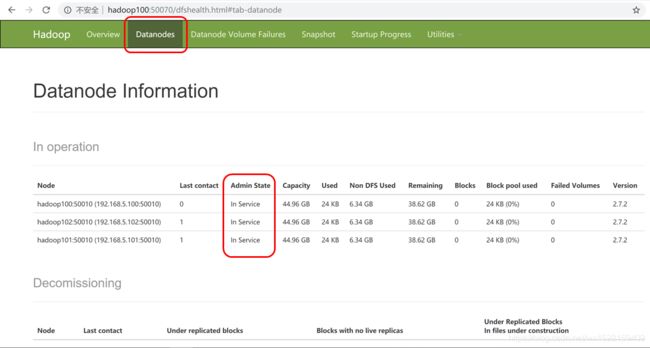

4190 NodeManager5、在Web端查看DataNodes等

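Open http://hadoop100:50070 in a browser. It lists 3 DataNodes, matching the configuration, so the cluster started successfully.

Note: SecondaryNameNode information is available at http://hadoop102:50090

ResourceManager information is available at http://hadoop101:8088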

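6. Ways to start and stop the cluster

(1) Whole-cluster mode:

Start HDFS: start-dfs.sh   Stop HDFS: stop-dfs.sh

Start YARN: start-yarn.sh   Stop YARN: stop-yarn.sh

(2) Single-daemon mode (rarely needed):

Start an HDFS daemon: hadoop-daemon.sh start namenode / datanode / secondarynamenode

Stop an HDFS daemon: hadoop-daemon.sh stop namenode / datanode / secondarynamenode

Start a YARN daemon: yarn-daemon.sh start resourcemanager / nodemanager

Stop a YARN daemon: yarn-daemon.sh stop resourcemanager / nodemanager

Note: start-all.sh and stop-all.sh are now essentially deprecated. The official site does not explain why; a likely reason is that since Hadoop 2.x YARN has been separated from MapReduce, and starting YARN and HDFS separately keeps the two decoupled.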
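Because HDFS and YARN are started separately, and YARN must be started on the ResourceManager host, a small wrapper can keep the order and hosts straight. The sketch below only prints the commands rather than running them. The function name cluster_plan and the two host variables are hypothetical, with the hosts taken from the layout table in this article.

```shell
#!/bin/bash
# Hypothetical wrapper: print the commands that start or stop the whole
# cluster. Host roles follow the layout used in this article.
NAMENODE_HOST=hadoop100
RESOURCEMANAGER_HOST=hadoop101

cluster_plan() {
    case $1 in
        start)
            echo "ssh $NAMENODE_HOST start-dfs.sh"
            echo "ssh $RESOURCEMANAGER_HOST start-yarn.sh"
            ;;
        stop)
            # Stop in reverse order: YARN first, then HDFS
            echo "ssh $RESOURCEMANAGER_HOST stop-yarn.sh"
            echo "ssh $NAMENODE_HOST stop-dfs.sh"
            ;;
        *)
            echo "usage: cluster_plan start|stop" >&2
            return 1
            ;;
    esac
}
```

Piping the output to a shell (`cluster_plan start | sh`) would execute the plan. That assumes the Hadoop sbin scripts are on the PATH of non-interactive SSH sessions; if they are not, the full path under /opt/module/hadoop-2.7.2/sbin would be needed.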

7. Cluster time configuration and synchronization

(1) Check whether ntp is installed

[data@hadoop100 ~]$ sudo rpm -qa|grep ntp

fontpackages-filesystem-1.41-1.1.el6.noarch

ntp-4.2.6p5-10.el6.centos.x86_64

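ntpdate-4.2.6p5-10.el6.centos.x86_64

(2) Check whether the ntpd service is running

[data@hadoop100 ~]$ sudo service ntpd status

If it is running, stop it and disable it at boot

[data@hadoop100 ~]$ sudo service ntpd stop

[data@hadoop100 ~]$ sudo chkconfig ntpd off

(3) Edit the ntp configuration file

[data@hadoop100 ~]$ sudo vi /etc/ntp.conf

Comment out the server 0 through server 3 lines by putting # in front of each

(4) Edit the /etc/sysconfig/ntpd file

[data@hadoop100 ~]$ sudo vim /etc/sysconfig/ntpd

Add the following line so that the hardware clock is synchronized along with the system time (the author uses it to keep the Linux virtual machine in step with the Windows 10 host)

SYNC_HWCLOCK=yes

(5) Restart the ntpd service and enable it at boot

[data@hadoop100 ~]$ sudo service ntpd status

[data@hadoop100 ~]$ sudo service ntpd start

[data@hadoop100 ~]$ sudo chkconfig ntpd on

(6) Make the other servers in the cluster synchronize with the reference server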

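[data@hadoop101 ~]$ sudo crontab -e

On each of the other servers (hadoop101 and hadoop102), add the following entry so that the node synchronizes every 10 minutes with the reference time server hadoop100, where ntpd was configured in the steps above

*/10 * * * * /usr/sbin/ntpdate hadoop100

That completes the setup of a Hadoop cluster running in fully distributed mode.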