12. Reproducing the Residual Block in PyTorch

I. Residual Network

Source paper: Deep Residual Learning for Image Recognition

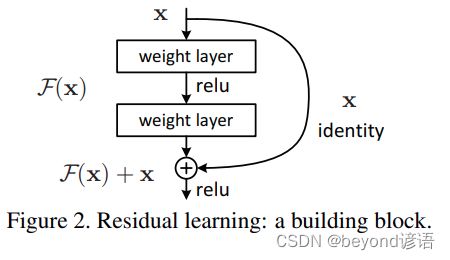

Its core module is the Residual Block:

II. Reproducing the Residual Block

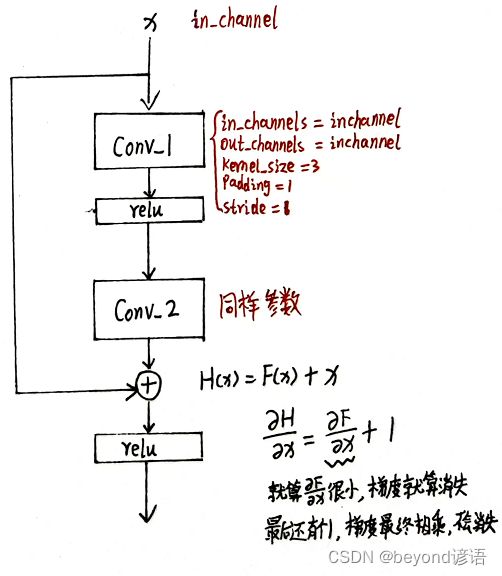

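Here the block is designed and reproduced with two convolutional layers as an example.

ResNet largely mitigates the vanishing gradient problem, because the shortcut branch gives gradients a direct path back to earlier layers.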
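One way to see why the shortcut helps, as a rough sketch: write the block's output before the final ReLU as y = F(x) + x, where F stands for the conv, ReLU, conv branch. The symbols F, y and the loss symbol are notation assumed here for illustration; they do not come from the post. Differentiating the loss with respect to the input gives:

```latex
y = F(x) + x
\qquad\Longrightarrow\qquad
\frac{\partial \mathcal{L}}{\partial x}
  = \frac{\partial \mathcal{L}}{\partial y}
    \left( \frac{\partial F}{\partial x} + I \right)
```

The identity term I means part of the gradient reaches x unchanged, even when the gradient through F is very small.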

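Key points of the Residual Block:

The sample x is passed into the block and split into two branches: one branch goes through a convolutional layer, a ReLU, and a second convolutional layer; in the other branch x is left unchanged.

The two branches are then merged by element-wise addition, and the sum goes through one final ReLU activation.

1. Dataset

As usual, the MNIST handwritten digit dataset is used; for details see the earlier post in this series, "9. Multi-class Classification".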

import torch
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F  # for the relu activation function
import torch.optim as optim

batch_size = 64

transform = transforms.Compose([
    transforms.ToTensor(),  # convert the image to a tensor
    transforms.Normalize((0.1307,), (0.3081,))  # standardize with the mean and std, both computed over the whole training set
])

train_dataset = datasets.MNIST(root='./', train=True, download=True, transform=transform)
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)

test_dataset = datasets.MNIST(root="./", train=False, download=True, transform=transform)
test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)
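The constants 0.1307 and 0.3081 passed to Normalize are the mean and standard deviation of the MNIST training pixels after ToTensor has scaled them to [0, 1]. As a rough sketch of how such statistics could be computed, the helper below takes a batch of single-channel images and returns both values. The name channel_mean_std is hypothetical, and a random tensor stands in for the real images so the snippet runs without downloading anything.

```python
import torch

def channel_mean_std(images: torch.Tensor):
    """Return (mean, std) over all pixels of a [N, 1, H, W] batch scaled to [0, 1]."""
    pixels = images.float().reshape(-1)
    # Population standard deviation: divide by the number of pixels
    return pixels.mean().item(), pixels.std(unbiased=False).item()

# Stand-in data; with the real dataset one would stack all training images instead
fake_images = torch.rand(8, 1, 28, 28)
mean, std = channel_mean_std(fake_images)

# This is the per-channel operation that transforms.Normalize applies
normalized = (fake_images - mean) / std
```

After this step the data has roughly zero mean and unit standard deviation, which is the effect the Normalize transform aims for.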

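2. Testing on a sample

The first sample of the training set (train_dataset[0]) is used here as a test input.

Because convolutional layers in torch expect input of shape [B,C,H,W], the sample is reshaped with x.view(-1,1,28,28).

The steps are: convolution, ReLU, convolution, addition, ReLU.

To keep the output feature map the same size as the input, padding is added around the border (padding=1 for a 3x3 kernel).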
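Why padding=1 preserves the 28x28 size can be checked with the standard output-size formula for a convolution, floor((n + 2p - k) / s) + 1. The following is a small sketch; conv_out_size is a hypothetical helper written for this illustration, not a PyTorch function, and it assumes dilation 1.

```python
def conv_out_size(size: int, kernel_size: int, padding: int = 0, stride: int = 1) -> int:
    """Spatial output size of a convolution (dilation 1) along one dimension."""
    return (size + 2 * padding - kernel_size) // stride + 1

same = conv_out_size(28, kernel_size=3, padding=1)    # padding=1 preserves the size
shrunk = conv_out_size(28, kernel_size=3, padding=0)  # without padding the map shrinks
```

Matching sizes matter because the two branches are added element-wise; if the convolution branch shrank the feature map, the addition with x would fail.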

x, y = train_dataset[0]
x.shape
"""
torch.Size([1, 28, 28])
"""
y
"""
5
"""
x = x.view(-1, 1, 28, 28)
x.shape  # [B,C,H,W]
"""
torch.Size([1, 1, 28, 28])
"""
channel = x.shape[1]  # number of channels
# Define the Residual Block: convolution, relu, convolution, add, then relu
conv1 = torch.nn.Conv2d(channel, channel, kernel_size=3, padding=1)
conv2 = torch.nn.Conv2d(channel, channel, kernel_size=3, padding=1)
conv_1 = conv1(x)  # first convolution
conv_1.shape
"""
torch.Size([1, 1, 28, 28])
"""
relu_1 = F.relu(conv_1)  # apply relu
relu_1.shape
"""
torch.Size([1, 1, 28, 28])
"""
conv_2 = conv2(relu_1)  # second convolution
conv_2.shape
"""
torch.Size([1, 1, 28, 28])
"""
H = conv_2 + x  # add the unchanged input from the shortcut branch
H.shape
"""
torch.Size([1, 1, 28, 28])
"""
final = F.relu(H)  # final relu after the addition
final.shape
"""
torch.Size([1, 1, 28, 28])
"""

3. Complete Residual Block module code

class y_res(torch.nn.Module):
    def __init__(self, channel):
        super(y_res, self).__init__()
        self.channels = channel
        self.conv1 = torch.nn.Conv2d(channel, channel, kernel_size=3, padding=1)
        self.conv2 = torch.nn.Conv2d(channel, channel, kernel_size=3, padding=1)

    def forward(self, x):
        conv_1 = self.conv1(x)
        relu_1 = F.relu(conv_1)
        conv_2 = self.conv2(relu_1)
        H = conv_2 + x
        final = F.relu(H)
        return final

x, y = train_dataset[0]
x = x.view(-1, 1, 28, 28)
channel = x.shape[1]
yy_res = y_res(channel)
final = yy_res(x)
final.shape
"""
torch.Size([1, 1, 28, 28])
"""

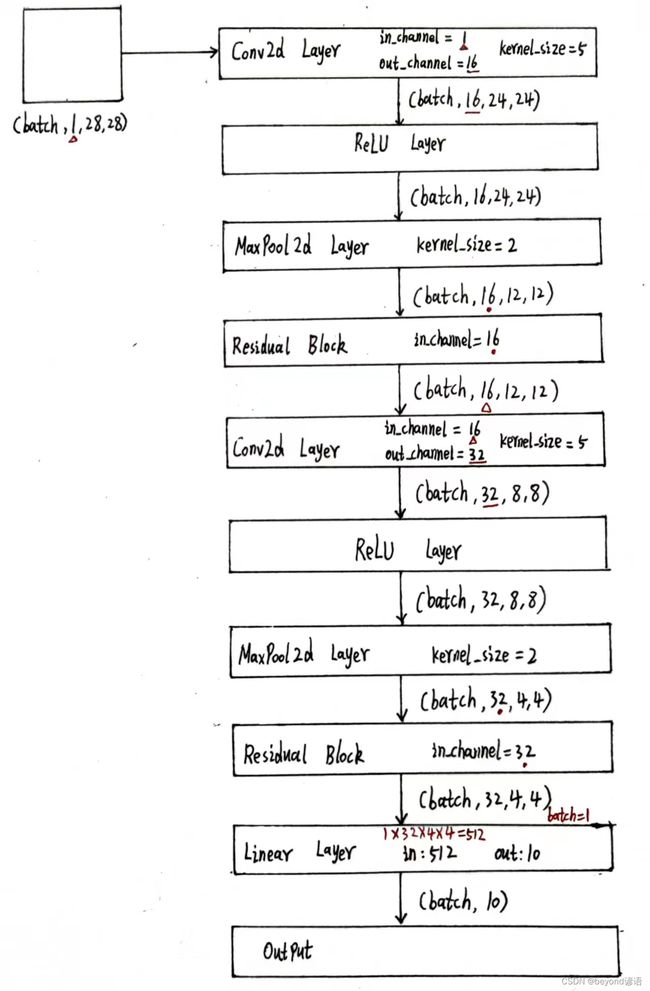
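A property of the Residual Block design worth noting: if the convolution branch outputs zero, the block reduces to relu(x), so the block can always fall back to passing its input through. The sketch below illustrates this by zeroing the second convolution. ResidualSketch is a hypothetical stand-in that mirrors y_res so the snippet is self-contained, and the zero initialization is only for demonstration, not something the post does.

```python
import torch
import torch.nn.functional as F

class ResidualSketch(torch.nn.Module):
    """Same structure as y_res, redefined here so the snippet runs on its own."""
    def __init__(self, channel):
        super().__init__()
        self.conv1 = torch.nn.Conv2d(channel, channel, kernel_size=3, padding=1)
        self.conv2 = torch.nn.Conv2d(channel, channel, kernel_size=3, padding=1)

    def forward(self, x):
        out = self.conv2(F.relu(self.conv1(x)))
        return F.relu(out + x)

block = ResidualSketch(4)
# Zero the last convolution so the convolution branch contributes nothing
torch.nn.init.zeros_(block.conv2.weight)
torch.nn.init.zeros_(block.conv2.bias)

x = torch.randn(2, 4, 28, 28)
out = block(x)
is_identity = torch.allclose(out, F.relu(x))  # the block now only applies relu to x
```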

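III. Putting It into Practice

① Prepare the dataset

The MNIST handwritten digit dataset is used; for details see the earlier post in this series, "10. Convolutional Neural Networks (CNN) in Practice".

② Load the dataset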

import torch
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F  # for the relu activation function
import torch.optim as optim

batch_size = 64

transform = transforms.Compose([
    transforms.ToTensor(),  # convert the image to a tensor
    transforms.Normalize((0.1307,), (0.3081,))  # standardize with the mean and std, both computed over the whole training set
])

train_dataset = datasets.MNIST(root='./', train=True, download=True, transform=transform)
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)

test_dataset = datasets.MNIST(root="./", train=False, download=True, transform=transform)
test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)

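③ Build the model

The residual network follows the architecture shown above and reuses the y_res Residual Block from the previous section.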

class yy_net(torch.nn.Module):
    def __init__(self):
        super(yy_net, self).__init__()
        self.conv1 = torch.nn.Conv2d(1, 16, kernel_size=5)
        self.conv2 = torch.nn.Conv2d(16, 32, kernel_size=5)
        self.maxpool = torch.nn.MaxPool2d(2)
        self.resblock1 = y_res(16)
        self.resblock2 = y_res(32)
        self.linear = torch.nn.Linear(512, 10)  # 32 channels * 4 * 4 = 512 features

    def forward(self, x):
        batch_size = x.shape[0]  # x has shape [B,C,H,W]
        x = self.maxpool(F.relu(self.conv1(x)))
        x = self.resblock1(x)
        x = self.maxpool(F.relu(self.conv2(x)))
        x = self.resblock2(x)
        x = x.view(batch_size, -1)  # flatten to [B, 512]
        x = self.linear(x)
        return x

class y_res(torch.nn.Module):
    def __init__(self, channel):
        super(y_res, self).__init__()
        self.channels = channel
        self.conv1 = torch.nn.Conv2d(channel, channel, kernel_size=3, padding=1)
        self.conv2 = torch.nn.Conv2d(channel, channel, kernel_size=3, padding=1)

    def forward(self, x):
        conv_1 = self.conv1(x)
        relu_1 = F.relu(conv_1)
        conv_2 = self.conv2(relu_1)
        H = conv_2 + x
        final = F.relu(H)
        return final
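The input size of 512 for the final Linear layer follows from tracing the spatial size through yy_net. Here is a sketch of that arithmetic using the standard convolution output-size formula; conv_out is a hypothetical helper written for this illustration.

```python
def conv_out(size, kernel_size, padding=0, stride=1):
    """Output size of a convolution (dilation 1) along one spatial dimension."""
    return (size + 2 * padding - kernel_size) // stride + 1

size = 28                                  # MNIST images are 28x28
size = conv_out(size, kernel_size=5)       # conv1: 28 -> 24
size = size // 2                           # maxpool(2): 24 -> 12
size = conv_out(size, 3, padding=1)        # resblock1, first conv: stays 12
size = conv_out(size, 3, padding=1)        # resblock1, second conv: stays 12
size = conv_out(size, kernel_size=5)       # conv2: 12 -> 8
size = size // 2                           # maxpool(2): 8 -> 4
size = conv_out(size, 3, padding=1)        # resblock2, first conv: stays 4
size = conv_out(size, 3, padding=1)        # resblock2, second conv: stays 4

flat_features = 32 * size * size           # 32 channels * 4 * 4
```

If the kernel sizes or the number of pooling layers change, the in_features of the Linear layer has to be recomputed the same way.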

④ Loss function and optimizer

model = yy_net()
lossf = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.0001, momentum=0.5)
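Note that yy_net ends with a plain Linear layer and no softmax. That fits how CrossEntropyLoss works: it expects raw logits and applies log-softmax internally. A minimal check on made-up values follows; the tensors below are illustrative only and do not come from the model.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)           # raw scores for 4 samples and 10 classes
targets = torch.tensor([5, 0, 4, 1])  # class indices, not one-hot vectors

loss_ce = torch.nn.CrossEntropyLoss()(logits, targets)
# The same loss assembled by hand: log-softmax followed by negative log-likelihood
loss_manual = F.nll_loss(F.log_softmax(logits, dim=1), targets)
```

Both values agree, which is why adding a softmax at the end of the network would apply the normalization twice.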

⑤ Build the training function

def ytrain(epoch):
    loss_total = 0.0
    for batch_index, data in enumerate(train_loader, 0):
        x, y = data
        # x, y = x.to(device), y.to(device)  # GPU acceleration
        optimizer.zero_grad()
        y_hat = model(x)
        loss = lossf(y_hat, y)
        loss.backward()
        optimizer.step()
        loss_total += loss.item()
        if batch_index % 300 == 299:  # print once every 300 batches
            print("epoch:%d, batch_index:%5d \t loss:%.3f" % (epoch + 1, batch_index + 1, loss_total / 300))
            loss_total = 0.0  # reset the running loss after each report
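The commented-out line in the training loop refers to a device variable that is not defined in the code shown here. A common way to set it up is sketched below, with a small stand-in layer rather than the real model; the layer and tensor shapes are placeholders.

```python
import torch

# Pick the GPU when one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

layer = torch.nn.Linear(4, 2).to(device)  # stand-in for model.to(device)
x = torch.randn(3, 4).to(device)          # stand-in for a batch from train_loader
y_hat = layer(x)                          # model and data must be on the same device
```

With the real code, one would move the model once after creating it and move each batch inside the loop, as the commented-out line suggests.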

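⑥ Build the test function

def ytest():
    correct = 0  # number of correct predictions
    total = 0  # total number of samples
    with torch.no_grad():  # no gradients are needed for testing, which reduces computation
        for data in test_loader:  # read the test samples
            images, labels = data
            # images, labels = images.to(device), labels.to(device)  # GPU acceleration
            pred = model(images)  # one row per sample, ten scores per row; next find the index of the largest score in each row
            pred_maxvalue, pred_maxindex = torch.max(pred.data, dim=1)  # along dim 1 (row by row), return each row's maximum and its index
            total += labels.size(0)  # labels has shape (N,) and holds the correct class of each sample
            correct += (pred_maxindex == labels).sum().item()  # compare predicted indices with labels: a match counts as 1, a mismatch as 0
    print("Accuracy on testset :%d %%" % (100 * correct / total))  # correct predictions / total samples * 100 = accuracy in percent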
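To make the torch.max step concrete, here is a tiny hand-made example with three samples and three classes. The scores and labels are invented for illustration.

```python
import torch

pred = torch.tensor([[0.1, 2.0, 0.3],
                     [1.5, 0.2, 0.1],
                     [0.0, 0.4, 0.9]])  # 3 samples, 3 class scores each
labels = torch.tensor([1, 0, 1])

# With dim=1, torch.max returns the per-row maximum and its column index
max_value, max_index = torch.max(pred, dim=1)

correct = (max_index == labels).sum().item()  # rows 0 and 1 match, row 2 does not
accuracy = 100 * correct / labels.size(0)
```

The predicted classes are the column indices, so only max_index is needed for the accuracy; max_value is unused in the test function as well.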

⑦ Main entry point

if __name__ == '__main__':
    for epoch in range(10):  # train for 10 epochs
        ytrain(epoch)  # train for one epoch
        if epoch % 10 == 9:
            ytest()  # test once after every 10 epochs of training

⑧ Complete code

import torch
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F  # for the relu activation function
import torch.optim as optim

batch_size = 64

transform = transforms.Compose([
    transforms.ToTensor(),  # convert the image to a tensor
    transforms.Normalize((0.1307,), (0.3081,))  # standardize with the mean and std, both computed over the whole training set
])

train_dataset = datasets.MNIST(root='./', train=True, download=True, transform=transform)
train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)

test_dataset = datasets.MNIST(root="./", train=False, download=True, transform=transform)
test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)

class yy_net(torch.nn.Module):
    def __init__(self):
        super(yy_net, self).__init__()
        self.conv1 = torch.nn.Conv2d(1, 16, kernel_size=5)
        self.conv2 = torch.nn.Conv2d(16, 32, kernel_size=5)
        self.maxpool = torch.nn.MaxPool2d(2)
        self.resblock1 = y_res(16)
        self.resblock2 = y_res(32)
        self.linear = torch.nn.Linear(512, 10)  # 32 channels * 4 * 4 = 512 features

    def forward(self, x):
        batch_size = x.shape[0]  # x has shape [B,C,H,W]
        x = self.maxpool(F.relu(self.conv1(x)))
        x = self.resblock1(x)
        x = self.maxpool(F.relu(self.conv2(x)))
        x = self.resblock2(x)
        x = x.view(batch_size, -1)  # flatten to [B, 512]
        x = self.linear(x)
        return x

class y_res(torch.nn.Module):
    def __init__(self, channel):
        super(y_res, self).__init__()
        self.channels = channel
        self.conv1 = torch.nn.Conv2d(channel, channel, kernel_size=3, padding=1)
        self.conv2 = torch.nn.Conv2d(channel, channel, kernel_size=3, padding=1)

    def forward(self, x):
        conv_1 = self.conv1(x)
        relu_1 = F.relu(conv_1)
        conv_2 = self.conv2(relu_1)
        H = conv_2 + x
        final = F.relu(H)
        return final

model = yy_net()
lossf = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.0001, momentum=0.5)

def ytrain(epoch):
    loss_total = 0.0
    for batch_index, data in enumerate(train_loader, 0):
        x, y = data
        # x, y = x.to(device), y.to(device)  # GPU acceleration
        optimizer.zero_grad()
        y_hat = model(x)
        loss = lossf(y_hat, y)
        loss.backward()
        optimizer.step()
        loss_total += loss.item()
        if batch_index % 300 == 299:  # print once every 300 batches
            print("epoch:%d, batch_index:%5d \t loss:%.3f" % (epoch + 1, batch_index + 1, loss_total / 300))
            loss_total = 0.0  # reset the running loss after each report

def ytest():
    correct = 0  # number of correct predictions
    total = 0  # total number of samples
    with torch.no_grad():  # no gradients are needed for testing, which reduces computation
        for data in test_loader:  # read the test samples
            images, labels = data
            # images, labels = images.to(device), labels.to(device)  # GPU acceleration
            pred = model(images)  # one row per sample, ten scores per row; next find the index of the largest score in each row
            pred_maxvalue, pred_maxindex = torch.max(pred.data, dim=1)  # along dim 1 (row by row), return each row's maximum and its index
            total += labels.size(0)  # labels has shape (N,) and holds the correct class of each sample
            correct += (pred_maxindex == labels).sum().item()  # compare predicted indices with labels: a match counts as 1, a mismatch as 0
    print("Accuracy on testset :%d %%" % (100 * correct / total))  # correct predictions / total samples * 100 = accuracy in percent

if __name__ == '__main__':
    for epoch in range(10):  # train for 10 epochs
        ytrain(epoch)  # train for one epoch
        if epoch % 10 == 9:
            ytest()  # test once after every 10 epochs of training