neo4j etl设计

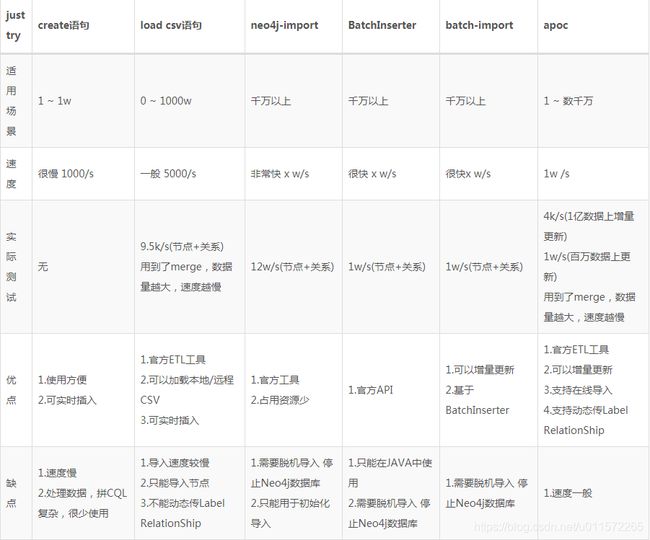

笔者最近碰到了把数据从关系型数据库导入到neo4j的需求,于是笔者翻了neo4j官网资料,发现了neo4j-etl工具,此工具可以将数据从rdbms导入到neo4j,但是由于该工具限制比较多,而且无法不好集成到自己的应用中,最终未采用该方案。于是笔者翻阅资料,找到了这么一张图。

由于笔者需求是定时同步数据,所以不能停机导入,故笔者采用的是load csv命令。

一:存储关系型数据库元数据信息

根据自己需求建立元数据模型

二:根据元数据把数据导入到csv

实例代码,可以根据自己具体的需求,连接数据库,生成sql,查询数据,进行导出。

/**

* 导入数据到csv

* 数据导入

* * 1.根据元数据分别生成节点以及关系的sql(需要做到根据不用类型数据库进行适配,先适配mysql)

* * 2.编写统一查询工具进行连接数据库进行数据查询(需要做到根据不用类型数据库进行适配,先适配mysql)

* * 3.根据查询出的字段顺序,把数据导入到csv

* * 4.动态生成csv存储目录以及文件名,写入map

*/

private void csvProducer(Long dataBaseId) {

PreparedStatement preparedStatement = null;

ResultSet resultSet = null;

Connection connection = null;

String date = DateUtils.getDate("YYYYMMddHHmmss");

csvDirectory = "/NEO4J_IMPORT_"+date;

try {

List metadataDataSources = metadataDataSourceRepository.getSynchroTableByDatasourceId(dataBaseId);

DatabaseInfo datebaseInfoById = datebaseInfoRepository.getDatebaseInfoById(dataBaseId);

Class.forName("com.mysql.cj.jdbc.Driver");

connection = DriverManager.getConnection(datebaseInfoById.getJdbc(), datebaseInfoById.getLoginName(), datebaseInfoById.getPassword());

if (ListUtils.isNotNull(metadataDataSources)) {

nodeCsvMap = new HashMap<>();

File csvFile = new File(path+"/import"+csvDirectory);

csvFile.mkdirs();

Map>> map = new HashMap<>();

for (MetadataDataSource metadataDataSource : metadataDataSources) {

List fields = fieldMap.get(metadataDataSource.getDataId());

String sql = queryEngine.queryTableView(fields, metadataDataSource.getTableName(), metadataDataSource.getNodeName(),databaseInfo.getDatabase());

preparedStatement = connection.prepareStatement(sql);

resultSet = preparedStatement.executeQuery();

ResultSetMetaData metaData = resultSet.getMetaData();

List> arrayList = new ArrayList();

while (resultSet.next() != false) {

List lists = new ArrayList<>();

for (MetadataNodeAttribute attribute:fields) {

lists.add(null == resultSet.getString(attribute.getAliasName())?"":resultSet.getString(attribute.getAliasName()));

}

arrayList.add(lists);

}

map.put(metadataDataSource, arrayList);

}

Set keySet = map.keySet();

List keys = new ArrayList(keySet);

for (MetadataDataSource key : keys) {

String csvFile1 = createCSVFile(map.get(key), "NODE_" + key.getTableName() + "__" + UUIDUtils.getUUid(), csvFile);

nodeCsvMap.put(key.getDataId(),csvFile1);

}

}

List relationships = metadataDataRelationshipRepository.getSynchroTableByDatasourceId(dataBaseId);

if (ListUtils.isNotNull(relationships)){

relCsvMap = new HashMap<>();

File csvFile = new File(path+"/import"+csvDirectory);

Map>> maps = new HashMap<>();

for (MetadataDataRelationship relationship : relationships) {

// 根据关系表查 数据

List> list = new ArrayList<>();

String sql = queryEngine.queryFkRelEtl(relationship, nodeMap.get(relationship.getDataIdForeign()).getTableName(), databaseInfo.getDatabase(), relationship.getManyTablePrimary());

preparedStatement = connection.prepareStatement(sql);

resultSet = preparedStatement.executeQuery();

ResultSetMetaData metaData = resultSet.getMetaData();

int columnCount = metaData.getColumnCount();

while (resultSet.next() != false) {

for (int i = 1; i <= columnCount; i++) {

List lists = new ArrayList<>();

lists.add(null == resultSet.getString(relationship.getRelationshipForeign())?"":resultSet.getString(relationship.getRelationshipForeign()));

lists.add(null == resultSet.getString(relationship.getManyTablePrimary())?"":resultSet.getString(relationship.getManyTablePrimary()));

list.add(lists);

}

}

maps.put(relationship,list);

}

Set keySet = maps.keySet();

List keys = new ArrayList(keySet);

for (MetadataDataRelationship key : keys) {

String csvFile1 = createCSVFile(maps.get(key), "RE_" + key.getRelationshipType() + "__" + UUIDUtils.getUUid(), csvFile);

relCsvMap.put(key.getRelationId(),csvFile1);

}

}

} catch (Exception e) {

e.printStackTrace();

}finally {

closeAllResource(connection ,preparedStatement,resultSet);

}

} 三:根据元数据生成cypher文件

模拟官方neo4j-etl jar包生成cypher文件的过程。

private String cypherProducer() throws IOException {

//1.生成约束cypher

//2.生成节点load csv语句

//3.生成关系load csv语句

FileWriter fw = null;

StringBuffer cypherFile = new StringBuffer();

String cypherUrl = (path+"/import"+csvDirectory+"/load-csv.cypher").replaceAll("\\\\", "/");

try {

File file = new File(cypherUrl);

if(!file.exists()){

file.createNewFile();

}

cypherFile.append(":begin"+"\r\n");

//拼装约束cypher

nodeMap.forEach((k, v) -> {

MetadataDataSource metadataDataSource = nodeMap.get(k);

String pk = pkMap.get(k);

try {

cypherFile.append("CREATE CONSTRAINT ON (n:`"+metadataDataSource.getNodeName()+"`) ASSERT n.`"+pk+"` IS UNIQUE;"+"\r\n");

} catch (Exception e) {

e.printStackTrace();

}

});

cypherFile.append(":commit"+"\r\n");

//生成节点load csv语句

nodeMap.forEach((k, v) -> {

try {

cypherFile.append("USING PERIODIC COMMIT"+"\r\n");

cypherFile.append("LOAD CSV FROM 'file://"+nodeCsvMap.get(k).replaceAll("\\\\", "/")+"' AS row FIELDTERMINATOR ','"+"\r\n");

int pkIndex = this.finkPk(k);

cypherFile.append("MERGE (n:`"+nodeMap.get(k).getNodeName()+"` {`"+pkMap.get(k)+"`: row["+pkIndex+"]})"+"\r\n");

cypherFile.append("SET ");

for(int j=0;j {

try {

cypherFile.append("USING PERIODIC COMMIT"+"\r\n");

cypherFile.append("LOAD CSV FROM 'file://"+relCsvMap.get(rel.getRelationId()).replaceAll("\\\\", "/")+"' AS row FIELDTERMINATOR ','"+"\r\n");

String primaryName = this.getFieldAlias(rel.getDataIdPrimary(),rel.getRelationshipPrimary());

String foreignName = this.getFieldAlias(rel.getDataIdForeign(),rel.getManyTablePrimary());

cypherFile.append("MATCH (a:`"+nodeMap.get(rel.getDataIdPrimary()).getNodeName()+"` {`"+primaryName+"`: row[0]})"+"\r\n");

cypherFile.append("MATCH (b:`"+nodeMap.get(rel.getDataIdForeign()).getNodeName()+"` {`"+foreignName+"`: row[1]})"+"\r\n");

cypherFile.append("MERGE (a)-[r:`"+rel.getRelationshipType()+"`]->(b);"+"\r\n");

} catch (Exception e) {

e.printStackTrace();

}

});

fw = new FileWriter(cypherUrl, true);

fw.write(cypherFile.toString());

fw.flush();

}catch (IOException e){

e.printStackTrace();

}finally {

if(null != fw){

fw.close();

}

}

return cypherUrl;

} 四:执行命令进行导入

private void export(String cypherUrl) throws FileNotFoundException {

SummaryCounters summaryCounters;

summaryCounters = InternalSummaryCounters.EMPTY_STATS;

Scanner scanner = new Scanner(new BufferedReader(new FileReader(cypherUrl))).useDelimiter(END_OF_STATEMENT);

try (Session session = neo4jConfig.neo4jDriver().session();){

Transaction tx = null;

while (scanner.hasNext()) {

String statement = scanner.next().trim();

if (statement.startsWith(String.format(":begin%n", new Object[0]))) {

tx = session.beginTransaction();

statement = statement.substring(String.format(":begin%n", new Object[0]).length());

}

if (statement.startsWith(String.format(":commit%n", new Object[0]))) {

if (tx != null) {

tx.success();

tx.close();

tx = null;

}

statement = statement.substring(String.format(":commit%n", new Object[0]).length());

}

if (statement.trim().isEmpty()){

continue;

}

try {

StatementResult result = tx != null ? tx.run(statement) : session.run(statement);

summaryCounters = this.updateSummaryCounters(summaryCounters, result.consume());

}catch (Exception e){

Loggers.Default.log(Level.SEVERE,e.getMessage());

}

}

}

this.printResult(summaryCounters);

}

private SummaryCounters updateSummaryCounters(SummaryCounters summaryCounters, ResultSummary resultSummary) {

SummaryCounters newCounters = resultSummary.counters();

return new InternalSummaryCounters(summaryCounters.nodesCreated() + newCounters.nodesCreated(), summaryCounters.nodesDeleted() + newCounters.nodesDeleted(), summaryCounters.relationshipsCreated() + newCounters.relationshipsCreated(), summaryCounters.relationshipsDeleted() + newCounters.relationshipsDeleted(), summaryCounters.propertiesSet() + newCounters.propertiesSet(), summaryCounters.labelsAdded() + newCounters.labelsAdded(), summaryCounters.labelsRemoved() + newCounters.labelsRemoved(), summaryCounters.indexesAdded() + newCounters.indexesAdded(), summaryCounters.indexesRemoved() + newCounters.indexesRemoved(), summaryCounters.constraintsAdded() + newCounters.constraintsAdded(), summaryCounters.constraintsRemoved() + newCounters.constraintsRemoved());

}

public void printResult(SummaryCounters summary) {

Loggers.Default.log(Level.INFO, String.format("(+%d,-%d) Nodes, (+%d,-%d) Labels, (+%d,-%d) Rels, (%d) Props, (+%d,-%d) Indexes, (+%d,-%d) Constraints.%n", summary.nodesCreated(), summary.nodesDeleted(), summary.labelsAdded(), summary.labelsRemoved(), summary.relationshipsCreated(), summary.relationshipsDeleted(), summary.propertiesSet(), summary.indexesAdded(), summary.indexesRemoved(), summary.constraintsAdded(), summary.constraintsRemoved()));

}