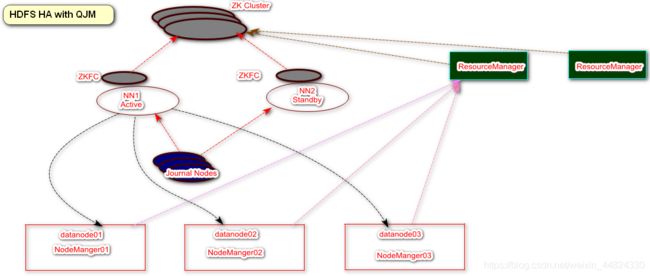

Hadoop High Availability

I. Architecture

| HadoopNode01 | HadoopNode02 | HadoopNode03 |
|---|---|---|
| nn1 | nn2 | |
| JournalNode | JournalNode | JournalNode |
| zkfc | zkfc | |
| DataNode | DataNode | DataNode |
| zk01 | zk02 | zk03 |
| | rm1 | rm2 |
| NodeManager | NodeManager | NodeManager |

II. HDFS HA

1) Prepare the base environment

(1) Map hostnames to IP addresses

[root@HadoopNodeX ~]# vi /etc/hosts

192.168.11.20 HadoopNode00

192.168.11.21 HadoopNode01

192.168.11.22 HadoopNode02

192.168.11.23 HadoopNode03

192.168.11.31 ZK01

192.168.11.32 ZK02

192.168.11.33 ZK03
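Every node needs the same entries. A minimal sketch for adding them without creating duplicates when this step is re-run; `add_host_entry` is a hypothetical helper, and it takes the target file as an argument so it can be tried on a scratch copy before touching /etc/hosts:

```shell
# add_host_entry FILE IP NAME
# Hypothetical helper: appends "IP NAME" to FILE unless NAME already appears
# as a hostname on a non-comment line (compared case-insensitively).
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # In a hosts file, fields 2..NF are the hostname and its aliases.
  if awk -v n="$name" 'BEGIN { n = tolower(n) }
      !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if (tolower($i) == n) found = 1 }
      END { exit !found }' "$file" 2>/dev/null; then
    return 0   # already present: leave the file unchanged
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (run as root on each node, once per entry listed above):
# add_host_entry /etc/hosts 192.168.11.21 HadoopNode01
```

The comparison is case-insensitive because hostname lookups are, which is also why `zk01` in the Hadoop configuration files resolves through the `ZK01` entry here.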

(2) Disable the firewall

[root@HadoopNodeX ~]# service iptables stop

[root@HadoopNodeX ~]# chkconfig iptables off

(3) Synchronize the system clocks

[root@HadoopNodeX ~]# yum -y install ntpdate

[root@HadoopNodeX ~]# ntpdate -u ntp.api.bz

25 Sep 11:19:26 ntpdate[1749]: step time server 114.118.7.163 offset 201181.363384 sec

[root@HadoopNodeX ~]# date

Wed Sep 25 11:19:52 CST 2019

(4) Configure passwordless SSH login

[root@HadoopNodeX ~]# ssh-keygen -t rsa # run this on all three machines first, then run the commands below

[root@HadoopNodeX ~]# ssh-copy-id HadoopNode01

[root@HadoopNodeX ~]# ssh-copy-id HadoopNode02

[root@HadoopNodeX ~]# ssh-copy-id HadoopNode03

(5) Java environment

export JAVA_HOME=/home/java/jdk1.8.0_181

export PATH=$PATH:$JAVA_HOME/bin

2) Start the ZooKeeper cluster
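A minimal sketch of this step, run from one machine. It assumes ZooKeeper is already installed and configured (zoo.cfg and myid) on the hosts named in ha.zookeeper.quorum (zk01, zk02, zk03), that root can ssh to them without a password, and that JAVA_HOME is visible to non-interactive ssh sessions. The install path in `ZK_HOME` and the function name `zk_cluster` are hypothetical:

```shell
# Hypothetical values: replace with the real ZooKeeper hosts and install path.
ZK_HOSTS="zk01 zk02 zk03"
ZK_HOME=${ZK_HOME:-/home/zookeeper/zookeeper}

# zk_cluster ACTION: run "zkServer.sh ACTION" (start, status or stop) on every
# ZooKeeper host over ssh; returns non-zero if any host reported a failure.
zk_cluster() {
  local action="$1" rc=0 host
  for host in $ZK_HOSTS; do
    echo "== $host: zkServer.sh $action"
    ssh "root@$host" "$ZK_HOME/bin/zkServer.sh $action" || rc=1
  done
  return "$rc"
}

# Usage:
# zk_cluster start
# zk_cluster status
```

Run `zk_cluster start` before `hdfs zkfc -formatZK` and before starting the ZKFC daemons, since both need a reachable quorum. Afterwards, `zk_cluster status` should show one server in "Mode: leader" and the others in "Mode: follower".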

3) Install and configure Hadoop

(1) Extract the archive and set the environment variables

export HADOOP_HOME=/home/hadoop/hadoop-2.6.0

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

(2) Configure core-site.xml

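<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://mycluster</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/hadoop-2.6.0/hadoop-${user.name}</value>

</property>

<property>

<name>fs.trash.interval</name>

<value>30</value>

</property>

<property>

<name>net.topology.script.file.name</name>

<value>/home/hadoop/hadoop-2.6.0/etc/hadoop/rack.sh</value>

</property>

</configuration>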
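Hand-editing these files is error-prone: a single unclosed tag makes the daemons fail when they parse the configuration. A minimal sketch that generates a *-site.xml file from name/value pairs instead; `hadoop_conf` is a hypothetical helper, and the commented example reuses the core-site.xml settings listed above:

```shell
# hadoop_conf NAME VALUE [NAME VALUE ...]
# Hypothetical helper: prints a Hadoop configuration document with one
# <property> element per name/value pair. Values are NOT XML-escaped,
# so they must not contain &, < or >.
hadoop_conf() {
  echo '<?xml version="1.0"?>'
  echo '<configuration>'
  while [ "$#" -ge 2 ]; do
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
    shift 2
  done
  echo '</configuration>'
}

# Example: regenerate core-site.xml (single quotes keep ${user.name} literal,
# so Hadoop expands it at runtime rather than the shell).
# hadoop_conf \
#   fs.defaultFS hdfs://mycluster \
#   hadoop.tmp.dir '/home/hadoop/hadoop-2.6.0/hadoop-${user.name}' \
#   fs.trash.interval 30 \
#   net.topology.script.file.name /home/hadoop/hadoop-2.6.0/etc/hadoop/rack.sh \
#   > /home/hadoop/hadoop-2.6.0/etc/hadoop/core-site.xml
```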

(3) Create the rack-awareness script

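In /home/hadoop/hadoop-2.6.0/etc/hadoop/, create a file named rack.sh and paste in the following content. Hadoop invokes this script with one or more DataNode addresses as arguments and reads one rack path per argument, whitespace-separated, from its standard output; addresses missing from the mapping file fall back to /default-rack.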

#!/bin/bash

while [ $# -gt 0 ] ; do

nodeArg=$1

exec</home/hadoop/hadoop-2.6.0/etc/hadoop/topology.data

result=""

while read line ; do

ar=( $line )

if [ "${ar[0]}" = "$nodeArg" ] ; then

result="${ar[1]}"

fi

done

shift

if [ -z "$result" ] ; then

echo -n "/default-rack "

else

echo -n "$result "

fi

done

Because Hadoop executes the script directly, it must also be made executable: chmod u+x /home/hadoop/hadoop-2.6.0/etc/hadoop/rack.sh

(4) Create the rack mapping file

Create /home/hadoop/hadoop-2.6.0/etc/hadoop/topology.data, listing one address and its rack per line:

192.168.11.21 /rack1

192.168.11.22 /rack1

192.168.11.23 /rack2
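The mapping can be checked before Hadoop ever calls the script. A minimal sketch: `resolve_racks` is a hypothetical, parameterized copy of the rack.sh lookup that takes the mapping file as its first argument, so it can be run against a scratch copy of topology.data:

```shell
# resolve_racks DATA_FILE ADDRESS...
# Hypothetical helper: same lookup as rack.sh, with the data file passed in.
resolve_racks() {
  local data="$1" node result ip rack rest
  shift
  for node in "$@"; do
    result=""
    # Each line is "<address> <rack>"; as in rack.sh, the last match wins.
    # The "|| [ -n ... ]" also handles a final line without a trailing newline.
    while read -r ip rack rest || [ -n "$ip" ]; do
      if [ "$ip" = "$node" ]; then
        result=$rack
      fi
    done < "$data"
    # One rack path per argument, each followed by a space.
    printf '%s ' "${result:-/default-rack}"
  done
}
```

The installed script can be exercised the same way: `/home/hadoop/hadoop-2.6.0/etc/hadoop/rack.sh 192.168.11.21 192.168.11.23` should print `/rack1 /rack2 `. Once HDFS is running, `hdfs dfsadmin -printTopology` shows the rack each DataNode was assigned to.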

(5) Configure hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>zk01:2181,zk02:2181,zk03:2181</value>

</property>

<property>

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<property>

<name>dfs.ha.namenodes.mycluster</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn1</name>

<value>HadoopNode01:9000</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn2</name>

<value>HadoopNode02:9000</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://HadoopNode01:8485;HadoopNode02:8485;HadoopNode03:8485/mycluster</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

</configuration>
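dfs.namenode.shared.edits.dir lists every JournalNode as host:port, separated by semicolons, followed by a journal ID (here the nameservice name, mycluster). The active NameNode needs a majority of these JournalNodes to acknowledge each edit-log write, so three JournalNodes tolerate the loss of one. A small sketch that builds the value from a host list; `qjournal_uri` is a hypothetical helper, and 8485 is the default JournalNode RPC port:

```shell
# qjournal_uri JOURNAL_ID HOST...
# Hypothetical helper: prints qjournal://host1:8485;host2:8485;.../JOURNAL_ID
qjournal_uri() {
  local journal_id="$1" port=8485 uri="" host
  shift
  for host in "$@"; do
    # Separate entries with ";" (no separator before the first one).
    uri="${uri:+$uri;}$host:$port"
  done
  printf 'qjournal://%s/%s\n' "$uri" "$journal_id"
}

# Example:
# qjournal_uri mycluster HadoopNode01 HadoopNode02 HadoopNode03
```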

(6) Edit the slaves file (/home/hadoop/hadoop-2.6.0/etc/hadoop/slaves)

HadoopNode01

HadoopNode02

HadoopNode03

(7) Start the cluster

1) Start HDFS (first-time initialization)

[root@HadoopNodeX ~]# rm -rf /home/hadoop/hadoop-2.6.0/hadoop-root/* # on every node: remove data left by any earlier format

[root@HadoopNodeX ~]# hadoop-daemons.sh start journalnode # run on any one node; hadoop-daemons.sh starts a JournalNode on every host listed in slaves

[root@HadoopNode01 ~]# hdfs namenode -format

[root@HadoopNode01 ~]# hadoop-daemon.sh start namenode

[root@HadoopNode02 ~]# hdfs namenode -bootstrapStandby # copy the metadata from the formatted NameNode on HadoopNode01

[root@HadoopNode02 ~]# hadoop-daemon.sh start namenode

[root@HadoopNode01|02 ~]# hdfs zkfc -formatZK # run once, on either HadoopNode01 or HadoopNode02

[root@HadoopNode01 ~]# hadoop-daemon.sh start zkfc

[root@HadoopNode02 ~]# hadoop-daemon.sh start zkfc

[root@HadoopNodeX ~]# hadoop-daemon.sh start datanode # on each of the three nodes

[root@HadoopNode01 ~]# jps

2324 JournalNode

2661 DFSZKFailoverController

2823 Jps

2457 NameNode

2746 DataNode

[root@HadoopNode02 ~]# jps

2595 DataNode

2521 DFSZKFailoverController

2681 Jps

2378 NameNode

2142 JournalNode

[root@HadoopNode03 .ssh]# jps

2304 Jps

2146 JournalNode

2229 DataNode
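Instead of reading jps output by eye, the expected daemons can be checked per host. A minimal sketch; `check_daemons` is a hypothetical helper that reads jps output on standard input, and the expected lists in the comments come from the jps output shown above:

```shell
# check_daemons DAEMON...   (reads `jps` output on stdin)
# Hypothetical helper: prints each expected daemon that is not running and
# returns non-zero if any are missing.
check_daemons() {
  local running missing=0 d
  # jps prints "<pid> <main class>"; keep only the class names.
  running=$(awk '{print $2}')
  for d in "$@"; do
    if ! printf '%s\n' "$running" | grep -qx "$d"; then
      echo "missing: $d"
      missing=1
    fi
  done
  return "$missing"
}

# Expected HDFS daemons in this layout:
#   HadoopNode01, HadoopNode02: jps | check_daemons NameNode JournalNode DFSZKFailoverController DataNode
#   HadoopNode03:               jps | check_daemons JournalNode DataNode
```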

2) Routine operation

[root@HadoopNode01 ~]# stop-dfs.sh

[root@HadoopNode01 ~]# start-dfs.sh

III. YARN HA

(1) Configure yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>zk01:2181,zk02:2181,zk03:2181</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>rmcluster01</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>HadoopNode02</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>HadoopNode03</value>

</property>

</configuration>

(2) Configure mapred-site.xml (Hadoop 2.6.0 ships only mapred-site.xml.template in etc/hadoop; copy it to mapred-site.xml first)

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

(3) Start YARN

[root@HadoopNode02 ~]# yarn-daemon.sh start resourcemanager

[root@HadoopNode03 ~]# yarn-daemon.sh start resourcemanager

[root@HadoopNodeX ~]# yarn-daemon.sh start nodemanager # on each of the three nodes
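With automatic failover working, exactly one NameNode and exactly one ResourceManager should report the active state while the other reports standby. A minimal sketch of that check; `exactly_one_active` is a hypothetical helper, while the state queries in the comments use the standard `hdfs haadmin -getServiceState` and `yarn rmadmin -getServiceState` commands with the IDs configured above:

```shell
# exactly_one_active: reads "<id> <state>" lines on stdin, prints them, and
# succeeds only if exactly one id reports "active" (hypothetical helper).
exactly_one_active() {
  local id state active=0
  while read -r id state; do
    # An empty state means the query failed (e.g. the daemon is down).
    echo "$id: ${state:-unknown}"
    if [ "$state" = "active" ]; then
      active=$((active + 1))
    fi
  done
  [ "$active" -eq 1 ]
}

# NameNodes:
# for id in nn1 nn2; do echo "$id $(hdfs haadmin -getServiceState "$id")"; done | exactly_one_active
# ResourceManagers:
# for id in rm1 rm2; do echo "$id $(yarn rmadmin -getServiceState "$id")"; done | exactly_one_active
```

Stopping the active NameNode (hadoop-daemon.sh stop namenode on its host) and re-running the first check is a simple way to confirm that ZKFC promotes the standby.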