CDH 6.3.2 High-Availability Installation Steps

Official installation guide:

https://docs.cloudera.com/documentation/enterprise/6/6.3/topics/cm_ig_reqs_space.html

1. Preparation

Five Alibaba Cloud servers running CentOS 7.6, plus JDK 1.8, MySQL 5.7 and the CDH 6.3.2 installation packages.

The servers' IPs are 192.168.5.8, 192.168.5.9, 192.168.5.10, 192.168.5.11 and 192.168.5.12.

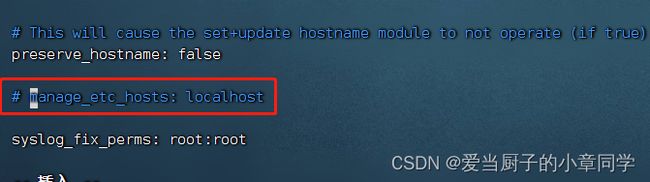

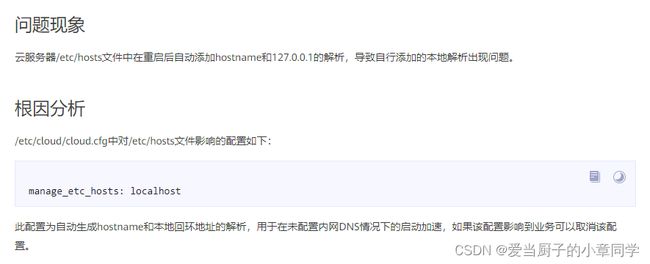

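On Alibaba Cloud servers, comment out one line in the following file. Otherwise the system re-adds its own local entry to /etc/hosts after a reboot (all servers).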

[root@iZbp1buitxr2g25cqu6hjkZ ~]# vim /etc/cloud/cloud.cfg

[root@iZbp1buitxr2g25cqu6hjkZ ~]#
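The line to comment out is not shown above. On cloud-init based images, the setting that rewrites /etc/hosts at boot is usually `manage_etc_hosts`. The sketch below assumes that is the line meant here, so check your own cloud.cfg before running it. It comments out an active `manage_etc_hosts:` line and leaves a `.bak` copy of the original file. `comment_out_manage_etc_hosts` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: comment out cloud-init's manage_etc_hosts setting so /etc/hosts
# is not regenerated on reboot.
# ASSUMPTION: the line to disable is the "manage_etc_hosts" key; verify
# this against your own /etc/cloud/cloud.cfg first.
comment_out_manage_etc_hosts() {
  local cfg="${1:-/etc/cloud/cloud.cfg}"
  # -i.bak edits in place and keeps a backup copy next to the file.
  # Only lines that start (after optional spaces) with the key are changed,
  # so a line that is already commented out is left alone.
  sed -i.bak 's/^\([[:space:]]*\)manage_etc_hosts:/\1# manage_etc_hosts:/' "$cfg"
}
```

For example, run `comment_out_manage_etc_hosts /etc/cloud/cloud.cfg`, then `grep -n manage_etc_hosts /etc/cloud/cloud.cfg` to confirm. Running it twice is harmless because an already commented line no longer matches.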

2. Machine list

Machines (HA here means high availability for HDFS and YARN):

| IP | Hostname | CPU / memory |
|---|---|---|
| 192.168.5.8 | hadoop101 | 8 cores / 32 GB |
| 192.168.5.9 | hadoop102 | 8 cores / 32 GB |
| 192.168.5.10 | hadoop103 | 8 cores / 16 GB |
| 192.168.5.11 | hadoop104 | 8 cores / 16 GB |
| 192.168.5.12 | hadoop105 | 8 cores / 16 GB |

3. Set the hostname of each machine

(all machines)

192.168.5.8

[root@iZkzkn8bx1pjajZ ~]# hostnamectl set-hostname hadoop101

-- log out and reconnect

[root@iZbp1ch91lmmx5lalz1i6iZ ~]# exit

logout

Connection closed.

Connecting to host...

Connected to host.

Last login: Tue Jun 28 09:03:59 2022 from 117.64.249.192

Welcome to Alibaba Cloud Elastic Compute Service !

[root@hadoop101 ~]#

192.168.5.9

[root@iZkzkn8bx1pjakZ ~]# hostnamectl set-hostname hadoop102

-- log out and reconnect

[root@iZbp1ch91lmmx5lalz1i6iZ ~]# exit

logout

Connection closed.

Connecting to host...

Connected to host.

Last login: Tue Jun 28 09:03:59 2022 from 117.64.249.192

Welcome to Alibaba Cloud Elastic Compute Service !

192.168.5.10

[root@iZkzkn8bx1pjaiZ ~]# hostnamectl set-hostname hadoop103

-- log out and reconnect

[root@iZbp1ch91lmmx5lalz1i6iZ ~]# exit

logout

Connection closed.

Connecting to host...

Connected to host.

Last login: Tue Jun 28 09:03:59 2022 from 117.64.249.192

Welcome to Alibaba Cloud Elastic Compute Service !

192.168.5.11

[root@iZkzkn8bx1pjaiZ ~]# hostnamectl set-hostname hadoop104

-- log out and reconnect

[root@iZbp1ch91lmmx5lalz1i6iZ ~]# exit

logout

Connection closed.

Connecting to host...

Connected to host.

Last login: Tue Jun 28 09:03:59 2022 from 117.64.249.192

Welcome to Alibaba Cloud Elastic Compute Service !

192.168.5.12

[root@iZkzkn8bx1pjaiZ ~]# hostnamectl set-hostname hadoop105

-- log out and reconnect

[root@iZbp1ch91lmmx5lalz1i6iZ ~]# exit

logout

Connection closed.

Connecting to host...

Connected to host.

Last login: Tue Jun 28 09:03:59 2022 from 117.64.249.192

Welcome to Alibaba Cloud Elastic Compute Service !
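You do not have to type each hostname by hand: the IP-to-hostname mapping from the machine table can be kept in one place. This is a minimal sketch. `hostname_for_ip` is a hypothetical helper, not part of any tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map the private IPs from the machine table to hostnames.
declare -A HOST_BY_IP=(
  [192.168.5.8]=hadoop101
  [192.168.5.9]=hadoop102
  [192.168.5.10]=hadoop103
  [192.168.5.11]=hadoop104
  [192.168.5.12]=hadoop105
)

# Print the hostname for an IP, or fail with a message if the IP is unknown.
hostname_for_ip() {
  local name="${HOST_BY_IP[$1]:-}"
  if [ -z "$name" ]; then
    echo "unknown IP: $1" >&2
    return 1
  fi
  echo "$name"
}
```

Run it on each machine, assuming the first address reported by `hostname -I` is the private IP listed in the table: `hostnamectl set-hostname "$(hostname_for_ip "$(hostname -I | awk '{print $1}')")"`. Then log out and reconnect.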

4. Edit the hosts file

(all machines)

[root@hadoop101 /]# vim /etc/hosts

-- comment out the default line for the private IP (on hadoop101: # 192.168.5.8 iZbp1ch91lmmx5lalz1i6iZ iZbp1ch91lmmx5lalz1i6iZ) and add the following entries:

192.168.5.8 hadoop101

192.168.5.9 hadoop102

192.168.5.10 hadoop103

192.168.5.11 hadoop104

192.168.5.12 hadoop105
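Editing /etc/hosts by hand on five machines is easy to get wrong. The sketch below appends the same five entries and skips any hostname that already has an active (uncommented) entry, so it can be re-run safely. `add_cluster_hosts` is a hypothetical name. It does not comment out the default cloud line; that still has to be done as described above.

```shell
#!/usr/bin/env bash
# Sketch: append the cluster entries to a hosts file, skipping any hostname
# that already has an uncommented entry, so the script can be re-run safely.
CLUSTER_HOSTS=(
  "192.168.5.8 hadoop101"
  "192.168.5.9 hadoop102"
  "192.168.5.10 hadoop103"
  "192.168.5.11 hadoop104"
  "192.168.5.12 hadoop105"
)

add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}" entry name
  for entry in "${CLUSTER_HOSTS[@]}"; do
    name="${entry##* }"   # the hostname is the last field of the entry
    # Match the name as a separate field on a line that is not commented out.
    if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
      echo "$entry" >> "$hosts_file"
    fi
  done
}
```

Usage: run `add_cluster_hosts /etc/hosts`, then `getent hosts hadoop103` to check that the name resolves.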

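5. Disable the firewall

(all machines)

# If all machines in the cluster are on an internal network, disabling the firewall is fine. If they are exposed to the public network, keep the firewall running and open only the required ports instead.

# Run the following on every machine. stop turns the firewall off until the next reboot; disable keeps it from starting at boot. Run both to turn it off now and keep it off.

# Check the firewall status

[root@hadoop101 /]# systemctl status firewalld.service

# If it is running, stop it

[root@hadoop101 /]# systemctl stop firewalld.service

# Disable it permanently (prevents it from starting at boot)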

[root@hadoop101 /]# systemctl disable firewalld.service
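The check-then-stop sequence above can be wrapped in one function. This is a minimal sketch, meant only for the internal-network case described above. `disable_firewalld` is a hypothetical name. It relies on `systemctl is-active --quiet`, which exits 0 only when the unit is running.

```shell
#!/usr/bin/env bash
# Sketch: stop firewalld only if it is running, then disable it at boot.
# Intended only for clusters on an internal network.
disable_firewalld() {
  # "is-active --quiet" prints nothing and returns 0 when the unit is running.
  if systemctl is-active --quiet firewalld; then
    systemctl stop firewalld
  fi
  # Keep firewalld from starting again after a reboot.
  systemctl disable firewalld
}
```

Run `disable_firewalld` on each machine. Afterwards, `systemctl is-enabled firewalld` should print `disabled`.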

6. Configure passwordless SSH login

(configure on the master nodes hadoop101 and hadoop102)

[root@hadoop101 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa): (press Enter)

Enter passphrase (empty for no passphrase): (press Enter)

Enter same passphrase again: (press Enter)

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:nrujQC7ptVXbrqXXjlsS4qksnoDZ+XYnPIUJc+T+9Kg root@hadoop101

The key's randomart image is:

+---[RSA 2048]----+

| |

| . |

| o |

| o o |

| .= oS . |

| + = ++o* . |

|o * +..+*o+.. |

| . =o*=.==o+. |

| .o=oE*==+o. |

+----[SHA256]-----+

# Copy the public key to every machine, including this one

[root@hadoop101 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop101

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'hadoop101 (192.168.10.17)' can't be established.

ECDSA key fingerprint is SHA256:LatImGYCEi0W9LPur0rwoix5tTpWXkJDxRHaHcqiMbg.

ECDSA key fingerprint is MD5:6e:f7:8c:be:39:c7:cf:55:75:bd:85:56:39:b9:29:72.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@hadoop101's password: (enter the machine password) xxxxx

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop101'"

and check to make sure that only the key(s) you wanted were added.

[root@hadoop101 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop102

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'hadoop102 (192.168.10.18)' can't be established.

ECDSA key fingerprint is SHA256:LatImGYCEi0W9LPur0rwoix5tTpWXkJDxRHaHcqiMbg.

ECDSA key fingerprint is MD5:6e:f7:8c:be:39:c7:cf:55:75:bd:85:56:39:b9:29:72.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@hadoop102's password: (enter the machine password) xxxxx

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop102'"

and check to make sure that only the key(s) you wanted were added.

[root@hadoop101 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop103

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'hadoop103 (192.168.10.19)' can't be established.

ECDSA key fingerprint is SHA256:LatImGYCEi0W9LPur0rwoix5tTpWXkJDxRHaHcqiMbg.

ECDSA key fingerprint is MD5:6e:f7:8c:be:39:c7:cf:55:75:bd:85:56:39:b9:29:72.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@hadoop103's password: (enter the machine password) xxxxx

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop103'"

and check to make sure that only the key(s) you wanted were added.

[root@hadoop101 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop104

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'hadoop104 (192.168.10.7)' can't be established.

ECDSA key fingerprint is SHA256:LatImGYCEi0W9LPur0rwoix5tTpWXkJDxRHaHcqiMbg.

ECDSA key fingerprint is MD5:6e:f7:8c:be:39:c7:cf:55:75:bd:85:56:39:b9:29:72.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@hadoop104's password: (enter the machine password) xxxxx

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop104'"

and check to make sure that only the key(s) you wanted were added.

[root@hadoop101 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop105

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'hadoop105 (192.168.10.8)' can't be established.

ECDSA key fingerprint is SHA256:LatImGYCEi0W9LPur0rwoix5tTpWXkJDxRHaHcqiMbg.

ECDSA key fingerprint is MD5:6e:f7:8c:be:39:c7:cf:55:75:bd:85:56:39:b9:29:72.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@hadoop105's password: (enter the machine password) xxxxx

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop105'"

and check to make sure that only the key(s) you wanted were added.

# Verify passwordless login (type exit after each login)

[root@hadoop101 /]# ssh hadoop101

[root@hadoop101 /]# ssh hadoop102

[root@hadoop101 /]# ssh hadoop103

[root@hadoop101 /]# ssh hadoop104

[root@hadoop101 /]# ssh hadoop105
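The interactive steps above can be scripted. The sketch below makes these assumptions: the key lives at the default ~/.ssh/id_rsa, the five hostnames already resolve through /etc/hosts, and `setup_ssh_keys` / `verify_ssh_keys` are hypothetical helper names. `ssh-keygen -N ''` creates the key with an empty passphrase without prompting. `ssh -o BatchMode=yes` makes the check fail instead of falling back to a password prompt, so a missing key shows up as an error.

```shell
#!/usr/bin/env bash
# Sketch: generate a key without prompts, copy it to every node, then check
# that key-based login works. Set DRY_RUN=1 to only print the commands.
HOSTS=(hadoop101 hadoop102 hadoop103 hadoop104 hadoop105)

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

setup_ssh_keys() {
  run mkdir -p -m 700 ~/.ssh
  # -N '' sets an empty passphrase; -f fixes the key path. Skip if a key exists.
  [ -f ~/.ssh/id_rsa ] || run ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa
  local host
  for host in "${HOSTS[@]}"; do
    # Still prompts once for each host's root password.
    run ssh-copy-id -i ~/.ssh/id_rsa.pub "$host"
  done
}

verify_ssh_keys() {
  local host
  for host in "${HOSTS[@]}"; do
    # BatchMode=yes fails instead of asking for a password.
    run ssh -o BatchMode=yes "$host" hostname
  done
}
```

Run `setup_ssh_keys` first. It still asks for each root password and asks you to confirm each new host key. Then run `verify_ssh_keys`; each host should print its own hostname. `DRY_RUN=1 setup_ssh_keys` only prints the commands. Repeat on hadoop102, since it is also a master node.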

7. Create a file distribution script (xsync)

(configure on the master node hadoop101)

[root@hadoop101 ~]# cd /usr/local/bin/

[root@hadoop101 bin]# touch xsync

[root@hadoop101 bin]# vim xsync

Add the following script:

#!/bin/bash

#1. Get the number of arguments; exit if there are none

pcount=$#

if [ $pcount -lt 1 ]

then

echo Not Enough Arguments!

exit 1

fi

#2. Loop over every other machine in the cluster

# Alternatively:

# for host in hadoop{102..105};

for host in hadoop102 hadoop103 hadoop104 hadoop105

do

echo ==================== $host ====================

#3. Loop over all given files and directories and send them one by one

for file in "$@"

do

#4. Check whether the file exists

if [ -e "$file" ]

then

#5. Get the absolute path of the parent directory (cd -P resolves symlinks)

pdir=$(cd -P "$(dirname "$file")"; pwd)

echo pdir=$pdir

#6. Get the file name

fname=$(basename "$file")

echo fname=$fname

#7. Over ssh, create the directory on $host recursively (mkdir -p does nothing if it already exists)

ssh $host "mkdir -p $pdir"

#8. Sync the file into $pdir on $host as user $USER

rsync -av "$pdir/$fname" $USER@$host:$pdir

else

echo "$file does not exist!"

fi

done

done

Make xsync executable and test it:

[root@hadoop101 bin]# chmod 777 xsync

[root@hadoop101 bin]# xsync xsync

==================== hadoop102 ====================

pdir=/usr/local/bin

fname=xsync

sending incremental file list

xsync

sent 1,170 bytes received 35 bytes 2,410.00 bytes/sec

total size is 1,080 speedup is 0.90

==================== hadoop103 ====================

pdir=/usr/local/bin

fname=xsync

sending incremental file list

xsync

sent 1,170 bytes received 35 bytes 2,410.00 bytes/sec

total size is 1,080 speedup is 0.90

==================== hadoop104 ====================

pdir=/usr/local/bin

fname=xsync

sending incremental file list

xsync

sent 1,170 bytes received 35 bytes 803.33 bytes/sec

total size is 1,080 speedup is 0.90

==================== hadoop105 ====================

pdir=/usr/local/bin

fname=xsync

sending incremental file list

xsync

sent 1,170 bytes received 35 bytes 803.33 bytes/sec

total size is 1,080 speedup is 0.90

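8. Install the JDK

(all nodes) Use the JDK from the official Cloudera download (the oracle-j2sdk1.8 RPM).

(1) On hadoop101, create a software directory under /opt

[root@hadoop101 opt]# mkdir software

(2) Upload oracle-j2sdk1.8-1.8.0+update181-1.x86_64.rpm, install it, and add the environment variables to /etc/profile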

[root@hadoop101 software]# rpm -ivh oracle-j2sdk1.8-1.8.0+update181-1.x86_64.rpm

[root@hadoop101 software]# vim /etc/profile

export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib

export PATH=$PATH:$JAVA_HOME/bin

[root@hadoop101 software]# source /etc/profile

[root@hadoop101 software]# java -version

java version "1.8.0_181"
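If you add the JDK variables with a shell command instead of vim, `$PATH` and `$JAVA_HOME` must not be expanded while the file is being written. The sketch below appends the three lines once and uses a quoted heredoc delimiter (`'EOF'`) so the variables reach the file literally. `add_java_env` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: append the JDK variables to a profile file exactly once.
# The quoted 'EOF' delimiter stops $PATH, $CLASSPATH and $JAVA_HOME from
# being expanded while writing, so the file receives the literal text.
add_java_env() {
  local profile="${1:-/etc/profile}"
  # Skip if the JAVA_HOME line is already present.
  grep -q '^export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera$' "$profile" && return 0
  cat >> "$profile" <<'EOF'
export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera
export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib
export PATH=$PATH:$JAVA_HOME/bin
EOF
}
```

Usage: `add_java_env /etc/profile && source /etc/profile && java -version`.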

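(3) Distribute the JDK and /etc/profile to the other nodes, then source the profile and check the Java version on each node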

[root@hadoop101 software]# xsync /usr/java/jdk1.8.0_181-cloudera

[root@hadoop101 software]# xsync /etc/profile

[root@hadoop102 module]# source /etc/profile

[root@hadoop102 module]# java -version

[root@hadoop103 module]# source /etc/profile

[root@hadoop103 module]# java -version

[root@hadoop104 module]# source /etc/profile

[root@hadoop104 module]# java -version

[root@hadoop105 module]# source /etc/profile

[root@hadoop105 module]# java -version
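Checking each node by hand can be replaced by a loop run from hadoop101 over the passwordless SSH from step 6. This is a sketch, and `check_java_on_nodes` is a hypothetical name. A non-interactive `ssh host command` session does not read /etc/profile, so the command sources it explicitly.

```shell
#!/usr/bin/env bash
# Sketch: after xsync, check the JDK on every other node in one loop.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

check_java_on_nodes() {
  local host
  for host in hadoop102 hadoop103 hadoop104 hadoop105; do
    echo "== $host =="
    # Non-interactive ssh sessions skip /etc/profile, so source it first.
    run ssh "$host" "source /etc/profile && java -version"
  done
}
```

Usage: `check_java_on_nodes`. `java -version` prints to stderr, and ssh passes that through to the terminal.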

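9. Create the databases in MySQL

A cloud MySQL instance is used, so the MySQL installation steps are omitted here.

Create the required databases and users and grant privileges on the cloud database in advance: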

mysql> CREATE DATABASE scm DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

mysql> CREATE USER scm@'%' IDENTIFIED BY 'scm';

mysql> GRANT ALL PRIVILEGES ON scm.* TO scm@'%';

mysql> FLUSH PRIVILEGES;

mysql> CREATE DATABASE hive DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

mysql> CREATE USER hive@'%' IDENTIFIED BY 'hive';

mysql> GRANT ALL PRIVILEGES ON hive.* TO hive@'%';

mysql> FLUSH PRIVILEGES;

mysql> CREATE DATABASE oozie DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

mysql> CREATE USER oozie@'%' IDENTIFIED BY 'oozie';

mysql> GRANT ALL PRIVILEGES ON oozie.* TO oozie@'%';

mysql> FLUSH PRIVILEGES;

mysql> CREATE DATABASE hue DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

mysql> CREATE USER hue@'%' IDENTIFIED BY 'hue';

mysql> GRANT ALL PRIVILEGES ON hue.* TO hue@'%';

mysql> FLUSH PRIVILEGES;
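The four groups of statements above follow one pattern, so they can be generated. This is a sketch, and `gen_cdh_db_sql` is a hypothetical name. As in the statements above, each password equals its user name.

```shell
#!/usr/bin/env bash
# Sketch: print CREATE DATABASE / CREATE USER / GRANT statements for each
# database name given, followed by a single FLUSH PRIVILEGES.
# As in the manual statements, the password equals the user name.
gen_cdh_db_sql() {
  local db
  for db in "$@"; do
    cat <<EOF
CREATE DATABASE ${db} DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;
CREATE USER '${db}'@'%' IDENTIFIED BY '${db}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${db}'@'%';
EOF
  done
  echo "FLUSH PRIVILEGES;"
}
```

Pipe the output into the mysql client with your own connection details, for example `gen_cdh_db_sql scm hive oozie hue | mysql -h <host> -P <port> -u <admin user> -p`. The placeholders stand for the connection details listed in this step.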

MySQL connection details:

IP: 192.168.10.15

Port: xxxx

Username: xxxx

Password: xxxxx

10. Install and deploy Cloudera Manager (CM)

10.1. Install CM

(1) Rename mysql-connector-java-5.1.47.jar to mysql-connector-java.jar, copy it to /usr/share/java, and distribute it to all nodes

[root@hadoop101 software]# mv mysql-connector-java-5.1.47.jar mysql-connector-java.jar

[root@hadoop101 software]# mkdir /usr/share/java

[root@hadoop101 software]# cp mysql-connector-java.jar /usr/share/java/

[root@hadoop101 software]# xsync /usr/share/java/

Cluster plan

| Node | hadoop101 | hadoop102 | hadoop103 | hadoop104 | hadoop105 |
|---|---|---|---|---|---|
| Services | cloudera-scm-server, cloudera-scm-agent | cloudera-scm-agent | cloudera-scm-agent | cloudera-scm-agent | cloudera-scm-agent |

10.2. Create the cloudera-manager directory to hold the CM installation packages

[root@hadoop101 software]# mkdir /opt/cloudera-manager

[root@hadoop101 software]# tar -zxvf cm6.3.1-redhat7.tar.gz

[root@hadoop101 software]# cd cm6.3.1/RPMS/x86_64/

[root@hadoop101 x86_64]# cp cloudera-manager-agent-6.3.1-1466458.el7.x86_64.rpm /opt/cloudera-manager/

[root@hadoop101 x86_64]# cp cloudera-manager-server-6.3.1-1466458.el7.x86_64.rpm /opt/cloudera-manager/

[root@hadoop101 x86_64]# cp cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm /opt/cloudera-manager/

[root@hadoop101 x86_64]# cd /opt/cloudera-manager/

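10.3. Install cloudera-manager-daemons. After installation, a new /opt/cloudera directory appears. Then copy /opt/cloudera-manager to the other nodes and install the daemons package on each of them.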

[root@hadoop101 cloudera-manager]# rpm -ivh cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm

[root@hadoop101 cloudera-manager]# cd /opt

[root@hadoop101 opt]# ls

cloudera/ cloudera-manager/ software/

[root@hadoop101 cloudera-manager]# cd /opt/cloudera-manager

[root@hadoop101 opt]# scp -r /opt/cloudera-manager/ hadoop102:/opt/

[root@hadoop101 opt]# scp -r /opt/cloudera-manager/ hadoop103:/opt/

[root@hadoop101 opt]# scp -r /opt/cloudera-manager/ hadoop104:/opt/

[root@hadoop101 opt]# scp -r /opt/cloudera-manager/ hadoop105:/opt/

[root@hadoop102 ~]# cd /opt/cloudera-manager/

[root@hadoop102 cloudera-manager]# rpm -ivh cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm

[root@hadoop103 ~]# cd /opt/cloudera-manager/

[root@hadoop103 cloudera-manager]# rpm -ivh cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm

[root@hadoop104 ~]# cd /opt/cloudera-manager/

[root@hadoop104 cloudera-manager]# rpm -ivh cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm

[root@hadoop105 ~]# cd /opt/cloudera-manager/

[root@hadoop105 cloudera-manager]# rpm -ivh cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm

10.4. Install cloudera-manager-agent (all nodes): install its dependencies first, then the agent RPM

[root@hadoop101 cloudera-manager]# yum install bind-utils psmisc cyrus-sasl-plain cyrus-sasl-gssapi fuse portmap fuse-libs /lib/lsb/init-functions httpd mod_ssl openssl-devel python-psycopg2 MySQL-python libxslt

[root@hadoop101 cloudera-manager]# rpm -ivh cloudera-manager-agent-6.3.1-1466458.el7.x86_64.rpm

[root@hadoop102 cloudera-manager]# yum install bind-utils psmisc cyrus-sasl-plain cyrus-sasl-gssapi fuse portmap fuse-libs /lib/lsb/init-functions httpd mod_ssl openssl-devel python-psycopg2 MySQL-python libxslt

[root@hadoop102 cloudera-manager]# rpm -ivh cloudera-manager-agent-6.3.1-1466458.el7.x86_64.rpm

[root@hadoop103 cloudera-manager]# yum install bind-utils psmisc cyrus-sasl-plain cyrus-sasl-gssapi fuse portmap fuse-libs /lib/lsb/init-functions httpd mod_ssl openssl-devel python-psycopg2 MySQL-python libxslt

[root@hadoop103 cloudera-manager]# rpm -ivh cloudera-manager-agent-6.3.1-1466458.el7.x86_64.rpm

[root@hadoop104 cloudera-manager]# yum install bind-utils psmisc cyrus-sasl-plain cyrus-sasl-gssapi fuse portmap fuse-libs /lib/lsb/init-functions httpd mod_ssl openssl-devel python-psycopg2 MySQL-python libxslt

[root@hadoop104 cloudera-manager]# rpm -ivh cloudera-manager-agent-6.3.1-1466458.el7.x86_64.rpm

[root@hadoop105 cloudera-manager]# yum install bind-utils psmisc cyrus-sasl-plain cyrus-sasl-gssapi fuse portmap fuse-libs /lib/lsb/init-functions httpd mod_ssl openssl-devel python-psycopg2 MySQL-python libxslt

[root@hadoop105 cloudera-manager]# rpm -ivh cloudera-manager-agent-6.3.1-1466458.el7.x86_64.rpm
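Steps 10.3 and 10.4 repeat the same commands on hadoop102 to hadoop105, so they can be driven from hadoop101 over passwordless SSH instead. This sketch assumes that /opt/cloudera-manager has already been copied to each node (the scp commands in 10.3) and that the daemons package is not yet installed there. `install_agents` is a hypothetical name. `yum install -y` is used so yum does not stop to ask for confirmation.

```shell
#!/usr/bin/env bash
# Sketch: install the daemons and agent RPMs on hadoop102-105 from hadoop101.
# Assumes /opt/cloudera-manager was already copied to each node.
# DRY_RUN=1 prints the commands only.
RPM_DIR=/opt/cloudera-manager
DEPS="bind-utils psmisc cyrus-sasl-plain cyrus-sasl-gssapi fuse portmap fuse-libs /lib/lsb/init-functions httpd mod_ssl openssl-devel python-psycopg2 MySQL-python libxslt"

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

install_agents() {
  local host
  for host in hadoop102 hadoop103 hadoop104 hadoop105; do
    # -y answers yum's confirmation prompt so the loop does not stall.
    run ssh "$host" "yum install -y $DEPS"
    # Installing both RPMs in one transaction satisfies the agent's dependency on daemons.
    run ssh "$host" "rpm -ivh $RPM_DIR/cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm $RPM_DIR/cloudera-manager-agent-6.3.1-1466458.el7.x86_64.rpm"
  done
}
```

`DRY_RUN=1 install_agents` prints the eight commands. Run `install_agents` to execute them.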

10.5. Point every agent at the server node by setting server_host in config.ini (all nodes)

[root@hadoop101 cloudera-manager]# vim /etc/cloudera-scm-agent/config.ini

server_host=hadoop101

[root@hadoop102 cloudera-manager]# vim /etc/cloudera-scm-agent/config.ini

server_host=hadoop101

[root@hadoop103 cloudera-manager]# vim /etc/cloudera-scm-agent/config.ini

server_host=hadoop101

[root@hadoop104 cloudera-manager]# vim /etc/cloudera-scm-agent/config.ini

server_host=hadoop101

[root@hadoop105 cloudera-manager]# vim /etc/cloudera-scm-agent/config.ini

server_host=hadoop101
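You do not have to open config.ini in vim on every node: the `server_host=` line can be rewritten with sed. This is a sketch, and `set_server_host` is a hypothetical name. It assumes config.ini already contains an uncommented `server_host=` line and does not add one.

```shell
#!/usr/bin/env bash
# Sketch: point a CM agent at the server by rewriting server_host in its
# config.ini. Only an existing, uncommented "server_host=" line is changed.
set_server_host() {
  local cfg="$1" server="$2"
  sed -i "s/^server_host=.*/server_host=${server}/" "$cfg"
}
```

To run it locally: `set_server_host /etc/cloudera-scm-agent/config.ini hadoop101`. For the other nodes, over SSH: `for h in hadoop102 hadoop103 hadoop104 hadoop105; do ssh "$h" "sed -i 's/^server_host=.*/server_host=hadoop101/' /etc/cloudera-scm-agent/config.ini"; done`.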

10.6. Install cloudera-manager-server

Install it on the master node hadoop101 only

[root@hadoop101 cloudera-manager]# rpm -ivh cloudera-manager-server-6.3.1-1466458.el7.x86_64.rpm

10.7. Upload the CDH parcel files to /opt/cloudera/parcel-repo

[root@hadoop101 parcel-repo]# ll

total 2033432

-rw-r--r-- 1 root root 2082186246 May 21 11:10 CDH-6.3.2-1.cdh6.3.2.p0.1605554-el7.parcel

-rw-r--r-- 1 root root 40 May 21 10:56 CDH-6.3.2-1.cdh6.3.2.p0.1605554-el7.parcel.sha1

-rw-r--r-- 1 root root 33887 May 21 10:56 manifest.json

Rename the checksum file from .sha1 to .sha so that Cloudera Manager recognizes it:

[root@hadoop101 parcel-repo]# mv CDH-6.3.2-1.cdh6.3.2.p0.1605554-el7.parcel.sha1 CDH-6.3.2-1.cdh6.3.2.p0.1605554-el7.parcel.sha
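Before starting the server, it is worth confirming that the roughly 2 GB parcel uploaded intact. The .sha file is only 40 bytes, a bare SHA-1 hex digest, so it can be compared directly with `sha1sum` output. This is a sketch, and `verify_parcel` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: compare a parcel's SHA-1 with the digest stored in <parcel>.sha.
# The .sha file holds only the 40-character hex digest.
verify_parcel() {
  local parcel="$1" expected actual
  expected=$(tr -d '[:space:]' < "${parcel}.sha")
  actual=$(sha1sum "$parcel" | awk '{print $1}')
  if [ "$expected" = "$actual" ]; then
    echo "OK: $parcel"
  else
    echo "MISMATCH: $parcel (expected $expected, got $actual)" >&2
    return 1
  fi
}
```

Usage: `cd /opt/cloudera/parcel-repo && verify_parcel CDH-6.3.2-1.cdh6.3.2.p0.1605554-el7.parcel`.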

10.8. Edit the server's db.properties

[root@hadoop101 parcel-repo]# vim /etc/cloudera-scm-server/db.properties

# Fill in the MySQL host:port, database name, username and password

com.cloudera.cmf.db.type=mysql

com.cloudera.cmf.db.host=192.168.10.15:10041

com.cloudera.cmf.db.name=scm

com.cloudera.cmf.db.user=scm

com.cloudera.cmf.db.password=scm

com.cloudera.cmf.db.setupType=EXTERNAL

10.9. Initialize the SCM database and start the server

[root@hadoop101 software]# cd /opt/cloudera/cm/schema/

[root@hadoop101 schema]# ./scm_prepare_database.sh mysql scm scm scm -h 192.168.10.15:10041

[root@hadoop101 software]# systemctl start cloudera-scm-server

After starting cloudera-scm-server, wait about 2 minutes,

then check the log /var/log/cloudera-scm-server/cloudera-scm-server.log:

2022-07-04 10:23:31,767 INFO SearchRepositoryManager-0:com.cloudera.server.web.cmf.search.components.SearchRepositoryManager: Constructing repo:2022-07-04T02:23:31.767Z

2022-07-04 10:23:32,118 INFO SearchRepositoryManager-0:com.cloudera.server.web.cmf.search.components.SearchRepositoryManager: Finished constructing repo:2022-07-04T02:23:32.118Z

2022-07-04 10:23:32,480 INFO WebServerImpl:org.eclipse.jetty.server.Server: jetty-9.4.14.v20181114; built: 2018-11-14T21:20:31.478Z; git: c4550056e785fb5665914545889f21dc136ad9e6; jvm 1.8.0_181-b13

2022-07-04 10:23:32,495 INFO WebServerImpl:org.eclipse.jetty.server.AbstractConnector: Started ServerConnector@60d904c5{HTTP/1.1,[http/1.1]}{0.0.0.0:7180}

2022-07-04 10:23:32,495 INFO WebServerImpl:org.eclipse.jetty.server.Server: Started @597572ms

2022-07-04 10:23:32,495 INFO WebServerImpl:com.cloudera.server.cmf.WebServerImpl: Started Jetty server.

When "Started Jetty server." appears, the server has started successfully; continue with the next step.
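The fixed two-minute wait can be replaced by polling the log for the same message. This is a sketch, and `wait_for_cm_server` is a hypothetical name. The 300-second default timeout and the 5-second interval are arbitrary choices. A match left over from an earlier start in the same log file would also count.

```shell
#!/usr/bin/env bash
# Sketch: poll the CM server log until "Started Jetty server." appears.
# Returns non-zero once TIMEOUT seconds have passed without a match.
wait_for_cm_server() {
  local log="${1:-/var/log/cloudera-scm-server/cloudera-scm-server.log}"
  local timeout="${2:-300}" waited=0
  until grep -q 'Started Jetty server\.' "$log" 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s waiting for $log" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
  echo "Cloudera Manager server is up"
}
```

Usage: `systemctl start cloudera-scm-server && wait_for_cm_server`.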

10.10. Start the agents (all nodes)

[root@hadoop101 software]# systemctl start cloudera-scm-agent

[root@hadoop102 software]# systemctl start cloudera-scm-agent

[root@hadoop103 software]# systemctl start cloudera-scm-agent

[root@hadoop104 software]# systemctl start cloudera-scm-agent

[root@hadoop105 software]# systemctl start cloudera-scm-agent
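As with the other per-node steps, the agents can be started from hadoop101 in one loop. This is a sketch, and `start_agents` is a hypothetical name. It also runs `systemctl enable`, which the original steps do not, so the agents come back after a reboot. Remove that part if it is not wanted.

```shell
#!/usr/bin/env bash
# Sketch: start the CM agent on all five nodes from hadoop101, enable it at
# boot (an addition to the original steps), and print its state.
# DRY_RUN=1 prints the commands only.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

start_agents() {
  local host
  for host in hadoop101 hadoop102 hadoop103 hadoop104 hadoop105; do
    run ssh "$host" "systemctl start cloudera-scm-agent && systemctl enable cloudera-scm-agent && systemctl is-active cloudera-scm-agent"
  done
}
```

Each host should print `active` as the last line of its output. `DRY_RUN=1 start_agents` only prints the commands.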

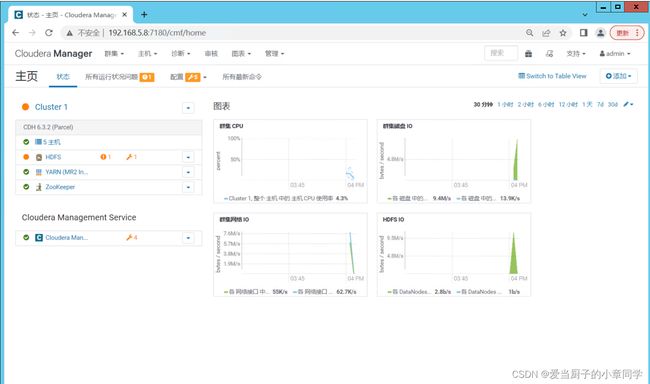

11. Deploy the cluster with CM

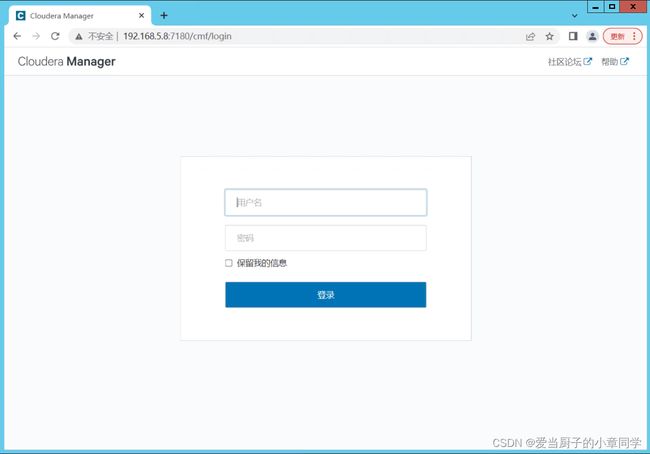

(1) In a browser, open http://192.168.5.8:7180/cmf/login and log in with username admin and password admin.

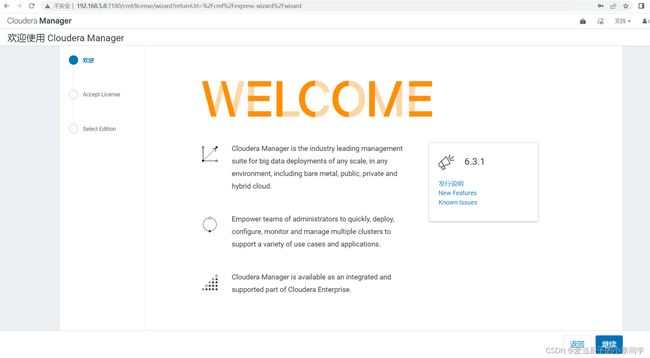

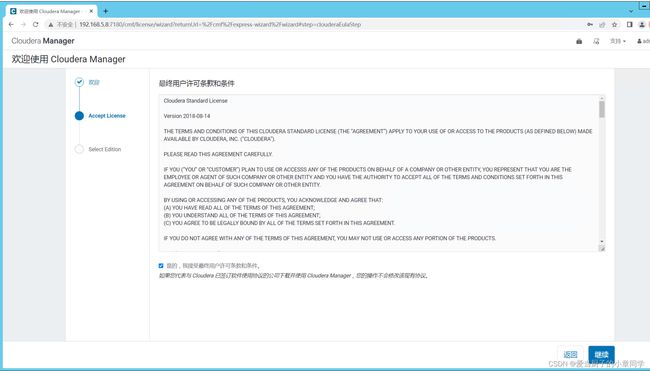

(2) Accept the terms and click Continue.

(3) Check the box to accept the license terms, then click Continue.

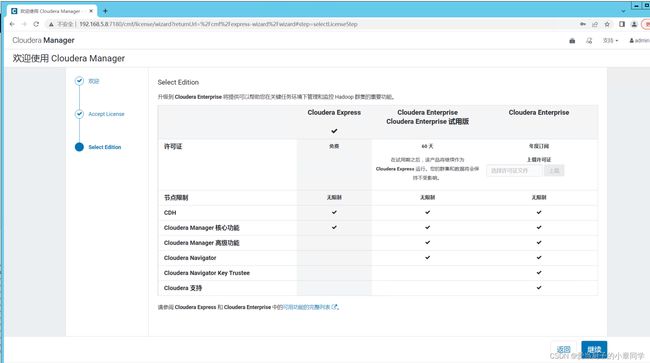

(4) Select the free edition and click Continue.

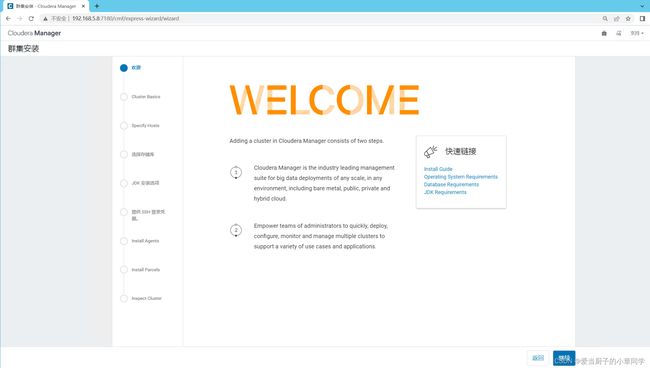

(5) Cluster installation: click Continue.

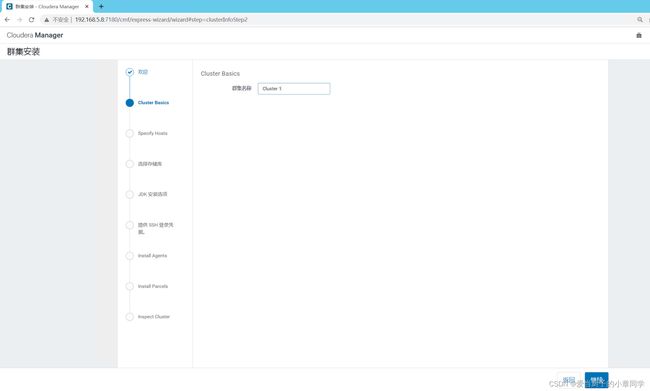

(6) Keep the default cluster name and click Continue.

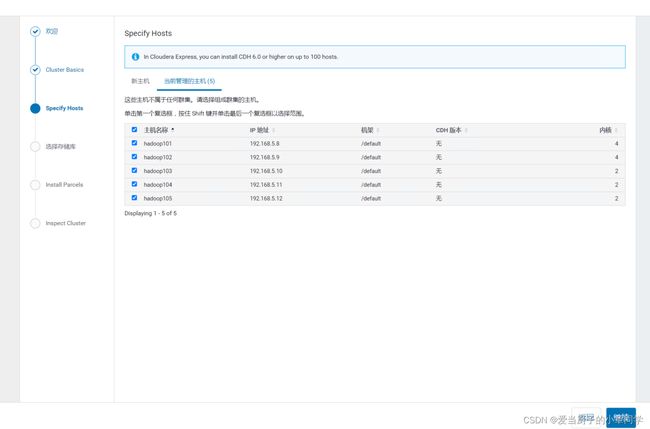

(7) Cluster installation, Specify Hosts: click Currently Managed Hosts.

Select all hosts and click Continue.

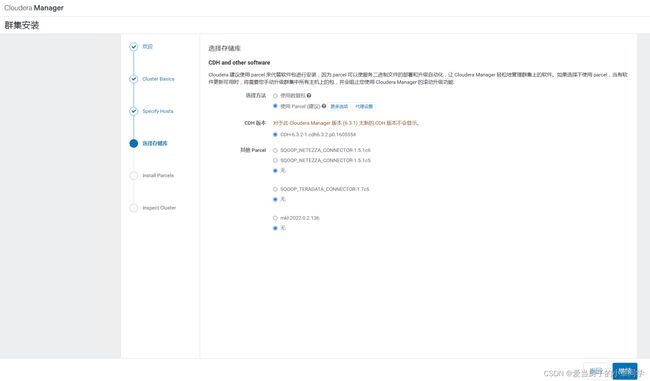

(8) Cluster installation, Select Repository: keep the defaults and click Continue.

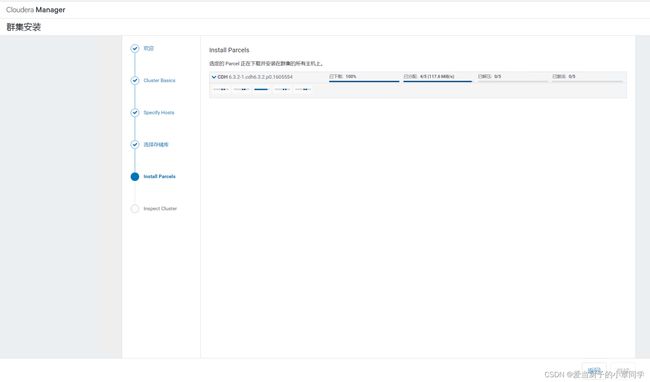

(9) Cluster installation: the parcel is distributed to every host automatically. When distribution finishes, click Continue.

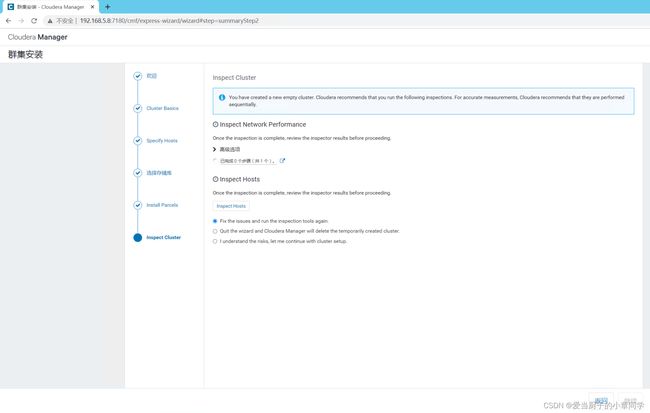

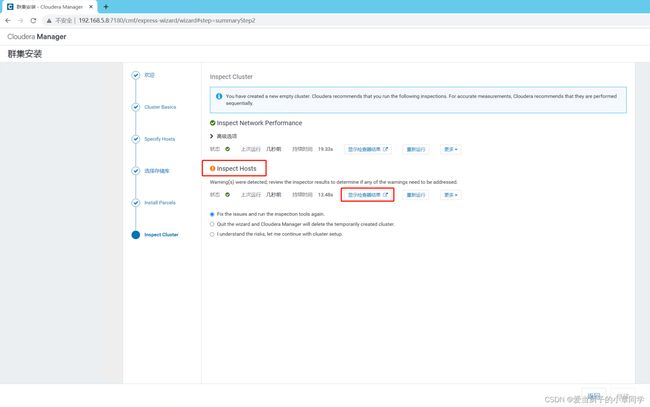

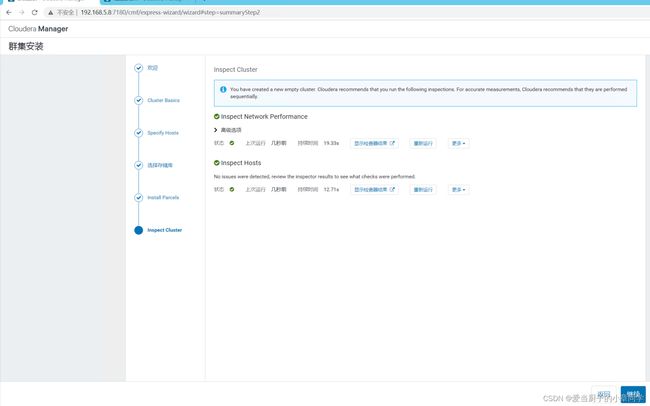

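(10) Cluster installation, Inspect Cluster: click the two buttons Inspect Network Performance and Inspect Hosts.

If a yellow exclamation mark appears, click Show Inspector Results to see the cause.

Here the inspector reports that transparent huge page compaction must be disabled. Click the details to see the commands, which must be run on all machines: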

[root@hadoop101 schema]# echo never > /sys/kernel/mm/transparent_hugepage/defrag

[root@hadoop101 schema]# echo never > /sys/kernel/mm/transparent_hugepage/enabled

[root@hadoop102 schema]# echo never > /sys/kernel/mm/transparent_hugepage/defrag

[root@hadoop102 schema]# echo never > /sys/kernel/mm/transparent_hugepage/enabled

[root@hadoop103 schema]# echo never > /sys/kernel/mm/transparent_hugepage/defrag

[root@hadoop103 schema]# echo never > /sys/kernel/mm/transparent_hugepage/enabled

[root@hadoop104 schema]# echo never > /sys/kernel/mm/transparent_hugepage/defrag

[root@hadoop104 schema]# echo never > /sys/kernel/mm/transparent_hugepage/enabled

[root@hadoop105 schema]# echo never > /sys/kernel/mm/transparent_hugepage/defrag

[root@hadoop105 schema]# echo never > /sys/kernel/mm/transparent_hugepage/enabled

Then go back to the inspection page and click Run Again. When no problems remain, click Continue.
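The `echo never` commands take effect immediately but are lost on reboot. A common way to keep them on CentOS 7 is to append them to /etc/rc.d/rc.local, which only runs at boot if it is executable. This is a sketch, `persist_thp_off` is a hypothetical name, and rc.local is one option among several.

```shell
#!/usr/bin/env bash
# Sketch: append the two THP commands to rc.local once, so they are
# re-applied at boot, and make rc.local executable (CentOS 7 only runs
# /etc/rc.d/rc.local at boot when it has the execute bit).
persist_thp_off() {
  local rc="${1:-/etc/rc.d/rc.local}" line
  for line in \
    'echo never > /sys/kernel/mm/transparent_hugepage/defrag' \
    'echo never > /sys/kernel/mm/transparent_hugepage/enabled'; do
    # -x matches the whole line, -F treats it as a fixed string.
    grep -qxF "$line" "$rc" 2>/dev/null || echo "$line" >> "$rc"
  done
  chmod +x "$rc"
}
```

Run `persist_thp_off` on every node after the echo commands above.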

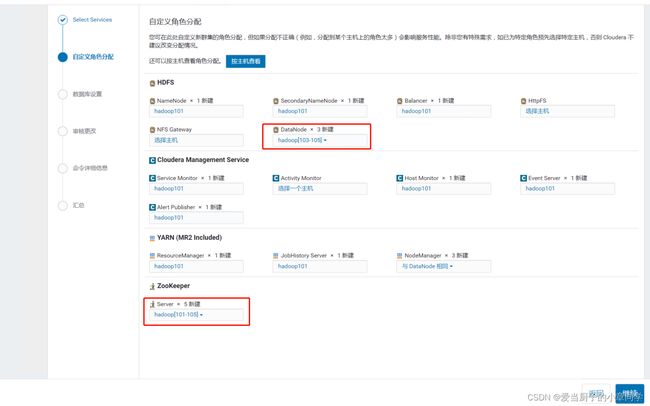

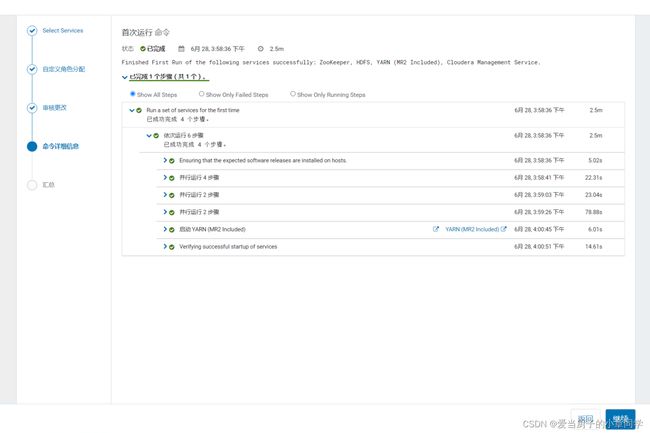

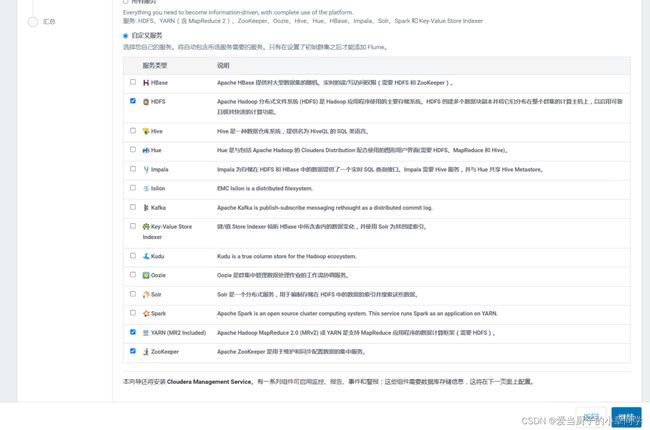

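12. Install HDFS, YARN and ZooKeeper

Cluster Setup: click Custom Services and select HDFS, YARN and ZooKeeper.

Assign DataNode to hadoop103, hadoop104 and hadoop105, assign ZooKeeper to all five hosts (hadoop101 to hadoop105), and keep the other defaults.

Click Continue.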

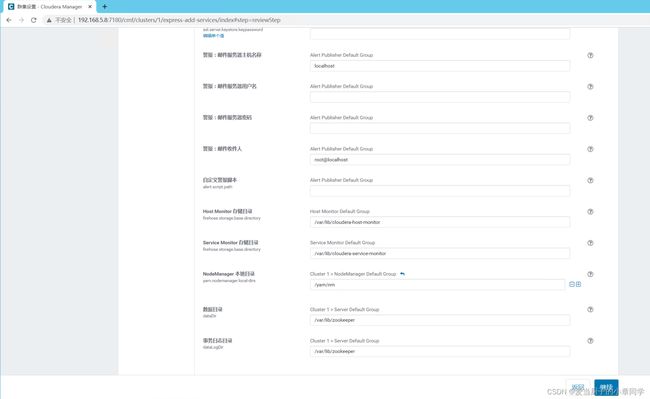

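Review Changes: keep the defaults, scroll down and click Continue.

Wait while the services are installed and started. If an error appears, open it to read the error message; if everything succeeds, click Continue.

Click Finish.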

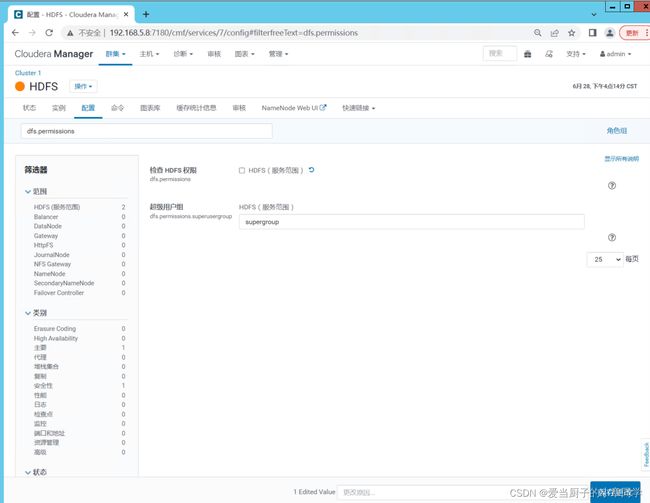

12.2. Change the HDFS permission-check setting

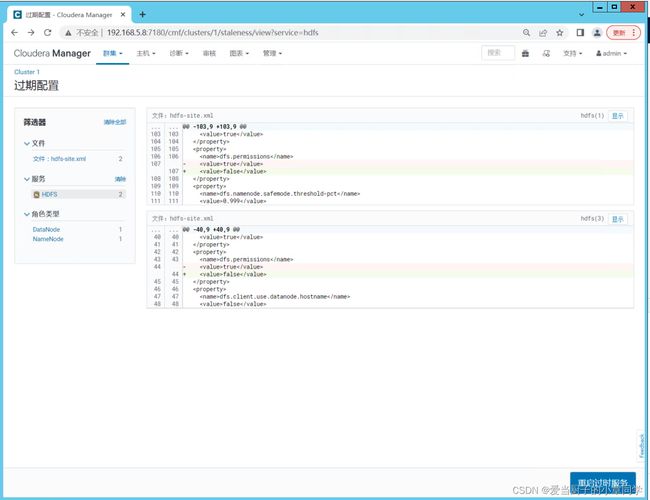

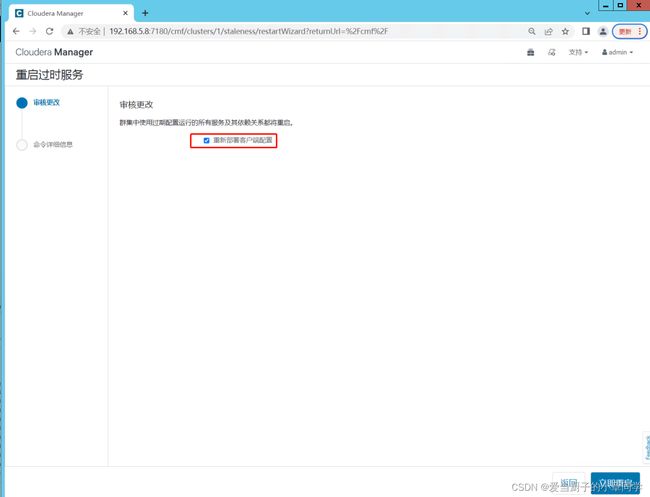

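Disable HDFS permission checking (dfs.permissions), save the change, then restart the HDFS service.

Click the HDFS service to open its management page, click Configuration, and search for dfs.permissions.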

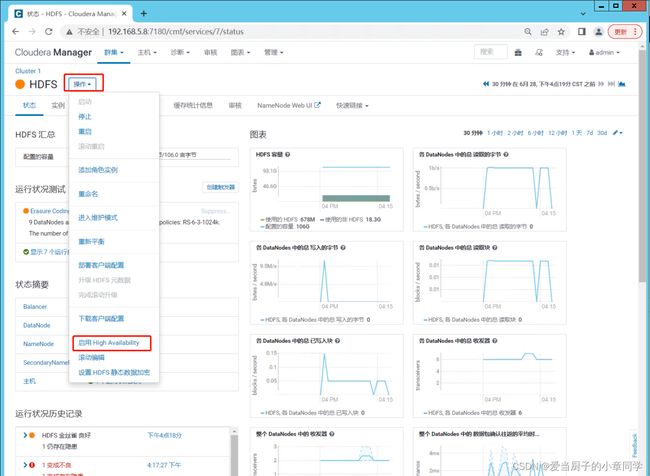

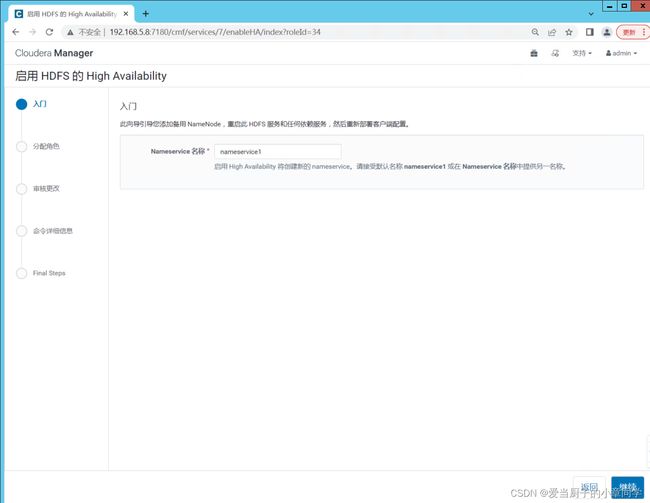

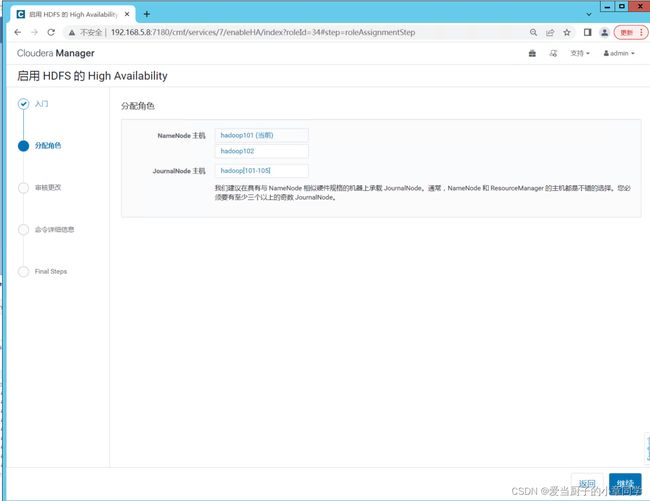

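12.3. Configure HDFS high availability

Click the HDFS service to open its management page.

Create the nameservice:

keep the default name, click Continue, select the hosts, then click Continue.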

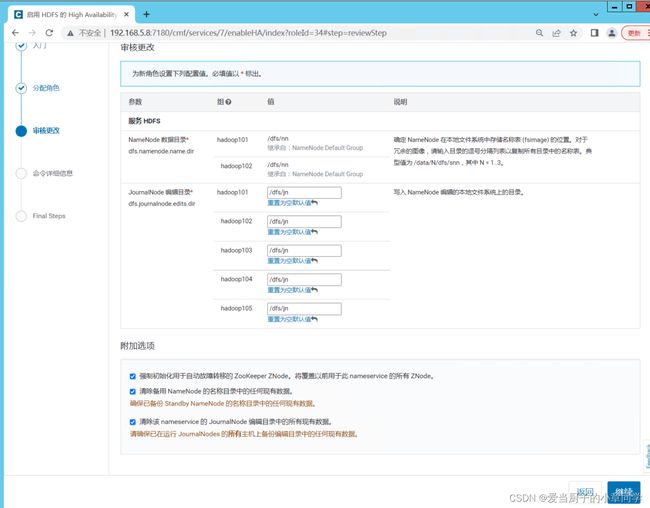

Configure the JournalNode edits directory.

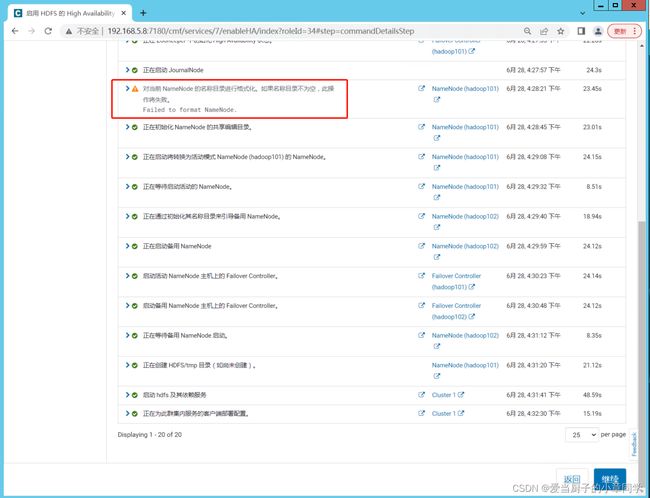

A failure at this step is expected; click Continue, then Finish.

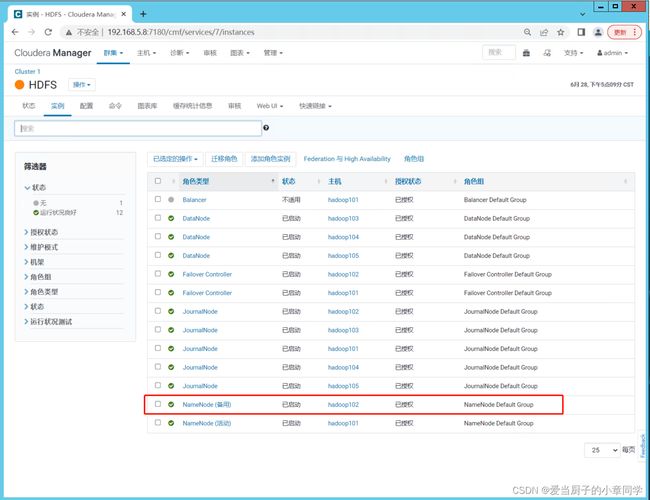

Open the HDFS instances list; the standby NameNode is on hadoop102.

12.4. Configure YARN high availability

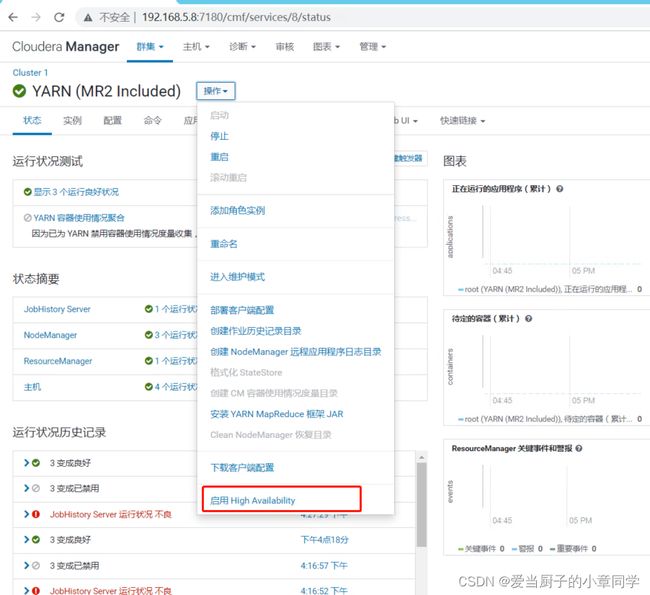

As with HDFS, open the YARN service management page.

Choose Enable High Availability and select hadoop102 as the host.

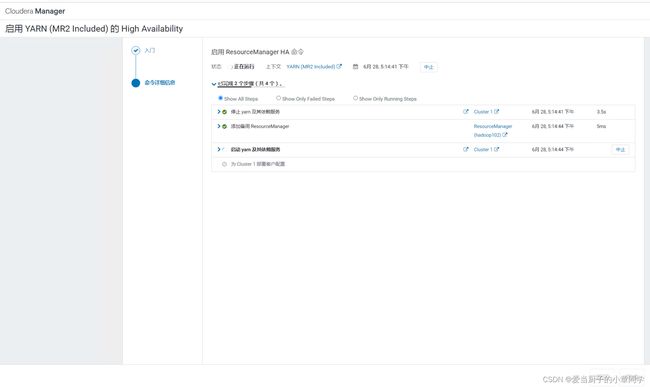


Wait for the commands to finish, then click Finish.

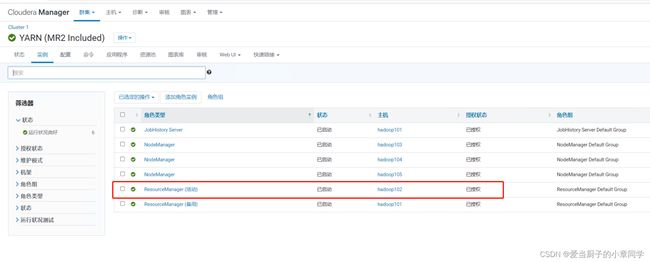

Click Instances to see the YARN instances, including the high-availability (standby) node.

13. Install Hive

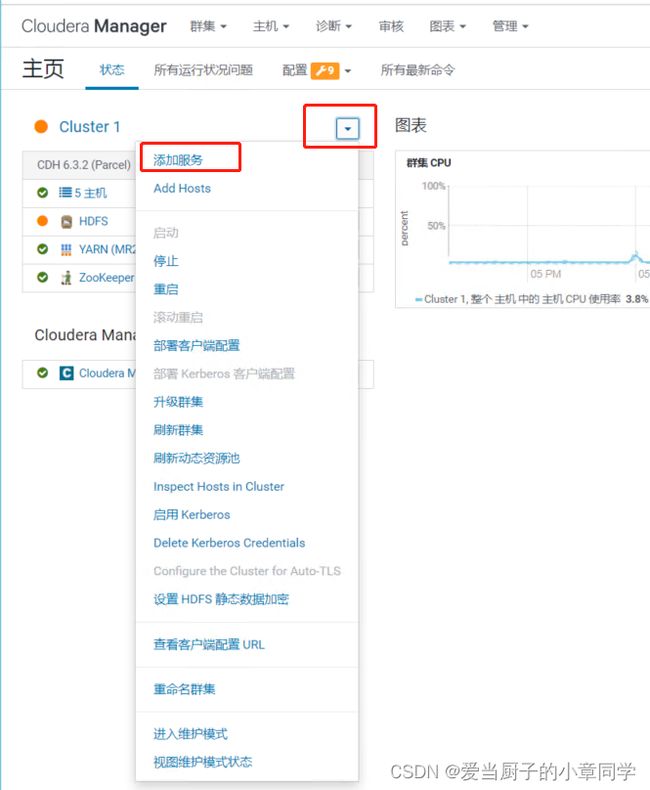

1. Add the Hive service

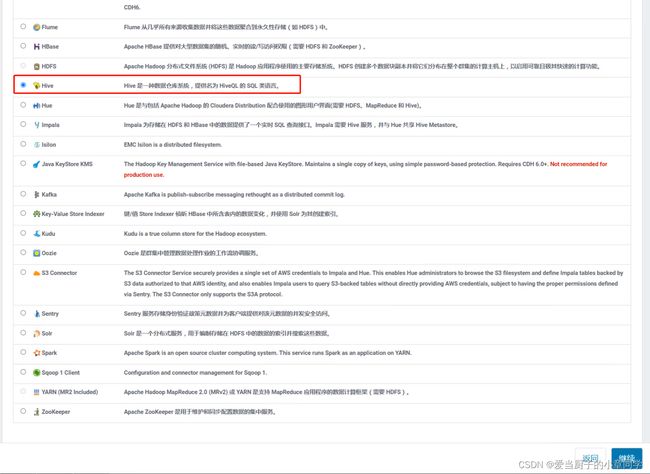

Select Hive and click Continue.

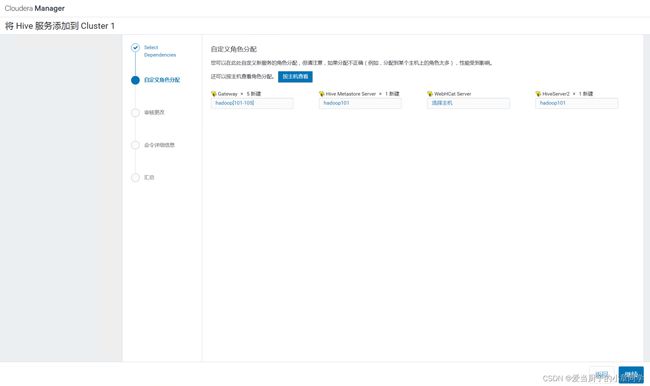

Keep the default host assignments and click Continue.

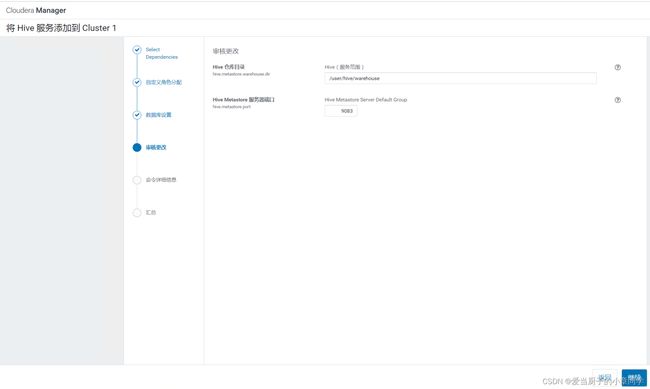

Enter the database connection details, click Test Connection, and if it succeeds click Continue.

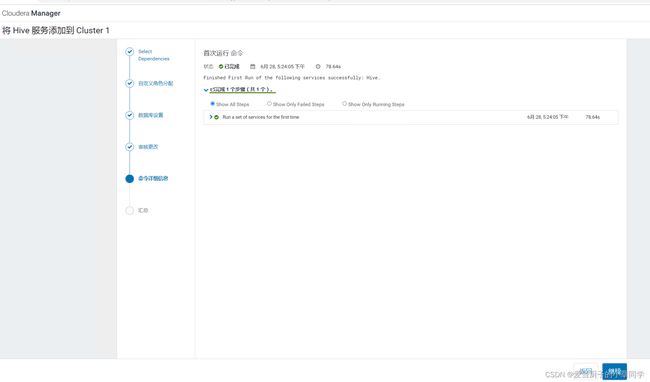

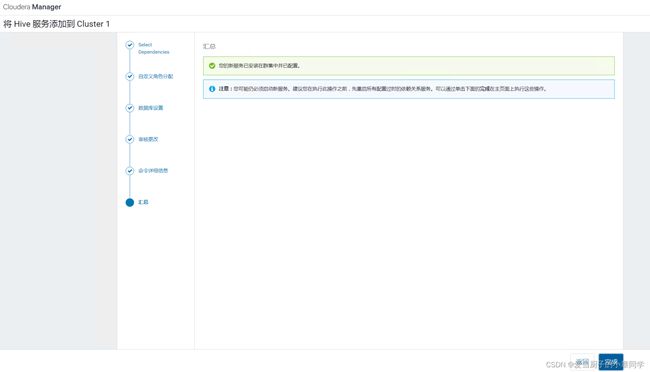

Wait for the installation to finish, click Continue, then Finish. Hive is now installed.

Log in to hadoop101 as root and open up permissions on the HDFS directories:

[root@hadoop101 ~]# su hdfs

[hdfs@hadoop101 root]$ hadoop fs -chmod -R 777 /

[hdfs@hadoop101 root]$

After Hive is installed, change the character set of the Hive metastore tables (in the hive database created in step 9) so that Chinese comments are stored correctly:

USE hive;

# Column comments

ALTER TABLE `hive`.`COLUMNS_V2` CHANGE `COMMENT` `COMMENT` VARCHAR(256) CHARACTER SET utf8;

# Table comments

ALTER TABLE TABLE_PARAMS MODIFY COLUMN PARAM_VALUE MEDIUMTEXT CHARACTER SET utf8;

# Partition parameters and partition key comments (allow Chinese in partition keys)

ALTER TABLE PARTITION_PARAMS MODIFY COLUMN PARAM_VALUE VARCHAR(4000) CHARACTER SET utf8;

ALTER TABLE PARTITION_KEYS MODIFY COLUMN PKEY_COMMENT VARCHAR(4000) CHARACTER SET utf8;

# Index parameters (allow Chinese comments)

ALTER TABLE INDEX_PARAMS MODIFY COLUMN PARAM_VALUE VARCHAR(4000) CHARACTER SET utf8;

# View text (allow Chinese in views)

ALTER TABLE TBLS MODIFY COLUMN VIEW_EXPANDED_TEXT MEDIUMTEXT CHARACTER SET utf8;

ALTER TABLE TBLS MODIFY COLUMN VIEW_ORIGINAL_TEXT MEDIUMTEXT CHARACTER SET utf8;

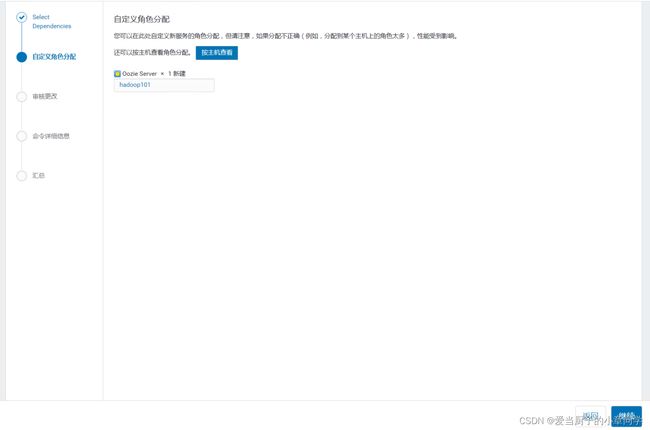

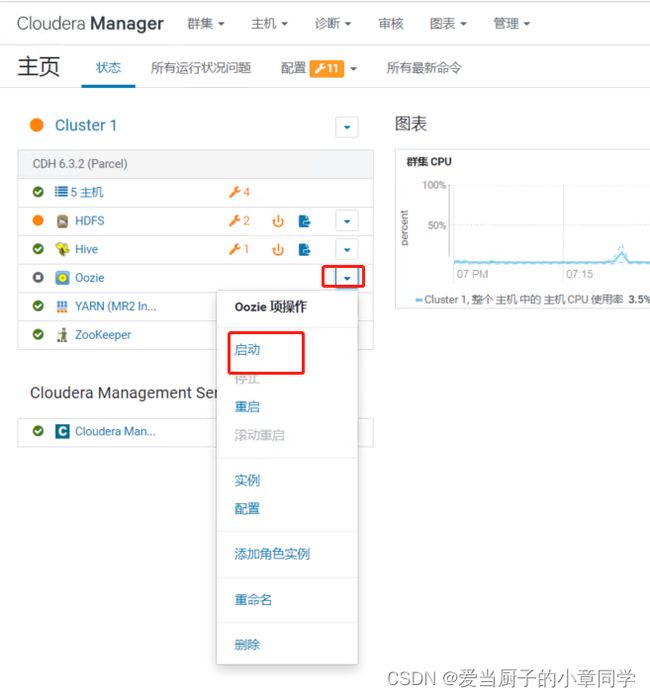

14. Oozie service

Add the Oozie service

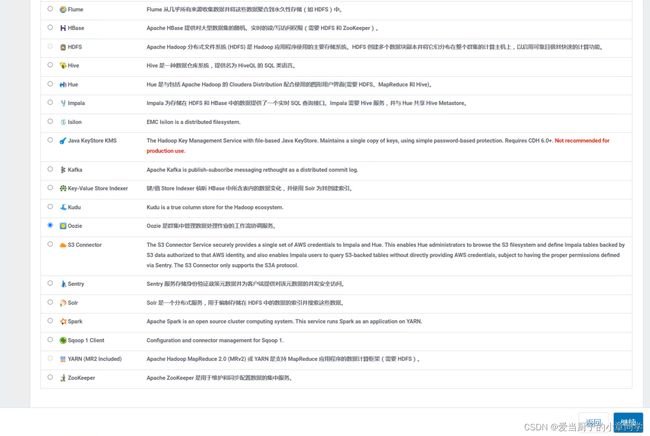

Select Oozie and click Continue.

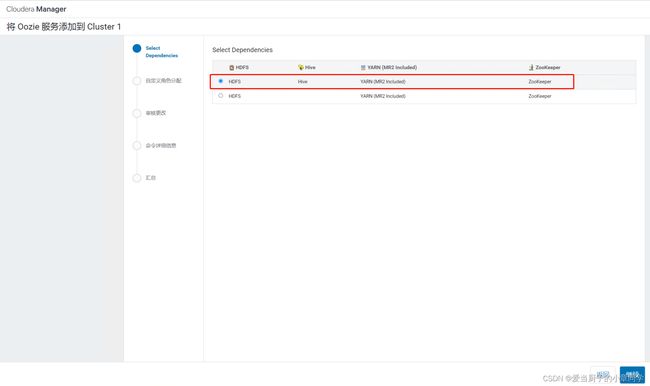

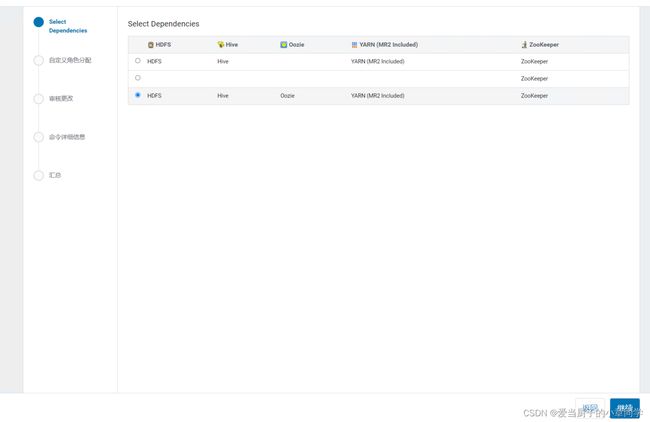

Select the dependency row with the most services and click Continue.

Keep the defaults and click Continue.

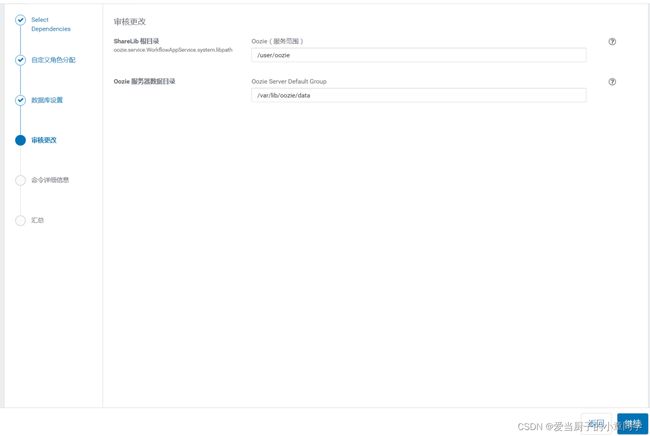

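Enter the Oozie database connection details and click Test Connection. Once the test succeeds, click Continue.

Keep the default configuration and click Continue.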

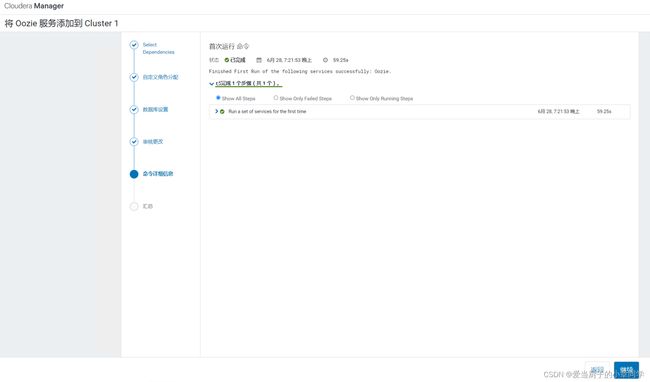

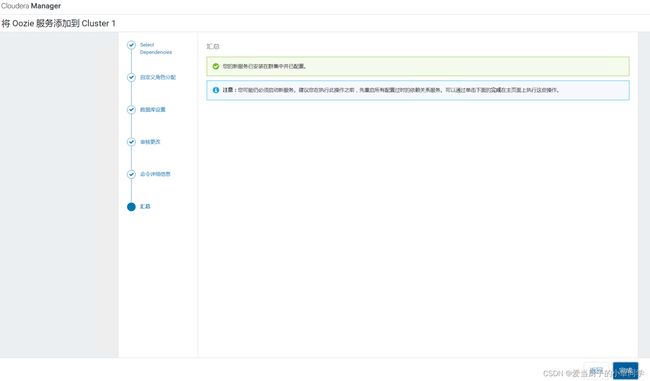

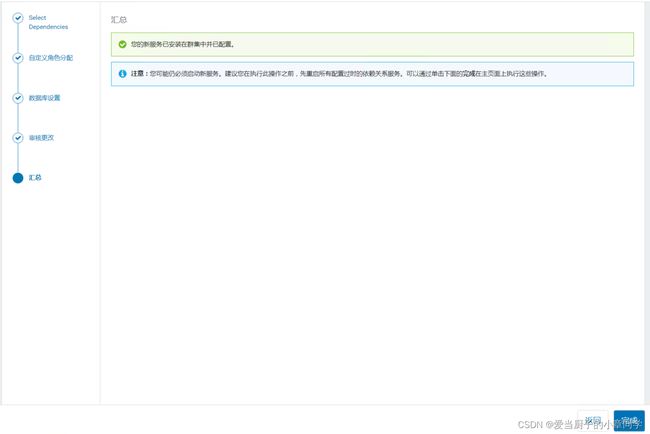

When the commands finish, click Continue, then Finish.

Go back to the home page and start Oozie.

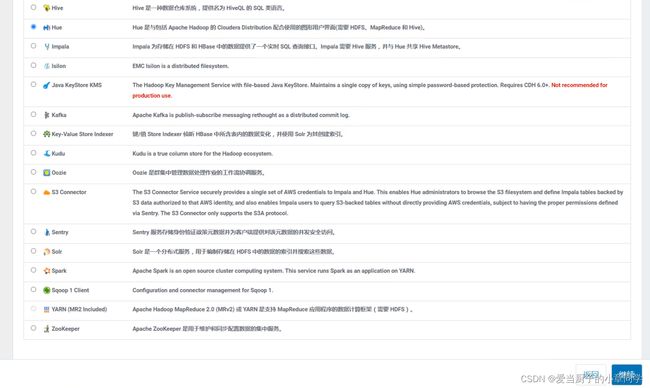

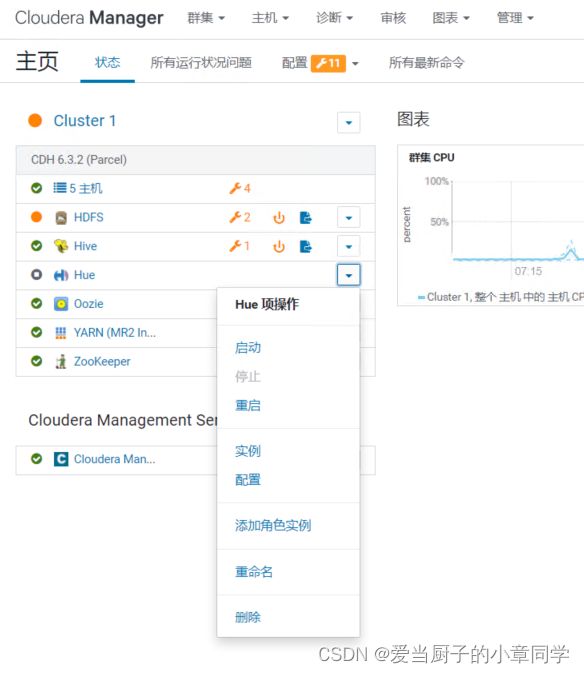

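15. Install Hue

Add the Hue service.

Click Continue, select the dependency row with the most services, and click Continue.

Keep the defaults and click Continue.

Enter the Hue database connection details and click Test Connection. If it succeeds, click Continue.

Click Finish.

Start Hue.

Hue starts successfully.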

16. CDH cluster settings

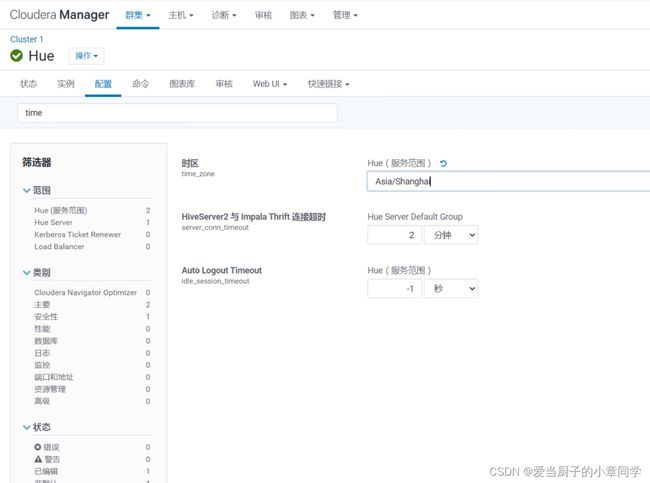

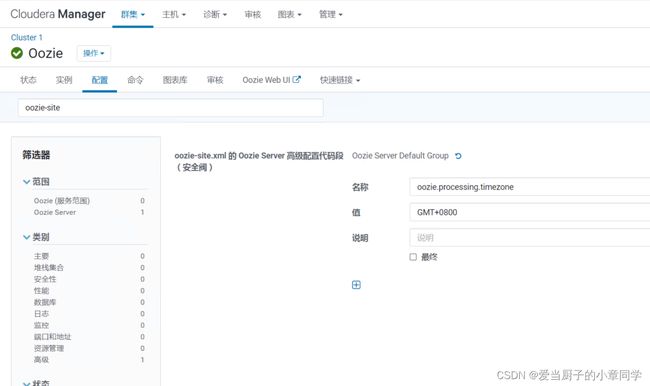

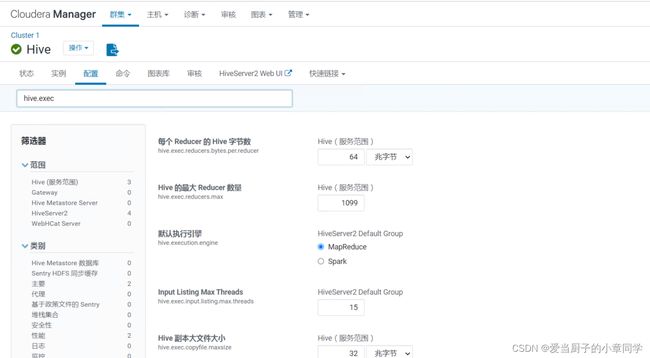

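1. Change the Hue and Oozie time zones

Hue: change the time zone from the default Los_Angeles to Asia/Shanghai and save.

Oozie: add oozie.processing.timezone with the value GMT+0800 and save.

Set the Hive execution engine to MapReduce and save.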

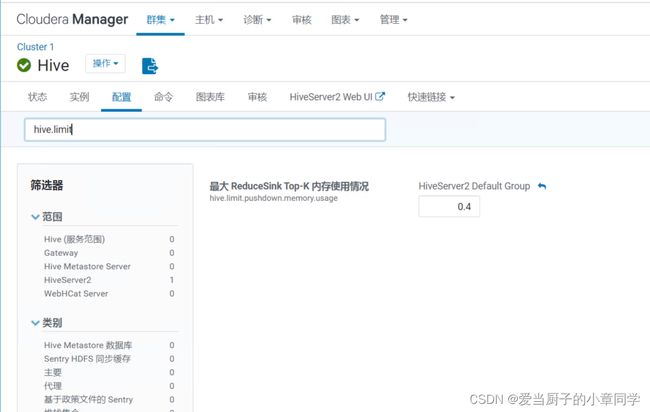

Maximum ReduceSink Top-K memory usage (hive.limit.pushdown.memory.usage): set to 0.4 and save.

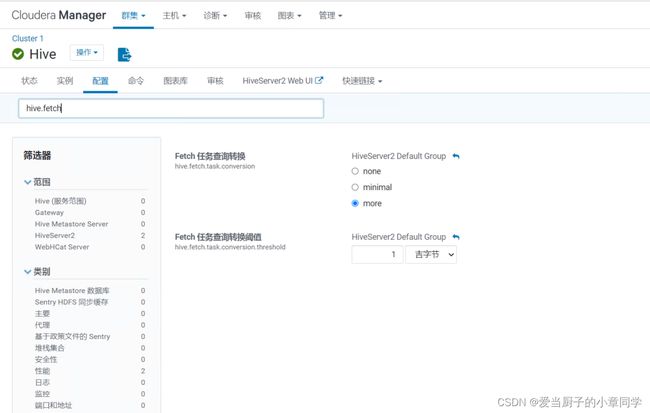

Fetch task query conversion: set hive.fetch.task.conversion to more and hive.fetch.task.conversion.threshold to 1 GB, then save.

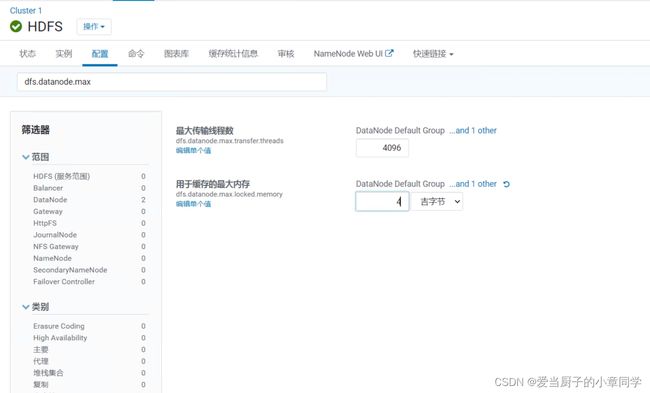

DataNode caching: set the maximum memory used for caching (dfs.datanode.max.locked.memory) to 4 GB and save.

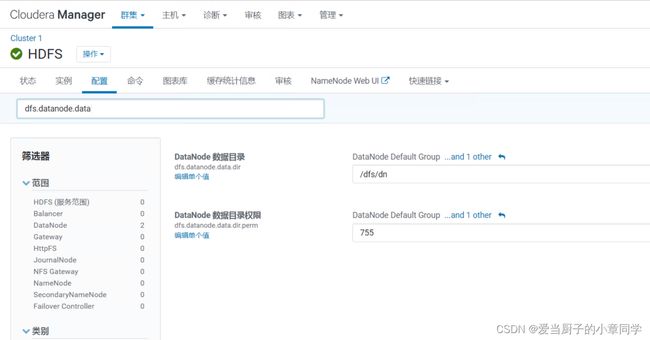

DataNode data directory permissions (dfs.datanode.data.dir.perm): set to 755 and save.

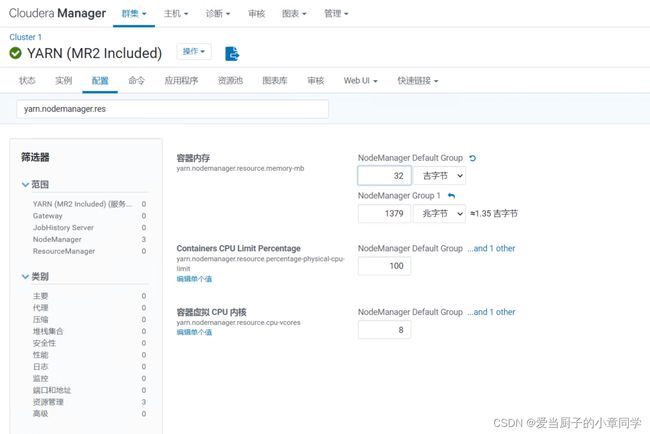

Container memory (yarn.nodemanager.resource.memory-mb): set to 32 GB and save.

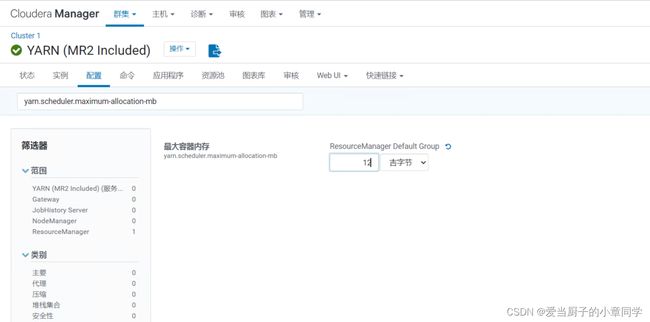

Maximum container memory (yarn.scheduler.maximum-allocation-mb): set to 12 GB and save.

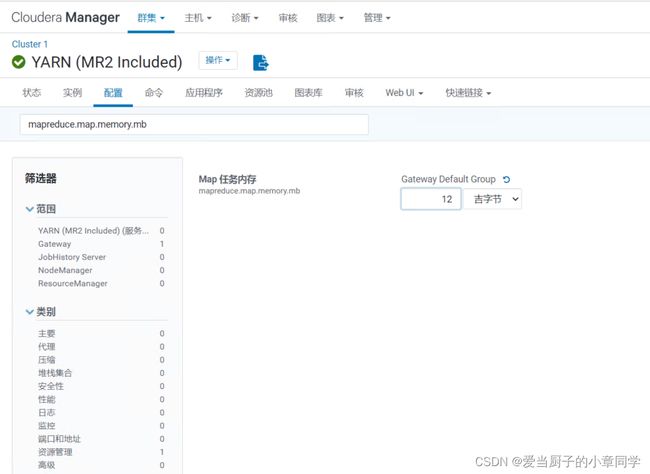

Map task memory (mapreduce.map.memory.mb): set to 12 GB and save.

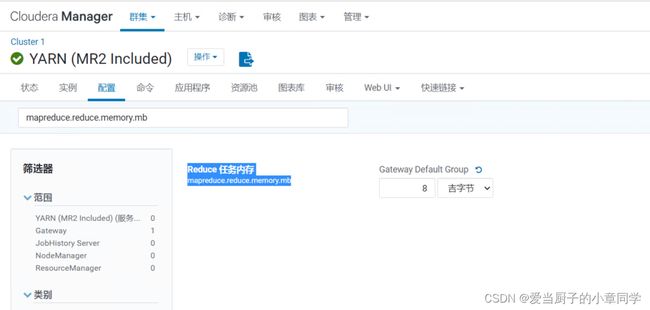

Reduce task memory (mapreduce.reduce.memory.mb): set to 8 GB and save.
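The YARN and MapReduce values above constrain each other. A map or reduce request larger than yarn.scheduler.maximum-allocation-mb is rejected, and the maximum allocation should not exceed what one NodeManager offers (yarn.nodemanager.resource.memory-mb). The sketch below checks these relations and shows how many containers of each size fit on one NodeManager. `check_yarn_memory` is a hypothetical name, and all values are in MB. The check ignores vcores and the rounding of requests up to yarn.scheduler.minimum-allocation-mb.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check YARN/MapReduce memory settings (all values in MB).
# Args: nodemanager_mb max_allocation_mb map_mb reduce_mb
# Ignores vcores and rounding to yarn.scheduler.minimum-allocation-mb.
check_yarn_memory() {
  local nm_mb="$1" max_alloc_mb="$2" map_mb="$3" reduce_mb="$4"
  if [ "$max_alloc_mb" -gt "$nm_mb" ] || [ "$map_mb" -gt "$max_alloc_mb" ] \
     || [ "$reduce_mb" -gt "$max_alloc_mb" ]; then
    echo "invalid: a request exceeds the allowed maximum" >&2
    return 1
  fi
  # Integer division: how many containers of each size fit on one node.
  echo "map containers per node: $(( nm_mb / map_mb ))"
  echo "reduce containers per node: $(( nm_mb / reduce_mb ))"
}
```

With the values above (32 GB, 12 GB, 12 GB and 8 GB), `check_yarn_memory 32768 12288 12288 8192` prints `map containers per node: 2` and `reduce containers per node: 4`.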