Building Flink 1.12.2 for CDH 6.3.2 and Packaging It as a Parcel

Preface

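This post walks step by step through deploying the mature Flink 1.12 release on CDH 6.x as a parcel, then tests the Flink SQL connectors for kafka, upsert-kafka (including data expiry via log.retention.minutes), and jdbc (MySQL).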

Build

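Download kafka-avro-serializer-5.3.0 and kafka-schema-registry-client-5.5.2.

Note: do not use the kafka-avro-serializer-5.5.2 version referenced in the Flink source, because the build fails with it.

The fix is to change the version in the POM: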

[root@master flink-release-1.12.2]# vi ./flink-end-to-end-tests/flink-end-to-end-tests-common-kafka/pom.xml

110 <dependency>

111 <!-- https://mvnrepository.com/artifact/io.confluent/kafka-avro-serializer -->

112 <groupId>io.confluent</groupId>

113 <artifactId>kafka-avro-serializer</artifactId>

114 <!-- <version>5.5.2</version> -->

115 <version>5.3.0</version>

116 <scope>test</scope>

117 </dependency>

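Comment out line 114 and add line 115, i.e. change the version number.

Maven could not download either of the two jars above, so I downloaded them manually and installed them into the local Maven repository.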

[root@master flink-release-1.12.2]# cd /opt/module/flink-shaded-release-12.0/

[root@master flink-shaded-release-12.0]# mvn clean install -DskipTests -Dhadoop.version=3.0.0-cdh6.3.2

[root@master flink-release-1.12.2]# mvn install:install-file -Dfile=/root/jcz/my_jar/kafka-avro-serializer-5.3.0.jar -DgroupId=io.confluent -DartifactId=kafka-avro-serializer -Dversion=5.3.0 -Dpackaging=jar

[root@master flink-release-1.12.2]# mvn install:install-file -Dfile=/root/jcz/my_jar/kafka-schema-registry-client-5.5.2.jar -DgroupId=io.confluent -DartifactId=kafka-schema-registry-client -Dversion=5.5.2 -Dpackaging=jar

[root@master flink-release-1.12.2]# mvn -T2C clean install -DskipTests -Dfast -Pinclude-hadoop -Pvendor-repos -Dhadoop.version=3.0.0-cdh6.3.2 -Dflink.shaded.version=12.0 -Dscala-2.12
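Before starting the long Flink build, it can help to confirm that the two manually installed jars really landed in the local repository. The sketch below relies on the standard Maven repository layout (groupId dots become directories). The helper name `m2_jar_path` and the `REPO` variable are hypothetical; `REPO` defaults to `~/.m2/repository`, while the paths shown later in this post suggest that this environment uses /opt/module/repository.

```shell
#!/bin/sh
# Hypothetical helper: print where Maven stores a jar inside a local repository.
# Layout: <repo>/<groupId with dots as slashes>/<artifactId>/<version>/<artifactId>-<version>.jar
m2_jar_path() {
  # $1 = repository root, $2 = groupId, $3 = artifactId, $4 = version
  group_dir=$(printf '%s' "$2" | tr '.' '/')
  printf '%s/%s/%s/%s/%s-%s.jar\n' "$1" "$group_dir" "$3" "$4" "$3" "$4"
}

# Assumed repository root; adjust to the localRepository set in settings.xml.
REPO="${REPO:-$HOME/.m2/repository}"

# Report whether each manually installed artifact is present.
while read -r group artifact version; do
  jar=$(m2_jar_path "$REPO" "$group" "$artifact" "$version")
  if [ -f "$jar" ]; then
    echo "installed: $jar"
  else
    echo "missing:   $jar"
  fi
done <<'EOF'
io.confluent kafka-avro-serializer 5.3.0
io.confluent kafka-schema-registry-client 5.5.2
EOF
```

Run it as `REPO=/opt/module/repository sh check.sh` (the script name is arbitrary) to check a non-default repository.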

[root@master ~]# scp -r /opt/module/flink-release-1.12.2/flink-dist/target/flink-1.12.2-bin/flink-1.12.2 /var/www/html/

[root@master ~]# cd /var/www/html/

[root@master html]# tar -czvf flink-1.12.2-bin-scala_2.12.tgz flink-1.12.2

[root@master ~]# systemctl start httpd

[root@master soft]# git clone https://github.com/pkeropen/flink-parcel.git

[root@master soft]# chmod -R 777 flink-parcel

[root@master soft]# cd flink-parcel/

[root@master flink-parcel]# vi flink-parcel.properties

#Flink download URL

#FLINK_URL=https://mirrors.tuna.tsinghua.edu.cn/apache/flink/flink-1.9.1/flink-1.9.1-bin-scala_2.12.tgz

FLINK_URL=http://192.168.2.95/flink-1.12.2-bin-scala_2.12.tgz

#Flink version

FLINK_VERSION=1.12.2

#Extended version string

EXTENS_VERSION=BIN-SCALA_2.12

#OS version, using CentOS as the example

OS_VERSION=7

#CDH minor version range

CDH_MIN_FULL=5.2

CDH_MAX_FULL=6.3.3

#CDH major version range

CDH_MIN=5

CDH_MAX=6

[root@master flink-parcel]# ./build.sh parcel
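The properties appear to determine the name of the generated parcel: the build output listed further down is FLINK-1.12.2-BIN-SCALA_2.12-el7.parcel. A minimal sketch of that mapping follows. It is inferred from the output, not taken from build.sh, and the function name `parcel_file_name` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: compose the expected parcel file name from the
# values in flink-parcel.properties (mapping inferred from the build output).
parcel_file_name() {
  # $1 = FLINK_VERSION, $2 = EXTENS_VERSION, $3 = OS_VERSION
  printf 'FLINK-%s-%s-el%s.parcel\n' "$1" "$2" "$3"
}

# Values used in this post.
parcel_file_name 1.12.2 BIN-SCALA_2.12 7
```

Knowing the expected name up front makes it easy to spot a typo in the properties file before the parcel reaches Cloudera Manager.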

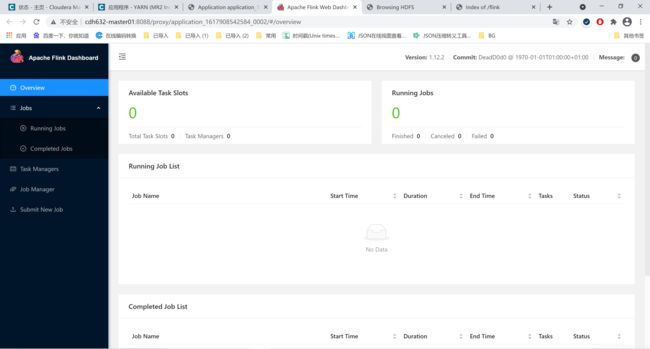

Once the steps in the html directory are done, open a browser and enter the server's IP address; you should see:

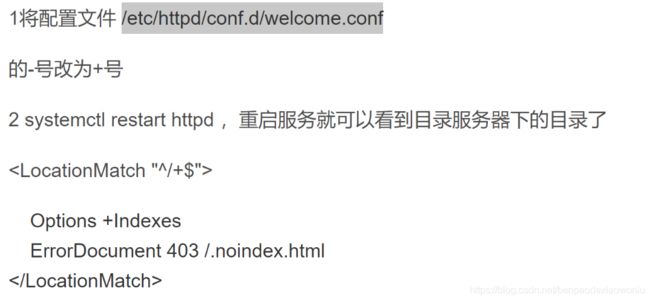

If the page above is not served by the CentOS 7 virtual machine, you need to:

Troubleshooting

- Error 01

[ERROR] Failed to execute goal on project cloudera-manager-schema: Could not resolve dependencies for project com.cloudera.cmf.schema:cloudera-manager-schema:jar:5.8.0: Could not find artifact commons-cli:commons-cli:jar:1.3-cloudera-pre-r1439998 in aliyun (http://maven.aliyun.com/nexus/content/groups/public)

Following the article "Resolving problems when building a parcel for Livy on CDH", the fix is:

[root@master ~]# mvn install:install-file -Dfile=/root/jcz/my_jar/commons-cli-1.3-cloudera-pre-r1439998.jar -DgroupId=commons-cli -DartifactId=commons-cli -Dversion=1.3-cloudera-pre-r1439998 -Dpackaging=jar

[root@master flink-parcel]# ./build.sh parcel

Validation succeeded.

[root@master flink-parcel]# ll ./FLINK-1.12.2-BIN-SCALA_2.12_build

total 327520

-rw-r--r-- 1 root root 335369210 Apr 9 00:49 FLINK-1.12.2-BIN-SCALA_2.12-el7.parcel

-rw-r--r-- 1 root root 41 Apr 9 00:49 FLINK-1.12.2-BIN-SCALA_2.12-el7.parcel.sha

-rw-r--r-- 1 root root 583 Apr 9 00:49 manifest.json

[root@master flink-parcel]# ll

total 327540

-rwxrwxrwx 1 root root 5863 Apr 8 22:47 build.sh

drwxr-xr-x 6 root root 142 Apr 9 00:39 cm_ext

drwxr-xr-x 4 root root 29 Apr 9 00:48 FLINK-1.12.2-BIN-SCALA_2.12

drwxr-xr-x 2 root root 123 Apr 9 00:49 FLINK-1.12.2-BIN-SCALA_2.12_build

-rw-r--r-- 1 root root 335366412 Apr 8 02:45 flink-1.12.2-bin-scala_2.12.tgz

drwxrwxrwx 5 root root 53 Apr 8 22:47 flink-csd-on-yarn-src

drwxrwxrwx 5 root root 53 Apr 8 22:47 flink-csd-standalone-src

-rwxrwxrwx 1 root root 411 Apr 8 22:49 flink-parcel.properties

drwxrwxrwx 3 root root 85 Apr 8 22:47 flink-parcel-src

-rwxrwxrwx 1 root root 11357 Apr 8 22:47 LICENSE

-rwxrwxrwx 1 root root 4334 Apr 8 22:47 README.md

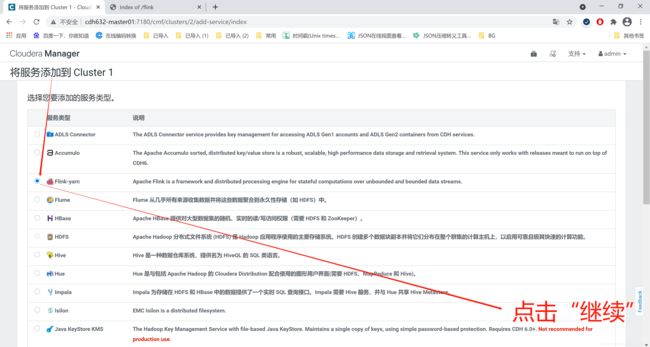

[root@master flink-parcel]# ./build.sh csd_on_yarn

Validation succeeded.

[root@master flink-parcel]# ll FLINK_ON_YARN-1.12.2.jar

-rw-r--r-- 1 root root 8259 Apr 9 00:53 FLINK_ON_YARN-1.12.2.jar

[root@master flink-parcel]# tar -cvf ./FLINK-1.12.2-BIN-SCALA_2.12.tar ./FLINK-1.12.2-BIN-SCALA_2.12_build/

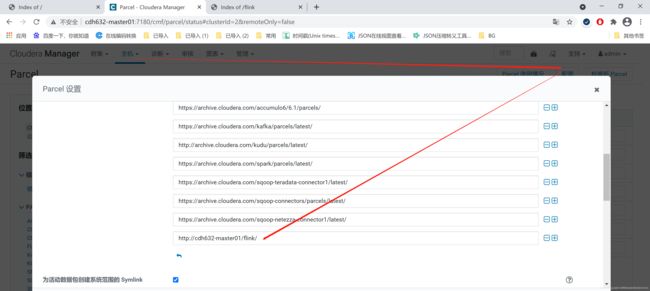

Upload the FLINK-1.12.2-BIN-SCALA_2.12.tar and FLINK_ON_YARN-1.12.2.jar files produced above to the production server (the node that serves the LAN yum repository).

[root@cdh632-master01 ~]# mkdir /opt/soft/my-flink-parcel

[root@cdh632-master01 ~]# cd /opt/soft/my-flink-parcel/

[root@cdh632-master01 my-flink-parcel]# tar -xvf FLINK-1.12.2-BIN-SCALA_2.12.tar -C /var/www/html

[root@cdh632-master01 my-flink-parcel]# cd /var/www/html

[root@cdh632-master01 html]# ll

total 8

drwxr-xr-x. 2 root root 4096 Mar 4 2020 cloudera-repos

drwxr-xr-x 2 root root 4096 Apr 9 00:49 FLINK-1.12.2-BIN-SCALA_2.12_build

[root@cdh632-master01 html]# mv FLINK-1.12.2-BIN-SCALA_2.12_build flink

[root@cdh632-master01 html]# cd flink/

[root@cdh632-master01 flink]# createrepo .

[root@cdh632-master01 flink]# cd /etc/yum.repos.d

[root@cdh632-master01 yum.repos.d]# vi flink.repo

[flink]

name=flink

baseurl=http://cdh632-master01/flink

enabled=1

gpgcheck=0

[root@cdh632-master01 yum.repos.d]# cd

[root@cdh632-master01 ~]# yum clean all

[root@cdh632-master01 ~]# yum makecache

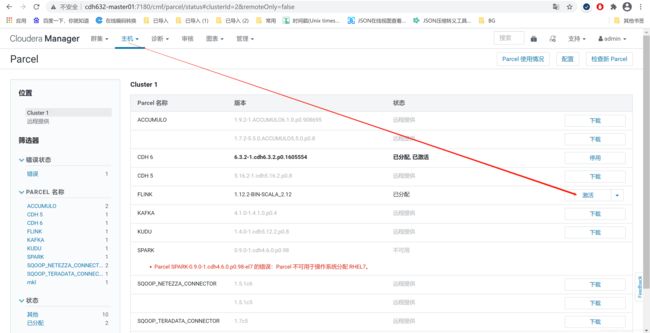

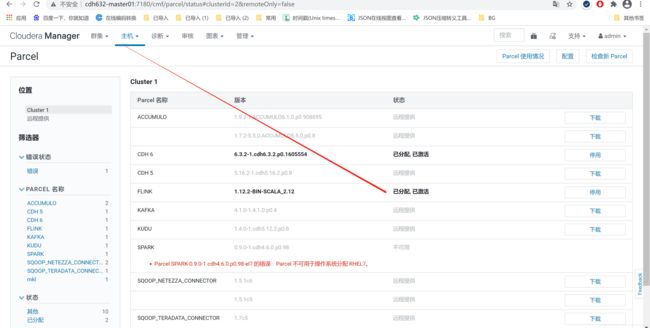

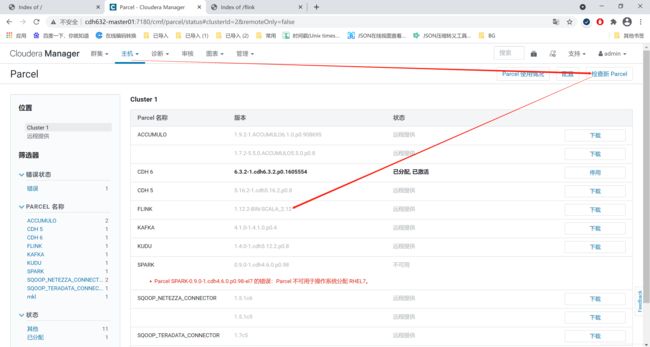

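2) After clicking "Download", error 02 appears: Error for parcel FLINK-1.12.2-BIN-SCALA_2.12-el7.parcel: hash verification failed.

Following the article "Hash verification failure when manually installing Flink and Livy parcels in Cloudera Manager", the fix is: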

[root@cdh632-master01 ~]# vi /etc/httpd/conf/httpd.conf

# add .parcel to the AddType line below

AddType application/x-gzip .gz .tgz .parcel

[root@cdh632-master01 ~]# systemctl restart httpd
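If the hash error persists after restarting httpd, it is worth ruling out a damaged parcel file. The 41-byte .sha file in the build listing is consistent with a SHA-1 hex digest plus a newline, so the sketch below compares it against `sha1sum`. The function name `parcel_sha_matches` is hypothetical and this check is not part of Cloudera Manager.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if <parcel>.sha holds the SHA-1 of <parcel>.
parcel_sha_matches() {
  # $1 = path to the .parcel file; the digest is expected in "$1.sha"
  [ -f "$1" ] && [ -f "$1.sha" ] || return 2
  expected=$(tr -d ' \n\r' < "$1.sha")
  actual=$(sha1sum "$1" | awk '{print $1}')
  [ "$expected" = "$actual" ]
}

# Usage (path taken from this post's repository layout):
# parcel_sha_matches /var/www/html/flink/FLINK-1.12.2-BIN-SCALA_2.12-el7.parcel \
#   && echo "hash OK" || echo "hash mismatch"
```

When the local hash matches but Cloudera Manager still reports a failure, the file is probably being altered in transit, which is what the AddType change addresses.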

[root@cdh632-master01 ~]# cp /opt/soft/my-flink-parcel/FLINK_ON_YARN-1.12.2.jar /opt/cloudera/csd/

[root@cdh632-master01 ~]# systemctl stop cloudera-scm-agent

[root@cdh632-worker02 ~]# systemctl stop cloudera-scm-agent

[root@cdh632-worker03 ~]# systemctl stop cloudera-scm-agent

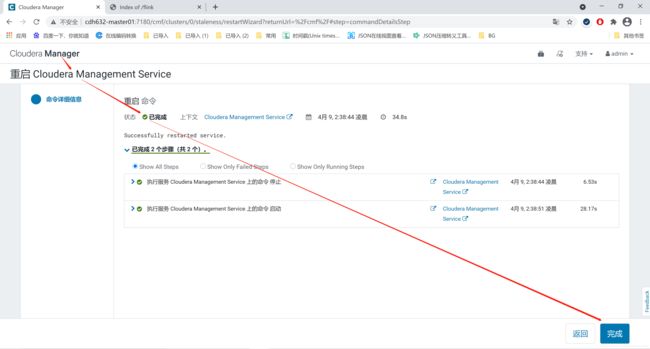

[root@cdh632-master01 ~]# systemctl restart cloudera-scm-server

[root@cdh632-master01 ~]# systemctl start cloudera-scm-agent

[root@cdh632-worker02 ~]# systemctl start cloudera-scm-agent

[root@cdh632-worker03 ~]# systemctl start cloudera-scm-agent

[root@cdh632-master01 ~]# find / -name flink-shaded-hadoop-*

/opt/module/repository/org/apache/flink/flink-shaded-hadoop-2-uber/3.0.0-cdh6.3.2-10.0/flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar

[root@cdh632-master01 lib]# scp /opt/module/repository/org/apache/flink/flink-shaded-hadoop-2-uber/3.0.0-cdh6.3.2-10.0/flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar root@cdh632-master01:/opt/cloudera/parcels/FLINK/lib/flink/lib/

[root@cdh632-master01 lib]# scp /opt/module/repository/org/apache/flink/flink-shaded-hadoop-2-uber/3.0.0-cdh6.3.2-10.0/flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar root@cdh632-worker02:/opt/cloudera/parcels/FLINK/lib/flink/lib/

[root@cdh632-master01 lib]# scp /opt/module/repository/org/apache/flink/flink-shaded-hadoop-2-uber/3.0.0-cdh6.3.2-10.0/flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar root@cdh632-worker03:/opt/cloudera/parcels/FLINK/lib/flink/lib/

Run the following on every machine that hosts a Flink-yarn role:

[root@cdh632-master01 ~]# vi /etc/profile

export HADOOP_CLASSPATH=/opt/cloudera/parcels/FLINK/lib/flink/lib

[root@cdh632-master01 ~]# source /etc/profile

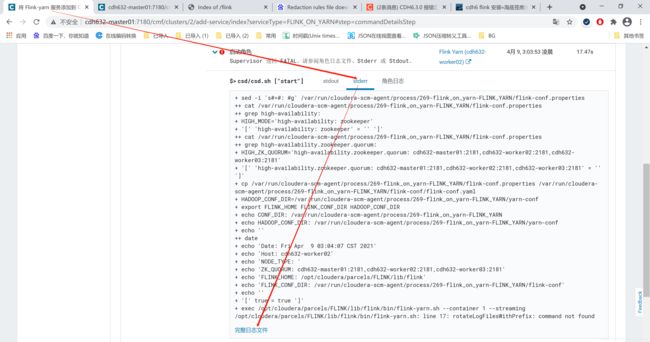

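- After the steps above I tried to restart all Flink-yarn roles through the web UI, but every attempt failed with error 03: flink-yarn.sh: line 17: rotateLogFilesWithPrefix: command not found. Others have hit the same problem: https://download.csdn.net/download/orangelzc/15936248. The fix that finally worked: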

[root@cdh632-master01 ~]# scp /opt/module/repository/org/apache/flink/flink-shaded-hadoop-2-uber/2.7.5-10.0/flink-shaded-hadoop-2-uber-2.7.5-10.0.jar root@cdh632-worker03:/opt/cloudera/parcels/FLINK/lib/flink/lib/

[root@cdh632-master01 ~]# scp /opt/module/repository/org/apache/flink/flink-shaded-hadoop-2-uber/2.7.5-10.0/flink-shaded-hadoop-2-uber-2.7.5-10.0.jar root@cdh632-worker02:/opt/cloudera/parcels/FLINK/lib/flink/lib/

[root@cdh632-master01 ~]# scp /opt/module/repository/org/apache/flink/flink-shaded-hadoop-2-uber/2.7.5-10.0/flink-shaded-hadoop-2-uber-2.7.5-10.0.jar root@cdh632-master01:/opt/cloudera/parcels/FLINK/lib/flink/lib/

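Restarting all Flink-yarn roles through the web UI then succeeded. Although I had originally run:

[root@master flink-shaded-release-12.0]# mvn clean install -DskipTests -Dhadoop.version=3.0.0-cdh6.3.2

to build flink-shaded-hadoop-2-uber-3.0.0-cdh6.3.2-10.0.jar, that jar was apparently not picked up; only flink-shaded-hadoop-2-uber-2.7.5-10.0.jar seems to be recognized. This remains to be investigated.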

References

Building flink-1.12.1 against CDH-6.2.0 (Hadoop-3.0.0 & Hive-2.1.1)

Enabling directory listing on the httpd server under CentOS 7

Installing Flink on CDH 6

Issues

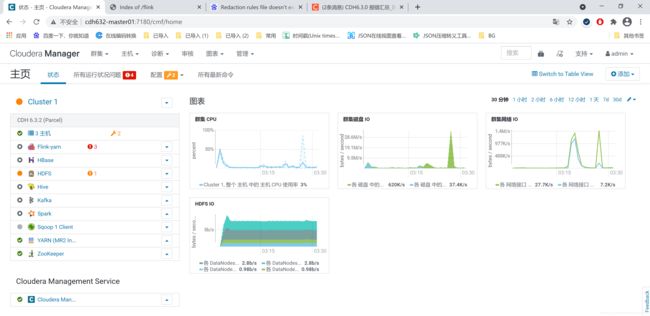

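After deploying Flink 1.12.2 to CDH 6.3.2 as a parcel as described above, one of the three Flink Yarn roles (not always the same one) keeps going down on its own, and the logs show no obvious ERROR. After continuing with the tests below and supplying all the required dependency jars, the three Flink Yarn roles stopped going down once the Flink cluster was restarted (surprisingly!).

The final solution first: copy the following 8 jars into a target directory ($FLINK_HOME/lib/ or a custom directory). Note: with a custom directory, the Flink SQL startup command must point to it explicitly (see below).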

/opt/module/flink-release-1.12.2/flink-connectors/flink-connector-hive/target/flink-connector-hive_2.12-1.12.2.jar

/opt/module/flink-release-1.12.2/flink-connectors/flink-connector-jdbc/target/flink-connector-jdbc_2.12-1.12.2.jar

/opt/module/flink-release-1.12.2/flink-connectors/flink-connector-kafka/target/flink-connector-kafka_2.12-1.12.2.jar

/opt/module/flink-release-1.12.2/flink-connectors/flink-sql-connector-hive-2.2.0/target/flink-sql-connector-hive-2.2.0_2.12-1.12.2.jar

/opt/module/flink-release-1.12.2/flink-connectors/flink-sql-connector-kafka/target/flink-sql-connector-kafka_2.12-1.12.2.jar

/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/lib/hive-exec-2.1.1-cdh6.3.2.jar

/opt/module/repository/org/apache/kafka/kafka-clients/2.4.0/kafka-clients-2.4.0.jar

/root/jcz/mysql-connector-java-5.1.47.jar
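Copying the jars by hand on every node is easy to get wrong, so a small helper that reports missing files can help. This is only a sketch: the function name `stage_jars` is hypothetical, and the destination `$FLINK_HOME/flinksql-libs` is taken from the startup command used later in this post.

```shell
#!/bin/sh
# Hypothetical helper: copy jars into a directory and report any that are missing.
stage_jars() {
  # $1 = destination directory; remaining arguments = jar paths
  dest=$1
  shift
  mkdir -p "$dest" || return 1
  missing=0
  for jar in "$@"; do
    if [ -f "$jar" ]; then
      cp "$jar" "$dest/"
    else
      echo "missing: $jar" >&2
      missing=$((missing + 1))
    fi
  done
  # Succeed only when every jar was found and copied.
  [ "$missing" -eq 0 ]
}

# Usage with two of the jars listed above:
# stage_jars "$FLINK_HOME/flinksql-libs" \
#   /opt/module/repository/org/apache/kafka/kafka-clients/2.4.0/kafka-clients-2.4.0.jar \
#   /root/jcz/mysql-connector-java-5.1.47.jar
```

The non-zero exit status on a missing jar lets the helper be chained with `&&` before starting the SQL client.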

These jars resolve the following errors encountered during testing:

When the three Hive jars are missing (see ref. 1), error 1:

[root@cdh632-worker03 lib]# $FLINK_HOME/bin/sql-client.sh embedded

Setting HBASE_CONF_DIR=/etc/hbase/conf because no HBASE_CONF_DIR was set.

No default environment specified.

Searching for '/opt/cloudera/parcels/FLINK-1.12.2-BIN-SCALA_2.12/lib/flink/conf/sql-client-defaults.yaml'...found.

Reading default environment from: file:/opt/cloudera/parcels/FLINK-1.12.2-BIN-SCALA_2.12/lib/flink/conf/sql-client-defaults.yaml

No session environment specified.

Exception in thread "main" org.apache.flink.table.client.SqlClientException: Unexpected exception. This is a bug. Please consider filing an issue.

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:215)

Caused by: org.apache.flink.table.client.gateway.SqlExecutionException: Could not create execution context.

at org.apache.flink.table.client.gateway.local.ExecutionContext$Builder.build(ExecutionContext.java:972)

at org.apache.flink.table.client.gateway.local.LocalExecutor.openSession(LocalExecutor.java:225)

at org.apache.flink.table.client.SqlClient.start(SqlClient.java:108)

at org.apache.flink.table.client.SqlClient.main(SqlClient.java:201)

Caused by: org.apache.flink.table.api.NoMatchingTableFactoryException: Could not find a suitable table factory for 'org.apache.flink.table.factories.CatalogFactory' in

the classpath.

Reason: Required context properties mismatch.

The following properties are requested:

default-database=default

hive-conf-dir=/etc/hive/conf

type=hive

The following factories have been considered:

org.apache.flink.table.catalog.GenericInMemoryCatalogFactory

at org.apache.flink.table.factories.TableFactoryService.filterByContext(TableFactoryService.java:301)

at org.apache.flink.table.factories.TableFactoryService.filter(TableFactoryService.java:179)

at org.apache.flink.table.factories.TableFactoryService.findSingleInternal(TableFactoryService.java:140)

at org.apache.flink.table.factories.TableFactoryService.find(TableFactoryService.java:109)

at org.apache.flink.table.client.gateway.local.ExecutionContext.createCatalog(ExecutionContext.java:395)

at org.apache.flink.table.client.gateway.local.ExecutionContext.lambda$null$5(ExecutionContext.java:684)

at java.util.HashMap.forEach(HashMap.java:1289)

at org.apache.flink.table.client.gateway.local.ExecutionContext.lambda$initializeCatalogs$6(ExecutionContext.java:681)

at org.apache.flink.table.client.gateway.local.ExecutionContext.wrapClassLoader(ExecutionContext.java:265)

at org.apache.flink.table.client.gateway.local.ExecutionContext.initializeCatalogs(ExecutionContext.java:677)

at org.apache.flink.table.client.gateway.local.ExecutionContext.initializeTableEnvironment(ExecutionContext.java:565)

at org.apache.flink.table.client.gateway.local.ExecutionContext.<init>(ExecutionContext.java:187)

at org.apache.flink.table.client.gateway.local.ExecutionContext.<init>(ExecutionContext.java:138)

at org.apache.flink.table.client.gateway.local.ExecutionContext$Builder.build(ExecutionContext.java:961)

... 3 more

When the Kafka client jar is missing, error 2:

Flink SQL> select * from flinksql02;

[ERROR] Could not execute SQL statement. Reason:

java.lang.ClassNotFoundException: org.apache.kafka.common.serialization.ByteArrayDeserializer

When Kafka on CDH is misconfigured (see ref. 2), error 3:

Flink SQL> select * from flinksql0202;

[ERROR] Could not execute SQL statement. Reason:

java.net.ConnectException: Connection refused

When a Kafka client that is incompatible with the one Flink was compiled against is used by mistake (see ref. 3), error 4:

Flink SQL> select * from flinksql_upsert_02;

[ERROR] Could not execute SQL statement. Reason:

org.apache.flink.runtime.JobException: Recovery is suppressed by NoRestartBackoffTimeStrategy

When a Flink SQL table mapped to Kafka (registered in the Hive catalog) uses the csv format with upsert-kafka, error 5:

Flink SQL> select * from flinksql0202;

[ERROR] Could not execute SQL statement. Reason:

org.apache.flink.shaded.jackson2.com.fasterxml.jackson.dataformat.csv.CsvMappingException: Too many entries: expected at most 4 (value #4 (22 chars) "2021-05-16 05:53:17.29")

at [Source: UNKNOWN; line: 1, column: 15]

When a Flink SQL table mapped to Kafka (registered in the Hive catalog) is created for upsert-kafka without specifying a PRIMARY KEY (see ref. 4), error 6:

Flink SQL> INSERT INTO flinksql_upsert_03 SELECT * FROM flinksql03;

[INFO] Submitting SQL update statement to the cluster...

[ERROR] Could not execute SQL statement. Reason:

org.apache.flink.table.api.ValidationException: 'upsert-kafka' tables require to define a PRIMARY KEY constraint. The PRIMARY KEY specifies which columns should be read from or write to the Kafka message key. The PRIMARY KEY also defines records in the 'upsert-kafka' table should update or delete on which keys.

Preparation

Kafka commands on CDH (note how they differ from Apache Kafka; see refs. 2 and 5):

kafka-topics --create --zookeeper cdh632-master01:2181/kafka --replication-factor 1 --partitions 3 --topic flinksql02

kafka-console-producer --broker-list cdh632-master01:9092,cdh632-worker02:9092,cdh632-worker03:9092 --topic flinksql02

kafka-console-consumer --bootstrap-server cdh632-worker03:9092 --topic flinksql02 --from-beginning

Flink SQL startup command:

$FLINK_HOME/bin/sql-client.sh embedded -d /opt/cloudera/parcels/FLINK-1.12.2-BIN-SCALA_2.12/lib/flink/conf/sql-client-defaults.yaml -l $FLINK_HOME/flinksql-libs

Flink SQL> USE CATALOG myhive;

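Note: sql-client.yaml is a modified copy of sql-client-defaults.yaml.

1) External jars are passed with -l here, not placed under $FLINK_HOME/lib/, for the following reason: "Every time a Flink program is submitted, Flink loads the jars under /opt/cloudera/parcels/FLINK/lib/flink/lib/, which wastes resources; with too many dependency jars, JAR conflicts may also occur!" (see ref. 6).

2) The configuration file that defines myhive as a CATALOG is created with: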

cp /opt/cloudera/parcels/FLINK-1.12.2-BIN-SCALA_2.12/lib/flink/conf/sql-client-defaults.yaml /opt/cloudera/parcels/FLINK-1.12.2-BIN-SCALA_2.12/lib/flink/conf/sql-client.yaml

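Flink SQL Kafka test

For the test cases, see "2.1 FlinkSql-kafka general functional test" in ref. 7.

Flink SQL upsert-kafka general functional test

For the test cases, see ref. 8.

Flink SQL upsert-kafka test of data expiry in Kafka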

[root@cdh632-master01 lib]# kafka-topics --create --zookeeper cdh632-master01:2181/kafka --replication-factor 1 --partitions 3 --topic test01 --config retention.ms=60000

[root@cdh632-worker02 ~]# kafka-console-producer --broker-list cdh632-master01:9092,cdh632-worker02:9092,cdh632-worker03:9092 --topic flinksql04
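The broker-level setting log.retention.minutes is expressed in minutes, while the topic-level retention.ms is expressed in milliseconds, so a one-minute retention corresponds to retention.ms=60000. A tiny sketch of the conversion (the function name `minutes_to_ms` is hypothetical):

```shell
#!/bin/sh
# Hypothetical helper: convert a retention period in minutes to milliseconds,
# the unit expected by the topic-level retention.ms setting.
minutes_to_ms() {
  # $1 = retention in whole minutes
  echo $(( $1 * 60 * 1000 ))
}

# One minute, as used for the expiry test in this post.
minutes_to_ms 1
```

Keeping the value short makes expiry observable within a single test session, although Kafka deletes whole log segments, so records may linger somewhat longer than the configured time.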

Flink SQL> CREATE TABLE t1 (

> name string,

> age BIGINT,

> isStu INT,

> opt STRING,

> optDate TIMESTAMP(3) METADATA FROM 'timestamp',

> WATERMARK FOR optDate as optDate - INTERVAL '5' SECOND -- define a watermark on optDate, which makes it the event-time column

> ) WITH (

> 'connector' = 'kafka', -- use the kafka connector

> 'topic' = 'flinksql04', -- kafka topic

> 'scan.startup.mode' = 'earliest-offset',

> 'properties.bootstrap.servers' = 'cdh632-master01:9092,cdh632-worker02:9092,cdh632-worker03:9092',

> 'format' = 'json' -- source data format is json

> );

[INFO] Table has been created.

Flink SQL> CREATE TABLE t2 (

> name STRING,

> age bigint,

> PRIMARY KEY (name) NOT ENFORCED

> ) WITH (

> 'connector' = 'upsert-kafka',

> 'topic' = 'flinksql04output',

> 'properties.bootstrap.servers' = 'cdh632-master01:9092,cdh632-worker02:9092,cdh632-worker03:9092', -- kafka broker addresses

> 'key.format' = 'json',

> 'value.format' = 'json'

> );

[INFO] Table has been created.

Flink SQL> INSERT INTO t2

> SELECT

> name,

> max(age)

> FROM t1

> GROUP BY name;

[INFO] Submitting SQL update statement to the cluster...

[INFO] Table update statement has been successfully submitted to the cluster:

Job ID: 3c2a042ce59140287bdd442cd4999e15

Send data from the Kafka producer:

>21/05/16 19:15:05 INFO clients.Metadata: Cluster ID: dYegvG1_TEuWyXJXed_QoQ

{"name":"zhangsan","age":18,"isStu":1,"opt":"insert"}

>{"name":"lisi","age":20,"isStu":2,"opt":"update"}

>{"name":"wangwu","age":30,"isStu":1,"opt":"delete"}

Flink SQL> select * from t2;

[INFO] Result retrieval cancelled.

See Figure 1 below.

Flink SQL> select * from t1;

[INFO] Result retrieval cancelled.

See Figure 2 below.