Kaggle Dataset: Loan Default Prediction

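A while ago I picked the Loan_Default bank dataset on Kaggle to practice data analysis for loan default prediction. Below is the process I followed for data processing, analysis, and prediction.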

I. Dataset

Banks earn a major share of their revenue from lending, but lending is often associated with risk: borrowers may default on their loans. To mitigate this risk, the banks have decided to use machine learning. They have collected past data on loan borrowers, and we would like to develop a strong ML model to classify whether a new borrower is likely to default.

The dataset is large and consists of multiple deterministic factors such as the borrower's income, gender, and loan purpose. It suffers from strong multicollinearity and missing values. The task is to overcome these issues and build a strong classifier to predict defaulters.

# from https://www.kaggle.com/yasserh/loan-default-dataset

data = pd.read_csv("Loan_Default.csv")

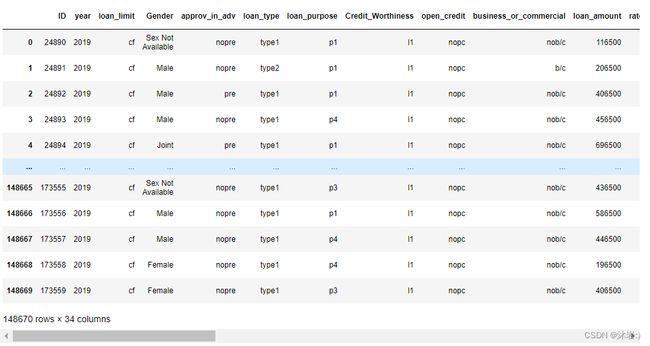

data

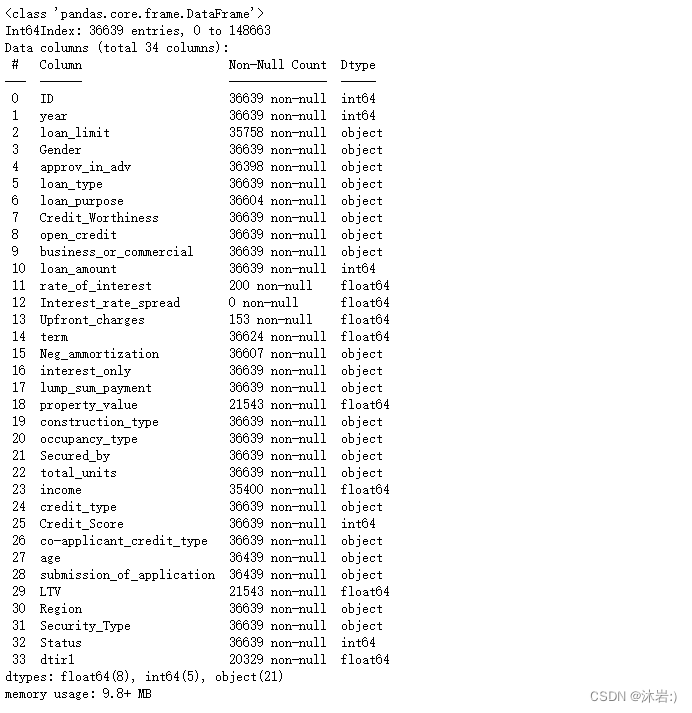

data[data["Status"] == 1].info()

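The dataset has 34 columns, so the features need to be screened; the "Status" column is the target variable.

The dataset has 148,670 rows, of which 36,639 have "Status" == 1 and 112,031 have "Status" == 0. It is therefore imbalanced (roughly 3:1), which can bias a model toward the majority class and make plain accuracy a misleading metric.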
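The class counts can be checked with a little arithmetic. The sketch below uses only the two numbers quoted above (148,670 rows in total, 36,639 with Status == 1) to derive the majority count, the imbalance ratio, and the minority share; the helper name `class_balance` is hypothetical.

```python
def class_balance(total: int, positives: int) -> dict:
    """Derive simple imbalance statistics from a total row count and a positive count."""
    negatives = total - positives                 # rows with Status == 0
    return {
        "negatives": negatives,
        "ratio": negatives / positives,           # majority-to-minority ratio
        "minority_share": positives / total,      # fraction of rows with Status == 1
    }


stats = class_balance(total=148670, positives=36639)
print(stats["negatives"], round(stats["ratio"], 2), round(stats["minority_share"], 3))
# 112031 3.06 0.246
```

With these numbers, a classifier that always predicts Status == 0 would already reach about 75% accuracy, which is why accuracy alone says little here.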

II. Data Processing

```python
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
import numpy as np

from sklearn.model_selection import train_test_split, GridSearchCV
from sklearn.preprocessing import MinMaxScaler
from sklearn.decomposition import PCA
from sklearn.tree import DecisionTreeClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.svm import SVC
from sklearn.neural_network import MLPClassifier
from sklearn.metrics import (
    classification_report,
    confusion_matrix,
    ConfusionMatrixDisplay,
    RocCurveDisplay,  # replaces plot_roc_curve, which was removed in scikit-learn 1.2
)

pd.set_option('display.max_columns', None)
```

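- First, drop the columns that are obviously useless (ID, year) and the columns with too many missing values, then drop the rows that still contain missing values. (Dropping every row with a missing value right away would remove all rows with Status == 1.)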

```python
# drop the columns with too many null values in the Status == 1 rows.
data = data.drop(["rate_of_interest", "Interest_rate_spread", "Upfront_charges", "property_value", "LTV", "dtir1"], axis=1)

# drop the useless columns.
data = data.drop(["ID", "year"], axis=1)

# drop the rows with null values.
data = data.dropna(axis=0, how="any")
```

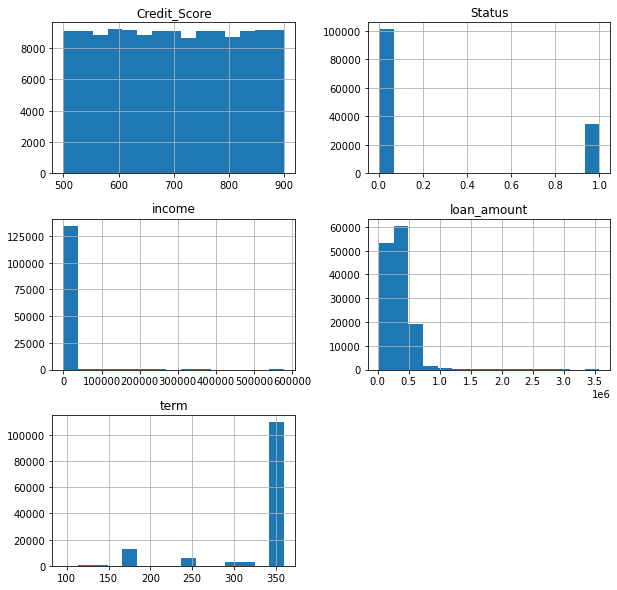
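The decision above depends on where the missing values sit: a column that is mostly empty in the Status == 1 rows wipes out the minority class if rows are dropped naively. Below is a minimal pure-Python sketch of that per-class check on a tiny table with hypothetical values; with pandas, a similar table can be obtained with `data.isna().groupby(data["Status"]).mean()`.

```python
def missing_rate_by_class(rows, target):
    """Return {class_value: {column: fraction of rows where the column is None}}."""
    columns = [c for c in rows[0] if c != target]
    result = {}
    for cls in sorted({r[target] for r in rows}):
        group = [r for r in rows if r[target] == cls]
        result[cls] = {c: sum(r[c] is None for r in group) / len(group) for c in columns}
    return result


# Hypothetical toy rows: "rate" is missing for every Status == 1 row.
rows = [
    {"Status": 0, "rate": 3.9, "income": 1740},
    {"Status": 0, "rate": 4.1, "income": None},
    {"Status": 1, "rate": None, "income": 4980},
    {"Status": 1, "rate": None, "income": 9480},
]
rates = missing_rate_by_class(rows, "Status")
print(rates)  # {0: {'rate': 0.0, 'income': 0.5}, 1: {'rate': 1.0, 'income': 0.0}}
```

Dropping rows with any missing value in this toy table would leave no Status == 1 rows, so the `rate` column has to be removed first.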

- Check the distribution of the numeric and non-numeric columns:

```python
# check the distribution of numeric columns.
data.hist(bins=15, figsize=(10, 10))
plt.show()

# check the distribution of non-numeric columns.
object_list = list(data.columns[data.dtypes == "object"])
fig = plt.figure(figsize=(15, 25))
n = 1
for column in object_list:
    # number of rows in each category of this column
    d = data.loc[:, [column, "Status"]].groupby(column).count()["Status"]
    ax = fig.add_subplot(7, 3, n)
    ax.bar(x=range(len(d)), height=d, width=0.5)
    ax.set_xticks(range(len(d)))
    ax.set_xticklabels(list(d.index))
    ax.set_title(column)
    n += 1
plt.show()
```

- Drop the columns with extremely skewed distributions:

```python
# drop the columns with extreme distributions, which are useless for prediction.
data = data.drop(["Security_Type", "total_units", "construction_type", "open_credit", "Secured_by", "income"], axis=1)
```

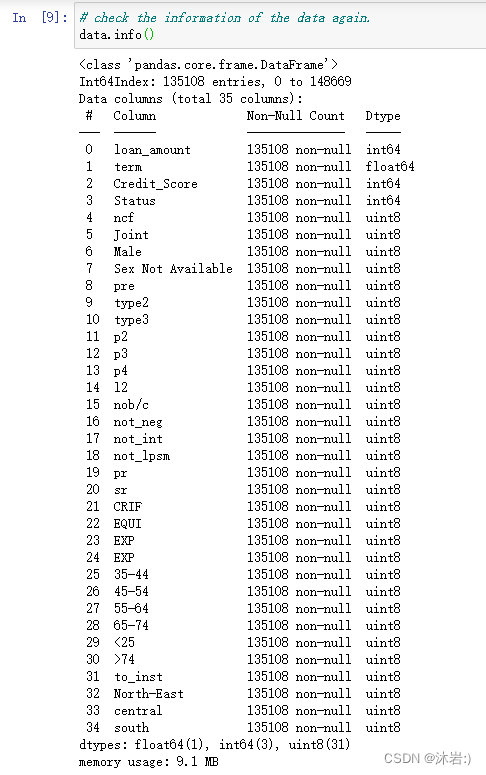
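One way to make "extreme distribution" concrete for a categorical column is to measure how much of it is taken up by its most frequent value; a column where one value covers nearly every row carries little information for the classifier. The 0.95 threshold, the helper names, and the toy columns below are assumptions for illustration, not values from this analysis. A skewed numeric column such as `income` would need a different check.

```python
from collections import Counter


def dominant_share(values):
    """Fraction of entries taken by the single most frequent value."""
    counts = Counter(values)
    return counts.most_common(1)[0][1] / len(values)


def near_constant_columns(table, threshold=0.95):
    """Names of columns whose most frequent value covers at least `threshold` of the rows."""
    return [name for name, values in table.items() if dominant_share(values) >= threshold]


# Hypothetical columns: "units" is almost constant, "purpose" is spread out.
table = {
    "units": ["1U"] * 97 + ["2U"] * 3,
    "purpose": ["p1"] * 40 + ["p3"] * 35 + ["p4"] * 25,
}
print(near_constant_columns(table))  # ['units']
```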

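- Convert the non-numeric columns to one-hot encoding and drop the first dummy column of each feature (this avoids perfect multicollinearity, the so-called dummy variable trap):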

```python
# change the object columns into one-hot coding.
dummy_list = list(data.columns[data.dtypes == "object"])
for i in dummy_list:
    # keep every dummy column except the first one (the reference category)
    data = pd.concat([data, pd.get_dummies(data[i]).iloc[:, 1:]], axis=1)
    data.drop(i, axis=1, inplace=True)
```

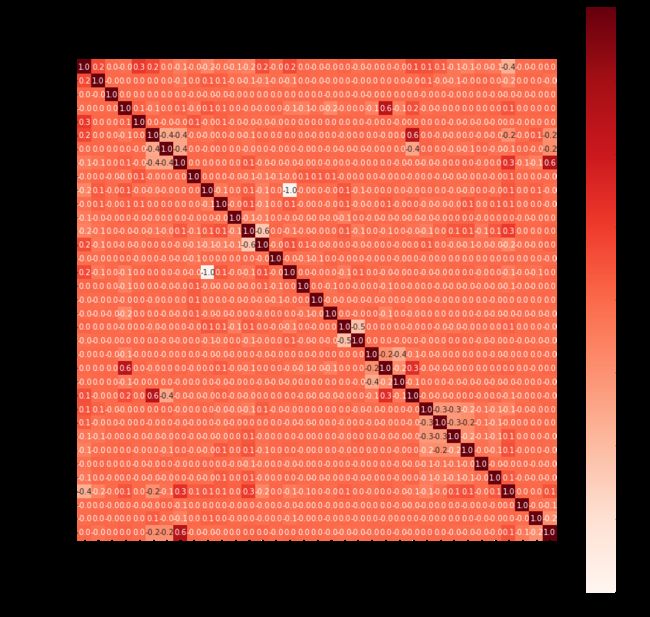
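Why drop the first dummy column? With all k indicator columns kept, every row sums to exactly 1, so the columns are perfectly collinear with a model's intercept; dropping one category turns it into the implicit reference level. The pure-Python sketch below shows both encodings for a hypothetical sample (`one_hot` is an illustrative helper); in pandas, `pd.get_dummies(..., drop_first=True)` is a shorter way to get the second form.

```python
def one_hot(values, drop_first=False):
    """Encode category labels as 0/1 rows, optionally dropping the first (sorted) category."""
    categories = sorted(set(values))
    if drop_first:
        categories = categories[1:]
    return categories, [[int(v == c) for c in categories] for v in values]


loan_type = ["type1", "type2", "type3", "type1"]  # hypothetical sample

full_cols, full = one_hot(loan_type)
print(full_cols, [sum(row) for row in full])   # every row sums to 1

reduced_cols, reduced = one_hot(loan_type, drop_first=True)
print(reduced_cols, reduced[0])                # "type1" is now encoded as all zeros
```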

```python
# find the correlation between columns.
corr = data.corr()
plt.figure(figsize=(14, 14))
sns.heatmap(corr, annot=True, cmap='Reds', square=True, fmt=".1f")
plt.show()
```

- Drop the column whose correlation coefficient with another column is ±1:

```python
# drop the columns whose correlations are too high.
data = data.drop(["type2"], axis=1)
```
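A correlation of exactly ±1 means one column is a linear function of another, so it adds no information and only feeds multicollinearity. For instance, two indicator columns that always sum to 1 correlate at -1. Below is a minimal pure-Python sketch of the Pearson coefficient (`pearson` is a hypothetical helper; `data.corr()` uses the same Pearson definition by default), applied to hypothetical complementary columns.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical indicator columns that are never 1 at the same time and always sum to 1.
a = [1, 0, 0, 1, 0, 1]
b = [1 - v for v in a]
print(round(pearson(a, b), 6))  # -1.0
```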

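- Common ways to deal with imbalanced data:

  - Collect more data, then select a balanced number of samples from each class.

  - Resampling: undersample the majority class and oversample the minority class (sampling with replacement if necessary).

  - Generate synthetic minority-class samples (e.g. SMOTE).

  - Split the majority class into several finer subclasses so that every class is roughly the same size.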

Here I mainly used resampling:

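```python
# Resample the data to balance the classes:
# keep twice as many Status == 0 rows as there are Status == 1 rows,
# and include every Status == 1 row twice.
status_1 = data[data["Status"] == 1]
status_0 = data[data["Status"] == 0].sample(len(status_1) * 2)
data = pd.concat([status_0, status_1, status_1], axis=0)

# shuffle the rows
data = data.sample(frac=1)
```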
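Note that the code above duplicates the Status == 1 rows before the train/test split, so copies of the same row can land in both the training and the test set, which tends to inflate test scores. A leakage-safer ordering is to split first and rebalance only the training part. The pure-Python sketch below illustrates that ordering on row indices; `split_then_oversample`, the reuse of a 15% test fraction, and the fixed seed are assumptions for illustration, not the procedure behind the results reported in this post.

```python
import random


def split_then_oversample(labels, test_size=0.15, seed=0):
    """Split row indices into train/test first, then oversample the minority class
    (with replacement) inside the training indices only."""
    rng = random.Random(seed)
    idx = list(range(len(labels)))
    rng.shuffle(idx)
    n_test = round(len(idx) * test_size)
    test_idx, train_idx = idx[:n_test], idx[n_test:]

    pos = [i for i in train_idx if labels[i] == 1]
    neg = [i for i in train_idx if labels[i] == 0]
    minority, majority = (pos, neg) if len(pos) < len(neg) else (neg, pos)
    # draw extra minority rows (with replacement) until both classes are equal
    extra = [rng.choice(minority) for _ in range(len(majority) - len(minority))]
    train_balanced = train_idx + extra
    rng.shuffle(train_balanced)
    return train_balanced, test_idx


labels = [1] * 25 + [0] * 75  # hypothetical 1:3 imbalance
train, test = split_then_oversample(labels)
print(len(test), sum(labels[i] for i in train), len(train))
```

The test indices are never duplicated or resampled, so the test score reflects rows the model has not seen in any copy.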

- Split the dataset:

```python
# split the dataset into a training set and a test set.
X = data.drop("Status", axis=1)
y = data["Status"]
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.15)
```

- Normalization (not needed if you train tree-based models):

```python
# scaler: fit on the training set only, then apply to both sets
scaler = MinMaxScaler()
scaler.fit(X_train)
X_train = scaler.transform(X_train)
X_test = scaler.transform(X_test)
```
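With its default range, MinMaxScaler maps each feature as x' = (x - min) / (max - min), where min and max are learned from the training set only; that is why the scaler is fit on X_train and merely applied to X_test. A one-feature pure-Python sketch of the same fit/transform split (the helper names are hypothetical, and treating a zero range as 1 is an assumption to avoid division by zero):

```python
def fit_min_max(train_values):
    """Learn (min, span) from training data only; a zero span is treated as 1."""
    lo, hi = min(train_values), max(train_values)
    return lo, (hi - lo) or 1.0


def transform_min_max(values, params):
    """Apply the learned scaling to any values, including unseen test values."""
    lo, span = params
    return [(v - lo) / span for v in values]


params = fit_min_max([0.0, 5.0, 10.0])          # hypothetical training column
print(transform_min_max([5.0, 15.0], params))   # [0.5, 1.5]
```

Test values outside the training range land outside [0, 1], which is expected and harmless.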

- Dimensionality reduction with PCA:

```python
# PCA (principal component analysis): fit on the training set only
pca = PCA(n_components=10)
pca.fit(X_train)
X_train = pca.transform(X_train)
X_test = pca.transform(X_test)
```
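The number of components (10 here) is a choice. A common way to justify it is to keep the smallest number of components whose cumulative explained variance reaches a target; after fitting, scikit-learn exposes the per-component shares as `pca.explained_variance_ratio_`, and `PCA(n_components=0.95, svd_solver="full")` applies the same rule directly. The helper below is a hypothetical pure-Python sketch of that rule, and the ratios in the example are made up.

```python
from itertools import accumulate


def components_for_variance(ratios, target=0.95):
    """Smallest k such that the first k explained-variance ratios sum to at least `target`."""
    for k, total in enumerate(accumulate(ratios), start=1):
        if total >= target:
            return k
    return len(ratios)


ratios = [0.40, 0.25, 0.15, 0.10, 0.05, 0.03, 0.02]  # hypothetical, sorted descending
print(components_for_variance(ratios, target=0.85))  # 4
```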

III. Model Selection

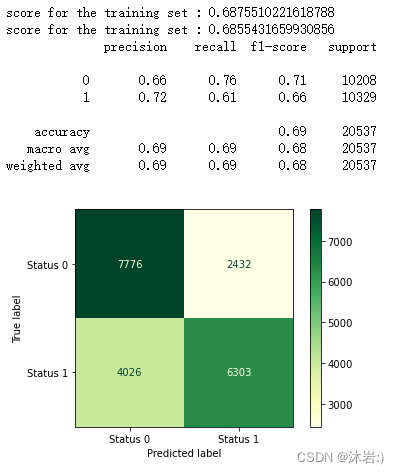

- Decision tree:

```python
# Decision tree
DT = DecisionTreeClassifier()
DT.fit(X_train, y_train)
predict_test = DT.predict(X_test)

print("score for the training set:", DT.score(X_train, y_train))
print("score for the test set:", DT.score(X_test, y_test))
print(classification_report(y_test, predict_test))

labels = ["Status 0", "Status 1"]
M = confusion_matrix(y_test, predict_test)
disp = ConfusionMatrixDisplay(confusion_matrix=M, display_labels=labels)
disp.plot(cmap=plt.cm.YlGn)
plt.show()
```

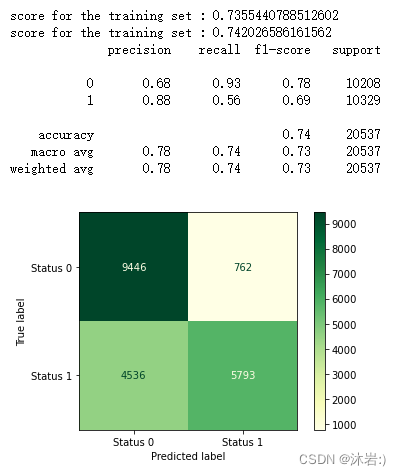
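The per-class numbers in `classification_report` can be read straight off the confusion matrix. For the positive class (Status = 1): precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is their harmonic mean. A pure-Python sketch (`binary_metrics` is a hypothetical helper); the counts below are made up and are not the figures from this model.

```python
def binary_metrics(cm):
    """cm is a 2x2 confusion matrix laid out like sklearn's: [[TN, FP], [FN, TP]]."""
    (tn, fp), (fn, tp) = cm
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / (tn + fp + fn + tp)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


m = binary_metrics([[80, 20], [10, 90]])  # hypothetical counts
print({k: round(v, 3) for k, v in m.items()})
```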

- Logistic regression:

```python
# Logistic regression
lr = LogisticRegression()
lr.fit(X_train, y_train)
predict_test = lr.predict(X_test)

print("score for the training set:", lr.score(X_train, y_train))
print("score for the test set:", lr.score(X_test, y_test))
print(classification_report(y_test, predict_test))

labels = ["Status 0", "Status 1"]
M = confusion_matrix(y_test, predict_test)
disp = ConfusionMatrixDisplay(confusion_matrix=M, display_labels=labels)
disp.plot(cmap=plt.cm.YlGn)
plt.show()
```

- Multilayer perceptron (neural network):

```python
# neural network
model = MLPClassifier(hidden_layer_sizes=(20, 20), learning_rate_init=0.1)
model.fit(X_train, y_train)
predict_test = model.predict(X_test)

print("score for the training set:", model.score(X_train, y_train))
print("score for the test set:", model.score(X_test, y_test))
print(classification_report(y_test, predict_test))

labels = ["Status 0", "Status 1"]
M = confusion_matrix(y_test, predict_test)
disp = ConfusionMatrixDisplay(confusion_matrix=M, display_labels=labels)
disp.plot(cmap=plt.cm.YlGn)
plt.show()
```

After comparing the results, I chose the decision tree model and tuned its hyperparameters:

IV. Model Optimization

```python
# search max_depth = 40, 50, ..., 170 with 3-fold cross-validation
params = {'max_depth': list(range(40, 180, 10))}
grid_search_cv = GridSearchCV(DecisionTreeClassifier(),
                              params,
                              verbose=1,
                              cv=3,
                              n_jobs=-1)
grid_search_cv.fit(X_train, y_train)

DT = grid_search_cv.best_estimator_
predict_test = DT.predict(X_test)
```
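With cv=3, GridSearchCV fits a tree for every max_depth value on each of 3 folds (14 candidates × 3 = 42 fits here), keeps the depth with the best mean validation score, and by default refits it on the whole training set. The pure-Python sketch below shows how indices are partitioned into k contiguous folds, with the same fold sizes as scikit-learn's unshuffled `KFold`; `kfold_indices` is a hypothetical helper, and for classifiers GridSearchCV actually uses stratified folds, which this sketch does not reproduce.

```python
def kfold_indices(n, k):
    """Yield (train_idx, val_idx) for k contiguous folds; the first n % k folds get one extra sample."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size


folds = list(kfold_indices(10, 3))
print([len(val) for _, val in folds])  # [4, 3, 3]
```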

V. Result Visualization

```python
from sklearn.metrics import RocCurveDisplay  # plot_roc_curve was removed in scikit-learn 1.2

print("score for the training set:", DT.score(X_train, y_train))
print("score for the test set:", DT.score(X_test, y_test))
print(classification_report(y_test, predict_test))

labels = ["Status 0", "Status 1"]
M = confusion_matrix(y_test, predict_test)
disp = ConfusionMatrixDisplay(confusion_matrix=M, display_labels=labels)
disp.plot(cmap=plt.cm.YlGn)

RocCurveDisplay.from_estimator(DT, X_test, y_test)
plt.show()
```
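The AUC shown on the ROC plot equals the probability that a randomly chosen Status = 1 sample receives a higher score than a randomly chosen Status = 0 sample, with ties counting half. The pure-Python sketch below implements that pairwise definition; it is O(n_pos × n_neg), fine for illustration but not for the full test set, and the scores are made up. A fully grown decision tree tends to output mostly 0/1 probabilities, so its ROC curve usually has very few points.

```python
def pairwise_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


labels = [0, 0, 1, 1, 0, 1]
scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.9]  # hypothetical predicted probabilities
print(round(pairwise_auc(labels, scores), 4))  # 0.8889
```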

VI. Summary

- The accuracy on the test set is 0.86556, the highest among these models.

- The F1 score is 0.87, which suggests the model keeps precision and recall in balance.

- AUC = 0.87, which means the model separates the two classes well.

- What's more, for banks it is more important to reduce risk, which means the recall of Status = 1 should be as high as possible, and this model achieves that target.