复习整理1

1.groupByKey:

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

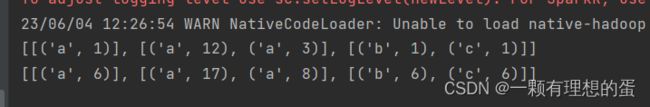

#groupByKey算子:

rdd = sc.parallelize(

[('a', 1), ('a', 12), ('a', 3), ('b', 1), ('c', 1)], 2)

print(rdd.glom().collect())

# 通过groupBy对数据进行分组

# groupBy传入的函数的 意思是: 通过这个函数, 确定按照谁来分组(返回谁即可)

# 分组规则 和SQL是一致的, 也就是相同的在一个组(Hash分组)

rdd3=rdd.groupByKey()

print(rdd3.glom().collect())

rdd4=rdd3.map(lambda x:(x[0],list(x[1])))

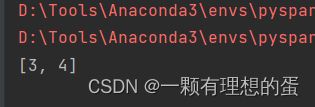

print(rdd4.glom().collect())运行结果:

2.集合运算(交、并)

2.1 join

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd1 = sc.parallelize([(1, "a1"), (1, "a2"), (2, "b"), (3, "c")])

rdd2 = sc.parallelize([(3, "cc"), (3, "ccc"), (4, "d"), (5, "e")])

rdd3 = rdd1.join(rdd2)

print(rdd3.collect())运行结果:

2.2 leftOuterJoin

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd1 = sc.parallelize([(1, "a1"), (1, "a2"), (2, "b"), (3, "c")])

rdd2 = sc.parallelize([(3, "cc"), (3, "ccc"), (4, "d"), (5, "e")])

#rdd3 = rdd1.join(rdd2)

rdd3 = rdd1.leftOuterJoin(rdd2)

print(rdd3.collect())运行结果:

2.3 rightOuterJoin

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd1 = sc.parallelize([(1, "a1"), (1, "a2"), (2, "b"), (3, "c")])

rdd2 = sc.parallelize([(3, "cc"), (3, "ccc"), (4, "d"), (5, "e")])

rdd3 = rdd1.rightOuterJoin(rdd2)

print(rdd3.collect())2.4 intersection

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd1 = sc.parallelize([1, 2, 3, 4])

rdd2 = sc.parallelize([11, 12, 3, 4])

rdd3 = rdd1.intersection(rdd2)

print(rdd3.collect())

运行结果:

3.4groupBy(未找到)

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

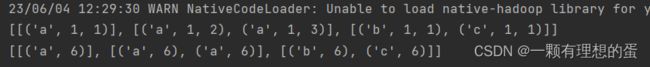

rdd = sc.parallelize(

[('a', 1), ('a', 12), ('a', 3), ('b', 1), ('c', 1)], 2)

# 通过groupBy对数据进行分组

# groupBy传入的函数的 意思是: 通过这个函数, 确定按照谁来分组(返回谁即可)

# 分组规则 和SQL是一致的, 也就是相同的在一个组(Hash分组)

result = rdd.groupBy(lambda t: t[0])

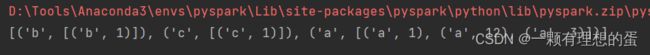

print(result.map(lambda t:(t[0], list(t[1]))).collect())运行结果:

4.reduceByKey

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd1 = sc.textFile("../data/words.txt", 3) #['sun wu kong', 'zhu ba jie', 'sun ba jie']

print(rdd1.collect())

rdd2 = rdd1.flatMap(lambda x: x.split(" "))#['sun', 'wu', 'kong', 'zhu', 'ba', 'jie', 'sun', 'ba', 'jie']

print(rdd2.collect())

rdd3 = rdd2.map(lambda x : (x,1))

#[('sun', 1), ('wu', 1), ('kong', 1), ('zhu', 1), ('ba', 1), ('jie', 1), ('sun', 1), ('ba', 1), ('jie', 1)]

print(rdd3.collect())

rdd4 = rdd3.reduceByKey(lambda x1,x2: x1+x2)

#[('sun', 2), ('kong', 1), ('wu', 1), ('zhu', 1), ('ba', 2), ('jie', 2)]

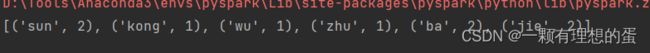

print(rdd4.collect())运行结果:

5.reduce(未找到)

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd = sc.parallelize(range(1, 10))

rdd_res=rdd.reduce(lambda a, b: a + b)

print("reduce结果为:",rdd_res)

运行结果:从1加到9

6.map

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

def fun(x):

print(x,end="\n")

return x*2

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd1 = sc.parallelize([1, 2, 3, 4, 5, 6, 7, 8, 9], 3)

rdd3 = rdd1.map(fun)

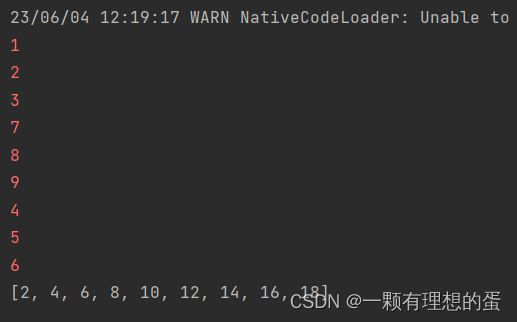

print(rdd3.collect())运行结果:

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

def fun(x):

print(x,end="\n")

return x*2

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd1 = sc.parallelize([("a",1),("a",12),("a",3),("b",1),("c",1),],3)

print(rdd1.glom().collect())

rdd2 = rdd1.map(lambda x : (x[0], x[1] + 5))

print(rdd2.glom().collect())运行结果:

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

def fun(x):

print(x,end="\n")

return x*2

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd1 = sc.parallelize([("a",1,1),("a",1,2),("a",1,3),("b",1,1),("c",1,1),],3)

print(rdd1.glom().collect())

rdd2 = rdd1.map(lambda x : (x[0], x[1] + 5))

print(rdd2.glom().collect())运行结果:

7.mapValues(上课讲了但是不在提纲中)

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

def fun(x):

print(x,end="\n")

return x*2

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd1 = sc.parallelize([("a",(1,1)),("a",1,2),("a",1,3),("b",1,1),("c",1,1),],3)

print(rdd1.glom().collect())

rdd3 = rdd1.mapValues(lambda x : x*5)

print(rdd3.glom().collect())运行结果:

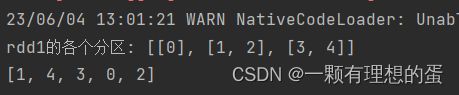

8.takeOrdered

takeOrdered()可以按照升序或降序的方式获取元素,并返回排序后的结果。

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

def fun(x):

print(x,end="\n")

return x*2

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd1 = sc.parallelize([3,-5,8,-2,4,1],3)

print("rdd1的各个分区:",rdd1.glom().collect())

result = rdd1.takeOrdered(3, lambda x: x*x)

print(result)运行结果:

9.fold

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

def fun(x):

print(x,end="\n")

return x*2

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd1 = sc.parallelize(range(1, 10), 9)

print("rdd1的各个分区:",rdd1.glom().collect())

result = rdd1.fold(10, lambda a,b:a+b)

print(result)运行结果:

10.takeSample

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

# def fun(x):

# print(x,end="\n")

# return x*2

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd1 = sc.parallelize(range(5),3)

print("rdd1的各个分区:", rdd1.glom().collect())

result = rdd1.takeSample(False ,10)

print(result)运行结果:

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

# def fun(x):

# print(x,end="\n")

# return x*2

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd1 = sc.parallelize(range(5),3)

print("rdd1的各个分区:", rdd1.glom().collect())

result = rdd1.takeSample(False ,3)

print(result)运行结果:

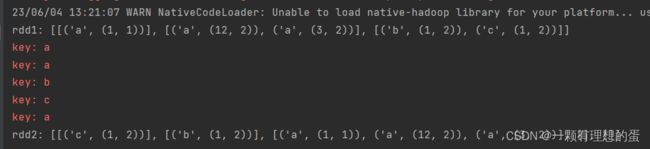

11.partitionBy

from pyspark import SparkConf, SparkContext

import os

os.environ['PYSPARK_PYTHON'] = r'D:\Tools\Anaconda3\envs\pyspark\python.exe'

# def fun(x):

# print(x,end="\n")

# return x*2

def fun(key):

print("key:",key)

if "a"==key:

return 2

elif "b"==key:

return 1

else:

return 0

if __name__ == '__main__':

conf = SparkConf().setAppName("test").setMaster("local[*]")

sc = SparkContext(conf=conf)

rdd1 = sc.parallelize([("a",(1,1)),("a",(12,2)),("a",(3,2)),("b",(1,2)),("c",(1,2)),],3)

print("rdd1:",rdd1.glom().collect())

rdd2 = rdd1.partitionBy(5, partitionFunc=fun)

print("rdd2:",rdd2.glom().collect())运行结果: