LiangGaRy Study Notes, Day 21

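1. Introduction to LVM

1.1 What is LVM

On a production server, what should you do when the partition that stores data runs out of disk space?

- Add a disk -> this meets the immediate need

- Add another disk later -> this also works

- The problem: each time, the data has to be copied to the new disk, which is slow

This is where LVM comes in: it supports online, dynamic expansion.

- RAID: provides redundancy and data safety -> and can improve speed

- LVM: its main strength is flexible capacity expansion

- Linux partitions -> the partition size is fixed

- Adjusting the file system size dynamically without repartitioning -> this is the need LVM addresses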

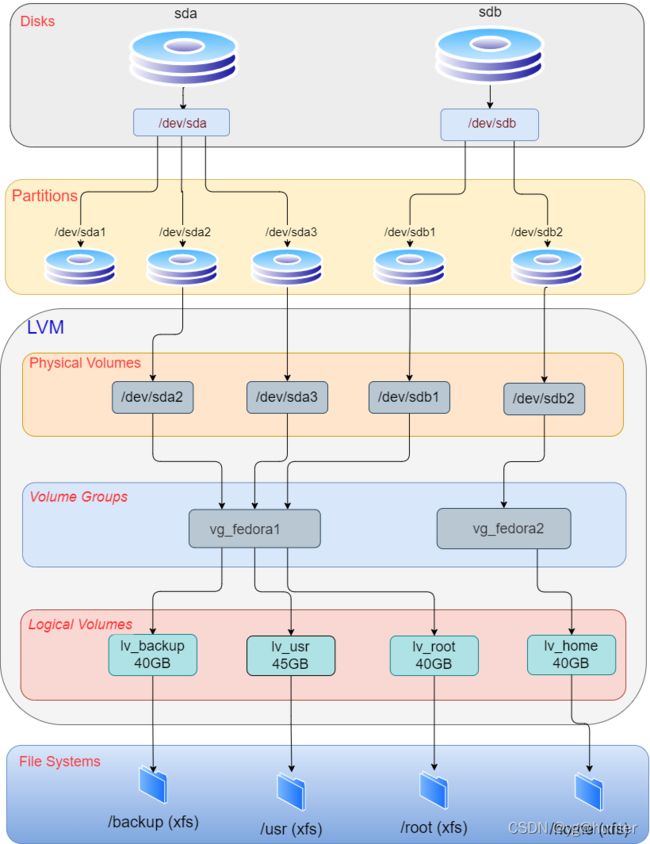

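LVM (Logical Volume Manager) is a logical volume management facility provided by the Linux kernel, consisting of a kernel driver and user-space tools. It adds a logical layer on top of disk partitions, which makes storage devices flexible and convenient to manage.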

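1.2 How LVM works

Basic components of LVM

- PE: physical extent, the smallest allocation unit in LVM (4 MiB by default)

- PV: physical volume

- VG: volume group, built on top of PVs

- LV: logical volume, created on top of a VG

- This is where files are actually stored

The storage underneath LVM can be a disk partition, a whole disk, a RAID array, or a SAN disk.

- Smallest storage units:

- Disk: sector -> 512 bytes

- File system: block -> 4 KiB

- LVM: PE -> 4 MiB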
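To make the relationship between these three units concrete, the short sketch below works out how many sectors fit in a block and how many blocks fit in a PE. It uses only the sizes listed above (512-byte sectors, 4 KiB blocks, 4 MiB PEs); the variable names are made up for illustration.

```shell
# Sizes in bytes, taken from the list above.
sector_bytes=512
block_bytes=$((4 * 1024))
pe_bytes=$((4 * 1024 * 1024))

# How the units nest inside each other.
sectors_per_block=$((block_bytes / sector_bytes))
blocks_per_pe=$((pe_bytes / block_bytes))
sectors_per_pe=$((pe_bytes / sector_bytes))

echo "sectors per block: $sectors_per_block"   # 8
echo "blocks per PE:     $blocks_per_pe"       # 1024
echo "sectors per PE:    $sectors_per_pe"      # 8192
```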

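Steps to create LVM storage:

- Initialize a physical disk (or partition) as a PV

- The PV is divided into PEs

- Add one or more PVs to the same VG

- Create an LV in the VG

- The LV is allocated from PEs

- Once the LV exists, create a file system on it and mount it

- Finally, an LV can be extended or reduced

- In both cases this amounts to adding or removing PEs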
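Because an LV always consists of whole PEs, a requested size is rounded up to the next PE boundary. The sketch below shows that arithmetic; the function names `extents_needed` and `rounded_size_mib` are hypothetical helpers, not LVM commands, and sizes are in MiB.

```shell
# extents_needed SIZE_MIB PE_MIB
# Prints how many whole PEs are needed to hold SIZE_MIB (rounds up).
extents_needed() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

# rounded_size_mib SIZE_MIB PE_MIB
# Prints the size after rounding up to a PE boundary.
rounded_size_mib() {
    echo $(( $(extents_needed "$1" "$2") * $2 ))
}

extents_needed 30 4      # prints 8
rounded_size_mib 30 4    # prints 32
extents_needed 16 4      # prints 4
```

This matches the behavior seen in the extension experiment in these notes, where a request for +30M with 4 MiB PEs is rounded to 32 MiB.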

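1.3 How LVM is implemented

Advantages of LVM

- Flexible management

- Multiple disks can be combined into one large logical disk

- A logical volume (LV) can span many disks

- You can start with a small LV and resize it dynamically when space runs short

- LVs and VGs can be created, removed, and resized online; the file system on the LV must then be resized to match

- Snapshots are supported and can be used to back up a file system

Creating and using LVM

- Add a disk

- Partition it: 4 primary partitions

- Create the PVs

- Create the VG

- Create the LV

- Mount and use it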
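The steps above can be sketched as a small dry-run helper that only prints the commands in order, so it is safe to try on any machine. The function name `plan_lvm` is hypothetical; the device, VG, LV, and mount point names are the ones used in the experiments in these notes, and XFS is assumed as the file system.

```shell
# plan_lvm PV VG LV SIZE MOUNTPOINT
# Prints (does not run) the commands for the steps above, in order.
plan_lvm() {
    pv=$1 vg=$2 lv=$3 size=$4 mnt=$5
    echo "pvcreate $pv"                  # partition -> physical volume
    echo "vgcreate $vg $pv"              # physical volume -> volume group
    echo "lvcreate -n $lv -L $size $vg"  # carve a logical volume out of the VG
    echo "mkfs.xfs /dev/$vg/$lv"         # put a file system on the LV
    echo "mkdir -p $mnt"                 # create the mount point
    echo "mount /dev/$vg/$lv $mnt"       # mount and use it
}

plan_lvm /dev/sdb5 vg01 lv01 16M /lv01
```

Reviewing the printed plan before running anything as root is a cheap way to catch a wrong device name.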

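Combining RAID and LVM

- LVM is a software volume-management layer, while RAID is a way of managing disks.

- For important data, use RAID so that a physical disk failure does not interrupt service

- Use LVM to manage volumes well and make better use of disk space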

2. LVM commands

2.1 Command summary

The commands follow a regular naming pattern, so they can be summarized in one table:

| Action | PV management | VG management | LV management |
|---|---|---|---|
| scan | pvscan | vgscan | lvscan |
| create | pvcreate | vgcreate | lvcreate |
| display | pvdisplay | vgdisplay | lvdisplay |
| remove | pvremove | vgremove | lvremove |
| extend | (no pvextend; use pvresize) | vgextend | lvextend |
| reduce | (no pvreduce; use pvresize) | vgreduce | lvreduce |

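Commands for a brief summary of each layer

- pvs: show physical volume information

- vgs: show volume group information

- lvs: show logical volume information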
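The summary commands also accept reporting options (`-o`, `--units`, `--noheadings`, `--nosuffix`) that make their output easy to process in a script. The sketch below is one possible use: it flags volume groups that are running low on free space. The function name `low_free_vgs` and the sample data are hypothetical; the input is assumed to be two columns, VG name and free space in MiB.

```shell
# low_free_vgs THRESHOLD_MIB
# Reads "VG_NAME FREE_MIB" lines on stdin and prints the names of
# volume groups whose free space is below THRESHOLD_MIB.
low_free_vgs() {
    awk -v min="$1" 'NF >= 2 && ($2 + 0) < (min + 0) { print $1 }'
}

# On a real host the input would come from LVM itself (needs root):
#   vgs --noheadings --units m --nosuffix -o vg_name,vg_free | low_free_vgs 100

# Hypothetical sample data, for illustration only:
printf '%s\n' '  centos 0' '  vg01 2008.00' '  vg02 2032.00' | low_free_vgs 100
```

With the sample data, only `centos` is printed, since it has no free space left.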

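2.2 The vgcreate command

Purpose: create an LVM volume group

Syntax: vgcreate [options] VG_name PV_path...

Options:

- -l: maximum number of logical volumes allowed in the volume group

- -p: maximum number of physical volumes that can be added to the volume group

- -s: PE size used by the physical volumes in the volume group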
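The PE size chosen with -s determines how many PEs a PV provides. A rough estimate is sketched below, assuming that about 1 MiB at the start of the PV is reserved for LVM metadata and alignment; that figure is an assumption and can differ between systems. The function name `total_pe` is hypothetical.

```shell
# total_pe PV_MIB PE_MIB [RESERVED_MIB]
# Prints the number of whole PEs that fit on a PV of PV_MIB,
# after subtracting RESERVED_MIB (assumed 1 MiB if not given).
total_pe() {
    echo $(( ($1 - ${3:-1}) / $2 ))
}

total_pe 2048 4     # prints 511
total_pe 2048 16    # prints 127
```

These numbers agree with the vgdisplay output for the 2 GiB partitions in the experiments in these notes (511 PEs at 4 MiB, 127 PEs at 16 MiB). A larger PE size means fewer extents to track, at the cost of coarser rounding when sizing LVs.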

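2.3 The lvcreate command

Purpose: create an LVM logical volume

Syntax: lvcreate [options] VG_name

Options:

- -L: size of the logical volume, given directly

- -l (lowercase L): number of PEs; the size is that number times the PE size

- -n: name of the logical volume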

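2.4 The lvextend command

Purpose: grow an LVM logical volume

Syntax: lvextend [options] LV_path

Options:

- -L: target size (with a leading +, the amount to add)

- -l (lowercase L): size as a number of PEs; the capacity is that number times the PE size

- -r: resize the file system together with the LV, so no separate file system grow step is needed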

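2.5 The lvreduce command

Purpose: shrink a logical volume. Be especially careful when shrinking, because any data in the removed part is lost.

Syntax: lvreduce [options] [LV name | LV path]

- -r: resize the file system on the logical volume as well, using fsadm

- -f: force the reduction without prompting, even if it may cause data loss

- -L: target size (with a leading -, the amount to remove)

- -l (lowercase L): size as a number of PEs; the capacity is that number times the PE size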
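Since shrinking an LV below the size of its file system destroys data, the file system has to be shrunk first. The sketch below prints one common order of operations for ext4, which must be unmounted and checked before it can be shrunk, and refuses XFS, which has no shrink operation. The function name `shrink_plan` is hypothetical and the helper only prints commands; it does not run them.

```shell
# shrink_plan FSTYPE LV_PATH MOUNTPOINT NEW_SIZE
# Prints (does not run) a shrink sequence for ext4; refuses xfs.
shrink_plan() {
    fstype=$1 lv=$2 mnt=$3 size=$4
    case $fstype in
        ext4)
            echo "umount $mnt"            # ext4 cannot be shrunk while mounted
            echo "e2fsck -f $lv"          # resize2fs requires a clean check first
            echo "resize2fs $lv $size"    # shrink the file system
            echo "lvreduce -L $size $lv"  # then shrink the LV to match
            echo "mount $lv $mnt"
            ;;
        xfs)
            echo "xfs cannot be shrunk; back up, recreate a smaller LV, restore" >&2
            return 1
            ;;
        *)
            echo "unsupported file system: $fstype" >&2
            return 1
            ;;
    esac
}

shrink_plan ext4 /dev/vg01/lv01 /lv01 40M
```

On a real system, `lvreduce -r` performs the file system step through fsadm; the explicit sequence is shown here only to make the ordering visible.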

3. LVM experiments

3.1 Creating LVM storage

This experiment uses a single disk.

Procedure

- Add a disk: sdb

- Create four partitions

# Check the disk

[root@Node1 ~]# ls /dev/sdb

/dev/sdb

# Partition the disk

[root@Node1 ~]# fdisk /dev/sdb

..........

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1):

First sector (2048-41943039, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-41943039, default 41943039): +2G

Partition 1 of type Linux and of size 2 GiB is set

# Create more partitions in the same way; the result is shown below

Command (m for help): n

Partition type:

p primary (1 primary, 1 extended, 2 free)

l logical (numbered from 5)

Select (default p): l

Adding logical partition 5

First sector (4198400-41943039, default 4198400):

Using default value 4198400

Last sector, +sectors or +size{K,M,G} (4198400-41943039, default 41943039): +2G

Partition 5 of type Linux and of size 2 GiB is set

Command (m for help): P

............

Device Boot Start End Blocks Id System

/dev/sdb1 2048 4196351 2097152 83 Linux

/dev/sdb2 4196352 41943039 18873344 5 Extended

/dev/sdb5 4198400 8392703 2097152 83 Linux

/dev/sdb6 8394752 12589055 2097152 83 Linux

/dev/sdb7 12591104 16785407 2097152 83 Linux

/dev/sdb8 16787456 20981759 2097152 83 Linux

/dev/sdb9 20983808 25178111 2097152 83 Linux

/dev/sdb10 25180160 29374463 2097152 83 Linux

# Save and exit

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

- Create the PVs (physical volumes)

- Use the pvcreate command

# Create the physical volumes

[root@Node1 ~]# pvcreate /dev/sdb{5..8}

Physical volume "/dev/sdb5" successfully created.

Physical volume "/dev/sdb6" successfully created.

Physical volume "/dev/sdb7" successfully created.

Physical volume "/dev/sdb8" successfully created.

# Check the physical volumes

[root@Node1 ~]# pvs

PV VG Fmt Attr PSize PFree

/dev/sda2 centos lvm2 a-- <19.51g 0

/dev/sdb5 lvm2 --- 2.00g 2.00g

/dev/sdb6 lvm2 --- 2.00g 2.00g

/dev/sdb7 lvm2 --- 2.00g 2.00g

/dev/sdb8 lvm2 --- 2.00g 2.00g

# Show more detailed information

[root@Node1 ~]# pvdisplay

--- Physical volume ---

PV Name /dev/sda2

VG Name centos

PV Size 19.51 GiB / not usable 3.00 MiB

Allocatable yes (but full)

PE Size 4.00 MiB

Total PE 4994

Free PE 0

Allocated PE 4994

PV UUID 0ObL2f-Q3hu-HEGq-ZahS-NCyb-cLcb-HYy5M7

............

- Create the volume group

- Use the vgcreate command

- -s: sets the PE size

# Create the volume group

# vgcreate + VG name + PV (partition)

[root@Node1 ~]# vgcreate vg01 /dev/sdb5

Volume group "vg01" successfully created

# Check the volume groups again

[root@Node1 ~]# vgs

VG #PV #LV #SN Attr VSize VFree

centos 1 2 0 wz--n- <19.51g 0

vg01 1 0 0 wz--n- <2.00g <2.00g

[root@Node1 ~]# vgdisplay

--- Volume group ---

VG Name vg01

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 1

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 0

Open LV 0

Max PV 0

Cur PV 1

Act PV 1

VG Size <2.00 GiB

PE Size 4.00 MiB

Total PE 511

Alloc PE / Size 0 / 0

Free PE / Size 511 / <2.00 GiB

VG UUID ZkGPzV-0Y4q-uS03-eaEU-PL6R-eNFj-7h9F2x

............

# Create vg02 with a PE size of 16M

[root@Node1 ~]# vgcreate -s 16M vg02 /dev/sdb6

Volume group "vg02" successfully created

# Show information about vg02

[root@Node1 ~]# vgdisplay vg02

--- Volume group ---

VG Name vg02

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 1

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 0

Open LV 0

Max PV 0

Cur PV 1

Act PV 1

VG Size 1.98 GiB

PE Size 16.00 MiB

Total PE 127

Alloc PE / Size 0 / 0

Free PE / Size 127 / 1.98 GiB

VG UUID K4dEnG-Bfb3-8SlF-SoXW-e9lC-j9YY-6TpKqZ

- Create the logical volumes

- Use the lvcreate command

- -n: name of the logical volume

- -L: size of the LV (the default unit is MiB)

- -l (lowercase L): number of PEs

[root@Node1 ~]# lvcreate -n lv01 -L 16M vg01

Logical volume "lv01" created.

# Create a second one, lv02

[root@Node1 ~]# lvcreate -n lv02 -l 4 vg01

Logical volume "lv02" created.

# Check the result

[root@Node1 ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

root centos -wi-ao---- <18.51g

swap centos -wi-ao---- 1.00g

lv01 vg01 -wi-a----- 16.00m

lv02 vg01 -wi-a----- 16.00m

[root@Node1 ~]# lvdisplay

--- Logical volume ---

LV Path /dev/vg01/lv01

LV Name lv01

VG Name vg01

LV UUID jn8d0D-9OOr-tXkf-hnha-9eYU-O7Oa-jNJgRK

LV Write Access read/write

LV Creation host, time Node1, 2023-07-07 15:19:20 +0800

LV Status available

# open 0

LV Size 16.00 MiB

Current LE 4

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2

- Finally, mount and use it

- Create a directory -> then mount the LV on it

# Create the mount point

[root@Node1 ~]# mkdir /lv01

# Create the file system

[root@Node1 ~]# mkfs.xfs /dev/vg01/lv01

# Mount it

[root@Node1 ~]# mount /dev/vg01/lv01 /lv01/

# Finally, add an entry to /etc/fstab so it is mounted automatically at boot

[root@Node1 ~]# echo "/dev/vg01/lv01 /lv01 xfs defaults 0 0" >> /etc/fstab
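An /etc/fstab entry has six fields: device, mount point, file system type, mount options, dump flag, and fsck pass number. Leaving out the mount point is an easy mistake, so a small helper that builds the line can be useful. The function name `fstab_line` is hypothetical; this is a sketch, not a standard tool.

```shell
# fstab_line DEVICE MOUNTPOINT FSTYPE [OPTIONS] [DUMP] [PASS]
# Prints one six-field /etc/fstab entry; the last three fields
# default to "defaults", 0, and 0.
fstab_line() {
    if [ "$#" -lt 3 ]; then
        echo "usage: fstab_line DEVICE MOUNTPOINT FSTYPE [OPTIONS] [DUMP] [PASS]" >&2
        return 1
    fi
    echo "$1 $2 $3 ${4:-defaults} ${5:-0} ${6:-0}"
}

fstab_line /dev/vg01/lv01 /lv01 xfs
# prints: /dev/vg01/lv01 /lv01 xfs defaults 0 0
```

After appending an entry, running `mount -a` is a quick way to find out whether it is valid before the next reboot.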

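3.2 Extending LVM storage

When disk space runs short, the volume has to be extended.

- Check the available space before extending

- Use the lvextend command to extend

- -L: how much capacity to add

# Check the volume groups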

[root@Node1 ~]# vgs

VG #PV #LV #SN Attr VSize VFree

centos 1 2 0 wz--n- <19.51g 0

vg01 1 2 0 wz--n- <2.00g 1.96g

vg02 1 0 0 wz--n- 1.98g 1.98g

# Then extend with lvextend

[root@Node1 ~]# lvextend -L +30M /dev/vg01/lv01

Rounding size to boundary between physical extents: 32.00 MiB.

Size of logical volume vg01/lv01 changed from 16.00 MiB (4 extents) to 48.00 MiB (12 extents).

Logical volume vg01/lv01 successfully resized.

# Check the size of the logical volume

[root@Node1 ~]# lvs /dev/vg01/lv01

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

lv01 vg01 -wi-ao---- 48.00m

# Then check with df -> the mounted file system clearly has not picked up the new capacity yet

[root@Node1 ~]# df -Th | grep lv01

/dev/mapper/vg01-lv01 xfs 13M 896K 12M 7% /lv01

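The reason is that the file system itself still has to be grown:

- ext4: use resize2fs + the LV path

- xfs: use xfs_growfs + the mount point (the transcript below passes the LV path of the mounted file system)

# Growing the file system is what makes the new space visible to the system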

[root@Node1 ~]# xfs_growfs /dev/vg01/lv01

meta-data=/dev/mapper/vg01-lv01 isize=512 agcount=1, agsize=4096 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0 spinodes=0

data = bsize=4096 blocks=4096, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal bsize=4096 blocks=855, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

data blocks changed from 4096 to 12288

# Check once more -> the extension succeeded

[root@Node1 ~]# df -Th | grep lv01

/dev/mapper/vg01-lv01 xfs 45M 992K 44M 3% /lv01
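The choice of grow command depends on the file system type, which can be sketched as a small lookup. The function name `grow_fs_cmd` is hypothetical, and it only prints the command rather than running it. It follows the documented argument conventions: resize2fs takes the device, xfs_growfs takes the mount point.

```shell
# grow_fs_cmd FSTYPE LV_PATH MOUNTPOINT
# Prints the command that grows the file system to fill its LV.
grow_fs_cmd() {
    case $1 in
        xfs)            echo "xfs_growfs $3" ;;   # XFS grows online, by mount point
        ext2|ext3|ext4) echo "resize2fs $2" ;;    # ext* grows by device path
        *)              echo "no grow command known for: $1" >&2; return 1 ;;
    esac
}

grow_fs_cmd xfs  /dev/vg01/lv01 /lv01    # prints: xfs_growfs /lv01
grow_fs_cmd ext4 /dev/vg01/lv01 /lv01    # prints: resize2fs /dev/vg01/lv01
```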

To extend straight to 80M without a separate file system grow step:

- Use the -r option of lvextend

[root@Node1 ~]# lvextend -L 80M -r /dev/vg01/lv01

Size of logical volume vg01/lv01 changed from 48.00 MiB (12 extents) to 80.00 MiB (20 extents).

Logical volume vg01/lv01 successfully resized.

meta-data=/dev/mapper/vg01-lv01 isize=512 agcount=3, agsize=4096 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0 spinodes=0

data = bsize=4096 blocks=12288, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal bsize=4096 blocks=855, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

data blocks changed from 12288 to 20480

[root@Node1 ~]# df -Th | grep lv01

/dev/mapper/vg01-lv01 xfs 77M 1.1M 76M 2% /lv01

A VG can be extended as well

- The vgextend command does this

# First check the VG capacity

[root@Node1 ~]# vgs

VG #PV #LV #SN Attr VSize VFree

centos 1 2 0 wz--n- <19.51g 0

vg01 1 2 0 wz--n- <2.00g 1.90g

vg02 1 0 0 wz--n- 1.98g 1.98g

# Then extend -> pick one of the PVs created earlier

[root@Node1 ~]# vgextend vg01 /dev/sdb7

Volume group "vg01" successfully extended

# Check again

[root@Node1 ~]# vgs vg01

VG #PV #LV #SN Attr VSize VFree

vg01 2 2 0 wz--n- 3.99g <3.90g

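3.3 Shrinking LVM storage

In real production environments, shrinking is rarely done.

- Data is priceless, so few people take the risk

As a rule, shrinking a disk device is avoided because it risks data loss. LVM nevertheless provides a way to shrink an LV. XFS, however, does not support shrinking the file system.

The experiment:

# Try giving a size directly, the same way as when extending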

[root@Node1 ~]# lvreduce -L -20M /dev/vg01/lv01

WARNING: Reducing active and open logical volume to 60.00 MiB.

THIS MAY DESTROY YOUR DATA (filesystem etc.)

Do you really want to reduce vg01/lv01? [y/n]: y

Size of logical volume vg01/lv01 changed from 80.00 MiB (20 extents) to 60.00 MiB (15 extents).

Logical volume vg01/lv01 successfully resized.

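# df still reports the old size: the XFS file system was not shrunk, so it is now larger than the LV underneath it and its data is at risk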

[root@Node1 ~]# df -Th | grep lv01

/dev/mapper/vg01-lv01 xfs 77M 1.1M 76M 2% /lv01

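# Retrying with -r changes nothing either (note that this command is lvextend, which rejects a target smaller than the current size)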

[root@Node1 ~]# lvextend -L 10M -r /dev/vg01/lv01

Rounding size to boundary between physical extents: 12.00 MiB.

New size given (3 extents) not larger than existing size (15 extents)

[root@Node1 ~]# df -Th | grep lv01

/dev/mapper/vg01-lv01 xfs 77M 1.1M 76M 2% /lv01

Create some data

- Then try shrinking again

# Create data

[root@Node1 ~]# cd /lv01/

[root@Node1 lv01]# touch aa{1..3}.txt

[root@Node1 lv01]# cd

# Shrink experiment -> try reducing the VG first -> this fails because the PV is still in use

[root@Node1 ~]# vgreduce vg01 /dev/sdb5

Physical volume "/dev/sdb5" still in use

# So first move the data off the PV with pvmove

[root@Node1 ~]# pvmove /dev/sdb5

/dev/sdb5: Moved: 21.05%

/dev/sdb5: Moved: 78.95%

/dev/sdb5: Moved: 100.00%

# Then remove /dev/sdb5 from the VG

[root@Node1 ~]# vgreduce vg01 /dev/sdb5

Removed "/dev/sdb5" from volume group "vg01"

# Finally, check the result

[root@Node1 ~]# pvs

PV VG Fmt Attr PSize PFree

/dev/sda2 centos lvm2 a-- <19.51g 0

/dev/sdb5 lvm2 --- 2.00g 2.00g

/dev/sdb6 vg02 lvm2 a-- 1.98g 1.98g

/dev/sdb7 vg01 lvm2 a-- <2.00g 1.92g

/dev/sdb8 lvm2 --- 2.00g 2.00g

# Check disk space usage

[root@Node1 ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 19G 1.2G 18G 6% /

devtmpfs devtmpfs 979M 0 979M 0% /dev

tmpfs tmpfs 991M 0 991M 0% /dev/shm

tmpfs tmpfs 991M 9.5M 981M 1% /run

tmpfs tmpfs 991M 0 991M 0% /sys/fs/cgroup

/dev/sda1 xfs 497M 126M 372M 26% /boot

tmpfs tmpfs 199M 0 199M 0% /run/user/0

/dev/mapper/vg01-lv01 xfs 77M 1.1M 76M 2% /lv01

3.4 Removing LVM storage

The creation flow is:

- pvcreate -> vgcreate -> lvcreate -> mkfs.xfs -> mount -> normal use

The removal flow is:

- umount -> lvremove -> vgremove -> pvremove

# Unmount the file system

[root@Node1 ~]# umount /lv01/

# Remove the logical volume

[root@Node1 ~]# lvremove /dev/vg01/lv01

Do you really want to remove active logical volume vg01/lv01? [y/n]: y

Logical volume "lv01" successfully removed

# Then remove the volume group

[root@Node1 ~]# vgremove vg01

Do you really want to remove volume group "vg01" containing 1 logical volumes? [y/n]: y

Do you really want to remove active logical volume vg01/lv02? [y/n]: y

Logical volume "lv02" successfully removed

Volume group "vg01" successfully removed

# Finally, remove the physical volumes

[root@Node1 ~]# pvremove /dev/sdb{5..8}

PV /dev/sdb6 is used by VG vg02 so please use vgreduce first.

(If you are certain you need pvremove, then confirm by using --force twice.)

/dev/sdb6: physical volume label not removed.

Labels on physical volume "/dev/sdb5" successfully wiped.

Labels on physical volume "/dev/sdb7" successfully wiped.

Labels on physical volume "/dev/sdb8" successfully wiped.

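3.5 Extending the company mail server with SSM

- Install the tool: SSM -> system-storage-manager

- Install the package first: yum -y install system-storage-manager

- Check the server's disks

- ssm list dev

- Check the storage pools

- ssm list pool -> shows the state of the LVM storage pools

- After reviewing these, the extension can be planned

# Install the ssm tool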

[root@Node1 ~]# yum -y install system-storage-manager

# Show disk information

[root@Node1 ~]# ssm list dev

---------------------------------------------------------------

Device Free Used Total Pool Mount point

---------------------------------------------------------------

/dev/sda 20.00 GB PARTITIONED

/dev/sda1 500.00 MB /boot

/dev/sda2 0.00 KB 19.51 GB 19.51 GB centos

/dev/sdb 20.00 GB

/dev/sdb1 2.00 GB

/dev/sdb10 2.00 GB

/dev/sdb2 1.00 KB

/dev/sdb5 2.00 GB

/dev/sdb6 1.98 GB 0.00 KB 2.00 GB vg02

/dev/sdb7 2.00 GB

/dev/sdb8 2.00 GB

/dev/sdb9 2.00 GB

/dev/sdc 0.00 KB 19.98 GB 20.00 GB data_lv

/dev/sdd 15.97 GB 4.02 GB 20.00 GB data_lv

# Show LVM pool information

[root@Node1 ~]# ssm list pool

----------------------------------------------------

Pool Type Devices Free Used Total

----------------------------------------------------

centos lvm 1 0.00 KB 19.51 GB 19.51 GB

data_lv lvm 2 15.97 GB 24.00 GB 39.97 GB

vg02 lvm 1 1.98 GB 0.00 KB 1.98 GB

----------------------------------------------------

# At this point the extension can be planned