sklearn上机笔记3:数据拆分的sklearn实现和决策树的生成

目录

数据拆分的sklearn实现

一、拆分为训练集与测试集

1.简单交叉验证:数据一分为二,结果具有偶然性

2.S折交叉验证和留一交叉验证

二、将拆分与评价合并执行

三、同时使用多个评价指标

四、使用交叉验证后的模型进行预测

sklearn实现决策树

数据拆分的sklearn实现

一、拆分为训练集与测试集

训练集:用来训练模型

测试集:用于对最终对学习方法的评估

1.简单交叉验证:数据一分为二,结果具有偶然性

**sklearn.model_selection.train_test_split**

* (arrays:等长度的需要拆分的数据对象,对于有监督类模型,x和y需要按相同标准同时进行拆分;

* test_size=0.25:float,int,None用于验证模型的样本比例,范围在0~1之间;

* train_state=None:随机种子;

* shuffle=True:是否在拆分前对样本做随机排序;

* stratify=None:array—like or None,是否按指定类别标签对数据做分层拆分)

导入boston数据集

#忽略警报信息 import warnings warnings.filterwarnings("ignore") #导入boston数据集 from sklearn.datasets import load_boston boston = load_boston()

#导入训练集与测试集拆分模块 from sklearn.model_selection import train_test_split x_train,x_test,y_train,y_test=train_test_split(boston.data,boston.target,test_size=0.3,random_state=666) len(x_train),len(x_test),len(y_train),len(y_test)

2.S折交叉验证和留一交叉验证

s折交叉验证:首先随机地将已给数据切分为s个互不相交的大小相同的子集,然后利用s-1个子集的数据训练模型,利用余下的子集测试模型;将这一过程对可能的s种选择重复进行。最后选择s次评测中平均测试误差最小的模型

留一交叉验证:s=n。往往在数据缺乏的情况下使用。

二、将拆分与评价合并执行

sklearn.model_selection.cross_val_score(

* estimator:用于拟合数据的估计器对象名称

* x:array-like,用于拟合模型的数据阵

* groups= None:array-like,形如(n_samples,),样本拆分时使用的分组标签

* scoring = None:string,callable or None,模型评分的计算方法

* cv = None:int,设定交互验证时的拆分策略(None,使用默认的3组拆分;integer,设定具体的拆分组数;object/iterable 用于设定拆分)

* n_jobs = 1,verbose = 0,fit_params = None

* pre_dispatch = '2 * n_jobs')

#导入线性回归模型 from sklearn.linear_model import LinearRegression reg=LinearRegression() scores = cross_val_score(reg,boston.data,boston.target,cv=10) scores

#求平均值和方差 scores.mean(),scores.std()

score = cross_val_score(reg,boston.data,boston.target,scoring='explained_variance',cv=10) scores

随机排序后

对数据进行随机重排,保证拆分的均匀性

#使用numpy进行随机重排 import numpy as np X,y=boston.data,boston.target indices = np.arange(y.shape[0]) np.random.shuffle(indices) X,y = X[indices],y[indices]reg = LinearRegression() scores=cross_val_score(reg,X,y,cv = 10) scores.mean(),scores.std()

三、同时使用多个评价指标

sklearn.model_selection.cross_validate(

* estimator:用于拟合数据的估计器对象名称

* x:array-like,用于拟合模型的数据阵

* **y = None:array-like,有监督模型使用的因变量**

* groups= None:array-like,形如(n_samples,),样本拆分时使用的分组标签

* scoring = None:string,callable,**list/tuple,dict** or None,模型评分的计算方法,**多估计指标时使用list/dict等方式提供**

* cv = None:int,设定交互验证时的拆分策略(None,使用默认的3组拆分;integer,设定具体的拆分组数;object/iterable 用于设定拆分)

* n_jobs = 1,verbose = 0,fit_params = None

* pre_dispatch = '2 * n_jobs'

* **return_train_score = True:boolean,是否返回训练集评分**(返回:每轮模型对应评分的字典,shape=(n_splits)))

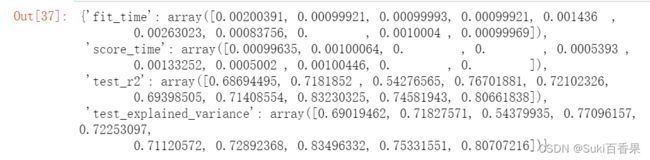

from sklearn.model_selection import cross_validate scoring = ['r2','explained_variance'] scores= cross_validate(reg,X,y,cv = 10,scoring=scoring,return_train_score= False) scores

scores['test_r2'].mean()

四、使用交叉验证后的模型进行预测

sklearn.model_selection.cross_val_predict(

estimator,X,y=None,groups = None,cv = None,n_iobs = 1,verbose = 0,fit_params = None,pre_dispatch = '2 * n_jobs',method = 'predict_proba'时,各列按照升序对应各个类别)返回:ndarray,模型对应的各案例预测值

from sklearn.model_selection import cross_val_predict pred = cross_val_predict(reg,X,y,cv=10) pred[:10]from sklearn.metrics import r2_score r2_score(y,pred)

sklearn实现决策树

class sklear.tree. Decision TreeClassifier(

* erqngn《kcntcopy:衡量节点拆分质量的指标,{gini','entropy'}

* splitter = best节点拆分时的策路 'best'代表最佳拆分,'random'为最佳随机拆分

* max_depth = None:树生长的最大深度(高度)

* min_samples_sp1it = 2:节点允许进一步分枝时的最低样本数

* min_samples_leaf = 1:叶节点的最低样本量

* min_weight_fraction_leaf = 0.0:有权重时叶节点的最低权重分值

* max_features = 'auto':int/loat/string/none,搜索分支时考虑的特征数('auto'/'sqrt'), #max_features = sqrt(n_features)#'log2', max_features=log2(n_features)#None, max features n features

* random_state=None

* max_leaf_nodes=none:最高叶节点数量

* min_impurity_decrease=0.0:分枝时需要的最低信息量下降量

* class_weight = none, presort-False)

#导入决策树分类模块 from sklearn.tree import DecisionTreeClassifier #导入iris数据集 from sklearn.datasets import load_iris iris = load_iris() #决策树实例化 ct = DecisionTreeClassifier() #模型训练 ct.fit(iris.data,iris.target)ct.max_features_

ct.feature_importances_

ct.predict(iris.data)from sklearn.metrics import classification_report print(classification_report(iris.target,ct.predict(iris.data)))

sklearn.tree.export_graphviz(

* decision_tree, out_file ="tree. dot"

* ax_depth = None, feature_names = None, class_names = none

* label = 'all':{'all','root','none'},是否显示杂质测量指标

* filled = False:是否对节点填色加强表示

* leaves_parallel = False:是否在树底部绘制所有叶节点

* impurity = True, node_ids =False

* proportion = False:是否给出节点样本占比而不是样本量

* rotate = False:是否从左至右绘图

* rounded = False:是否绘制圆角框而不是直角长方框

* special_characters = False:是否忽略兼容的特殊字符

* precision = 3)

from sklearn.tree import export_graphviz export_graphviz(ct,out_file = 'tree.dot',feature_names = iris.feature_names,class_names = iris.target_names,rounded = True,filled = True,special_characters = True)在graphviz中打开tree.dot文件可以看到生成的决策树