Installing Oracle 11g RAC on Red Hat 6.4 (Part 2)

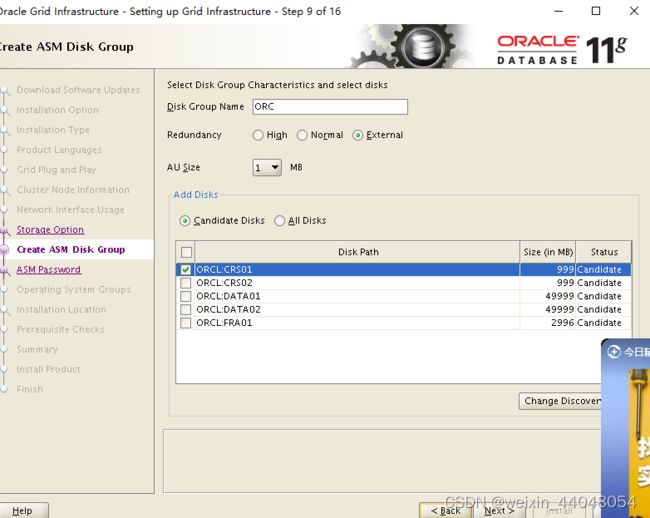

Create an ASM disk group, enter ORC as the Disk Group Name, select the three disks listed below, and click Next.

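If the ASM disk group will store the OCR and voting disks, the External, Normal, and High redundancy levels require 1, 3, and 5 failure groups respectively. In other words, creating a disk group at these redundancy levels takes at least 1, 3, and 5 ASM disks.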

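If the ASM disk group will not store the OCR or voting disks, the External, Normal, and High redundancy levels require at least 1, 2, and 3 failure groups respectively. In other words, creating a disk group at these redundancy levels takes at least 1, 2, and 3 ASM disks.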
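The two rules above can be captured in a small lookup. This is only a sketch: `min_asm_disks` is a hypothetical helper name, and the labels `ocr` and `data` are made up here to distinguish the two kinds of disk group; the numbers come straight from the paragraphs above.

```shell
# min_asm_disks PURPOSE REDUNDANCY
#   PURPOSE:    "ocr"  for a disk group that stores OCR / voting disks,
#               "data" for any other disk group (labels invented for this sketch)
#   REDUNDANCY: external | normal | high (case-insensitive)
# Prints the minimum number of ASM disks (failure groups) stated in the text.
min_asm_disks() {
    local purpose redundancy
    purpose=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    redundancy=$(printf '%s' "$2" | tr '[:upper:]' '[:lower:]')
    case "$purpose:$redundancy" in
        ocr:external|data:external) echo 1 ;;
        ocr:normal)                 echo 3 ;;
        ocr:high)                   echo 5 ;;
        data:normal)                echo 2 ;;
        data:high)                  echo 3 ;;
        *)
            echo "usage: min_asm_disks ocr|data external|normal|high" >&2
            return 1
            ;;
    esac
}

min_asm_disks ocr normal   # prints 3
```

For example, an OCR/voting disk group with Normal redundancy needs three disks, while a Normal-redundancy group for other data needs two.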

Set a password for this group.

The installer fills in the installation path for the cluster software for you, so just click Next. Note that ORACLE_HOME must not be a subdirectory of ORACLE_BASE here.
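A quick way to check that constraint before clicking Next is a plain string comparison of the two paths. The sketch below assumes the Grid home `/u01/app/11.2.0/grid` that appears in the script output later in this article; the ORACLE_BASE value `/u01/app/grid` is an assumption, since it is not shown here. `is_under_base` is a hypothetical helper, and it does not resolve symlinks.

```shell
# is_under_base HOME BASE
# Succeeds (exit 0) if HOME is the same directory as BASE or lies below it.
# Pure string comparison: symlinks are not resolved.
is_under_base() {
    local home base
    home=${1%/}    # drop one trailing slash, if any
    base=${2%/}
    case "$home/" in
        "$base"/*) return 0 ;;
        *)         return 1 ;;
    esac
}

GRID_HOME=/u01/app/11.2.0/grid   # path used in this article
GRID_BASE=/u01/app/grid          # assumed value, adjust to your environment

if is_under_base "$GRID_HOME" "$GRID_BASE"; then
    echo "conflict: $GRID_HOME is under $GRID_BASE"
else
    echo "ok: $GRID_HOME is not under $GRID_BASE"
fi
```

The trailing slash added before matching keeps a sibling directory such as `/u01/app/gridX` from being mistaken for a child of `/u01/app/grid`.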

Select the inventory directory for the grid installation.

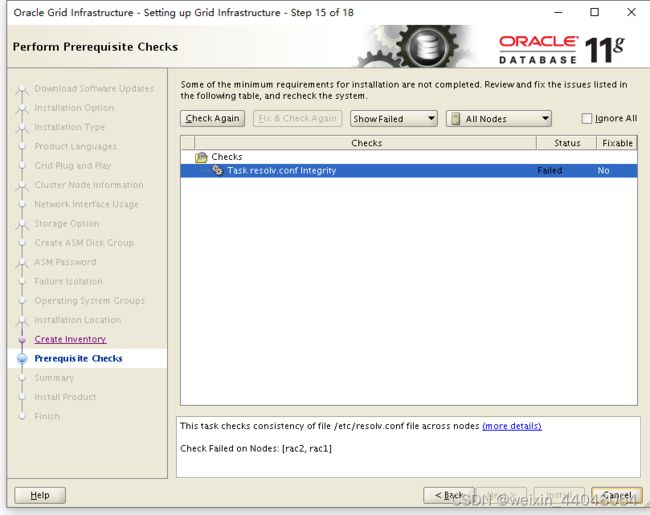

The prerequisite check reports a resolv.conf error. This happens because DNS is not configured, and it can be ignored.

Click Install to continue the installation.

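When the grid installation finishes, the installer prompts you to run the scripts orainstRoot.sh and root.sh, in that order, as root. (Be sure to finish running the scripts on rac1 before running them on any other node.)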
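To keep the order straight, it can help to print the full command sequence first. The sketch below is a dry run only: `print_root_script_plan` is a hypothetical helper, the script paths are the ones used in this article, and the `ssh root@...` form assumes root can log in to each node over SSH, which this article does not set up. Run each printed command by hand and wait for it to finish before starting the next one.

```shell
# print_root_script_plan NODE...
# Prints, in execution order, the root commands for each node:
# first orainstRoot.sh, then root.sh, node by node.
print_root_script_plan() {
    local node
    for node in "$@"; do
        echo "ssh root@$node /u01/app/oraInventory/orainstRoot.sh"
        echo "ssh root@$node /u01/app/11.2.0/grid/root.sh"
    done
}

# rac1 must be listed first so that its scripts complete before rac2 starts.
print_root_script_plan rac1 rac2
```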

/u01/app/oraInventory/orainstRoot.sh

Node 1

Last login: Tue Jun 20 00:01:19 2023 from 192.168.186.1

[root@rac1 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@rac1 ~]# /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

Adding Clusterware entries to upstart

CRS-2672: Attempting to start 'ora.mdnsd' on 'rac1'

CRS-2676: Start of 'ora.mdnsd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'rac1'

CRS-2676: Start of 'ora.gpnpd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac1'

CRS-2672: Attempting to start 'ora.gipcd' on 'rac1'

CRS-2676: Start of 'ora.cssdmonitor' on 'rac1' succeeded

CRS-2676: Start of 'ora.gipcd' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rac1'

CRS-2672: Attempting to start 'ora.diskmon' on 'rac1'

CRS-2676: Start of 'ora.diskmon' on 'rac1' succeeded

CRS-2676: Start of 'ora.cssd' on 'rac1' succeeded

ASM created and started successfully.

Disk Group ORC created successfully.

clscfg: -install mode specified

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

CRS-4256: Updating the profile

Successful addition of voting disk 4e637956089a4f36bf208b0aa3d15441.

Successfully replaced voting disk group with +ORC.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 4e637956089a4f36bf208b0aa3d15441 (ORCL:CRS01) [ORC]

Located 1 voting disk(s).

CRS-2672: Attempting to start 'ora.asm' on 'rac1'

CRS-2676: Start of 'ora.asm' on 'rac1' succeeded

CRS-2672: Attempting to start 'ora.ORC.dg' on 'rac1'

CRS-2676: Start of 'ora.ORC.dg' on 'rac1' succeeded

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@rac1 ~]#

[root@rac1 ~]#

Node 2


[root@rac2 software]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@rac2 software]# /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

Adding Clusterware entries to upstart

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node rac1, number 1, and is terminating

An active cluster was found during exclusive startup, restarting to join the cluster

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@rac2 software]#

Return to the graphical installer.

This error can be ignored; it does not affect normal use. Click OK, then Next.

Click Yes.

The installation is complete.

At this point the Oracle RAC deployment is complete!

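Verification: check the IP addresses on a node. Because of how Oracle RAC works, every IP that the hosts file maps to this node is present, and once the cluster has started successfully the VIP and SCAN IP appear on the network interface automatically.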

[root@rac1 ~]# ifconfig

eth1 Link encap:Ethernet HWaddr 00:0C:29:51:B8:59

inet addr:192.168.186.128 Bcast:192.168.186.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe51:b859/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:2897409 errors:0 dropped:0 overruns:0 frame:0

TX packets:1211909 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:3969304024 (3.6 GiB) TX bytes:117982027 (112.5 MiB)

eth1:1 Link encap:Ethernet HWaddr 00:0C:29:51:B8:59

inet addr:169.254.211.232 Bcast:169.254.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

eth2 Link encap:Ethernet HWaddr 00:0C:29:51:B8:63

inet addr:192.168.182.145 Bcast:192.168.182.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe51:b863/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1704057 errors:0 dropped:0 overruns:0 frame:0

TX packets:3640272 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:152470031 (145.4 MiB) TX bytes:4639969576 (4.3 GiB)

eth2:1 Link encap:Ethernet HWaddr 00:0C:29:51:B8:63

inet addr:192.168.182.101 Bcast:192.168.182.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

eth2:2 Link encap:Ethernet HWaddr 00:0C:29:51:B8:63

inet addr:192.168.182.120 Bcast:192.168.182.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:32558 errors:0 dropped:0 overruns:0 frame:0

TX packets:32558 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:24282862 (23.1 MiB) TX bytes:24282862 (23.1 MiB)

[root@rac1 ~]#
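The ifconfig output above is long, and only the interface names and IPv4 addresses matter for this check. A minimal sketch for condensing it follows; `list_ipv4` is a hypothetical helper, and it assumes the classic net-tools `ifconfig` format shown above, where interface names start in the first column and address lines are indented.

```shell
# list_ipv4
# Reads `ifconfig` output on stdin and prints one "interface address" pair
# per IPv4 address. IPv6 ("inet6 addr:") lines are skipped.
list_ipv4() {
    awk '
        /^[^ \t]/ { iface = $1 }        # unindented line: start of an interface block
        /inet addr:/ {
            for (i = 1; i <= NF; i++)
                if ($i ~ /^addr:/) {
                    sub(/^addr:/, "", $i)
                    print iface, $i
                }
        }
    '
}

# Usage on a cluster node:
#   ifconfig | list_ipv4
```

On rac1 this would be expected to list the alias interfaces (`eth2:1`, `eth2:2`) alongside the base interfaces, which makes the addresses added by the clusterware easy to compare against the hosts file.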

Resource checks after installing grid

[grid@rac1 grid]$ crs_stat -t -v

Name Type R/RA F/FT Target State Host

----------------------------------------------------------------------

ora....ER.lsnr ora....er.type 0/5 0/ ONLINE ONLINE rac1

ora....N1.lsnr ora....er.type 0/5 0/0 ONLINE ONLINE rac1

ora.ORC.dg ora....up.type 0/5 0/ ONLINE ONLINE rac1

ora.asm ora.asm.type 0/5 0/ ONLINE ONLINE rac1

ora.cvu ora.cvu.type 0/5 0/0 ONLINE ONLINE rac1

ora.gsd ora.gsd.type 0/5 0/ OFFLINE OFFLINE

ora....network ora....rk.type 0/5 0/ ONLINE ONLINE rac1

ora.oc4j ora.oc4j.type 0/1 0/2 ONLINE ONLINE rac1

ora.ons ora.ons.type 0/3 0/ ONLINE ONLINE rac1

ora....SM1.asm application 0/5 0/0 ONLINE ONLINE rac1

ora....C1.lsnr application 0/5 0/0 ONLINE ONLINE rac1

ora.rac1.gsd application 0/5 0/0 OFFLINE OFFLINE

ora.rac1.ons application 0/3 0/0 ONLINE ONLINE rac1

ora.rac1.vip ora....t1.type 0/0 0/0 ONLINE ONLINE rac1

ora....SM2.asm application 0/5 0/0 ONLINE ONLINE rac2

ora....C2.lsnr application 0/5 0/0 ONLINE ONLINE rac2

ora.rac2.gsd application 0/5 0/0 OFFLINE OFFLINE

ora.rac2.ons application 0/3 0/0 ONLINE ONLINE rac2

ora.rac2.vip ora....t1.type 0/0 0/0 ONLINE ONLINE rac2

ora....ry.acfs ora....fs.type 0/5 0/ ONLINE ONLINE rac1

ora.scan1.vip ora....ip.type 0/0 0/0 ONLINE ONLINE rac1

[grid@rac1 grid]$ olsnodes -n

rac1 1

rac2 2


[grid@rac1 grid]$ ps -ef|grep lsnr|grep -v 'grep'|grep -v 'ocfs'|awk '{print$9}'

LISTENER_SCAN1

LISTENER

[grid@rac1 grid]$ srvctl status asm -a

ASM is running on rac2,rac1

ASM is enabled.

[grid@rac1 grid]$

Check the CRS status

[root@rac2 software]# su - grid

[grid@rac2 ~]$ crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online
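If this check needs to be repeated, the output can be tested mechanically instead of read by eye. The sketch below assumes the message format shown above, where each healthy service line ends with "is online"; `crs_all_online` is a hypothetical helper, not an Oracle tool.

```shell
# crs_all_online
# Reads `crsctl check crs` output on stdin. Succeeds (exit 0) only if at
# least one CRS-nnnn line is present and every such line ends in "is online".
crs_all_online() {
    awk '
        /^CRS-[0-9]+:/ {
            seen++
            if ($0 !~ /is online[ \t]*$/) bad++
        }
        END { exit !(seen > 0 && bad == 0) }
    '
}

# Usage as the grid user on a cluster node:
#   crsctl check crs | crs_all_online && echo "CRS stack is online"
```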

Check the Clusterware resources

[grid@rac2 ~]$ crs_stat -t -v

Name Type R/RA F/FT Target State Host

----------------------------------------------------------------------

ora....ER.lsnr ora....er.type 0/5 0/ ONLINE ONLINE rac1

ora....N1.lsnr ora....er.type 0/5 0/0 ONLINE ONLINE rac1

ora.ORC.dg ora....up.type 0/5 0/ ONLINE ONLINE rac1

ora.asm ora.asm.type 0/5 0/ ONLINE ONLINE rac1

ora.cvu ora.cvu.type 0/5 0/0 ONLINE ONLINE rac1

ora.gsd ora.gsd.type 0/5 0/ OFFLINE OFFLINE

ora....network ora....rk.type 0/5 0/ ONLINE ONLINE rac1

ora.oc4j ora.oc4j.type 0/1 0/2 ONLINE ONLINE rac1

ora.ons ora.ons.type 0/3 0/ ONLINE ONLINE rac1

ora....SM1.asm application 0/5 0/0 ONLINE ONLINE rac1

ora....C1.lsnr application 0/5 0/0 ONLINE ONLINE rac1

ora.rac1.gsd application 0/5 0/0 OFFLINE OFFLINE

ora.rac1.ons application 0/3 0/0 ONLINE ONLINE rac1

ora.rac1.vip ora....t1.type 0/0 0/0 ONLINE ONLINE rac1

ora....SM2.asm application 0/5 0/0 ONLINE ONLINE rac2

ora....C2.lsnr application 0/5 0/0 ONLINE ONLINE rac2

ora.rac2.gsd application 0/5 0/0 OFFLINE OFFLINE

ora.rac2.ons application 0/3 0/0 ONLINE ONLINE rac2

ora.rac2.vip ora....t1.type 0/0 0/0 ONLINE ONLINE rac2

ora....ry.acfs ora....fs.type 0/5 0/ ONLINE ONLINE rac1

ora.scan1.vip ora....ip.type 0/0 0/0 ONLINE ONLINE rac1
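In the listing above, the only OFFLINE entries are the three gsd resources; as far as I know these are offline by default in 11g Release 2 and are only needed for Oracle 9i databases. To pick out offline resources without scanning the table by eye, a filter along these lines may help. `offline_resources` is a hypothetical helper, and it assumes the column layout shown above, with State as the sixth field.

```shell
# offline_resources
# Reads `crs_stat -t -v` output on stdin and prints the name of every
# resource whose State column (field 6) is OFFLINE.
offline_resources() {
    awk '$1 ~ /^ora\./ && $6 == "OFFLINE" { print $1 }'
}

# Usage as the grid user on a cluster node:
#   crs_stat -t -v | offline_resources
```

Note that `crs_stat` is deprecated in 11g Release 2 in favor of `crsctl status resource -t`, whose output is laid out differently, so this filter would need adjusting for it.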

Check the cluster nodes

[grid@rac2 ~]$ olsnodes -n

rac1 1

rac2 2

Check the Oracle TNS listener processes on both nodes

[grid@rac2 ~]$ ps -ef|grep lsnr|grep -v 'grep'|grep -v 'ocfs'|awk '{print$9}'

LISTENER

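Confirm that Oracle ASM is working for the Oracle Clusterware files:

If the OCR and voting disk files were installed on Oracle ASM, run the following command as the Grid Infrastructure installation owner to confirm that the installed Oracle ASM is currently running: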

[grid@rac2 ~]$ srvctl status asm -a

ASM is running on rac2,rac1

ASM is enabled.
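The same check can be scripted per node. This sketch assumes the "ASM is running on node,node" line format shown above; `asm_running_on` is a hypothetical helper, and the node names rac1 and rac2 are the ones used in this article.

```shell
# asm_running_on NODE
# Reads `srvctl status asm -a` output on stdin and succeeds (exit 0) if NODE
# appears in the comma-separated list on the "ASM is running on ..." line.
asm_running_on() {
    awk -v node="$1" '
        /^ASM is running on / {
            n = split($5, nodes, ",")
            for (i = 1; i <= n; i++)
                if (nodes[i] == node) found = 1
        }
        END { exit !found }
    '
}

# Usage as the grid user (capture the output once, then test each node):
#   status=$(srvctl status asm -a)
#   for n in rac1 rac2; do
#       printf '%s\n' "$status" | asm_running_on "$n" || echo "ASM not running on $n"
#   done
```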

[grid@rac2 ~]$