RFB(Receptive Field Block)

ECCV2018:Receptive Field Block Net for Accurate and Fast Object Detection一文中提出了一种新的特征提取模块——RFB,该文的出发点是模拟人类视觉的感受野从而加强网络的特征提取能力,在结构上RFB借鉴了Inception的思想,主要是在Inception的基础上加入了空洞卷积,从而有效增大了感受野

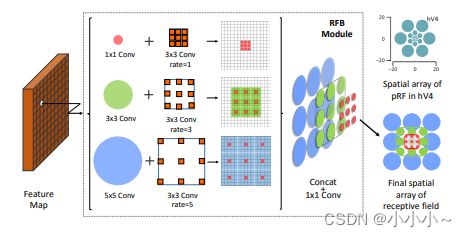

RFB的效果示意图如所示,其中中间虚线框部分就是RFB结构。RFB结构主要有两个特点:

1、不同尺寸卷积核的卷积层构成的多分枝结构,这部分可以参考Inception结构。在Figure2的RFB结构中也用不同大小的圆形表示不同尺寸卷积核的卷积层。

2、引入了dilated卷积层,dilated卷积层之前应用在分割算法Deeplab中,主要作用也是增加感受野,和deformable卷积有异曲同工之处。

在RFB结构中用不同rate表示dilated卷积层的参数。结构中最后会将不同尺寸和rate的卷积层输出进行concat,达到融合不同特征的目的。结构中用3种不同大小和颜色的输出叠加来展示。最后一列中将融合后的特征与人类视觉感受野做对比,从图可以看出是非常接近的,这也是这篇文章的出发点,换句话说就是模拟人类视觉的感受野进行RFB结构的设计。

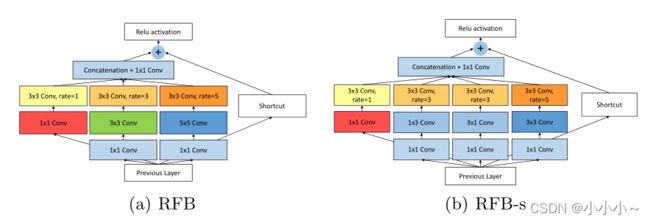

如下图是两种RFB结构示意图。(a)是RFB,整体结构上借鉴了Inception的思想,主要不同点在于引入3个dilated卷积层(比如3×3conv, rate=1),这也是这篇文章增大感受野的主要方式之一。(b)是RFB-s。RFB-s和RFB相比主要有两个改进,一方面用3×3卷积层代替5×5卷积层,另一方面用1×3和3×1卷积层代替3×3卷积层,主要目的应该是为了减少计算量,类似Inception后期版本对Inception结构的改进。

RFB代码实现

class BasicConv(nn.Module):

def __init__(self, in_planes, out_planes, kernel_size, stride=1, padding=0, dilation=1, groups=1, relu=True, bn=True, bias=False):

super(BasicConv, self).__init__()

self.out_channels = out_planes

self.conv = nn.Conv2d(in_planes, out_planes, kernel_size=kernel_size, stride=stride, padding=padding, dilation=dilation, groups=groups, bias=bias)

self.bn = nn.BatchNorm2d(out_planes,eps=1e-5, momentum=0.01, affine=True) if bn else None

self.relu = nn.ReLU(inplace=True) if relu else None

def forward(self, x):

x = self.conv(x)

if self.bn is not None:

x = self.bn(x)

if self.relu is not None:

x = self.relu(x)

return x

class BasicRFB(nn.Module):

def __init__(self, in_planes, out_planes, stride=1, scale = 0.1, visual = 1):

super(BasicRFB, self).__init__()

self.scale = scale

self.out_channels = out_planes

inter_planes = in_planes // 8

self.branch0 = nn.Sequential(

BasicConv(in_planes, 2*inter_planes, kernel_size=1, stride=stride),

BasicConv(2*inter_planes, 2*inter_planes, kernel_size=3, stride=1, padding=visual, dilation=visual, relu=False)

)

self.branch1 = nn.Sequential(

BasicConv(in_planes, inter_planes, kernel_size=1, stride=1),

BasicConv(inter_planes, 2*inter_planes, kernel_size=(3,3), stride=stride, padding=(1,1)),

BasicConv(2*inter_planes, 2*inter_planes, kernel_size=3, stride=1, padding=visual+1, dilation=visual+1, relu=False)

)

self.branch2 = nn.Sequential(

BasicConv(in_planes, inter_planes, kernel_size=1, stride=1),

BasicConv(inter_planes, (inter_planes//2)*3, kernel_size=3, stride=1, padding=1),

BasicConv((inter_planes//2)*3, 2*inter_planes, kernel_size=3, stride=stride, padding=1),

BasicConv(2*inter_planes, 2*inter_planes, kernel_size=3, stride=1, padding=2*visual+1, dilation=2*visual+1, relu=False)

)

self.ConvLinear = BasicConv(6*inter_planes, out_planes, kernel_size=1, stride=1, relu=False)

self.shortcut = BasicConv(in_planes, out_planes, kernel_size=1, stride=stride, relu=False)

self.relu = nn.ReLU(inplace=False)

def forward(self,x):

x0 = self.branch0(x)

x1 = self.branch1(x)

x2 = self.branch2(x)

out = torch.cat((x0,x1,x2),1)

out = self.ConvLinear(out)

short = self.shortcut(x)

out = out*self.scale + short

out = self.relu(out)

return out

class BasicRFB_a(nn.Module):

def __init__(self, in_planes, out_planes, stride=1, scale = 0.1):

super(BasicRFB_a, self).__init__()

self.scale = scale

self.out_channels = out_planes

inter_planes = in_planes //4

self.branch0 = nn.Sequential(

BasicConv(in_planes, inter_planes, kernel_size=1, stride=1),

BasicConv(inter_planes, inter_planes, kernel_size=3, stride=1, padding=1,relu=False)

)

self.branch1 = nn.Sequential(

BasicConv(in_planes, inter_planes, kernel_size=1, stride=1),

BasicConv(inter_planes, inter_planes, kernel_size=(3,1), stride=1, padding=(1,0)),

BasicConv(inter_planes, inter_planes, kernel_size=3, stride=1, padding=3, dilation=3, relu=False)

)

self.branch2 = nn.Sequential(

BasicConv(in_planes, inter_planes, kernel_size=1, stride=1),

BasicConv(inter_planes, inter_planes, kernel_size=(1,3), stride=stride, padding=(0,1)),

BasicConv(inter_planes, inter_planes, kernel_size=3, stride=1, padding=3, dilation=3, relu=False)

)

self.branch3 = nn.Sequential(

BasicConv(in_planes, inter_planes//2, kernel_size=1, stride=1),

BasicConv(inter_planes//2, (inter_planes//4)*3, kernel_size=(1,3), stride=1, padding=(0,1)),

BasicConv((inter_planes//4)*3, inter_planes, kernel_size=(3,1), stride=stride, padding=(1,0)),

BasicConv(inter_planes, inter_planes, kernel_size=3, stride=1, padding=5, dilation=5, relu=False)

)

self.ConvLinear = BasicConv(4*inter_planes, out_planes, kernel_size=1, stride=1, relu=False)

self.shortcut = BasicConv(in_planes, out_planes, kernel_size=1, stride=stride, relu=False)

self.relu = nn.ReLU(inplace=False)

def forward(self,x):

x0 = self.branch0(x)

x1 = self.branch1(x)

x2 = self.branch2(x)

x3 = self.branch3(x)

out = torch.cat((x0,x1,x2,x3),1)

out = self.ConvLinear(out)

short = self.shortcut(x)

out = out*self.scale + short

out = self.relu(out)

return out