Hugging Face PEFT Fine-Tuning in Practice, with Code

Fine-Tuning Large Models with PEFT

- Hugging Face PEFT Fine-Tuning in Practice, with Code

- Using the Hugging Face PEFT Library

- Quick Start with PEFT

- LoRA in Detail

- Practical Application: a Kaggle Project

- Summary

- Other Use Cases

- DreamBooth fine-tuning with LoRA

- P-tuning for sequence classification

- Afterword

Using the Hugging Face PEFT Library

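Hugging Face PEFT blog post: link.

I wrote this post after watching an episode of the Bilibili series 《李沐带你读论文》 ("Reading Papers with Mu Li"), namely 《大模型时代下做科研的四个思路【论文精读·52】》 ("Four research directions in the era of large models"): link. Hugging Face had just released the PEFT library, so I decided to write up some notes.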

Quick Start with PEFT

1. Consider fine-tuning bigscience/mt0-large (model card: link) with LoRA.

Note: a leading plus sign in the code marks a line that was added for PEFT.

from transformers import AutoModelForSeq2SeqLM

+ from peft import get_peft_model, LoraConfig, TaskType

model_name_or_path = "bigscience/mt0-large"

tokenizer_name_or_path = "bigscience/mt0-large"

2. Create the configuration for the PEFT method.

peft_config = LoraConfig(
    task_type=TaskType.SEQ_2_SEQ_LM, inference_mode=False, r=8, lora_alpha=32, lora_dropout=0.1
)

3. Wrap the base transformer model by calling get_peft_model.

model = AutoModelForSeq2SeqLM.from_pretrained(model_name_or_path)

+ model = get_peft_model(model, peft_config)

+ model.print_trainable_parameters()

# output: trainable params: 2359296 || all params: 1231940608 || trainable%: 0.19151053100118282
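
The trainable-parameter count in that output can be reproduced by hand. The sketch below rests on assumptions that are not stated in this post: that mt0-large follows the mT5-large layout (24 encoder and 24 decoder blocks, hidden size 1024, square 1024 x 1024 attention projections), and that the default LoRA targets for this architecture are the query and value projections of every attention module. Under those assumptions each adapted projection gains two small matrices, A (r x d) and B (d x r).

```python
# Assumed architecture values for bigscience/mt0-large (mT5-large layout);
# they are not given in the post.
D_MODEL = 1024              # hidden size; q/v projections assumed 1024 x 1024
ENCODER_LAYERS = 24         # one self-attention module per encoder block
DECODER_LAYERS = 24         # self-attention plus cross-attention per decoder block
TARGETS_PER_ATTENTION = 2   # assumed default LoRA targets: query and value

R = 8                       # LoRA rank from the LoraConfig above
ALL_PARAMS = 1_231_940_608  # "all params" reported by print_trainable_parameters()


def lora_params_per_projection(d_in: int, d_out: int, r: int) -> int:
    """A is (r x d_in) and B is (d_out x r), so LoRA adds r * (d_in + d_out) weights."""
    return r * (d_in + d_out)


attention_modules = ENCODER_LAYERS + 2 * DECODER_LAYERS
adapted_projections = attention_modules * TARGETS_PER_ATTENTION
trainable = adapted_projections * lora_params_per_projection(D_MODEL, D_MODEL, R)

print(adapted_projections)                        # 144
print(trainable)                                  # 2359296
print(round(100 * trainable / ALL_PARAMS, 4))     # 0.1915
```

If these assumptions hold, the 2359296 trainable parameters are exactly the LoRA matrices, and everything else in the model stays frozen.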

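That's it! The rest of the training loop stays the same. See the example notebook peft_lora_seq2seq.ipynb for an end-to-end example.

4. When you are ready to save the model for inference, just do the following.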

model.save_pretrained("output_dir")

# model.push_to_hub("my_awesome_peft_model") also works

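This saves only the incremental PEFT weights that were trained. For example, you can find bigscience/T0_3B tuned with LoRA on the twitter_complaints subset of the RAFT dataset here: smangrul/twitter_complaints_bigscience_T0_3B_LORA_SEQ_2_SEQ_LM. Note that it contains only 2 files, adapter_config.json and adapter_model.bin, and the latter is just 19MB.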

5. To load it for inference, follow the snippet below:

import torch
from transformers import AutoModelForSeq2SeqLM, AutoTokenizer
+ from peft import PeftModel, PeftConfig

peft_model_id = "smangrul/twitter_complaints_bigscience_T0_3B_LORA_SEQ_2_SEQ_LM"
config = PeftConfig.from_pretrained(peft_model_id)
model = AutoModelForSeq2SeqLM.from_pretrained(config.base_model_name_or_path)
+ model = PeftModel.from_pretrained(model, peft_model_id)
tokenizer = AutoTokenizer.from_pretrained(config.base_model_name_or_path)

# Use the GPU when one is available, otherwise fall back to the CPU
device = "cuda" if torch.cuda.is_available() else "cpu"
model = model.to(device)
model.eval()
inputs = tokenizer("Tweet text : @HondaCustSvc Your customer service has been horrible during the recall process. I will never purchase a Honda again. Label :", return_tensors="pt")

with torch.no_grad():
    outputs = model.generate(input_ids=inputs["input_ids"].to(device), max_new_tokens=10)
    print(tokenizer.batch_decode(outputs.detach().cpu().numpy(), skip_special_tokens=True)[0])
# 'complaint'

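LoRA in Detail

There are already many good explanations and analyses of LoRA online. Here are a few articles worth reading.

Article links:

Paper reading: LoRA, Low-Rank Adaptation of Large Language Models (论文阅读:LORA-大型语言模型的低秩适应)

Parameter-efficient fine-tuning methods: LoRA (参数高效微调方法:LoRA)

52. LoRA: an efficient way to speed up fine-tuning of large models (52. LoRA: 大模型微调的高效加速方案)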

Practical Application: a Kaggle Project

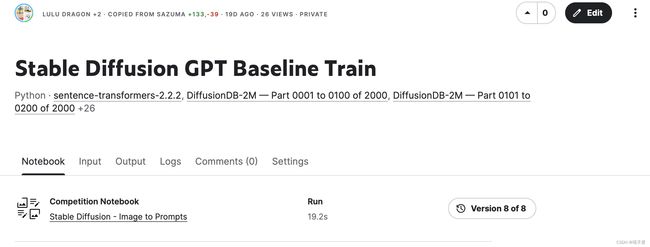

This is the Kaggle competition "Stable Diffusion - Image to Prompts": link.

About the task: given images generated by Stable Diffusion, predict the prompts that produced them. Put simply, it is image-to-text generation. The generated text is scored against the ground truth with the mean cosine similarity score.

Participants have to prepare their own training data, so many datasets generated with GPT and Stable Diffusion have appeared on the Kaggle platform.

My Kaggle notebook

Notebook link: link. That is a public notebook; mine is a modified version of it, with the model swapped for vit-gpt2.

1. First, import the libraries we need.

import os

import random

import numpy as np

import pandas as pd

from PIL import Image

from tqdm.notebook import tqdm

from scipy import spatial

from sklearn.model_selection import train_test_split

import torch

from torch import nn

from torch.utils.data import Dataset, DataLoader

from torch.optim.lr_scheduler import CosineAnnealingLR

from torchvision import transforms

import timm

from timm.utils import AverageMeter

import sys

sys.path.append('../input/sentence-transformers-222/sentence-transformers')

from sentence_transformers import SentenceTransformer

import warnings

import torch

from PIL import Image

import requests

from transformers import VisionEncoderDecoderModel, ViTImageProcessor, AutoTokenizer

import glob

import matplotlib.pyplot as plt

warnings.filterwarnings('ignore')

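Some of these libraries may never be used and some imports are duplicated; we can ignore that for now.

2. Install the peft wheel. Kaggle requires that submitted notebooks run without internet access, so I looked up the wheel on https://pypi.org/ and installed it from a local file. peft wheel: link.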

!pip install /kaggle/input/peft-whl/peft-0.2.0-py3-none-any.whl

3. Import the tools we need from the peft package.

from peft import get_peft_model, LoraConfig, TaskType

4. Set the LoRA parameters.

peft_config = LoraConfig(
    r=3,
    lora_alpha=3,
    target_modules=["query", "value"],
    lora_dropout=0.1,
    bias="none",
    #modules_to_save=["classifier"],
)
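
To make these settings concrete, here is a minimal pure-Python sketch of the idea behind a LoRA layer. It is not PEFT's implementation: the class `LoRALinearSketch` and the helper `matvec` are names invented for this illustration, and the 16 x 16 size is arbitrary. The pretrained weight `W` stays frozen, two small matrices `A` (r x d_in) and `B` (d_out x r) are trained instead, and their product is scaled by `lora_alpha / r`, so `r=3, lora_alpha=3` gives a scale of 1. `target_modules` then selects which layers (here those named `query` and `value`) receive such an update.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class LoRALinearSketch:
    """Frozen weight W plus a trainable low-rank update scaled by lora_alpha / r."""

    def __init__(self, d_in, d_out, r, lora_alpha, seed=0):
        rng = random.Random(seed)
        # Stand-in for a frozen pretrained weight (random values, illustration only).
        self.W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
        # A starts random and B starts at zero, so the update is zero before training.
        self.A = [[rng.gauss(0, 0.02) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scaling = lora_alpha / r

    def forward(self, x):
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scaling * u for b, u in zip(base, update)]

    def trainable_params(self):
        # Only A (r x d_in) and B (d_out x r) would be trained; W stays frozen.
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


layer = LoRALinearSketch(d_in=16, d_out=16, r=3, lora_alpha=3)
x = [1.0] * 16
print(layer.scaling)                           # 1.0
print(layer.forward(x) == matvec(layer.W, x))  # True: B is still zero
print(layer.trainable_params(), 16 * 16)       # 96 256
```

The gap between the two counts grows with layer size, because the LoRA matrices scale with r * (d_in + d_out) while the full weight scales with d_in * d_out.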

5. Define the training configuration.

class CFG:
    model_name = 'vit-gpt2-image-captioning'
    #input_size = 224
    batch_size = 150
    num_epochs = 3
    lr = 1e-4
    seed = 42
    max_length = 512
    num_beams = 5  # beam width: number of candidate sequences the model keeps while generating
    gen_kwargs = {"max_length": max_length, "num_beams": num_beams}
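
As a rough illustration of what `num_beams` controls, the toy sketch below runs beam search over a tiny hand-written "model". Everything in it is hypothetical: `TOY_MODEL`, its probabilities, and the `beam_search` helper are made up for this example and have nothing to do with how transformers implements generation internally. With one beam the search is greedy; with two beams it can recover a sequence whose first token looked worse at first.

```python
import math

# Made-up next-token probabilities, keyed by the tokens generated so far.
TOY_MODEL = {
    (): {"a": 0.6, "b": 0.4},
    ("a",): {"x": 0.55, "y": 0.45},
    ("b",): {"x": 0.9, "y": 0.1},
}


def beam_search(model, steps, num_beams):
    """Return the surviving (tokens, log_prob) pairs, best first."""
    beams = [((), 0.0)]
    for _ in range(steps):
        candidates = [
            (tokens + (tok,), score + math.log(p))
            for tokens, score in beams
            for tok, p in model[tokens].items()
        ]
        # Keep only the num_beams highest-scoring partial sequences.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:num_beams]
    return beams


greedy_tokens, greedy_score = beam_search(TOY_MODEL, steps=2, num_beams=1)[0]
beam_tokens, beam_score = beam_search(TOY_MODEL, steps=2, num_beams=2)[0]
print(greedy_tokens, round(math.exp(greedy_score), 2))  # ('a', 'x') 0.33
print(beam_tokens, round(math.exp(beam_score), 2))      # ('b', 'x') 0.36
```

A wider beam explores more candidates per step, which costs more compute for each generated caption.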

6. Fix the random seeds.

def seed_everything(seed):
    os.environ['PYTHONHASHSEED'] = str(seed)
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)
    if torch.cuda.is_available():
        torch.cuda.manual_seed(seed)
        torch.backends.cudnn.deterministic = True

seed_everything(CFG.seed)

7. Load the model. We use the vit-gpt2 model, which also comes from Hugging Face.

model = VisionEncoderDecoderModel.from_pretrained("nlpconnect/vit-gpt2-image-captioning")

feature_extractor = ViTImageProcessor.from_pretrained("nlpconnect/vit-gpt2-image-captioning")

tokenizer = AutoTokenizer.from_pretrained("nlpconnect/vit-gpt2-image-captioning")

8. Wrap the model with PEFT.

model = get_peft_model(model, peft_config)

model.print_trainable_parameters()

After wrapping the model with PEFT, the trainable parameters clearly drop to 4% of the total. With fewer parameters to train, results arrive sooner, and GPU run time and usage drop considerably.

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

9. Build the Dataset.

The dataset is fairly large, so I will simply point you to the notebook: link.

class DiffusionDataset(Dataset):
    def __init__(self, df):
        self.df = df
        # Sentence encoder that turns each prompt into its target embedding
        self.st_model = SentenceTransformer(
            '/kaggle/input/sentence-transformers-222/all-MiniLM-L6-v2',
            device='cpu'
        )

    def __len__(self):
        return len(self.df)

    def __getitem__(self, idx):
        row = self.df.iloc[idx]
        image = Image.open(row['filepath'])
        # The image processor returns a batch of one image; drop the batch dimension
        pixel_values = feature_extractor(images=[image], return_tensors="pt").pixel_values
        pixel_values = pixel_values.squeeze(0)
        prompt = row['prompt']
        prompt_embeddings = self.st_model.encode(
            prompt,
            show_progress_bar=False,
            convert_to_tensor=True
        )
        return pixel_values, prompt_embeddings

# Not used below: the collate_fn arguments are commented out in get_dataloaders
class DiffusionCollator:
    def __init__(self):
        self.st_model = SentenceTransformer(
            '/kaggle/input/sentence-transformers-222/all-MiniLM-L6-v2',
            device='cpu'
        )

    def __call__(self, batch):
        pixel_values, prompts = zip(*batch)
        #pixel_values = torch.stack(pixel_values, dim=1)
        prompt_embeddings = self.st_model.encode(
            prompts,
            show_progress_bar=False,
            convert_to_tensor=True
        )
        return pixel_values, prompt_embeddings

def get_dataloaders(
    trn_df,
    val_df,
    #input_size,
    batch_size
):
    #transform = transforms.Compose([
    #    transforms.Resize(input_size),
    #    transforms.ToTensor(),
    #    transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),
    #])
    trn_dataset = DiffusionDataset(trn_df)
    val_dataset = DiffusionDataset(val_df)
    #collator = DiffusionCollator()

    dataloaders = {}
    dataloaders['train'] = DataLoader(
        dataset=trn_dataset,
        shuffle=True,
        batch_size=batch_size,
        pin_memory=True,
        num_workers=2,
        drop_last=True,
        #collate_fn=collator
    )
    dataloaders['val'] = DataLoader(
        dataset=val_dataset,
        shuffle=False,
        batch_size=batch_size,
        pin_memory=True,
        num_workers=2,
        drop_last=False,
        #collate_fn=collator
    )
    return dataloaders

10. Load the sentence-transformers embedding model, which maps text to a 384-dimensional embedding. Later, the generated text is embedded in the same way and compared with the ground-truth embedding, using mean cosine similarity as the score.

from pathlib import Path

sys.path.append('../input/sentence-transformers-222/sentence-transformers')

from sentence_transformers import SentenceTransformer, models

comp_path = Path('../input/stable-diffusion-image-to-prompts/')

st_model = SentenceTransformer('/kaggle/input/sentence-transformers-222/all-MiniLM-L6-v2')

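11. The cosine similarity function.

def cosine_similarity(y_trues, y_preds):
    # Mean over all pairs; scipy returns the cosine distance, so 1 - distance is the similarity
    return np.mean([
        1 - spatial.distance.cosine(y_true, y_pred)
        for y_true, y_pred in zip(y_trues, y_preds)
    ])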
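
For intuition about the metric, here is a small worked example using only the standard library. The toy 2-dimensional vectors are invented for illustration (the real embeddings have 384 dimensions), and `cosine` and `mean_cosine_similarity` are stand-in names rather than the notebook's functions.

```python
import math


def cosine(u, v):
    """Cosine similarity of two vectors: dot(u, v) / (|u| * |v|)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def mean_cosine_similarity(y_trues, y_preds):
    """Average the pairwise cosine similarity over all (truth, prediction) pairs."""
    scores = [cosine(t, p) for t, p in zip(y_trues, y_preds)]
    return sum(scores) / len(scores)


y_trues = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
y_preds = [[3.0, 0.0], [2.0, 0.0], [1.0, 0.0]]

# Pairwise scores: same direction -> 1.0, orthogonal -> 0.0, 45 degrees -> about 0.7071
score = mean_cosine_similarity(y_trues, y_preds)
print(round(score, 4))  # 0.569
```

Only the direction of each vector matters, not its length, which is why [1, 0] and [3, 0] count as a perfect match.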

12. The training function.

def train(
    trn_df,
    val_df,
    model_name,
    #input_size,
    batch_size,
    num_epochs,
    lr,
    gen_kwargs,
):
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    dataloaders = get_dataloaders(
        trn_df,
        val_df,
        #input_size,
        batch_size
    )
    #model = model
    #model.set_grad_checkpointing()
    model.to(device)
    optimizer = torch.optim.AdamW(model.parameters(), lr=lr)
    ttl_iters = num_epochs * len(dataloaders['train'])
    scheduler = CosineAnnealingLR(optimizer, T_max=ttl_iters, eta_min=1e-6)
    criterion = nn.CosineEmbeddingLoss()
    best_score = -1.0

    for epoch in range(num_epochs):
        train_meters = {
            'loss': AverageMeter(),
            'cos': AverageMeter(),
        }
        model.train()
        for X, y in tqdm(dataloaders['train'], leave=False):
            X, y = X.to(device), y.to(device)
            optimizer.zero_grad()
            # Generate captions, decode them to text, then embed the text
            X_out = model.generate(X, **{"max_length": 30, "num_beams": 6})
            preds = tokenizer.batch_decode(X_out, skip_special_tokens=True)
            embeddings = st_model.encode(preds, convert_to_tensor=True).to(device)
            #y = st_model.encode(y).to(device)
            #preds = [pred.strip() for pred in preds]
            target = torch.ones(X.size(0)).to(device)
            # NOTE: generate() returns token ids and the decode/encode round trip goes
            # through text, so this loss carries no gradient back to the LoRA weights;
            # requires_grad_(True) only keeps backward() from raising an error.
            loss = criterion(embeddings, y, target).requires_grad_(True)
            loss.backward()
            optimizer.step()
            scheduler.step()
            trn_loss = loss.item()
            trn_cos = cosine_similarity(
                embeddings.detach().cpu().numpy(),
                y.detach().cpu().numpy()
            )
            train_meters['loss'].update(trn_loss, n=X.size(0))
            train_meters['cos'].update(trn_cos, n=X.size(0))
        print('Epoch {:d} / trn/loss={:.4f}, trn/cos={:.4f}'.format(
            epoch + 1,
            train_meters['loss'].avg,
            train_meters['cos'].avg))

        val_meters = {
            'loss': AverageMeter(),
            'cos': AverageMeter(),
        }
        model.eval()
        for X, y in tqdm(dataloaders['val'], leave=False):
            X, y = X.to(device), y.to(device)
            with torch.no_grad():
                X_out = model.generate(X, **{"max_length": 30, "num_beams": 6})
                preds = tokenizer.batch_decode(X_out, skip_special_tokens=True)
                embeddings = st_model.encode(preds, convert_to_tensor=True).to(device)
                #y_embed = st_model.encode(y).to(device)
                target = torch.ones(X.size(0)).to(device)
                loss = criterion(embeddings, y, target)
                val_loss = loss.item()
                val_cos = cosine_similarity(
                    embeddings.detach().cpu().numpy(),
                    y.detach().cpu().numpy()
                )
            val_meters['loss'].update(val_loss, n=X.size(0))
            val_meters['cos'].update(val_cos, n=X.size(0))
        print('Epoch {:d} / val/loss={:.4f}, val/cos={:.4f}'.format(
            epoch + 1,
            val_meters['loss'].avg,
            val_meters['cos'].avg))

        # Keep the checkpoint with the best validation cosine similarity
        if val_meters['cos'].avg > best_score:
            best_score = val_meters['cos'].avg
            torch.save(model.state_dict(), f'{model_name}.pth')
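
The learning-rate schedule used in the training function can be checked with a few lines of arithmetic. The sketch below evaluates the closed-form cosine annealing curve that `CosineAnnealingLR` follows, with `lr=1e-4` and `eta_min=1e-6` taken from the code above. The function name `cosine_annealing_lr` and the figure of 1000 batches per epoch are assumptions made for illustration; the real batch count depends on the dataset size.

```python
import math


def cosine_annealing_lr(step, base_lr, t_max, eta_min):
    """Closed-form cosine annealing: decays base_lr to eta_min over t_max steps."""
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * step / t_max))


BASE_LR = 1e-4    # CFG.lr
ETA_MIN = 1e-6    # eta_min passed to CosineAnnealingLR
T_MAX = 3 * 1000  # num_epochs * batches per epoch; 1000 is a made-up batch count

for step in (0, T_MAX // 2, T_MAX):
    print(step, f"{cosine_annealing_lr(step, BASE_LR, T_MAX, ETA_MIN):.3e}")
# 0 1.000e-04
# 1500 5.050e-05
# 3000 1.000e-06
```

Because `scheduler.step()` is called once per batch and `T_max` is the total number of batches, the rate reaches `eta_min` exactly at the end of the last epoch.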

13. Load the data with pandas.

df = pd.read_csv('/kaggle/input/diffusiondb-data-cleansing/diffusiondb.csv')

trn_df, val_df = train_test_split(df, test_size=0.1, random_state=CFG.seed)

14. Train the model.

train(trn_df, val_df, CFG.model_name, CFG.batch_size, CFG.num_epochs, CFG.lr, CFG.gen_kwargs)

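This seems to be what happens when the connection times out because of network problems. I recommend using wandb to track how the train and val losses change.

Summary

Without PEFT, running this notebook with the vit-gpt2 model for 2 epochs takes roughly 9 hours; with PEFT, all 3 epochs finish within 9 hours.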

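Other Use Cases

Here are the relevant GitHub links; you can follow the guides to deploy, train, and optimize locally.

PEFT library on GitHub: GitHub link

Some topics I find interesting, with short descriptions: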

DreamBooth fine-tuning with LoRA

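GitHub link

This guide shows how to fine-tune DreamBooth with the CompVis/stable-diffusion-v1-4 model using LoRA, a low-rank approximation technique.

Although LoRA was originally designed to reduce the number of trainable parameters in large language models, the technique can also be applied to diffusion models. Full fine-tuning of a diffusion model is time-consuming, which is why lightweight techniques such as DreamBooth and Textual Inversion became popular. With LoRA, customizing and fine-tuning a model on a specific dataset becomes even faster.

In this guide we use the DreamBooth fine-tuning script provided in the PEFT GitHub repository. Feel free to explore it and learn how it works.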

P-tuning for sequence classification

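GitHub link

Fine-tuning large language models for downstream tasks is challenging because they have so many parameters. To work around this, you can use prompts to steer the model toward a particular downstream task without fully fine-tuning it. Typically these prompts are handcrafted, which can be impractical because you need very large validation sets to find the best prompts. P-tuning is a method for automatically searching for and optimizing better prompts in a continuous space.

Read "GPT Understands, Too" to learn more about p-tuning.

This guide shows how to train a roberta-large model (you can also use any GPT, OPT, or BLOOM model) with p-tuning on the mrpc configuration of the GLUE benchmark.

Before you begin, make sure you have installed all the necessary libraries: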

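Afterword

Leaving a placeholder here: I will gradually add the inference notebook for the Kaggle PEFT fine-tuning, along with more hands-on project notes.

If you spot any mistakes, feel free to contact me, and thank you for any comments. If you also compete on Kaggle, please take me along (I am happy to ride your coattails).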