基于ORB-SLAM2实时构建稠密点云

本篇博客最早发布于实验室公共博客,但已无人维护,现迁移至个人博客

ORB-SLAM2是特征点法的经典之作,但是只能构建稀疏二维点云,限制了其使用范围

因期望可以实现移动机器人的导航功能,需要构建三维点云,再通过octomap_server转化为八叉树地图或者栅格地图,运用到实际中去,因此需要在原代码上做些改动

稠密点云的重建工作高翔博士已经完成了一些,感谢高博!我的改进是基于它做的

ORBSLAM2_with_pointcloud_map安装

首先Git下

https://github.com/gaoxiang12/ORBSLAM2_with_pointcloud_map

安装的时候大家可能会遇到一些问题,幸好已经有大佬帮大家做了整理

安装指导

https://blog.csdn.net/Xuesengxinyi/article/details/93469079?ops_request_misc=%257B%2522request%255Fid%2522%253A%2522164968342916782094899573%2522%252C%2522scm%2522%253A%252220140713.130102334.pc%255Fall.%2522%257D&request_id=164968342916782094899573&biz_id=0&utm_medium=distribute.pc_search_result.none-task-blog-2allfirst_rank_ecpm_v1~rank_v31_ecpm-4-93469079.142v7pc_search_result_control_group,157v4control&utm_term=orb+slam保存为pcd&spm=1018.2226.3001.4187

安装问题总结

https://blog.csdn.net/c417469898/article/details/104631814

建议大家先按着安装指导走一遍,有问题再去看安装问题总结

大家可能需要卸载高版本的Eigen,Eigen的卸载和安装可以看下面的博客

https://blog.csdn.net/qq_45401419/article/details/118358687

ORB_SLAM2_with_pointcloud编译报错

../lib/libORB_SLAM2.so:对‘DBoW2::FORB::fromString(cv::Mat&, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&)’未定义的引用

../lib/libORB_SLAM2.so:对‘DBoW2::FORB::toString[abi:cxx11](cv::Mat const&)’未定义的引用

参考解决:

https://blog.csdn.net/chishuideyu/article/details/77189561

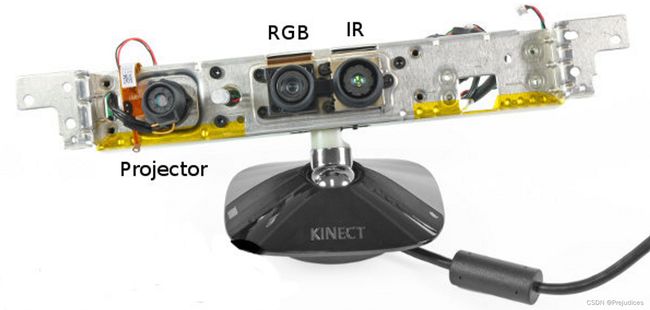

Kinect V1标定与yaml文件编写

实验室暂时只有Kinect V1的RGBD相机,虽然不是最新的产品,但是有的玩就行

ubuntu16.04安装微软kinect V1驱动

主要参考:

https://blog.csdn.net/hongge_smile/article/details/107856087

./RedistMaker的时候报错:

/usr/include/ni/XnOS.h:327:49: error: cannot allocate an object of abstract type 'XnSensorDepthGenerator'

#define XN_NEW(type, ...) new type(__VA_ARGS__)

参考网上的一些资料:

https://stackoverflow.com/questions/36601607/c-abstract-error

https://github.com/avin2/SensorKinect/pull/5

在XnSensorGenerator.h和XnSensorDepthGenerator.h文件中添加:

XnStatus GetPixelCoordinatesInViewPoint(xn::ProductionNode& other, XnUInt32 x, XnUInt32 y, XnUInt32& altX, XnUInt32& altY)

{

return 0;

}

再次./RedistMaker,成功运行,打开终端,输入:

roslaunch freenect_launch freenect.launch

打开rviz,Add→by topic,添加后可查看图像,说明RGBD相机启动成功

Kinect V1相机内参标定

http://wiki.ros.org/openni_launch/Tutorials/IntrinsicCalibration

https://blog.csdn.net/puqian13/article/details/103163585

标定完成后再~/.ros目录下会有camera_info子目录,里面存放着标定完成后的yaml文件

但是该yaml文件和ORB-SLAM2需要的参数设置文件还是有出入的

ORB-SLAM源码中的相机参数设置

https://blog.csdn.net/catpico/article/details/120688795

注意相机内参和畸变系数的对应值

但是ORB-SLAM2设置文件中有几个参数还是有待商榷的,主要是以下两个

- 尺度因子(DepthScaleFactor)

- Camera.bf

有以下讨论:

http://www.luohanjie.com/2017-04-05/the-problem-of-calibration-data-in-orb-slam2.html

https://github.com/raulmur/ORB_SLAM2/issues/737

Camera.bf是fx乘baseline,Kinect的baseline参照上面应该是7.5cm

Kinect V1构造

http://wiki.ros.org/kinect_calibration/technical

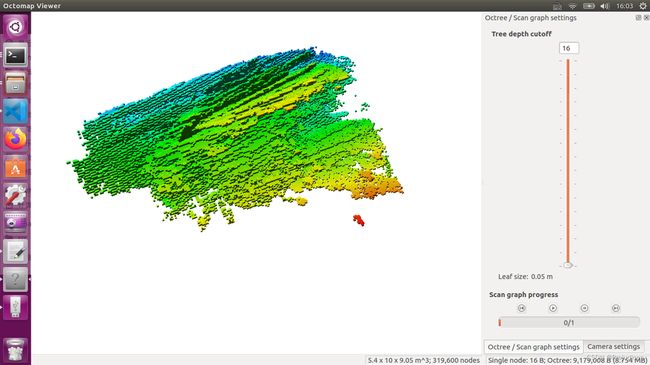

点云地图与八叉树地图保存

高翔博士实现了点云地图的显示功能,我在其基础上增加了3个功能:

- 点云地图的保存

- 八叉树地图的绘制与保存

- rviz实时增量式显示稠密点云

这一节先讲前两个

在ponitcloudmapping.h中添加头文件:

#include 添加八叉树点云生成函数

octomap::Pointcloud generateOctoCloud(KeyFrame* kf, cv::Mat& color, cv::Mat& depth);

在viewer函数中完成具体的功能

void PointCloudMapping::viewer()

{

pcl::visualization::CloudViewer viewer("viewer");

octomap::OcTree tree(0.05);

while(1)

{

{

unique_lock<mutex> lck_shutdown( shutDownMutex );

if (shutDownFlag)

{

break;

}

}

{

unique_lock<mutex> lck_keyframeUpdated( keyFrameUpdateMutex );

keyFrameUpdated.wait( lck_keyframeUpdated );

}

// keyframe is updated

size_t N=0;

{

unique_lock<mutex> lck( keyframeMutex );

N = keyframes.size();

}

for ( size_t i=lastKeyframeSize; i<N ; i++ )

{

PointCloud p = generatePointCloud( keyframes[i], colorImgs[i], depthImgs[i] );

octomap::Pointcloud octo_p = generateOctoCloud( keyframes[i], colorImgs[i], depthImgs[i] );

globalMap += p;

Isometry3d Tcw = ORB_SLAM2::Converter::toSE3Quat( keyframes[i]->GetPose());

Isometry3d Twc = Tcw.inverse();

tree.insertPointCloud(octo_p,octomap::point3d( Twc(0,3), Twc(1,3), Twc(2,3) ));

}

string map_path="/home/redwall/ORB_SLAM2_modified/map";

// 更新中间节点的占据信息并写入磁盘

tree.updateInnerOccupancy();

cout<<"saving octomap ... "<<endl;

tree.writeBinary( map_path+"/octomap/octomap.bt" );

PointCloud tmp;

PointCloud::Ptr cloudPointer(new PointCloud);

cloudPointer = globalMap.makeShared();

cout<<"cloudPointer size:"<<cloudPointer->size()<<endl;

voxel.setInputCloud( cloudPointer );

voxel.filter( tmp );

globalMap.swap( tmp );

cout<<"saving global map ..."<<endl;

pcl::io::savePCDFileBinary(map_path+"/pointcloud/map.pcd",globalMap);

viewer.showCloud( cloudPointer );

cout<<"show global map, size="<<globalMap.points.size()<<endl;

lastKeyframeSize = N;

}

}

实际运行,地图保存至目标位置,我们分别用pcl_viewer和octovis查看,效果如下:

其中运行octovis的时候报错

octovis: error while loading shared libraries: libQGLViewer.so.2: cannot open shared object file: No such file or directory

1、删除octomap和octovis目录下的build文件夹

2、在octomap_devel目录下新建build文件夹,并编译安装

3、locate libQGLViewer.so.2

4、将路径下的libQGLViewer.so*复制到/usr/local/lib目录下

问题解决,顺利查看octomap地图

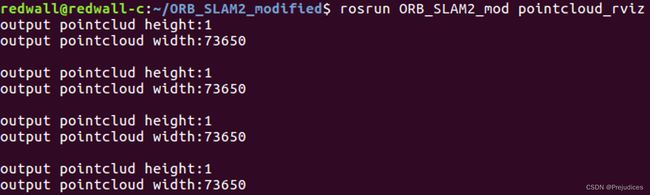

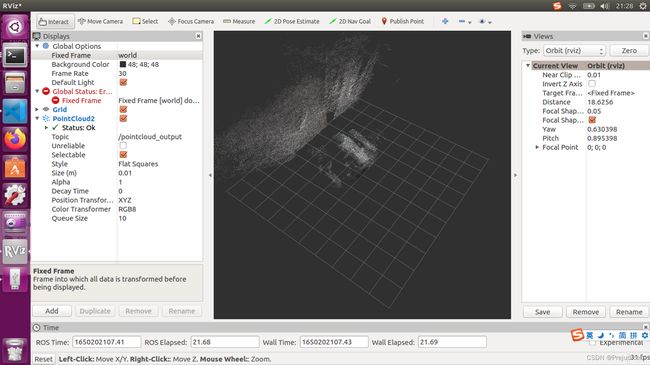

rviz实时增量式显示稠密点云

这部分折磨了我好几天,索性最后解决了,其实博客上已经有许多大佬给出了实现,比如博主熊猫飞天,我也阅读了其博客,写的还是很好的,值得学习

我的实现相较于熊猫飞天稍微简单些,也较易于理解

1版

1版是个失败的版本,大家时间赶的话可以直接跳过

编写测试程序如下:

int main(int argc, char **argv)

{

ros::init(argc, argv, "RGBD");

ros::start();

if(argc != 3)

{

cerr << endl << "Usage: rosrun ORB_SLAM2 RGBD path_to_vocabulary path_to_settings" << endl;

ros::shutdown();

return 1;

}

// Create SLAM system. It initializes all system threads and gets ready to process frames.

ORB_SLAM2::System SLAM(argv[1],argv[2],ORB_SLAM2::System::RGBD,true);

ImageGrabber igb(&SLAM);

ros::NodeHandle nh;

message_filters::Subscriber<sensor_msgs::Image> rgb_sub(nh, "/camera/rgb/image_color", 1);

message_filters::Subscriber<sensor_msgs::Image> depth_sub(nh, "/camera/depth_registered/image_raw", 1);

typedef message_filters::sync_policies::ApproximateTime<sensor_msgs::Image, sensor_msgs::Image> sync_pol;

message_filters::Synchronizer<sync_pol> sync(sync_pol(10), rgb_sub,depth_sub);

sync.registerCallback(boost::bind(&ImageGrabber::GrabRGBD,&igb,_1,_2));

ros::Publisher pcl_pub = nh.advertise<sensor_msgs::PointCloud2>("/pointcloud_output",10);

sensor_msgs::PointCloud2 output;

pcl::PointCloud<pcl::PointXYZRGB> cloud(*SLAM.mpPointCloudMapping->globalMap);

// cout<<"mpTracker globalMap size:"<mpPointCloudMapping->globalMap->size()<

cout<<"system globalMap size:"<<SLAM.mpPointCloudMapping->globalMap->size()<<endl;

// cloud=*SLAM.mpPointCloudMapping->globalMap;

cout<<"cloud size:"<<cloud.size()<<endl;

cout<<"Transform globalMap...."<<endl;

pcl::toROSMsg(*SLAM.mpPointCloudMapping->globalMap,output);

cout<<"output pointclud height:"<<output.height<<endl;

cout<<"output pointcloud width:"<<output.width<<endl;

cout<<"----------------------"<<endl;

output.header.stamp = ros::Time::now();

output.header.frame_id = "world";

double dt=0.1;

ros::Rate loop_rate(1/dt);

while(ros::ok())

{

// cout<<"mpTracker globalMap size:"<mpPointCloudMapping->globalMap->size()<

cout<<"system globalMap size:"<<SLAM.mpPointCloudMapping->globalMap->size()<<endl;

cout<<"cloud size:"<<cloud.size()<<endl;

cout<<"output pointclud height:"<<output.height<<endl;

cout<<"output pointcloud width:"<<output.width<<endl<<endl;

pcl_pub.publish(output);

ros::spinOnce();

loop_rate.sleep();

}

// ros::spin();

// Stop all threads

SLAM.Shutdown();

// Save camera trajectory

SLAM.SaveKeyFrameTrajectoryTUM("KeyFrameTrajectory.txt");

ros::shutdown();

return 0;

}

pcl::PointCloud转化为sensor_msgs::PointCloud2后,实际sensor_msgs::PointCloud2中并没有数据

同时用*SLAM.mpPointCloudMapping->globalMap初始化同类型的cloud后,cloud的size也是0

运行截图如下:

但是地图是可以正常保存的(在pointcloudmapping.cc中更改了地图保存的位置)

编写地图加载与发布rviz话题的测试程序,如下:

#include 运行正常且可在Rviz中显示

并且global map的size应等于output pointcloud width

width是点云的长度

令人头秃

2版

好吧,我实在是有些愚蠢,也实在粗心

我一开始以为是shared_ptr指针的问题,所以把代码里的所有智能指针都替换成了普通指针,结果自然是白费功夫,当然唯一的好处是代码的整洁性有所提高(手动狗头)

//Initialize the Tracking thread

//(it will live in the main thread of execution, the one that called this constructor)

mpPointCloudMapping = new PointCloudMapping( resolution );

mpTracker = new Tracking(this, mpVocabulary, mpFrameDrawer, mpMapDrawer,

mpMap, mpPointCloudMapping, mpKeyFrameDatabase, strSettingsFile, mSensor);

Tracking::Tracking(System *pSys, ORBVocabulary* pVoc, FrameDrawer *pFrameDrawer, MapDrawer *pMapDrawer, Map *pMap, PointCloudMapping* pPointCloud, KeyFrameDatabase* pKFDB, const string &strSettingPath, const int sensor):

mState(NO_IMAGES_YET), mSensor(sensor), mbOnlyTracking(false), mbVO(false), mpORBVocabulary(pVoc),

mpPointCloudMapping( pPointCloud ),

mpKeyFrameDB(pKFDB), mpInitializer(static_cast<Initializer*>(NULL)), mpSystem(pSys),

mpFrameDrawer(pFrameDrawer), mpMapDrawer(pMapDrawer), mpMap(pMap), mnLastRelocFrameId(0)

{

// Load camera parameters from settings file

言归正传,我仔细研究了一下终端的输出,发现下面这段代码实际上只在SLAM系统初始化的时候执行了一次

cout<<"Transform globalMap...."<<endl;

pcl::toROSMsg(*SLAM.mpPointCloudMapping->globalMap,output);

cout<<"output pointclud height:"<<output.height<<endl;

cout<<"output pointcloud width:"<<output.width<<endl;

cout<<"----------------------"<<endl;

所以每次发布点云的width都是0,难怪rviz中啥都没有

在研究一下发现图像的处理都是在回调函数void ImageGrabber::GrabRGBD( )中完成

那么在回调函数中转化点云数据格式是否可行呢?

试了一下可以正常运行,实际运行中会出现fixed frame不存在的问题,运行tf的静态变换可以解决问题,参考如下:

https://blog.csdn.net/xu_fengyu/article/details/86562827

https://blog.csdn.net/weixin_44827364/article/details/104190513

https://stackoverflow.com/questions/52420672/ros-rviz-how-to-visualize-a-point-cloud-that-doesnt-have-a-fixed-frame-transfo

实际效果如下:

(文件太大embed不了…)

roslaunch问题

为了避免每次运行程序都要在终端输上很长一串,费时还容易出错,所以我写了个launch文件,如下:

<launch>

<!-- <include file="$(find freenect_launch)/launch/examples/freenect-registered-xyzrgb.launch" /> -->

<arg name="vocabulary_path" default="/home/redwall/ORB_SLAM2/Vocabulary/ORBvoc.txt"/>

<arg name="settings_path" default="/home/redwall/ORB_SLAM2_modified/Examples/ROS/ORB_SLAM2/my_yaml.yaml"/>

<node pkg="ORB_SLAM2" type="RGBD" name="ORB_ROS_RGBD" output="screen" args="$(arg vocabulary_path) $(arg settings_path)" />

<!-- <node pkg="rviz" type="rviz" name="rviz"/> -->

</launch>

在终端roslaunch上面编写的launch文件,Ctrl+C之后,目录下没有保存点云地图和八叉树地图

而用rosrun命令,则可以保存两种地图

同时修改之后,没有保存“KeyFrameTrajectory.txt”文件

这说明while循环后的语句都没有执行,关键的SLAM.Shutdown( )也没有执行

这说明没有等待各个线程完成,SLAM就终止了,当然也不会去进行全局BA,这可能会影响建图的精度,这是后面需要继续研究和改进的