Pandas学习笔记(1)- Pandas基础

分享一下近期的Pandas学习笔记~

文章目录

- 01 Pandas基本功能

-

- 1-1 Series基本功能

- 1-2 DataFrame基本功能

- 02 Pandas描述性统计

-

- 2-1 基本统计计算

- 2-2 统计信息汇总

-

- .describe()

- 03 Pandas函数应用

-

- 3-0 Python运算符与Pandas方法的映射关系

- 3-1 表格函数

- 3-2 行列函数

-

- 官方文档

-

- .apply

- .method(lambda x: *func*)

- 3.3 元素函数

-

- 官方文档

-

- Series.map()

- DataFrame.applymap()

- 04 Pandas索引重建

-

- 4-1 基本操作

-

- 官方文档

-

- .reindex()

- .reindex_like(*df2*)

- 4-2 Useful application

- 05 Pandas迭代

- 06 Pandas排序

-

- 6-1 按index排序

-

- 官方文档

- 6-2 按values排序

-

- 官方文档

- 6-3 排序算法

- 07 Pandas字符串和文本

- 08 Pandas选项和自定义

- 09 Pandas索引和选择数据

- 10 Pandas统计函数

- 11-Pandas窗口函数

-

- Window functions

-

- 官方文档

-

- Window functions

- .rolling()

- .expanding()

- .ewm()

- 12-DataFrame应用聚合

-

- 01 常用操作

-

- 1-1 DataFrame应用聚合

- 1-2 在整个数据框上应用聚合

- 13-Pandas GroupBy

- 14-Pandas合并/连接

- 15-Pandas处理时间

-

- 15-1 处理时间序列

- 15-2 时间差(Timedelta)

- 16-Pandas分类数据

- 17-Pandas可视化

- 18-Pandas I/O工具

01 Pandas基本功能

1-1 Series基本功能

| No. | 属性或方法 | 描述 |

|---|---|---|

| 1 | axes | 返回行轴标签列表 |

| 2 | dtype | 返回对象的数据类型 |

| 3 | empty | Boolean |

| 4 | ndim | 返回底层数据的维数 |

| 5 | size | 返回元素数量 |

| 6 | values | 将系列作为ndarray返回 |

| 7 | head() | 返回前n行 |

| 8 | tail() | 返回后n行 |

示例

- axes

import pandas as pd

import numpy as np

## Create a series with 100 random numbers

s = pd.Series(np.random.randn(4))

print("The axes are:")

print(s.axes)

输出结果:

>>> The axes are:

>>> [RangeIndex(start=0, stop=4, step=1)]]

上述结果是0到5的值列表的紧凑格式,即:[0,1,2,3,4]。

- values

import pandas as pd

import numpy as np

## Create a series with 4 random numbers

s = pd.Series(np.random.randn(4))

print s

print("The actual data series is:")

print(s.values)

输出结果:

0 1.787373

1 -0.605159

2 0.180477

3 -0.140922

dtype: float64

The actual data series is:

[ 1.78737302 -0.605158851 0.18047664 -0.1409218]

1-2 DataFrame基本功能

| No. | 属性或方法 | 描述 |

|---|---|---|

| 1 | T | 转置 |

| 2 | axes | 返回一个列,行轴标签和列轴标签作为唯一的成员 |

| 3 | dtypes | 返回此对象中的数据类型 |

| 4 | empty | |

| 5 | ndim | 轴/数组维度大小 |

| 6 | shape | 返回表示DataFrame的维度的tuple |

| 7 | size | |

| 8 | values | NDFrame的Numpy表示 |

| 9 | head() | |

| 10 | tail() |

示例

- axes

import pandas as pd

import numpy as np

#Create a Dictionary of series

d = {'Name': pd.Series(['Tom','James','Ricky','Vin','Steve','Minsu','Jack'],

'Age': pd.Series([25,26,25,23,30,29,23]),

'Rating': pd.Series([4.23,3.24,3.98,2.56,3.20,4.6,3.8])}

#Create a DataFrame

df = pd.DataFrame(d)

print("Row axis labels and column axis labels are:")

print(df.axes)

输出结果:

Row axis labels and column axis labels are:

[RangeIndex(start=0, stop=7, step=1), Index([u'Age',u'Name',u'Rating'],

dtype='object')]

- values:将DataFrame中的实际数据作为NDarray返回

import pandas as pd

import numpy as np

#Create a Dictionary of series

d = {'Name': pd.Series(['Tom','James','Ricky','Vin','Steve','Minsu','Jack'],

'Age': pd.Series([25,26,25,23,30,29,23]),

'Rating': pd.Series([4.23,3.24,3.98,2.56,3.20,4.6,3.8])}

#Create a DataFrame

df = pd.DataFrame(d)

print("The actual data in our data frame is:")

print(df.values)

print(type(df.values))

输出结果:

The actual data in our data frame is:

[[25 'Tom' 4.23]

[26 'James' 3.24]

[25 'Ricky' 3.98]

[23 'Vin' 2.56]

[30 'Steve' 3.2]

[29 'Minsu' 4.6]

[23 'Jack' 3.8]]

02 Pandas描述性统计

有很多方法来聚合计算DataFrame的描述性统计信息和其他相关操作。其中大多数是sum(),mean()等聚合函数,但其中一些,如sumsum(),产生一个相同大小的对象。一般来说,这些方法采用轴参数,就像ndarray.{sum, std, …},但轴可以通过名称或整数来指定:

- index (axis=0, 默认)

- columns (axis=1)

2-1 基本统计计算

| No. | 函数 | 描述 |

|---|---|---|

| 1 | count() | 非空观测数 |

| 2 | sum() | 所有值之和 |

| 3 | mean() | 所有值的平均数 |

| 4 | median() | 所有值的中位数 |

| 5 | mode() | 求模 |

| 6 | std | Bressel标准偏差 |

| 7 | min(), max() | |

| 8 | abs() | |

| 9 | prod() | 数组元素的乘积 |

| 10 | cumsum() | 累计总和 |

| 11 | cumprod() | 累计乘积 |

2-2 统计信息汇总

.describe()

重要参数: include

- object:汇总字符串列

- number:汇总数字列

- all:将所有列汇总在一起

示例

import pandas as pd

import numpy as np

#Create a Dictionary of series

d = {'Name': pd.Series(['Tom','James','Ricky','Vin','Steve','Minsu','Jack','Lee','David','Gasper','Betina','Andres']),

'Age': pd.Series([25,26,25,23,30,29,23,34,40,30,51,46]),

'Rating': pd.Series([4.23,3.24,3.98,2.56,3.20,4.6,3.8,3.78,2.98,4.80,4.10,3.65])}

#Create a DataFrame

df = pd.DataFrame(d)

print(df.describe())

print(df.describe(include=['object']))

print(df.describe(include='all'))

输出结果:

[OUTPUT-1]

Age Rating

count 12.000000 12.000000

mean 31.833333 3.743333

std 9.232682 0.661628

min 23.000000 2.560000

25% 25.000000 3.230000

50% 29.500000 3.790000

75% 35.500000 4.132500

max 51.000000 4.800000

[OUTPUT-2]

Name

count 12

unique 12

top Ricky

freq 1

[OUTPUT-3]

Age Name Rating

count 12.000000 12 12.000000

unique NaN 12 NaN

top NaN Ricky NaN

freq NaN 1 NaN

mean 31.833333 NaN 3.743333

std 9.232682 NaN 0.661628

min 23.000000 NaN 2.560000

25% 25.000000 NaN 3.230000

50% 29.500000 NaN 3.790000

75% 35.500000 NaN 4.132500

max 51.000000 NaN 4.800000

03 Pandas函数应用

- 表格函数应用:pipe()

- 行或列函数应用:apply()

- 元素函数应用:applymap()

3-0 Python运算符与Pandas方法的映射关系

| Python运算符 | Pandas方法 |

|---|---|

| + | add() |

| - | sub() 、subtract() |

| * | mul()、multiply() |

| / | truediv()、div()、divide() |

| // | floordiv() |

| % | mod() |

| ** | pow() |

- A motive to use Pandas functions: 可直接传入缺失值处理参数fill_value = value

3-1 表格函数

可以通过将函数和适当数量的参数作为管道参数来执行自定义操作。

提示:pipe()方法不改变原DataFrame,返回一个新的DataFrame。

示例

import pandas as pd

import numpy as np

def adder(ele1,ele2):

return ele1+ele2

df = pd.DataFrame(np.random.randn(5,3),columns=['col1','col2','col3'])

print(df)

print(df.pipe(adder,2))

输出结果:

[Running] set PYTHONIOENCODING=utf8 && python -u "c:\Users\Administrator\Desktop\tester.py"

col1 col2 col3

0 -1.002507 0.378120 -0.190947

1 0.596924 -0.199729 -0.532229

2 -2.944083 -1.040721 -1.952132

3 -0.423783 -0.593296 -0.461555

4 1.799471 0.552055 0.826377

col1 col2 col3

0 0.997493 2.378120 1.809053

1 2.596924 1.800271 1.467771

2 -0.944083 0.959279 0.047868

3 1.576217 1.406704 1.538445

4 3.799471 2.552055 2.826377

[Done] exited with code=0 in 0.822 seconds

官方文档:

#Use .pipe when chaining together functions that expect Series, DataFrames or Groupby objects.

#Instead of writing:

>>> func(g(h(df), arg1=a), arg2=b, arg3=c)

#You can write:

>>> df.pipe(h).pipe(g, arg1=a).pipe(func, arg2=b, arg3=c)

3-2 行列函数

可以使用apply()方法沿DataFrame的轴应用任意函数(axis参数)。默认情况下,操作按列执行,将每列列为数组。

官方文档

.apply

DataFrame.apply(func, axis=0, raw=False, result_type=None, args=(), **kwds)

Apply a function along an axis of the DataFrame.

Objects passed to the function are Series objects whose index is either the DataFrame’s index (axis=0) or the DataFrame’s columns (axis=1). By default (result_type=None), the final return type is inferred from the return type of the applied function. Otherwise, it depends on the result_type argument.

result_type: {‘expand’, ‘reduce’, ‘broadcast’, None}, default None

only act when axis=1

- expand: list-like results will be turned into columns.

- reduce: returns a Series if possible.

- broadcast: results will be broadcast to the original shape of the DataFrame, the original index and columns will be retained.

示例-1

import pandas as pd

import numpy as np

df = pd.DataFrame(np.random.randn(5,3),columns=['col1','col2','col3'])

print(df)

print(df.apply(np.mean))

print(df.apply(np.mean,axis=1))

输出结果:

[OUTPUT-1]

col1 col2 col3

0 0.071918 -0.933174 -0.458476

1 -0.512443 0.455947 0.552345

2 -0.035369 0.563239 -2.477740

3 -1.204645 1.383545 -1.124751

4 0.872696 -0.702149 0.360365

[OUTPUT-2]

col1 -0.161569

col2 0.153481

col3 -0.629651

dtype: float64

[OUTPUT-3]

0 -0.439911

1 0.165283

2 -0.649957

3 -0.315284

4 0.176971

dtype: float64

示例-2

import pandas as pd

import numpy as np

df = pd.DataFrame(np.random.randn(5,3), columns=['col1','col2','col3'])

df_1 = df.apply(lambda x: x.max() - x.min())

print(df)

print(df_1)

输出结果

col1 col2 col3

0 -0.030535 0.165539 1.048389

1 1.679696 -0.845977 -0.597818

2 0.638122 -2.360784 -1.897171

3 0.529273 -1.702445 -0.679899

4 -0.287787 0.065303 0.120348

col1 1.967483

col2 2.526324

col3 2.945560

dtype: float64

.method(lambda x: func)

去官方文档找补充说明

3.3 元素函数

在DataFrame上的方法.applymap()和类似于在Series上的map()接受任何python函数,并返回单个值。

官方文档

Series.map()

Series.map(arg, na_action=None)

Map valuesof Series according to input correspondence.

Used for substituting each value in a Series with another value, that may be derived from a function, a dict or a series.

arg: function, collections.abc.Mapping subclass or Series

na_action: {None, ‘ignore’}, default None

- if ‘ignore’, propagate NaN values, without passing them to the mapping correspondence.

DataFrame.applymap()

DataFrame.applymap(func)

Apply a function to a Dataframe elementwise.

This method applies a function that accepts and returns a scalar to every element of a DataFrame.

Returns a DataFrame.

func: callable (Python function, returns a single value from a single value.)

示例-1

import pandas as pd

import numpy as np

df = pd.DataFrame(np.random.randn(5,3), columns=['col1','col2','col3'])

df_col1 = df['col1'].map(lambda x: x*100)

print(df)

print(df_col1)

输出结果:

col1 col2 col3

0 0.654253 -1.337191 0.609194

1 -1.319780 -0.525150 -1.183926

2 1.325296 2.053831 -0.414354

3 0.947637 -1.838234 -0.615808

4 -2.769647 0.517323 1.485486

0 65.425259

1 -131.977998

2 132.529611

3 94.763670

4 -276.964732

Name: col1, dtype: float64

示例-2

import pandas as pd

import numpy as np

df = pd.DataFrame(np.random.randn(5,3), columns=['col1','col2','col3'])

df_1 = df.applymap(lambda x: x*100)

print(df)

print(df_1)

输出结果:

col1 col2 col3

0 -1.782354 1.762709 2.508919

1 0.068842 -0.120896 0.224085

2 -0.159717 -0.589034 0.606623

3 2.335862 -0.977052 -0.814304

4 0.544693 2.109825 0.925263

col1 col2 col3

0 -178.235406 176.270857 250.891855

1 6.884196 -12.089569 22.408473

2 -15.971700 -58.903353 60.662266

3 233.586210 -97.705154 -81.430437

4 54.469309 210.982525 92.526264

04 Pandas索引重建

4-1 基本操作

官方文档

.reindex()

DataFrame.reindex(**kwargs)

Confrom Series/DataFrame to new index with optional filling logic.

keywords for axes: array-like, optional

method: {None, backfill/bfill, pad/ffill, nearest}

- Method to use for filling holes in reindexed DataFrame.

copy: bool, default True

level: int or name

DataFrame.reindex supports two calling conventions

- (index=index_labels, columns=column_labels,…)

- (labels, axis={‘index’,‘columns’},…)

官方示例

#Create a dataframe with some fictional data.

import pandas as pd

idx = ['Firefox', 'Chrome', 'Safari', 'IE10', 'Konqueror']

df = pd.DataFrame({

'http_status': [200, 200, 404, 404, 301],

'response_time': [0.04, 0.02, 0.07, 0.08, 1.0],

index = idx

})

new_idx = ['Safari', 'Iceweasel', 'Comodo Dragon', 'IE10', 'Chrome']

df_1 = df.reindex(new_idx)

df_2 = df.reindex(new_idx, fill_value=0)

df_3 = df.reindex(['http_status', 'user_agent'], axis='columns')

print(df)

print(df_1)

print(df_2)

print(df_3)

输出结果:

[df] http_status response_time

Firefox 200 0.04

Chrome 200 0.02

Safari 404 0.07

IE10 404 0.08

Konqueror 301 1.00

[df_1] http_status response_time

Safari 404.0 0.07

Iceweasel NaN NaN

Comodo Dragon NaN NaN

IE10 404.0 0.08

Chrome 200.0 0.02

[df_2] http_status response_time

Safari 404 0.07

Iceweasel 0 0.00

Comodo Dragon 0 0.00

IE10 404 0.08

Chrome 200 0.02

[df_3] http_status user_agent

Firefox 200 NaN

Chrome 200 NaN

Safari 404 NaN

IE10 404 NaN

Konqueror 301 NaN

.reindex_like(df2)

DataFrame.reindex_like(other, method=None, copy=True, limit=None, tolerance=None)

官方示例

import pandas as pd

df1 = pd.DataFrame([

[24.3, 75.7, 'high'],

[31, 87.8, 'high'],

[22, 71.6, 'medium'],

[35, 95, 'medium']

],

columns=['temp_celsius', 'temp_fahrenheit', 'windspeed'],

index=pd.date_range(start='2020-10-09', end='2020-10-12', freq='D')

)

df2 = pd.DataFrame([

[28, 'low'],

[30, 'low'],

[35.1, 'medium']

],

columns=['temp_celsius', 'windspeed'],

index=pd.date_range(start='2020-10-09', end='2020-10-11', freq='D')

)

print('df1:\n', df1)

print('df2:\n', df2)

df3=df2.reindex_like(df1)

print('df3')

输出结果:

df1:

temp_celsius temp_fahrenheit windspeed

2020-10-09 24.3 75.7 high

2020-10-10 31.0 87.8 high

2020-10-11 22.0 71.6 medium

2020-10-12 35.0 95.0 medium

df2:

temp_celsius windspeed

2020-10-09 28.0 low

2020-10-10 30.0 low

2020-10-11 35.1 medium

df3:

temp_celsius temp_fahrenheit windspeed

2020-10-09 28.0 NaN low

2020-10-10 30.0 NaN low

2020-10-11 35.1 NaN medium

2020-10-12 NaN NaN NaN

4-2 Useful application

| 序号 | 应用 | expression | note |

|---|---|---|---|

| 1 | 重建索引与其他对象对齐 | df1=df1.reindex_like(df2) | |

| 2 | 填充时重新加注 | df1=df1.reindex_like(df2, method=‘ffill’) | method: {None, backfill/bfill, pad/ffill, nearest} |

| 3 | 重命名 | df.rename(columns={}, index={}, inplace=bool) | inplace=True, 在原DataFrame上进行修改 |

05 Pandas迭代

Pandas对象之间的基本迭代取决于其类型。当迭代一个系列时,它被视为数组。基本迭代产生:

- Series - 值

- DataFrame - 列标签

具体规则

- 迭代DataFrame提供列名。

- iteritems() - 迭代(key, value)对

- iterrows() - 迭代(索引,系列)对

- itertuples() - 以namedtuples迭代行

示例

import pandas as pd

import numpy as np

df = pd.DataFrame(np.random.randn(4,3), columns=['A','B','C'], index = pd.date_range(start='2020-10-09', end='2020-10-12', freq='D'))

print(df)

# 1-迭代列名

for col in df:

print(col)

# 2-迭代(key,value)

for key, value in df.iteritems():

print(key,value)

# 3-迭代(index, Series)

for i, s in df.iterrows():

print(i, s)

# 4-迭代namedtuples

for row in df.itertuples():

print(row)

输出结果:

A B C

2020-10-09 -1.030271 -0.906981 -0.659338

2020-10-10 0.767799 -2.445025 -0.174059

2020-10-11 2.696855 -0.277801 0.759680

2020-10-12 0.062061 -0.026966 -1.539570

# 1-迭代列名

A

B

C

# 2-迭代(key,value)

A 2020-10-09 -1.030271

2020-10-10 0.767799

2020-10-11 2.696855

2020-10-12 0.062061

Freq: D, Name: A, dtype: float64

B 2020-10-09 -0.906981

2020-10-10 -2.445025

2020-10-11 -0.277801

2020-10-12 -0.026966

Freq: D, Name: B, dtype: float64

C 2020-10-09 -0.659338

2020-10-10 -0.174059

2020-10-11 0.759680

2020-10-12 -1.539570

Freq: D, Name: C, dtype: float64

# 3-迭代(index, Series)

2020-10-09 00:00:00 A -1.030271

B -0.906981

C -0.659338

Name: 2020-10-09 00:00:00, dtype: float64

2020-10-10 00:00:00 A 0.767799

B -2.445025

C -0.174059

Name: 2020-10-10 00:00:00, dtype: float64

2020-10-11 00:00:00 A 2.696855

B -0.277801

C 0.759680

Name: 2020-10-11 00:00:00, dtype: float64

2020-10-12 00:00:00 A 0.062061

B -0.026966

C -1.539570

Name: 2020-10-12 00:00:00, dtype: float64

# 4-迭代namedtuples

Pandas(Index=Timestamp('2020-10-09 00:00:00', freq='D'), A=-1.0302714533530397, B=-0.9069810243015767, C=-0.659338259231412)

Pandas(Index=Timestamp('2020-10-10 00:00:00', freq='D'), A=0.7677989980168033, B=-2.445024835320876, C=-0.1740588651490762)

Pandas(Index=Timestamp('2020-10-11 00:00:00', freq='D'), A=2.696855141735038, B=-0.2778005750782568, C=0.7596804345501883)

Pandas(Index=Timestamp('2020-10-12 00:00:00', freq='D'), A=0.062061332307071566, B=-0.02696577075586974, C=-1.5395702171663705)

06 Pandas排序

Pandas有两类排序方式:

- .sort_index() - 按标签

- .sort_values() - 按实际值

6-1 按index排序

官方文档

DataFrame.sort_index (axis=0, level=None, ascending=True, inplace=False, kind=‘quicksort’, na_position=‘last’, sort_remaining=True, ignore_index=False, key=None)

Sort object by labels (along an axis).

Returns a new DataFrame sorted by label if inplace argument is False, otherwise updates the original DataFrame and returns None.

- axis: {0/‘index’, 1/‘columns’}

- ascending: bool or list of bools, default True

- inplace: bool, default False

- kind: {quicksort, mergesort, heapsort}, default quicksort

- sort_remaining: bool, default True

** If true and sorting by level and index is multilevel, sort by other levels too(in order) after sorting by specified level.- ignore_index: bool, default False

** If True, the resulting axis will be labeled 0,1,…,n-1.- key: callable, optional

** If not None,apply the key function to the index values before sorting.

示例

import pandas as pd

df = pd.DataFrame({'b': [1, 2, 3, 4], 'A': [4, 3, 2, 1]}, index=['A', 'C', 'b', 'd'])

df1 = df.sort_index(key=lambda x: x.str.lower())

df2 = df.sort_index(axis=1, key=lambda x: x.str.lower())

print('df:\n',df)

print('df1:\n',df1)

print('df2:\n',df2)

输出结果:

df:

b A

A 1 4

C 2 3

b 3 2

d 4 1

df1:

b A

A 1 4

b 3 2

C 2 3

d 4 1

df2:

A b

A 4 1

C 3 2

b 2 3

d 1 4

6-2 按values排序

官方文档

DataFrame.sort_values (by, axis=0, ascending=True, inplace=False, kind=‘quicksort’, na_position=‘last’, ignore_index=False, key=None)

Sort by the values along either axis.

by: str or list of str

key: callable, optional

** Apply the key function to the values before sorting.

na_position: {‘first’, ‘last’}, default ‘last’

** Puts NaNs at the beginning if ‘first’; ‘last’ puts NaNs at the end.

示例

import pandas as pd

import numpy as np

df = pd.DataFrame({

'col1': ['A', 'A', 'B', np.nan, 'D', 'C'],

'col2': [2, 1, 9, 8, 7, 4],

'col3': [0, 1, 9, 4, 2, 3],

'col4': ['a', 'B', 'a', 'D', 'c', 'd']

})

df1 = df.sort_values(by=['col4','col1'], ascending=False, na_position='first', key=lambda col: col.str.lower())

print('df:\n', df)

print('df1:\n', df1)

输出结果:

df:

col1 col2 col3 col4

0 A 2 0 a

1 A 1 1 B

2 B 9 9 a

3 NaN 8 4 D

4 D 7 2 c

5 C 4 3 d

df1:

col1 col2 col3 col4

3 NaN 8 4 D

5 C 4 3 d

4 D 7 2 c

1 A 1 1 B

2 B 9 9 a

0 A 2 0 a

6-3 排序算法

sort_values()提供了mergesort, heapsort和quicksort三种类型的算法。

具体算法原理需要自己后续进行学习。

07 Pandas字符串和文本

Pandas提供了一组字符串函数,可以方便地对字符串数据进行操作,并忽略NaN值。这些方法几乎都是用Python字符串函数,因此,可以将Series对象转换为String对象执行字符串操作。

| No. | expression | description |

|---|---|---|

| 1 | lower()和upper() | 大小写转换 |

| 2 | len() | |

| 3 | strip() | 删除两侧换行符和空格 |

| 4 | split(’’) | 拆分字符串,默认按空格拆分 |

| 5 | cat(sep=’’) | 使用给定的分隔符链接元素 |

| 6 | get_dummies() | |

| 7 | contains(pattern) | 检查是否包含,返回布尔值 |

| 8 | replace(a,b) | 用b替换a |

| 9 | repeat(value) | 重复每个元素指定的次数 |

| 10 | count(pattern) | |

| 11 | startswith(pattern)和endswith(pattern) | |

| 12 | find(pattern) | 返回pattern第一次出现的位置 |

| 13 | swapcase | 变换大小写 |

| 14 | islower()、isupper()和isnumeric() |

示例

import pandas as pd

import numpy as np

#cat(sep=pattern)

s = pd.Series(['Tom', 'William Rick', 'John', 'Alber@t'])

print('cat(sep=pattern\n)', s.str.cat(sep=' <> '))

#get_dummies()

print('get_dummies()\n',s.str.get_dummies())

输出结果:

cat(sep=pattern

) Tom <> William Rick <> John <> Alber@t

get_dummies()

Alber@t John Tom William Rick

0 0 0 1 0

1 0 0 0 1

2 0 1 0 0

3 1 0 0 0

08 Pandas选项和自定义

Pandas提供API 来定义其行为的某些方面:

- get_option() - 查看参数值

- set_option() - 修改当前值

- reset_option() - 重置为默认值

- describe_option()

- option_context()

常用参数表

| No. | param | default | description |

|---|---|---|---|

| 1 | display.max_rows | 60 | 显示的最大行数 |

| 2 | display.max_columns | 20 | 显示的最大列数 |

| 3 | display.min_rows | 10 | 显示的最小行数 |

| 4 | display.precision | 6 | 显示十进制数的精度 |

option_context(): 上下文管理器,用于临时设置语句中的选项。

import pandas as pd

with pd.option_context('display.max_rows', 10):

print(pd.get_option('display.max_rows'))

print(pd.get_option('display.max_rows'))

输出结果:

>>> 10

>>> 60

09 Pandas索引和选择数据

Pandas目前支持三种类型的多轴索引:

| No. | expression | description | 访问方式 |

|---|---|---|---|

| 1 | .loc() | 基于标签 | 1)单个标量标签;2)标签列表;3)切片对象;4)一个布尔数组 |

| 2 | .iloc() | 基于整数 | 1)整数;2)整数列表;3)系列值 |

| 3 | .ix() | 基于标签和整数 |

属性访问:可以使用属性运算符.来选择列

import pandas as pd

import numpy as np

df = pd.DataFrame(np.random.randn(8,4), columns=['A','B','C','D'])

print(df.A)

输出结果:

0 0.058104

1 -1.791556

2 1.314500

3 -0.393179

4 -0.123881

5 -0.470718

6 0.404181

7 0.331097

Name: A, dtype: float64

10 Pandas统计函数

- pct_change()函数

将每个元素与前一个元素进行比较,并计算变化百分比。

import pandas as pd

import numpy as np

s = pd.Series([1,2,3,4,5,4])

print(s.pct_change())

df = pd.DataFrame(np.random.randn(5,2), columns=['A','B'])

print(df)

print(df.pct_change())

print(df.pct_change(axis=1))

输出结果:

0 NaN

1 1.000000

2 0.500000

3 0.333333

4 0.250000

5 -0.200000

dtype: float64

A B

0 -0.265247 -0.457053

1 -1.457512 -0.789424

2 -0.001411 0.179580

3 1.064182 -2.083008

4 -1.826242 -0.793300

A B

0 NaN NaN

1 4.494926 0.727203

2 -0.999032 -1.227482

3 -755.284391 -12.599323

4 -2.716100 -0.619157

A B

0 NaN -0.766848

1 NaN -1.650977

2 NaN -9.941456

3 NaN 174.946646

4 NaN -2.333735

- 协方差

| 对象 | expression | description |

|---|---|---|

| Series | s1.cov(s2) | 计算序列对象之间的协方差 |

| DataFrame | df.cov() | 计算所有列之间的协方差 |

import pandas as pd

import numpy as np

df = pd.DataFrame(np.random.randn(8,6),columns=['a','b','c','d','e','f'])

pd.set_option('display.precision',2)

print(df)

print(df.cov())

输出结果:

a b c d e f

0 0.60 0.90 0.32 0.25 0.89 -0.40

1 0.03 -0.62 -0.26 -0.66 -0.57 -0.23

2 -0.80 -1.70 1.37 1.99 -0.39 1.40

3 1.32 -0.04 0.08 -0.26 -1.55 0.61

4 1.50 0.80 -0.15 -0.08 -0.04 2.41

5 0.39 -0.53 1.28 0.13 -0.29 0.06

6 1.33 0.12 0.89 -0.46 -0.09 2.04

7 -0.26 0.20 -1.01 0.82 -0.02 1.17

a b c d e f

a 0.70 0.48 -5.45e-02 -0.51 -6.33e-02 2.63e-01

b 0.48 0.71 -3.56e-01 -0.37 2.52e-01 7.28e-02

c -0.05 -0.36 6.78e-01 0.19 -2.60e-03 5.00e-03

d -0.51 -0.37 1.93e-01 0.72 9.47e-02 1.41e-01

e -0.06 0.25 -2.60e-03 0.09 4.62e-01 -3.29e-02

f 0.26 0.07 5.00e-03 0.14 -3.29e-02 1.09e+00

- 相关性

| 对象 | expression | desciption |

|---|---|---|

| Series | s1.corr(s2) | 两个系列之间的线性相关关系 |

| DataFrame | df.corr() | 每个系列之间的相关关系 |

method:

- pearson - default

- spearman

- kendall

- 数据排名

Series.rank(axis=0, method=‘average’, numeric_only=None, na_option=‘keep’, ascending=True, pct=False)

DataFrame.rank(axis=0, method=‘average’, numeric_only=None, na_option=‘keep’, ascending=True, pct=False)

- pct: whether or not to display the returned rankings in percentile form

11-Pandas窗口函数

为了处理数字数据,pandas提供了几个变体,如滚动、展开和指数移动窗口统计的权重。其中包括综合、均值、中位数、方差、协方差、相关性等。

Window functions

官方文档

Window functions

For working with data, a number of window functions are provided for computing common window or rolling statistics. Among these are count, sum, mean, median, correlation, variance, covariance, standard deviation, skewness, and kurtosis.

The rolling() and expanding() functions can be used directly from DataFrameGroupby objects.

.rolling()

DataFrame.rolling(window, min_periods=None, center=False, win_type=None, on=None, axis=0, closed=None)

- window: the number of observations used for calculating the statistic.

- min_periods: Minimum number of observations in window required to have a value (otherwise result is NA), default 1

- center: set the labels at the center of the window

- win_type: Provide a window type. If None, all points are evenly weighted.

- axis: default 0

- closed: Make the interval closed on the ‘right’, ‘left’, ‘both’ or

import pandas as pd

import numpy as np

df = pd.DataFrame(

np.random.randn(10,4),

index = pd.date_range(start='2020-10-01',periods=10),

columns = ['a','b','c','d']

)

print(df)

print('rolling object is:\n', df.rolling(window=3))

df1 = df.rolling(window=3).mean()

df2 = df.mean()

df3 = df.rolling(window=3, axis=1).sum()

print(df1)

print(df2)

print(df3)

输出结果

a b c d

2020-10-01 0.359021 0.975536 -0.410890 0.492394

2020-10-02 0.698119 -0.930699 0.280391 -0.556639

2020-10-03 -1.253823 -0.112911 -0.752055 -2.453324

2020-10-04 -1.598137 -0.279228 1.649673 -1.083345

2020-10-05 -0.194925 0.054404 0.329581 -0.240446

2020-10-06 0.345830 -0.431603 0.746752 -1.536757

2020-10-07 0.604672 -0.411267 -1.343458 0.029782

2020-10-08 -0.847642 -0.685667 0.274624 1.127408

2020-10-09 0.324988 -0.634140 0.426157 1.001843

2020-10-10 0.197737 1.788079 -1.134500 -0.051234

rolling object is:

Rolling [window=3,center=False,axis=0]

a b c d

2020-10-01 NaN NaN NaN NaN

2020-10-02 NaN NaN NaN NaN

2020-10-03 -0.065561 -0.022692 -0.294184 -0.839190

2020-10-04 -0.717947 -0.440946 0.392670 -1.364436

2020-10-05 -1.015628 -0.112578 0.409066 -1.259038

2020-10-06 -0.482411 -0.218809 0.908669 -0.953516

2020-10-07 0.251859 -0.262822 -0.089042 -0.582474

2020-10-08 0.034287 -0.509512 -0.107361 -0.126522

2020-10-09 0.027339 -0.577024 -0.214226 0.719678

2020-10-10 -0.108306 0.156091 -0.144573 0.692672

a -0.136416

b -0.066750

c 0.006628

d -0.327032

dtype: float64

a b c d

2020-10-01 NaN NaN 0.923668 1.057040

2020-10-02 NaN NaN 0.047811 -1.206947

2020-10-03 NaN NaN -2.118789 -3.318290

2020-10-04 NaN NaN -0.227692 0.287100

2020-10-05 NaN NaN 0.189060 0.143539

2020-10-06 NaN NaN 0.660979 -1.221608

2020-10-07 NaN NaN -1.150053 -1.724943

2020-10-08 NaN NaN -1.258685 0.716365

2020-10-09 NaN NaN 0.117005 0.793859

2020-10-10 NaN NaN 0.851316 0.602345

.expanding()

DataFrame.expanding(min_periods=1, center=None, axis=0)

Provide expanding transformations.

Returns: a Window sub-classed for the particular operation

import pandas as pd

import numpy as np

df = pd.DataFrame(

np.random.randn(10,4),

index = pd.date_range(start='2020-10-01',periods=10),

columns = ['a','b','c','d']

)

print(df)

df1 = df.expanding(min_periods=3).sum()

df2 = df.expanding(min_periods=2, axis=1).sum()

print(df1)

print(df2)

a b c d

2020-10-01 0.303716 0.435884 -1.405003 -2.633900

2020-10-02 0.155709 -0.272056 -1.940426 0.539937

2020-10-03 -1.184132 -0.539030 0.496024 -0.224957

2020-10-04 1.709428 -1.639442 -0.509769 -0.643674

2020-10-05 -0.091363 -1.316263 0.863490 -1.228090

2020-10-06 0.140226 -0.439552 1.356944 -0.073533

2020-10-07 0.150266 -1.140866 -1.017271 1.922022

2020-10-08 1.184664 -1.242892 0.424909 2.071605

2020-10-09 0.416593 0.090358 -0.160895 0.172974

2020-10-10 -1.044040 -1.205647 0.274271 -1.460815

a b c d

2020-10-01 NaN NaN NaN NaN

2020-10-02 NaN NaN NaN NaN

2020-10-03 -0.724706 -0.375202 -2.849404 -2.318920

2020-10-04 0.984722 -2.014644 -3.359173 -2.962593

2020-10-05 0.893359 -3.330907 -2.495683 -4.190683

2020-10-06 1.033585 -3.770459 -1.138739 -4.264216

2020-10-07 1.183851 -4.911325 -2.156011 -2.342194

2020-10-08 2.368516 -6.154216 -1.731102 -0.270588

2020-10-09 2.785109 -6.063858 -1.891997 -0.097614

2020-10-10 1.741068 -7.269506 -1.617727 -1.558429

a b c d

2020-10-01 NaN 0.739601 -0.665402 -3.299302

2020-10-02 NaN -0.116347 -2.056773 -1.516836

2020-10-03 NaN -1.723162 -1.227137 -1.452094

2020-10-04 NaN 0.069986 -0.439783 -1.083457

2020-10-05 NaN -1.407625 -0.544136 -1.772225

2020-10-06 NaN -0.299326 1.057618 0.984086

2020-10-07 NaN -0.990600 -2.007871 -0.085849

2020-10-08 NaN -0.058227 0.366681 2.438287

2020-10-09 NaN 0.506951 0.346056 0.519030

2020-10-10 NaN -2.249688 -1.975417 -3.436232

.ewm()

DataFrame.ewm(com=None, span=None, halflife=None, alpha=None, min_periods=0, adjust=True, ignore_na=False, axis=0, times=None)

Provide exponential weighted functions.

Available EW functions: mean(), var(), corr(), cov()

Exactly one parameter: com, span, halflife, or alpha must be provided.

- com: Specify decay in terms of center of mass

- span: Specify decay in terms of span

- halflife: Specify decay in terms of half-life

- alpha: Specify smoothing factor α directrly

- min_periods: Minimum number of observations in window required to have a value

- adjust: Divide by decaying adjustment factor in beginning periods to accout for imbalance in relative weightings (viewing EWMA as a moving average)

** When True, the EW function is calculated using weights ω = ( 1 − α ) i \omega=(1-\alpha)^i ω=(1−α)i. For example, the EW moving average of the series [ x 0 , x 1 , ⋅ ⋅ ⋅ , x t x_0, x_1, \cdot\cdot\cdot, x_t x0,x1,⋅⋅⋅,xt] would be:

y t = x t + ( 1 − α ) x t − 1 + ( 1 − α ) 2 x t − 2 + ⋅ ⋅ ⋅ + ( 1 − α ) t x 0 1 + ( 1 − α ) + ( 1 − α ) 2 + ⋅ ⋅ ⋅ + ( 1 − α ) t y_t=\frac{x_t+(1-\alpha)x_{t-1}+(1-\alpha)^2x_{t-2}+\cdot\cdot\cdot+(1-\alpha)^tx_0}{1+(1-\alpha)+(1-\alpha)^2+\cdot\cdot\cdot+(1-\alpha)^t} yt=1+(1−α)+(1−α)2+⋅⋅⋅+(1−α)txt+(1−α)xt−1+(1−α)2xt−2+⋅⋅⋅+(1−α)tx0

** When False, the exponentially weighted function is calculated recursively:

y 0 = x 0 y_0=x_0 y0=x0

y t = ( 1 − α ) y t − 1 + α x t y_t=(1-\alpha)y_{t-1}+{\alpha}x_t yt=(1−α)yt−1+αxt

import pandas as pd

import numpy as np

df = pd.DataFrame({'B': [0, 1, 2, np.nan, 4]})

print(df)

df1 = df.ewm(com=0.5).mean()

print(df1)

#Specifying times with a timedelta halflife when computing mean

times = pd.date_range(start='2020-10-12', periods=5)

df2 = df.ewm(halflife='4 days', times=pd.DatetimeIndex(times)).mean()

print(df2)

输出结果:

B

0 0.0

1 1.0

2 2.0

3 NaN

4 4.0

B

0 0.000000

1 0.750000

2 1.615385

3 1.615385

4 3.670213

B

0 0.000000

1 0.543214

2 1.114950

3 1.114950

4 2.144696

12-DataFrame应用聚合

01 常用操作

1-1 DataFrame应用聚合

import pandas as pd

import numpy as np

df = pd.DataFrame(

np.random.randn(10,4),

index = pd.date_range('2020-10-14', periods=10),

columns = ['A', 'B', 'C', 'D']

)

print(df)

r = df.rolling(window=3, min_periods=1)

print(r)

输出结果:

A B C D

2020-10-14 -0.293505 0.245022 -1.020912 0.249647

2020-10-15 -0.370049 0.253555 0.431085 0.456073

2020-10-16 -0.526489 3.482717 -0.082019 0.169488

2020-10-17 1.310946 0.033661 -2.182524 -0.056167

2020-10-18 0.245885 0.027550 1.048386 0.091607

2020-10-19 1.241919 -0.629410 0.101962 0.744189

2020-10-20 -0.062255 -0.602408 0.768059 -0.771600

2020-10-21 -1.114848 -0.955441 1.308255 0.023233

2020-10-22 -0.059666 -0.860865 1.058340 -0.236879

2020-10-23 0.314197 0.995166 -0.703913 0.183485

Rolling [window=3,min_periods=1,center=False,axis=0]

1-2 在整个数据框上应用聚合

import pandas as pd

import numpy as np

df = pd.DataFrame(np.random.randn(10,4), index=pd.date_range('2020-10-14', periods=10), columns=['A', 'B', 'C', 'D'])

r = df.rolling(window=3, min_periods=1)

print(r.aggregate(np.sum))

#在数据框的单个列上应用聚合

print(r['A'].aggregate(np.sum))

#在DataFrame的单个列上应用多个函数

print(r['A'].aggregate([np.sum, np.mean]))

#在DataFrame的多个列上应用多个函数

print(r[['A','B']].aggregate([np.sum, np.mean]))

#将不同的函数应用于DataFrame的不同列

print(r.aggregate({'A': np.mean, 'B': np.sum}))

输出结果:

A B C D

2020-10-14 0.081500 0.472049 2.386231 -0.789650

2020-10-15 1.135092 0.288560 0.304266 0.600378

2020-10-16 -1.701276 0.851731 1.070779 0.278490

2020-10-17 1.021226 0.535126 -0.692457 0.386223

2020-10-18 0.118333 0.971667 -1.028922 1.159587

2020-10-19 -0.645824 -0.640767 0.427543 0.689348

2020-10-20 1.722740 -0.211914 -0.217482 -0.011830

2020-10-21 -0.394792 -0.047677 0.510992 0.741355

2020-10-22 -0.273230 -0.313764 0.712516 -0.489515

2020-10-23 0.389186 0.070262 -2.145313 -0.792609

A B C D

2020-10-14 NaN NaN NaN NaN

2020-10-15 NaN NaN NaN NaN

2020-10-16 -0.484684 1.612340 3.761276 0.089219

2020-10-17 0.455043 1.675417 0.682588 1.265092

2020-10-18 -0.561716 2.358524 -0.650599 1.824301

2020-10-19 0.493735 0.866026 -1.293835 2.235158

2020-10-20 1.195249 0.118986 -0.818860 1.837105

2020-10-21 0.682124 -0.900358 0.721053 1.418873

2020-10-22 1.054717 -0.573354 1.006026 0.240011

2020-10-23 -0.278837 -0.291179 -0.921806 -0.540769

2020-10-14 NaN

2020-10-15 NaN

2020-10-16 -0.484684

2020-10-17 0.455043

2020-10-18 -0.561716

2020-10-19 0.493735

2020-10-20 1.195249

2020-10-21 0.682124

2020-10-22 1.054717

2020-10-23 -0.278837

Freq: D, Name: A, dtype: float64

sum mean

2020-10-14 NaN NaN

2020-10-15 NaN NaN

2020-10-16 -0.484684 -0.161561

2020-10-17 0.455043 0.151681

2020-10-18 -0.561716 -0.187239

2020-10-19 0.493735 0.164578

2020-10-20 1.195249 0.398416

2020-10-21 0.682124 0.227375

2020-10-22 1.054717 0.351572

2020-10-23 -0.278837 -0.092946

A B

sum mean sum mean

2020-10-14 NaN NaN NaN NaN

2020-10-15 NaN NaN NaN NaN

2020-10-16 -0.484684 -0.161561 1.612340 0.537447

2020-10-17 0.455043 0.151681 1.675417 0.558472

2020-10-18 -0.561716 -0.187239 2.358524 0.786175

2020-10-19 0.493735 0.164578 0.866026 0.288675

2020-10-20 1.195249 0.398416 0.118986 0.039662

2020-10-21 0.682124 0.227375 -0.900358 -0.300119

2020-10-22 1.054717 0.351572 -0.573354 -0.191118

2020-10-23 -0.278837 -0.092946 -0.291179 -0.097060

A B

2020-10-14 NaN NaN

2020-10-15 NaN NaN

2020-10-16 -0.161561 1.612340

2020-10-17 0.151681 1.675417

2020-10-18 -0.187239 2.358524

2020-10-19 0.164578 0.866026

2020-10-20 0.398416 0.118986

2020-10-21 0.227375 -0.900358

2020-10-22 0.351572 -0.573354

2020-10-23 -0.092946 -0.291179

13-Pandas GroupBy

在许多情况下,我们将数据分成多个集合,并在每个子集上应用一些函数。在应用函数中,可以执行以下操作:

- 聚合

- 转换 - 执行一些特定于组的操作

- 过滤

import pandas as pd

ipl_data = {

'Team': ['Riders', 'Riders', 'Devils', 'Devils', 'Kings', 'Kings', 'Kings', 'Kings', 'Riders', 'Royals', 'Royals', 'Riders'],

'Rank': [1,2,2,3,3,4,1,1,2,4,1,2],

'Year': [2014, 2015, 2014, 2015, 2014, 2015, 2016, 2017, 2016, 2014, 2015, 2017],

'Points': [876, 798, 863, 673, 741, 812, 756, 788, 694, 701, 804, 690]

}

df = pd.DataFrame(ipl_data)

print(df)

#查看分组

print(df.groupby('Team').groups)

#按多列分组

print(df.groupby(['Team', 'Year']).groups)

#迭代遍历分组

grouped = df.groupby('Year')

for name, group in grouped:

print(name)

print(group)

输出结果:

Team Rank Year Points

0 Riders 1 2014 876

1 Riders 2 2015 798

2 Devils 2 2014 863

3 Devils 3 2015 673

4 Kings 3 2014 741

5 Kings 4 2015 812

6 Kings 1 2016 756

7 Kings 1 2017 788

8 Riders 2 2016 694

9 Royals 4 2014 701

10 Royals 1 2015 804

11 Riders 2 2017 690

{'Devils': [2, 3], 'Kings': [4, 5, 6, 7], 'Riders': [0, 1, 8, 11], 'Royals': [9, 10]}

{('Devils', 2014): [2], ('Devils', 2015): [3], ('Kings', 2014): [4], ('Kings', 2015): [5], ('Kings', 2016): [6], ('Kings', 2017): [7], ('Riders', 2014): [0], ('Riders', 2015): [1], ('Riders', 2016): [8], ('Riders', 2017): [11], ('Royals', 2014): [9], ('Royals', 2015): [10]}

#迭代遍历分组

2014

Team Rank Year Points

0 Riders 1 2014 876

2 Devils 2 2014 863

4 Kings 3 2014 741

9 Royals 4 2014 701

2015

Team Rank Year Points

1 Riders 2 2015 798

3 Devils 3 2015 673

5 Kings 4 2015 812

10 Royals 1 2015 804

2016

Team Rank Year Points

6 Kings 1 2016 756

8 Riders 2 2016 694

2017

Team Rank Year Points

7 Kings 1 2017 788

11 Riders 2 2017 690

聚合、转换、过滤

#聚合

print(df.groupby('Team')['Points'].agg([np.sum, np.mean, np.std]))

#转换

grouped = df.groupby('Team')

score = lambda x: (x - x.mean())/x.std()*10

print(grouped.apply(lambda x: (x - x.mean())/x.std()*10))

print(grouped.transform(score))

输出结果:

#聚合

sum mean std

Team

Devils 1536 768.00 134.350288

Kings 3097 774.25 31.899582

Riders 3058 764.50 89.582364

Royals 1505 752.50 72.831998

#转换

Points Rank Team Year

0 12.446646 -15.000000 NaN -11.618950

1 3.739575 5.000000 NaN -3.872983

2 7.071068 -7.071068 NaN -7.071068

3 -7.071068 7.071068 NaN 7.071068

4 -10.423334 5.000000 NaN -11.618950

5 11.834011 11.666667 NaN -3.872983

6 -5.721078 -8.333333 NaN 3.872983

7 4.310401 -8.333333 NaN 11.618950

8 -7.869853 5.000000 NaN 3.872983

9 -7.071068 7.071068 NaN -7.071068

10 7.071068 -7.071068 NaN 7.071068

11 -8.316369 5.000000 NaN 11.618950

Rank Year Points

0 -15.000000 -11.618950 12.446646

1 5.000000 -3.872983 3.739575

2 -7.071068 -7.071068 7.071068

3 7.071068 7.071068 -7.071068

4 5.000000 -11.618950 -10.423334

5 11.666667 -3.872983 11.834011

6 -8.333333 3.872983 -5.721078

7 -8.333333 11.618950 4.310401

8 5.000000 3.872983 -7.869853

9 7.071068 -7.071068 -7.071068

10 -7.071068 7.071068 7.071068

11 5.000000 11.618950 -8.316369

14-Pandas合并/连接

- .merge()

DataFrame.merge(right, how=‘inner’, on=None, left_on=None, right_on=None, left_index=False, right_index=False, sort=False, suffixes=’_x’,’_y’, copy=True, indicator=False, validate=None)

Merge DataFrame or named Series objects with a database-style join.

right: Object to merge with

how: {‘left’, ‘right’, ‘outer’, ‘inner’}, default inner

on: Column or index level names to join on.

left_on: Column or index level names to join on in the left DataFrame.

right_on: Column or index level names to join on in the right DataFrame.

left_index: Use the index from the left DataFrame as the join keys;

right_index: Use the index from the right DataFrame as the join key.

sort: Sort the join keys lexicographically in the result DataFrame.

suffixes: A length-2 sequesnce where each element is optionally a string indicating the suffix to add to overlapping column names in left and right respectively.

copy: If False, avoid copy if possible

indicator: If True, adds a column to the output DataFrame called “_merge” with information on the source of each row. The column will have a Categorical type with the value of “left_only” for ovservations whose merge key only appears in the left DataFrame, “right_only” for observations whose merge key only appear in the right DataFrame, and “both” if the observation’s merge key is found in both DataFrames.

validate: If specified, checks if merge is of specified type.

- “one_to_one” or “1:1”: check if merge keys are unique in both left and right datasets.

“one_to_many” or “1:m”: check if merge keys are unique in left dataset.

“many_to_one” or “m:1”: check if merge keys are unique in right dataset.

“many_to_many” or “m:m”: allowed, but does not result in checks.

- concat()

pandas.concat()

Concatenate pandas objects along a particular axis with optional set logic along the other axes.

objs: a sequence or mapping of Series or DataFrame objects

- If a mapping is passed, the sorted keys will be used as the keys argument, unless it is passed, in which case the values will be selected. Any None objects will be dropped silently unless they are all None in which case a ValueError will be raised.

axis

join: “inner” or “outer”, default “outer”

ignore_index: If True, the resulting axis wil be labeled 0,…,n-1

keys: if multiple levels passed, should contain tuples. Construct hierarchical index using the passed keys as the outermost level.

levels: Specific levels to use for constructing a MultiIndex.

names: Names for the levels in the resulting hierarchical index.

verify_integrity: check whether the new concatenated axis contains duplicates.

copy: If False, do not copy data unnecessarily

连接的一个有用的快捷方式是在Series和DataFrame实例的append方法,相当于concat按axis=0连接。例如:

df_new = df1.append([df2, df3, df4])

15-Pandas处理时间

15-1 处理时间序列

- datetime.now()

- Timestamp()

- date_range()

- bdate_range()

- to_datetime()

| Expression | 功能 | 备注 |

|---|---|---|

| pd.datetime.now() | 获取当前的日期和时间 | |

| pd.Timestamp(‘2020-10-19’) | 创建一个时间戳 | |

| pd.Timestamp(1588686880, unit=‘s’) | 利用标准时间创建时间戳 | 可以选择时间单位 |

| time = pd.date_range(“12:00”,“23:59”,freq=“30min”).time | 创建一个时间范围 | 返回一个numpy数组 |

| pd.to_datetime(pd.Series) | 返回时间序列的Series | |

| pd.to_datetime([list]) | 返回DatetimeIndex对象 | |

| pd.bdate_range(‘2020/10/19’, periods=5) | 创建时间范围 | 用来表示商业日期范围,不包括星期六和星期天 |

- 偏移别名

| expression | account |

|---|---|

| B | 工作日频率 |

| D | 自然日频率 |

| W | 自然周频率 |

| M | 月结束频率 |

| Q | 季度结束频率 |

| SM | 半月 |

| H | 小时 |

| T, min | 分钟 |

| S | 秒 |

| L, ms | 毫秒 |

| N | 纳秒 |

15-2 时间差(Timedelta)

时间差(Timedelta)是时间上的差异,以不同的单位来表示。例如:日,小时,分钟,秒。它们可以是正值,也可以是负值。

可以使用各种参数创建Timedelta对象。

import pandas as pd

#使用字符串创建timedelta对象

timediff = pd.Timedelta('2 days 2 hours 15 minutes 30 seconds')

print(timediff)

#传递整数与指定单位来创建Timedelta对象

timediff = pd.Timedelta(6, unit='h')

print(timediff)

#周,天,小时,分钟,秒,毫秒,微秒,纳秒的数据偏移也可用于构建。

timediff = pd.Timedelta(days=2)

print(timediff)

运算操作:直接在时间戳上加/减时间差,得到新的时间戳

import pandas as pd

s = pd.Series(pd.date_range('2020-10-19', periods=3, freq='D'))

td = pd.Series([pd.Timedelta(days=i) for i in range(3)])

df = pd.DataFrame(dict(A = s, B=td))

df['C'] = df['A']+df['B']

print(df)

输出结果:

A B C

0 2020-10-19 0 days 2020-10-19

1 2020-10-20 1 days 2020-10-21

2 2020-10-21 2 days 2020-10-23

16-Pandas分类数据

分类是Pandas数据类型。分类变量只能采用有限的数量,而且通常是固定的数量。除了固定长度,分类数据可能有顺序,但不能执行数字操作。

分类数据类型在一下情况非常有用:

- 一个字符串变量,只包含几个不同的值。将这样的字符串变量转换为分类变量将会节省一些内存。

- 变量的词汇顺序与逻辑顺序不同(如one, two, three),通过转换为分类并指定类别上的顺序,排序和最小/最大将使用逻辑顺序。

- 作为其他python库的一个信号,这个列应该被当做一个分类变量(例如,使用合适的统计方法或plot类型)

# 对象创建

# 方法1:指定dtype="category"

import pandas as pd

s = pd.Series(["a","b","c","a"], dtype="category")

print(s)

# 方法2:使用标准Pandas分类构造函数

# pandas.Categorical(values, categories, ordered)

cat = pd.Categorical(['a','b','c','a','c'])

print(cat)

# 添加categories参数并排序

cat2 = pd.Categorical(['a','b','c','a','c'], ['c','b','a'], ordered=True)

print(cat2)

df = pd.DataFrame({

'col1': cat2,

'col2': pd.date_range(start='2020-10-19',periods=5)

})

print(df)

df_sorted=df.sort_values(['col1','col2'],ignore_index=True)

print(df_sorted)

输出结果:

0 a

1 b

2 c

3 a

dtype: category

Categories (3, object): ['a', 'b', 'c']

['a', 'b', 'c', 'a', 'c']

Categories (3, object): ['a', 'b', 'c']

['a', 'b', 'c', 'a', 'c']

Categories (3, object): ['c' < 'b' < 'a']

col1 col2

0 a 2020-10-19

1 b 2020-10-20

2 c 2020-10-21

3 a 2020-10-22

4 c 2020-10-23

col1 col2

0 c 2020-10-21

1 c 2020-10-23

2 b 2020-10-20

3 a 2020-10-19

4 a 2020-10-22

category变量上的其他操作

| expression | description |

|---|---|

| .describe() | 数据描述 |

| obj.cat.categories | 获取类别属性 |

| obj.ordered | 获取对象的顺序 |

| obj.cat.categories=[] | 通过将新值分配给series.cat.categories属性来重命名类别 |

| obj.cat.add_categories([]) | 追加新的类别 |

| obj.cat.remove_categories([]) | 删除类别 |

17-Pandas可视化

基本绘图

Series和DataFrame上的绘图功能使用matplotlib库的plot()方法。

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

df = pd.DataFrame(np.random.randn(10,4), index=pd.date_range('2020-10-20', periods=10), columns=list('ABCD'))

df.plot()

plt.show()

输出结果:

绘图方法允许除默认线图之外的少数绘图样式,这些方法可以作为plot()的kind关键字参数提供。这些包括:

- bar或barh - 条形图/水平条形图

- hist - 直方图

- boxplot - 盒型图

- area - 面积图

- scatter - 散点图

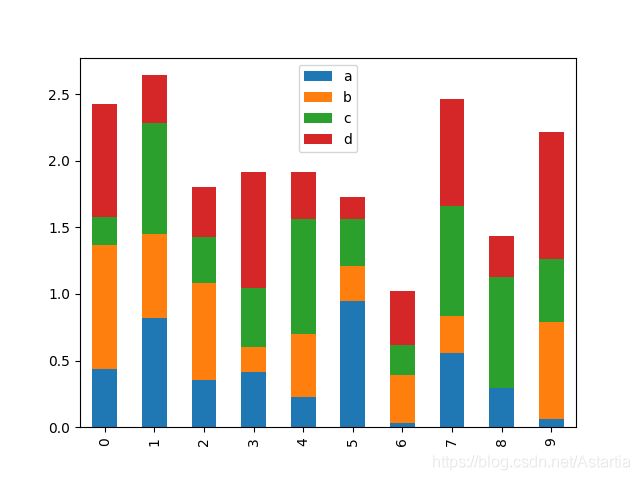

堆积条形图

- stacked=True

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

df = pd.DataFrame(np.random.rand(10,4), columns=['a','b','c','d'])

df.plot.bar(stacked=True)

plt.show()

输出结果:

水平条形图

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

df = pd.DataFrame(np.random.rand(10,4), columns=['a','b','c','d'])

df.plot.barh(stacked=True)

plt.show()

直方图

使用plot.hist()方法绘制直方图,可以指定bins的数量值。

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

df = pd.DataFrame({

'a': np.random.randn(1000)+1,

'b': np.random.randn(1000),

'c': np.random.randn(1000)-1}

)

df.plot.hist(bins=20)

plt.show()

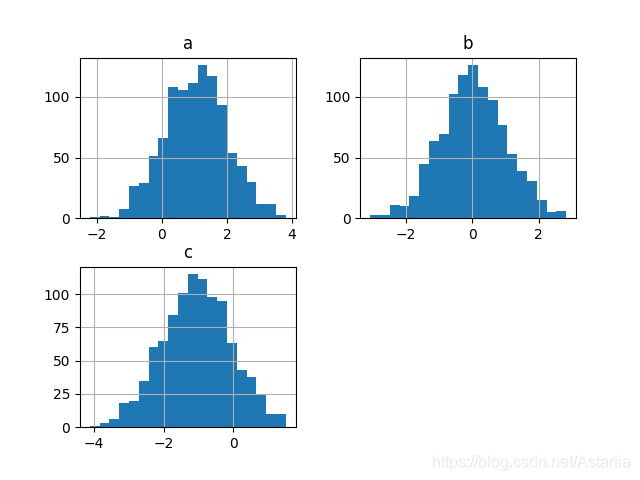

绘制不同系列的直方图

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

df = pd.DataFrame({

'a': np.random.randn(1000)+1,

'b': np.random.randn(1000),

'c': np.random.randn(1000)-1}

)

df.hist(bins=20)

plt.show()

输出结果:

箱图

Boxplot可以绘制调用Series.box.plot()和DataFrame.box.plot()或DataFrame.boxplot()来可视化每列中值的分布。

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

df = pd.DataFrame(np.random.randn(10,5), columns=list('ABCDE'))

print(df)

df.plot.box()

plt.show()

输出结果:

区域图

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

df = pd.DataFrame(np.abs(np.random.randn(10,4)), columns=list('ABCD'))

print(df)

df.plot.area()

plt.show()

输出结果:

散点图

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

df = pd.DataFrame(np.random.rand(50,4), columns=list('abcd'))

print(df)

df.plot.scatter(x='a', y='b')

plt.show()

输出结果:

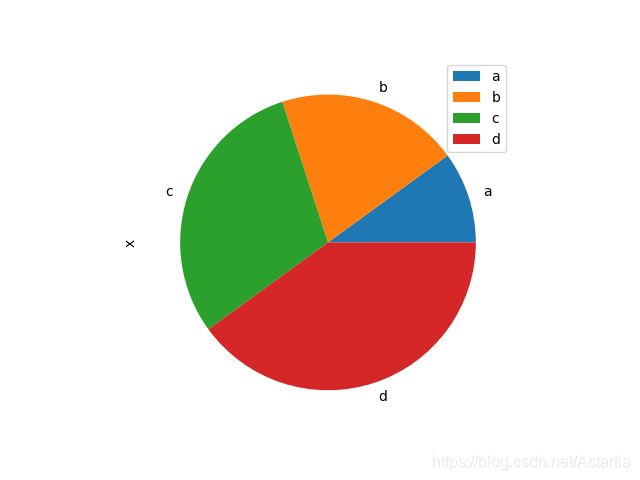

Pie Chart

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

df = pd.DataFrame(pd.Series([5,10,15,20],index=list('abcd')), columns=['x'])

print(df)

df.plot.pie(subplots=True)

plt.show()

输出结果:

18-Pandas I/O工具

Pandas I/O API是一套像pg.read_csv()一样返回Pandas对象的顶级读取器函数。

读取文本文件(或平面文件)的两个主要功能是read_csv()和read_table()。他们都使用相同的解析代码来智能地将表格数据转换为DataFrame对象。

形式1:

pandas.read_csv(filepath_or_buffer, sep=’,’, delimiter=None, header=‘infer’, names=None, index_col=None, usecols=None)

形式2:

pandas.read_csv(filepath_or_buffer, sep=’\t’, delimiter=None, header=‘infer’, names=None, index_col=None, usecols=None)

- 基础操作

import pandas as pd

import numpy as np

# 基础操作

df = pd.read_csv('temp.csv')

print(df)

print(df.dtypes)

输出:

S.No Name Age City Salary

0 1 Tom 28 Toronto 20000

1 2 Lee 32 HongKong 3000

2 3 Steven 43 Bay Area 8300

3 4 Ram 38 Hyderabad 3900

S.No int64

Name object

Age int64

City object

Salary int64

dtype: object

- 通过index_col参数(传递列表)指定索引列

# 通过index_col参数(传递列表)指定索引列

df = pd.read_csv('temp.csv', sep=',', index_col=['S.No'])

print(df)

输出:

Name Age City Salary

S.No

1 Tom 28 Toronto 20000

2 Lee 32 HongKong 3000

3 Steven 43 Bay Area 8300

4 Ram 38 Hyderabad 3900

- 通过dtype参数(传递字典)转换数据类型

# 通过dtype参数(传递字典)转换数据类型

df = pd.read_csv('temp.csv', dtype={'Salary': np.float64})

print(df.dtypes)

输出:

S.No int64

Name object

Age int64

City object

Salary float64

dtype: object

- 通过skiprows参数跳过读取的行数

# 通过skiprows参数跳过读取的行数

df = pd.read_csv('temp.csv', skiprows=1,names=list('abcde'))

print(df)

输出:

a b c d e

0 1 Tom 28 Toronto 20000

1 2 Lee 32 HongKong 3000

2 3 Steven 43 Bay Area 8300

3 4 Ram 38 Hyderabad 3900

功能是read_csv()和read_table()。他们都使用相同的解析代码来智能地将表格数据转换为DataFrame对象。

> 形式1:

> *pandas*.**read_csv**(*filepath_or_buffer*, *sep*=',', *delimiter*=None, *header*='infer', *names*=None, *index_col*=None, *usecols*=None)

> 形式2:

> *pandas*.**read_csv**(*filepath_or_buffer*, *sep*='\t', *delimiter*=None, *header*='infer', *names*=None, *index_col*=None, *usecols*=None)

1. 基础操作

```python

import pandas as pd

import numpy as np

# 基础操作

df = pd.read_csv('temp.csv')

print(df)

print(df.dtypes)

输出:

S.No Name Age City Salary

0 1 Tom 28 Toronto 20000

1 2 Lee 32 HongKong 3000

2 3 Steven 43 Bay Area 8300

3 4 Ram 38 Hyderabad 3900

S.No int64

Name object

Age int64

City object

Salary int64

dtype: object

- 通过index_col参数(传递列表)指定索引列

# 通过index_col参数(传递列表)指定索引列

df = pd.read_csv('temp.csv', sep=',', index_col=['S.No'])

print(df)

输出:

Name Age City Salary

S.No

1 Tom 28 Toronto 20000

2 Lee 32 HongKong 3000

3 Steven 43 Bay Area 8300

4 Ram 38 Hyderabad 3900

- 通过dtype参数(传递字典)转换数据类型

# 通过dtype参数(传递字典)转换数据类型

df = pd.read_csv('temp.csv', dtype={'Salary': np.float64})

print(df.dtypes)

输出:

S.No int64

Name object

Age int64

City object

Salary float64

dtype: object

- 通过skiprows参数跳过读取的行数

# 通过skiprows参数跳过读取的行数

df = pd.read_csv('temp.csv', skiprows=1,names=list('abcde'))

print(df)

输出:

a b c d e

0 1 Tom 28 Toronto 20000

1 2 Lee 32 HongKong 3000

2 3 Steven 43 Bay Area 8300

3 4 Ram 38 Hyderabad 3900

Source:易百教程