数据迁移 mongo → mysql

文章目录

- 过程分析

-

- mongo 百万数据全部查询

-

- _id

- 排序查询

- 错误分页查询

- mongo 批量插入

- 找出两个集合不同元素

-

- 法1

- 法2

- mysql

-

- 数据表

-

- 测试

- mysql mybatis 批量插入

-

- foreach

-

- 测试

- 连接串参数 rewriteBatchedStatements=true

- mp

- The total number of locks exceeds the lock table size

- delete删除

- 其他插入方式

-

- 存储过程插入大批量数据

- 代码分析

-

- 纯数据转移

- 数据检查

过程分析

数据量是百万级别,需要考虑迁移速度和用时

一个一个插入太慢,要批量插入

其他方法

https://www.cnblogs.com/kagol/p/5946525.html

mongo 百万数据全部查询

百万数据不能一次性查询、获取

mongodb 自带的 skip() 方法,跳跃查询导致虽然做了分页,但大数据环境下还是慢:

query.skip((pageNum - 1) * pageSize);

query.limit(pageSize);

前30万条数据,1秒9000条数据,后来1秒1000条数据,

越到后面越慢!

官方文档也不推荐skip,skip会扫描全部文档,然后再返回结果。

The cursor.skip() method requires the server to scan from the beginning of the input results set before beginning to return results. As the offset increases, cursor.skip() will become slower.

优化:

https://blog.csdn.net/weixin_42267411/article/details/127412376

Criteria criteria = new Criteria();

Query query;

for (long i = 0; i < page; i++) {

query = new Query(criteria).limit(PAGE_LIMIT);

List<BookmarkEntity> mongoList1 =

mongoTemplate.find(query, BookmarkEntity.class);

String id = mongoList1.get(mongoList1.size() - 1).getId();

criteria = Criteria.where("_id").gt(new ObjectId(id));

}

gt > _id > value

lt < _id < value

gte >= _id >= value

lte <= _id <= value

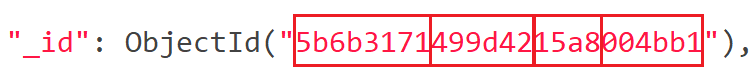

where("_id").lt("xxx")将查询为空 = 失败:

https://devpress.csdn.net/mongodb/63047f5ac67703293080cc2d.html

解决办法:where("_id").lt(new ObjectId("xxx"))

https://cloud.tencent.com/developer/article/1172517

//Page 1

db.users.find().limit(pageSize);

//Find the id of the last document in this page

last_id = ...

//Page 2

users = db.users.find({

'_id' :{ "$gt":ObjectId("5b16c194666cd10add402c87")}

}).limit(10)

//Update the last id with the id of the last document in this page

last_id = ...

mongo 查询 + 记录表(数据插入 写入)

优化前插入236.4万数据,用时33分钟

优化后插入240万数据,用时 144秒

2023-07-21 09:46:19.002 INFO 51696 --- [ main] com.example.controller.Mycontroller : 开始转移 1000 个数据量 - 0

2023-07-21 09:46:19.111 INFO 51696 --- [ main] com.example.controller.Mycontroller : 开始转移 1000 个数据量 - 1

。。。

2023-07-21 09:48:43.217 INFO 51696 --- [ main] com.example.controller.Mycontroller : 本次迁移完毕,已转移数据量:2400000

2023-07-21 09:48:43.217 INFO 51696 --- [ main] com.example.controller.Mycontroller : 数据完全转移成功

_id

https://www.navicat.com.cn/company/aboutus/blog/274-%E5%85%B3%E4%BA%8E-mongodb-%E7%9A%84-_id-%E5%AD%97%E6%AE%B5

_id 是集合中文档的主键,索引

ObjectID 长度为 12 字节:

一个 4 字节的值,表示自 Unix 纪元以来的秒数

一个 3 字节的机器标识符

一个 2 字节的进程 ID

一个 3 字节的计数器,以随机值开始

_id(ObjectId)是由MongoDB自动生成的唯一标识符

_id的生成不是严格按照升序进行,但我们可以通过对_id字段进行排序来按照升序或降序获取文档

排序查询

Sort.Order.asc是升序 .desc降序

Query query = new Query();

Criteria criteria = Criteria.where("key").is("value");

query.addCriteria(criteria);

//先注入MongoTemplate

query.with(Sort.by(Sort.Order.asc("batch"))); //查询结果按照batch字段升序

List<Client> clients = mongoTemplate.find(query, Client.class, "client_info");

错误分页查询

根本行不通的:

Aggregation.newAggregation(

// 关联member表

Aggregation.lookup(

"transfer", // 从表 表名

"bookmark_id", // 如bookmarks被查询主表的bookmark_id

"_id", // 如transfer从表的主键_id

"flag" // 联合查询出的别名,用于多条件查询表明前缀,相当于SQL中的临时表名

),

Aggregation.unwind("transfer", true),

// 查询条件

Aggregation.match(

Criteria.where("flag.id").ne(null) // 若没有这个条件,那后面就要跳转(下一行代码) ne不等于

),

Aggregation.match(

Criteria.where("_id").gt(new ObjectId("xxx")) // 若没有这个条件,那后面就要跳转(下一行代码) ne不等于

),

// 分页:每次只查 PAGE_LIMIT 条数据

Aggregation.limit(PAGE_LIMIT));

mongo 批量插入

mongoTemplate.insert(list,Flag.class);

mongoTemplate.insertAll(list);

两者效率一样

@Data

@AllArgsConstructor

@NoArgsConstructor

@ToString

@Document(collection = "transfer")

public class Flag {

private String id;

private String flag;

private List<BookmarkEntityCopy> list;

}

insert VS save

插入重复数据

insert: 若新增数据的主键已经存在,则抛 org.springframework.dao.DuplicateKeyException 异常提示主键重复,不保存当前数据

save: 若新增数据的主键已经存在,则会对当前已经存在的数据进行修改操作

批操作

insert: 可以一次性插入一整个列表,而不用进行遍历操作,效率相对较高

save: 需要遍历列表,进行一个个的插入

找出两个集合不同元素

法1

https://developer.aliyun.com/article/999073#slide-6

/**

* 找出两个集合中不同的元素

*

* @param collmax

* @param collmin

* @return

*/

public static Collection getDifferListByMapPlus(Collection collmax, Collection collmin) {

//使用LinkedList防止差异过大时,元素拷贝

Collection csReturn = new LinkedList();

Collection max = collmax;

Collection min = collmin;

long beginTime = System.currentTimeMillis();

//先比较大小,这样会减少后续map的if判断次数

if (collmax.size() < collmin.size()) {

max = collmin;

min = collmax;

}

//直接指定大小,防止再散列

Map<Object, Integer> map = new HashMap<Object, Integer>(max.size());

for (Object object : max) {

map.put(object, 1);

}

for (Object object : min) {

if (map.get(object) == null) {

csReturn.add(object);

} else {

map.put(object, 2);

}

}

for (Map.Entry<Object, Integer> entry : map.entrySet()) {

if (entry.getValue() == 1) {

csReturn.add(entry.getKey());

}

}

long endTime = System.currentTimeMillis();

System.out.println("集合A和集合B不同的元素:"+csReturn);

System.out.println("使用map方式遍历, 对比耗时: " + (endTime - beginTime)+"毫秒。");

return csReturn;

}

法2

本来我用的是这个办法:

百万数据,对应 原表和记录表

一千一千的查,一个一个对比,似乎不如法1

但mongo数据得分页查询,不能一次性得到全部数据

我的办法反而有优势

// 分页查询

Criteria criteria1 = Criteria.where("_id").ne(null); // ne 是 !=

Query query1 = new Query(criteria1);

long amount = mongoTemplate.count(query1, "transfer");

int PAGE_LIMIT = 1000;

int page = (int) amount / PAGE_LIMIT;

if (amount % PAGE_LIMIT > 0) {

// 余数不为0时,要加1

page += 1;

}

Criteria criteriaT = new Criteria();

Criteria criteriaB = new Criteria();

Query queryT;

Query queryB;

String idT = "0";

String idB = "0";

int indexT = 0;

int indexB = 0;

int count = 0;

List<Flag> mongoListT = null;

for (int i = 0; i < page; i++) {

queryT = new Query(criteriaT).limit(PAGE_LIMIT);

mongoListT = mongoTemplate.find(queryT, Flag.class);

queryB = new Query(criteriaB).limit(PAGE_LIMIT);

List<BookmarkEntity> mongoListB = mongoTemplate.find(queryT, BookmarkEntity.class);

List<Flag> result = new ArrayList<>();

indexT = 0;

indexB = 0;

for (indexT = 0; indexT < PAGE_LIMIT && indexT < mongoListT.size(); indexT++) {

if (!mongoListT.get(indexT).getId().equals(mongoListB.get(indexB).getId())) {

Criteria criteria = Criteria.where("_id").is(new ObjectId(mongoListT.get(indexT).getId()));

Query query = new Query(criteria);

mongoTemplate.remove(query, "transfer");

Map map = new HashMap();

map.put("id", mongoListT.get(indexT).getBookmarkId());

repository.delete(map);

// sqlSession.commit();

count++;

} else {

indexB++;

}

}

idT = mongoListT.get(indexT - 1).getId();

criteriaT = Criteria.where("_id").gt(new ObjectId(idT));

idB = mongoListT.get(indexB - 1).getId();

criteriaB = Criteria.where("_id").gt(new ObjectId(idB));

LOGGER.info("已清理 " + count + " 个数据 - " + i + " / " + page);

sqlSession.commit();

}

mysql

数据表

原来数据表有自己的主键,还不是自增的

当数据表很大时(10万条数据),再插入数据时,寻找插入位置就很耗时了

再增加一个自增的主键id

删除主键、删除索引

删除主键索引

ALTER TABLE 表名 DROP PRIMARY KEY

删除索引

drop index 索引名 on 表名;

删除 自增长的主键索引:

1 重新定义列类型,去除自增属性

alter table 表名 modify id int ;

2 删除主键

alter table 表名 drop primary key;

添加自增主键,该列(该属性)不存在

alter table 表名 add 属性名 INT primary key AUTO_INCREMENT after 已有属性名;

添加自增主属性,该列已存在

ALTER TABLE 表名 MODIFY 属性名 INT AUTO_INCREMENT;

删除主键有两种情况:

1、如果主键不带自增属性,则可以直接使用drop来删除主键;

2、如果主键带自增属性,则要先将这个列的自动增长属性去掉,才可以删除主键

先插入数据再建索引 vs 先建索引再插入数据

https://blog.csdn.net/weixin_42056745/article/details/101693848

表记录越大,索引个数越多,速度越慢

某表记录有1亿条左右,12个索引

删除全部索引的插入速度 > 保留这12个索引的,插入速度百倍。

原理很简单,边插入边维护索引,开销太大了

测试

240万数据

有主键和索引,17分12秒

仅有主键(非自增),14分45秒

仅有主键(自增),8分15秒

PRIMARY KEY (`bookmarkId`) USING BTREE,

KEY `bookmarks_02` (`sourceTenantCode`) USING BTREE,

KEY `bookmarks_03` (`resType`) USING BTREE,

KEY `bookmarks_04` (`w3Account`) USING BTREE

有主键和索引

023-07-24 09:20:51.866 INFO 34592 --- [ main] com.example.controller.Mycontroller : dataCount = 1

...

2023-07-24 09:38:03.169 INFO 34592 --- [ main] com.example.controller.Mycontroller : dataCount = 2400

仅有主键(非自增)

2023-07-24 10:08:57.143 INFO 34592 --- [ main] com.example.controller.Mycontroller : dataCount = 1

...

2023-07-24 10:23:42.066 INFO 34592 --- [ main] com.example.controller.Mycontroller : dataCount = 2400

仅有主键(自增)

2023-07-24 10:37:36.941 INFO 34592 --- [ main] com.example.controller.Mycontroller : dataCount = 1

...

2023-07-24 10:45:52.961 INFO 34592 --- [ main] com.example.controller.Mycontroller : dataCount = 2400

mysql mybatis 批量插入

foreach

https://blog.csdn.net/weixin_44117767/article/details/105910170

List<BookmarkEntity> result = new ArrayList<>();

for (BookmarkEntity bookmarkEntity : mongoList1) {

result.add(bookmarkEntity);

}

repository.save(result);

public int save(@Param("list") List<BookmarkEntity> entityMysql);

<insert id="save" parameterType="java.util.List">

insert into bookmarks

(bookmarkId,resKey,title,titleIcon,`desc`,`from`,fromIcon,sourceTenantCode,

sourceModuleCode,sourceAppName,resType,pcUrl,mUrl,welinkUrl,nativeUrl,w3Account,

content,callbackContent,osType,paramsPrivate,createTime,runCount,callBackStatus,

resSupportVersion,updateDate,operaType,extraParm,clientVersion,status,dataSource,

createTimeStr,updateDateStr,oneboxFileType,deleteFlag)

values

<foreach collection ="list" item="bookmarkEntity" separator = ",">

(#{bookmarkEntity.bookmark_id},#{bookmarkEntity.resKey},#{bookmarkEntity.title},#{bookmarkEntity.title_icon},#{bookmarkEntity.desc},#{bookmarkEntity.from},#{bookmarkEntity.from_icon},null,

#{bookmarkEntity.source_moduleCode},#{bookmarkEntity.source_appname},#{bookmarkEntity.resType},#{bookmarkEntity.pcUrl},#{bookmarkEntity.mUrl},#{bookmarkEntity.weLinkUrl},null,#{bookmarkEntity.w3Account},

#{bookmarkEntity.content},#{bookmarkEntity.callback_content},null,null,#{bookmarkEntity.createTime},null,null,

#{bookmarkEntity.res_support_version},#{bookmarkEntity.updateDate},null,null,null,#{bookmarkEntity.status},null,

null,null,#{bookmarkEntity.oneboxFileType},'N')

</foreach >

</insert>

不推荐下面的写法

<foreach collection="list" item="item" index="index" separator=";">

insert into user(name,age) values(#{item.name},#{item.age})

</foreach>

1 每批次可插入的数量减少

2 这种方式执行返回值还是(0、1),是已经尝试插入的最后一条数据是否成功。这种foreach 拼接成的sql语句以分号“;”分隔的多条insert语句。导致前面的数据项都插入成功了。

(默认数据库的事务处理是单条提交的,出错前的执行都是一个个单条语句,所以并并没有回滚数据。)

测试

https://developer.aliyun.com/article/1009263

http://www.imooc.com/article/291781

https://blog.csdn.net/yangxiao_hui/article/details/103262704

连接长度values,效率比较

100 - 11min 43s

200 - 10min 3s

300 - 9min 22s

500 - 8min 53s

1000 - 8min 35s

2000 - 8min 27s

3000 - 8min 36s

5000 - 8min 19s

100

2023-07-24 10:55:46.170 INFO 37340 --- [ main] com.example.controller.Mycontroller : dataCount = 1

...

2023-07-24 11:07:29.916 INFO 37340 --- [ main] com.example.controller.Mycontroller : dataCount = 24000

200

2023-07-24 11:21:07.220 INFO 28312 --- [ main] com.example.controller.Mycontroller : dataCount = 1 / 12000

...

2023-07-24 11:31:10.271 INFO 28312 --- [ main] com.example.controller.Mycontroller : dataCount = 12000 / 12000

300

2023-07-24 11:32:54.989 INFO 28312 --- [ main] com.example.controller.Mycontroller : dataCount = 1 / 8000

...

2023-07-24 11:42:16.303 INFO 28312 --- [ main] com.example.controller.Mycontroller : dataCount = 8000 / 8000

500

2023-07-24 11:44:32.770 INFO 28312 --- [ main] com.example.controller.Mycontroller : dataCount = 1 / 4800

...

2023-07-24 11:53:25.392 INFO 28312 --- [ main] com.example.controller.Mycontroller : dataCount = 4800 / 4800

1000

2023-07-24 12:25:39.500 INFO 28312 --- [ main] com.example.controller.Mycontroller : dataCount = 1 / 2400

...

2023-07-24 12:34:04.108 INFO 28312 --- [ main] com.example.controller.Mycontroller : dataCount = 2400 / 2400

2000

2023-07-24 13:56:58.899 INFO 28312 --- [ main] com.example.controller.Mycontroller : dataCount = 1 / 1200

...

2023-07-24 14:05:24.494 INFO 28312 --- [ main] com.example.controller.Mycontroller : dataCount = 1200 / 1200

3000

2023-07-24 14:27:38.951 INFO 43688 --- [ main] com.example.controller.Mycontroller : dataCount = 1 / 800

...

2023-07-24 14:36:14.054 INFO 43688 --- [ main] com.example.controller.Mycontroller : dataCount = 800 / 800

5000

2023-07-24 14:43:49.472 INFO 43688 --- [ main] com.example.controller.Mycontroller : dataCount = 1 / 480

...

2023-07-24 14:52:08.735 INFO 43688 --- [ main] com.example.controller.Mycontroller : dataCount = 480 / 480

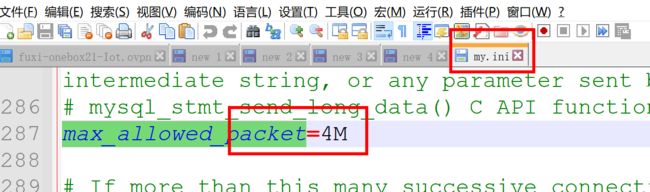

3000长度时报错:

java.sql.BatchUpdateException: Packet for query is too large (4,623,464 > 4,194,304). You can change this value on the server by setting the ‘max_allowed_packet’ variable

4MB = 4*1024KB = 4,194,304B

max_allowed_packet mysql5.7的客户端默认是16M,服务端默认是4M

我改成100M,然后重启mysql服务

连接串参数 rewriteBatchedStatements=true

在我这不影响性能

https://blog.csdn.net/chuangxin/article/details/83447387

https://www.bmabk.com/index.php/post/127993.html

mp

在我这不影响性能

https://cloud.tencent.com/developer/article/1888620

The total number of locks exceeds the lock table size

锁的总数超过锁表大小

同一时刻有太多的锁被占用,导致锁表大小超出 MySQL 设置的最大值

http://tunzao.me/articles/the-total-number-of-locks-exceeds-the-lock-table-size/

https://blog.csdn.net/weixin_42096792/article/details/118146269

innodb_buffer_pool_size默认8M,用来指定InnodDB缓存表的数据和索引使用的内存大小

越大磁盘交互就会越少,性能也会越好

delete删除

删除条件不是主键 + 数据百万,删除会很慢

alter TABLE bookmarks MODIFY `id` int(11) NOT NULL;

Alter table bookmarks drop primary key;

alter TABLE bookmarks MODIFY `bookmarkId` varchar(64) NOT NULL COMMENT '主键ID' PRIMARY KEY;

其他插入方式

https://xie.infoq.cn/article/c4dbd1c02192797350e3f6346

这个确实最快

仅主键(非自增)

2023-07-24 19:44:51.111 INFO 41072 --- [ main] com.example.controller.JavaJbdc : 成功插入数据耗时:20 - 0 / 24

...

2023-07-24 19:58:24.843 INFO 41072 --- [ main] com.example.controller.JavaJbdc : 成功插入数据耗时:44 - 23 / 24

2023-07-24 19:58:24.844 INFO 41072 --- [ main] com.example.controller.JavaJbdc : 插入数据共耗时:834秒

13min27s

仅主键(自增)

2023-07-24 20:28:29.594 INFO 30340 --- [ main] com.example.controller.JavaJbdc : 成功插入数据耗时:20 - 1 / 24

...

2023-07-24 20:36:23.555 INFO 30340 --- [ main] com.example.controller.JavaJbdc : 成功插入数据耗时:20 - 24 / 24

7min 54s

存储过程插入大批量数据

https://blog.csdn.net/ztchun/article/details/84894957

先删除主键,在

alter table bookmarks2 drop PRIMARY key;

delimiter //

create procedure batchInsert()

begin

declare num int;

set num=1;

while num<=2400000 do

insert into bookmarks2(`bookmarkId`,`resKey`) values('asdsad', '123456');

set num=num+1;

end while;

end

//

delimiter ; #恢复;表示结束

call batchInsert;

240万数据

无主键,有3个索引 22min 28s

无主键、无索引 22min 28s

自增主键,无索引 22min 52s

代码分析

纯数据转移

先获取我们要转移的数据量有多少

Criteria criteria1 = Criteria.where("_id").gt(new ObjectId(initialId)); // ne 是 !=

Query query1 = new Query(criteria1);

long amount = mongoTemplate.count(query1, BookmarkEntity.class);

分批次转移数据,每次1000数据

记录表插入记录数据 + mysql转移数据

public String findAndSave(String initialId) throws IOException {

SqlSession sqlSession = getSqlSession();

mysqlRepository repository = sqlSession.getMapper(mysqlRepository.class);

try {

LOGGER.info("Start transfer:");

// 分页查询

Criteria criteria1 = Criteria.where("_id").gt(new ObjectId(initialId)); // ne 是 !=

Query query1 = new Query(criteria1);

long amount = mongoTemplate.count(query1, BookmarkEntity.class);

int PAGE_LIMIT = 1000;

int page = (int) amount / PAGE_LIMIT;

if (amount % PAGE_LIMIT > 0) {

// 余数不为0时,要加1

page += 1;

}

Criteria criteria = Criteria.where("_id").gt(new ObjectId(initialId));

Query query;

String id = null;

for (long i = 0; i < page; i++) {

query = new Query(criteria).limit(PAGE_LIMIT);

query.with(Sort.by(Sort.Order.asc("id"))); // 升序排序

List<BookmarkEntity> mongoList1 = mongoTemplate.find(query, BookmarkEntity.class);

id = mongoList1.get(mongoList1.size() - 1).getId();

criteria = Criteria.where("_id").gt(new ObjectId(id));

List<BookmarkEntity> result = new ArrayList<>();

for (BookmarkEntity bookmarkEntity : mongoList1) {

result.add(bookmarkEntity);

}

LOGGER.info("开始转移 " + result.size() + " 个数据量" + " - " + i + " / " + page);

List<Flag> list = result.stream().map(temp -> {

Flag flag = new Flag();

flag.setId(temp.getId());

flag.setBookmarkId(temp.getBookmark_id());

flag.setFlag("Y");

return flag;

}).collect(Collectors.toList());

// mongoTemplate.insert(list,Flag.class);

mongoTemplate.insertAll(list);

repository.save(result);

sqlSession.commit();

}

} catch (Exception e) {

throw new RuntimeException(e);

} finally {

Criteria criteria = Criteria.where("_id").gt(new ObjectId(initialId)); // ne 是 !=

Query query = new Query(criteria);

long amountMongo = mongoTemplate.count(query, BookmarkEntity.class, "bookmarks");

long amountFlag = mongoTemplate.count(query, Flag.class, "transfer");

criteria = Criteria.where("_id").gte(new ObjectId(initialId));

query = new Query(criteria);

query.with(Sort.by(Sort.Order.desc("id")));

BookmarkEntity bookmarkEntity = mongoTemplate.findOne(query, BookmarkEntity.class, "bookmarks");

Flag flag = mongoTemplate.findOne(query, Flag.class, "transfer");

LOGGER.info("本次迁移完毕,已转移数据量:" + amountFlag);

if (amountMongo == amountFlag && bookmarkEntity.getId().equals(flag.getId())) {

LOGGER.info("数据完全迁移成功");

return "success";

} else {

return "continue";

}

}

}

数据检查

先获取我们要转移的数据量有多少

Criteria criteria1 = Criteria.where("_id").gt(new ObjectId(initialId)); // ne 是 !=

Query query1 = new Query(criteria1);

long amount = mongoTemplate.count(query1, BookmarkEntity.class);

分批次检查数据,每次1000数据

记录表删除记录数据 + mysql删除数据

mongo记录表对应的list每次能到最底

mongo数据表不一定到最底

idT = mongoListT.get(indexT - 1).getId();

criteriaT = Criteria.where("_id").gt(new ObjectId(idT));

idB = mongoListT.get(indexB - 1).getId();

criteriaB = Criteria.where("_id").gt(new ObjectId(idB));

public String findAndDelete() throws IOException {

SqlSession sqlSession = getSqlSession();

mysqlRepository repository = sqlSession.getMapper(mysqlRepository.class);

try {

LOGGER.info("Start check:");

// 分页查询

Criteria criteria1 = Criteria.where("_id").ne(null); // ne 是 !=

Query query1 = new Query(criteria1);

long amount = mongoTemplate.count(query1, "transfer");

int PAGE_LIMIT = 1000;

int page = (int) amount / PAGE_LIMIT;

if (amount % PAGE_LIMIT > 0) {

// 余数不为0时,要加1

page += 1;

}

Criteria criteriaT = new Criteria();

Criteria criteriaB = new Criteria();

Query queryT;

Query queryB;

String idT = "0";

String idB = "0";

int indexT = 0;

int indexB = 0;

int count = 0;

List<Flag> mongoListT = null;

for (int i = 0; i < page; i++) {

queryT = new Query(criteriaT).limit(PAGE_LIMIT);

mongoListT = mongoTemplate.find(queryT, Flag.class);

queryB = new Query(criteriaB).limit(PAGE_LIMIT);

List<BookmarkEntity> mongoListB = mongoTemplate.find(queryT, BookmarkEntity.class);

List<Flag> result = new ArrayList<>();

indexT = 0;

indexB = 0;

for (indexT = 0; indexT < PAGE_LIMIT && indexT < mongoListT.size(); indexT++) {

if (!mongoListT.get(indexT).getId().equals(mongoListB.get(indexB).getId())) {

Criteria criteria = Criteria.where("_id").is(new ObjectId(mongoListT.get(indexT).getId()));

Query query = new Query(criteria);

mongoTemplate.remove(query, "transfer");

Map map = new HashMap();

map.put("id", mongoListT.get(indexT).getBookmarkId());

repository.delete(map);

// sqlSession.commit();

count++;

} else {

indexB++;

}

}

idT = mongoListT.get(indexT - 1).getId();

criteriaT = Criteria.where("_id").gt(new ObjectId(idT));

idB = mongoListT.get(indexB - 1).getId();

criteriaB = Criteria.where("_id").gt(new ObjectId(idB));

LOGGER.info("已清理 " + count + " 个数据 - " + i + " / " + page);

sqlSession.commit();

}

// 处理 新增数据

LOGGER.info("处理新增数据");

while (!findAndSave(mongoListT.get(indexT - 1).getId()).equals("success")) {

}

} catch (Exception e) {

throw new RuntimeException(e);

} finally {

LOGGER.info("本次数据检查完毕");

Criteria criteria = new Criteria();// ne 是 !=

Query query = new Query(criteria);

long amountMongo = mongoTemplate.count(query, BookmarkEntity.class, "bookmarks");

long amountFlag = mongoTemplate.count(query, Flag.class, "transfer");

query = new Query(criteria);

query.with(Sort.by(Sort.Order.desc("id")));

BookmarkEntity bookmarkEntity = mongoTemplate.findOne(query, BookmarkEntity.class, "bookmarks");

Flag flag = mongoTemplate.findOne(query, Flag.class, "transfer");

if (amountMongo == amountFlag && bookmarkEntity.getId().equals(flag.getId())) {

LOGGER.info("数据完全迁移成功");

return "success";

} else {

return "continue";

}

}

}