Automated deployment of a single-master, multi-node k8s cluster using shell scripts.

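1. Preface

Our company's software all runs on a kubeadm + k8s + docker + rancher stack. Some projects require a highly available K8S cluster, while others only need a single master.

There are many projects, and nearly every customer has production, development, and test environments. To avoid repetitive work (and to be a properly lazy ops engineer), this post uses shell scripts to automate the deployment of a k8s cluster.

Before using the scripts, define the environment variables in the environment.sh file in the current directory; all key settings are changed through environment.sh.

Shell script repository: https://gitee.com/ws123w/kubeadm-single-master.git

Note: the network uses ipvs, so the system kernel must be upgraded to 4.4 or later!

Otherwise CoreDNS will fail to resolve names!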
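
The kernel requirement above can be checked up front, before any script is run. The following is a minimal sketch and not part of the original repository: the function name `kernel_at_least` is hypothetical, and it compares only the major and minor version numbers.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original scripts).
# Returns 0 if kernel version $1 is at least $2, comparing major.minor only.
kernel_at_least() {
  local have="${1%%-*}" want="$2"   # drop distro suffix such as "-1160.el7.x86_64"
  local have_major have_minor want_major want_minor
  IFS=. read -r have_major have_minor _ <<< "$have"
  IFS=. read -r want_major want_minor _ <<< "$want"
  have_minor="${have_minor:-0}"
  want_minor="${want_minor:-0}"
  (( have_major > want_major )) ||
    (( have_major == want_major && have_minor >= want_minor ))
}

# Check the running kernel against the 4.4 minimum stated above.
if kernel_at_least "$(uname -r)" "4.4"; then
  echo "kernel OK: $(uname -r)"
else
  echo "kernel older than 4.4: $(uname -r)"
fi
```

Running this on every node before deployment gives an early warning instead of a DNS failure later on.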

2. Script directory structure

Clone the project to the server; the directory structure is as follows:

[root@k8s-01 kubeadm-single-master]# ls -l

total 20

-rw-r--r--. 1 root root 2675 Feb 11 10:21 deploy-k8s-single.sh # deployment script; run it with bash ./deploy-k8s-single.sh

-rw-r--r--. 1 root root 2431 Feb 11 10:21 environment.sh # environment variable configuration file; the scripts read the master and node IP addresses from it

-rw-r--r--. 1 root root 1154 Feb 11 10:21 README.md

drwxr-xr-x. 2 root root 4096 Feb 11 10:21 scripts # script files

drwxr-xr-x. 2 root root 4096 Feb 11 10:21 soft # offline software packages

drwxr-xr-x. 2 root root 29 Feb 11 10:21 work # temporary working directory

3. Preparation

Note: three servers are used here for demonstration.

172.29.6.187 k8s-01 master

172.29.6.161 k8s-02 worker

172.29.6.164 k8s-03 worker

3.1. Change each server's hostname and set up hosts.

Change the hostname

[root@localhost kubeadm-single-master]# hostnamectl set-hostname k8s-01

Log out and log back in to confirm that the hostname has changed.

Set up /etc/hosts on each host

[root@k8s-01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.29.6.187 k8s-01

172.29.6.161 k8s-02

172.29.6.164 k8s-03
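
Since the same three entries have to be present on every machine, it can help to add them in a way that is safe to repeat. The following is a minimal sketch under that assumption; `add_hosts_entry` is a hypothetical helper, not something from the original scripts, and it takes the file path as a parameter so it can be tried on a copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original scripts).
# Appends "ip name" to the given hosts file unless that exact pair already exists.
add_hosts_entry() {
  local file="$1" ip="$2" name="$3"
  if awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$file"; then
    return 0                          # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Try it on a scratch copy rather than on /etc/hosts directly.
scratch="$(mktemp)"
add_hosts_entry "$scratch" 172.29.6.187 k8s-01
add_hosts_entry "$scratch" 172.29.6.161 k8s-02
add_hosts_entry "$scratch" 172.29.6.164 k8s-03
add_hosts_entry "$scratch" 172.29.6.164 k8s-03   # repeated call adds nothing
cat "$scratch"
rm -f "$scratch"
```

Once the output looks right, the same calls can point at /etc/hosts on each server.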

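3.2. Set up passwordless SSH login from the machine that runs the scripts (by default the k8s master) to the other servers.

Generate the SSH private/public key pair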

[root@k8s-01 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

/root/.ssh/id_rsa already exists.

Overwrite (y/n)?

[root@k8s-01 ~]#

Establish trust by adding every node in turn.

[root@k8s-01 ~]# ssh-copy-id root@172.29.6.187

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '172.29.6.187 (172.29.6.187)' can't be established.

ECDSA key fingerprint is SHA256:Ip3mEORwf47ub60M8lCI3SBApfz4rWmOYVac7vs1JwU.

ECDSA key fingerprint is MD5:52:96:42:ec:8b:55:f7:50:94:a6:eb:c5:ba:27:3c:87.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)
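
Repeating ssh-copy-id for every node is easy to put in a loop. The sketch below is an illustration, not the author's script: the `root` login user, the `copy_key_to_nodes` function, and the `DRY_RUN` switch are all assumptions. By default it only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Worker IPs taken from the demo environment in this post.
NODE_IPS=(172.29.6.161 172.29.6.164)

# Hypothetical switch: 1 prints the commands, 0 actually runs ssh-copy-id.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: copy the local public key to each IP given as an argument.
copy_key_to_nodes() {
  local ip
  for ip in "$@"; do
    if [[ "$DRY_RUN" == "1" ]]; then
      echo "ssh-copy-id root@${ip}"   # assumed login user: root
    else
      ssh-copy-id "root@${ip}"
    fi
  done
}

copy_key_to_nodes "${NODE_IPS[@]}"
```

Setting `DRY_RUN=0` runs the real command, which still prompts for each node's password once.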

3.3. Edit the environment.sh environment variable script

[root@k8s-01 kubeadm-single-master]# cat environment.sh

#!/usr/bin/bash

# Array of cluster master server IPs; by default the etcd cluster also runs on the master servers

export MASTER_NODE_IPS=(172.29.6.187)

# Array of master server hostnames

export MASTER_NODE_NAMES=(k8s-01)

# Array of cluster worker machine IPs

export NODE_IPS=(172.29.6.161 172.29.6.164)

# Array of hostnames matching the worker IPs

export NODE_NAMES=(k8s-02 k8s-03)
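
Because the IP and hostname arrays are matched by position, a mismatch or a mistyped hostname is easy to miss. The following is a minimal sanity check, assuming the variable names shown above; `check_same_length` is a hypothetical helper, and the arrays are repeated inline only to keep the sketch self-contained (in practice one would `source ./environment.sh` instead).

```shell
#!/usr/bin/env bash
# Values repeated from environment.sh so the sketch runs on its own.
MASTER_NODE_IPS=(172.29.6.187)
MASTER_NODE_NAMES=(k8s-01)
NODE_IPS=(172.29.6.161 172.29.6.164)
NODE_NAMES=(k8s-02 k8s-03)

# Hypothetical helper: fail if the number of IPs and hostnames differ.
check_same_length() {
  local label="$1" ip_count="$2" name_count="$3"
  if (( ip_count != name_count )); then
    echo "${label}: ${ip_count} IPs but ${name_count} hostnames" >&2
    return 1
  fi
}

check_same_length master "${#MASTER_NODE_IPS[@]}" "${#MASTER_NODE_NAMES[@]}"
check_same_length worker "${#NODE_IPS[@]}" "${#NODE_NAMES[@]}"

# Print the worker pairs so a mistyped hostname stands out.
for i in "${!NODE_IPS[@]}"; do
  echo "${NODE_IPS[i]} ${NODE_NAMES[i]}"
done
```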

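4. Deploy the K8S cluster

4.1. Install the base environment dependencies on the master and worker nodes

For example: disable the firewall, disable SELinux, turn off swap, and so on.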
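
The post does not show how the script carries out these steps. As one illustration of the "turn off swap" part, the sketch below comments out swap entries in an fstab-style file so that swap stays off after a reboot. The helper name `comment_out_swap` is hypothetical, and the file path is a parameter so the edit can be tried on a copy.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original scripts).
# Comments out every active swap line in the given fstab-style file,
# keeping a backup next to it with a .bak suffix.
comment_out_swap() {
  local file="$1"
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$file"
}

# Demonstrate on a scratch file instead of the real /etc/fstab.
scratch="$(mktemp)"
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$scratch"
comment_out_swap "$scratch"
cat "$scratch"
rm -f "$scratch" "$scratch.bak"

# Typical companions on a real node (shown as comments, not executed here):
#   swapoff -a                          # turn swap off for the running system
#   systemctl disable --now firewalld   # stop and disable the firewall
#   setenforce 0                        # switch SELinux to permissive until reboot
```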

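4.2. Install docker from binaries

4.3. Install the kubeadm, kubelet, and kubectl tools on the master and worker nodes. The default version is 1.18.20.

4.4. Initialize kubeadm on the master.

Running the deploy-k8s-single.sh script performs all of the steps above.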

[root@k8s-01 kubeadm-single-master]# bash deploy-k8s-single.sh

When you see the following output, the script has finished

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 172.29.6.187:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:f6dea2fe18eb8b08347430e2a50ff401a5332332eb920f5ba8c3812a928fac0b \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.29.6.187:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:f6dea2fe18eb8b08347430e2a50ff401a5332332eb920f5ba8c3812a928fac0b

Check the nodes

[root@k8s-01 kubeadm-single-master]# mkdir -p $HOME/.kube

[root@k8s-01 kubeadm-single-master]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-01 kubeadm-single-master]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-01 kubeadm-single-master]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-01 NotReady master 50s v1.18.20

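Deploy the calico network plugin

The image references in the calico YAML have been changed so that the images are pulled from the Alibaba Cloud registry.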

[root@k8s-01 kubeadm-single-master]# kubectl apply -f soft/calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

poddisruptionbudget.policy/calico-kube-controllers created

Once everything is in place, check the node again

[root@k8s-01 kubeadm-single-master]# kubectl get pod -o wide -A

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system calico-kube-controllers-8648d6684-sxjdn 1/1 Running 0 2m58s 172.30.61.195 k8s-01 <none> <none>

kube-system calico-node-mrch4 1/1 Running 0 2m58s 172.29.6.187 k8s-01 <none> <none>

kube-system coredns-7ff77c879f-hrnf9 1/1 Running 0 4m20s 172.30.61.194 k8s-01 <none> <none>

kube-system coredns-7ff77c879f-z8q56 1/1 Running 0 4m20s 172.30.61.193 k8s-01 <none> <none>

kube-system etcd-k8s-01 1/1 Running 0 4m28s 172.29.6.187 k8s-01 <none> <none>

kube-system kube-apiserver-k8s-01 1/1 Running 0 4m28s 172.29.6.187 k8s-01 <none> <none>

kube-system kube-controller-manager-k8s-01 1/1 Running 0 4m28s 172.29.6.187 k8s-01 <none> <none>

kube-system kube-proxy-ztnrp 1/1 Running 1 4m20s 172.29.6.187 k8s-01 <none> <none>

kube-system kube-scheduler-k8s-01 1/1 Running 0 4m28s 172.29.6.187 k8s-01 <none> <none>

[root@k8s-01 kubeadm-single-master]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-01 Ready master 4m40s v1.18.20

4.5. Worker nodes must be joined to the k8s cluster manually.

The join command is saved in the following file:

tail -f /usr/local/src/kubeadm-deploy.log
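
Instead of copying the command out of the log by hand, it can be extracted with a short awk filter. This is a sketch only: it assumes the log contains the kubeadm init output in the layout shown earlier (continuation lines ending in a backslash), and `extract_worker_join` is a hypothetical helper name. It returns the last join command that does not carry `--control-plane`.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original scripts).
# Prints the worker "kubeadm join" command from a kubeadm init log as one line.
extract_worker_join() {
  awk '
    /^[ \t]*kubeadm join / { cmd = ""; capture = 1 }
    capture {
      line = $0
      sub(/^[ \t]+/, "", line)             # strip leading indentation
      cont = sub(/[ \t]*\\$/, "", line)    # 1 if the line ended with a backslash
      cmd = (cmd == "" ? line : cmd " " line)
      if (!cont) {
        capture = 0
        if (cmd !~ /--control-plane/) last = cmd
      }
    }
    END { if (last != "") print last }
  ' "$1"
}

log=/usr/local/src/kubeadm-deploy.log
if [[ -r "$log" ]]; then
  extract_worker_join "$log"
fi
```

The printed line can then be run on each worker node as root.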

Join the other two nodes to the cluster.

[root@k8s-02 ~]# kubeadm join 172.29.6.187:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:f6dea2fe18eb8b08347430e2a50ff401a5332332eb920f5ba8c3812a928fac0b

W0211 11:20:40.628691 15427 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Do the same on k8s-03.

Check the nodes

[root@k8s-01 kubeadm-single-master]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-01 Ready master 6m39s v1.18.20

k8s-02 NotReady <none> 35s v1.18.20

k8s-03 NotReady <none> 33s v1.18.20

[root@k8s-01 kubeadm-single-master]# kubectl get pod -o wide -A

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system calico-kube-controllers-8648d6684-sxjdn 1/1 Running 0 5m9s 172.30.61.195 k8s-01 <none> <none>

kube-system calico-node-56j65 0/1 Init:0/3 0 44s 172.29.6.161 k8s-02 <none> <none>

kube-system calico-node-mrch4 1/1 Running 0 5m9s 172.29.6.187 k8s-01 <none> <none>

kube-system calico-node-xpb5j 0/1 Init:0/3 0 42s 172.29.6.164 k8s-03 <none> <none>

kube-system coredns-7ff77c879f-hrnf9 1/1 Running 0 6m31s 172.30.61.194 k8s-01 <none> <none>

kube-system coredns-7ff77c879f-z8q56 1/1 Running 0 6m31s 172.30.61.193 k8s-01 <none> <none>

kube-system etcd-k8s-01 1/1 Running 0 6m39s 172.29.6.187 k8s-01 <none> <none>

kube-system kube-apiserver-k8s-01 1/1 Running 0 6m39s 172.29.6.187 k8s-01 <none> <none>

kube-system kube-controller-manager-k8s-01 1/1 Running 0 6m39s 172.29.6.187 k8s-01 <none> <none>

kube-system kube-proxy-v7lsz 0/1 ContainerCreating 0 44s 172.29.6.161 k8s-02 <none> <none>

kube-system kube-proxy-w7mnd 0/1 ContainerCreating 0 42s 172.29.6.164 k8s-03 <none> <none>

kube-system kube-proxy-ztnrp 1/1 Running 1 6m31s 172.29.6.187 k8s-01 <none> <none>

kube-system kube-scheduler-k8s-01 1/1 Running 0 6m39s 172.29.6.187 k8s-01 <none> <none>

Wait until calico and kube-proxy on the worker nodes are all in the Running state

[root@k8s-01 kubeadm-single-master]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-01 Ready master 10m v1.18.20

k8s-02 Ready <none> 4m38s v1.18.20

k8s-03 Ready <none> 4m36s v1.18.20
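
Rather than re-running kubectl get node by eye, the STATUS column can be counted with a small filter. This is a minimal sketch; `count_not_ready` is a hypothetical helper that reads `kubectl get node` style output on standard input and treats any status other than exactly `Ready` as not ready.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original scripts).
# Reads "kubectl get node" style output on stdin and prints how many nodes
# have a STATUS column that is not exactly "Ready".
count_not_ready() {
  awk 'NR > 1 && NF >= 2 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Sample taken from the output shown earlier, while the workers were still joining.
printf '%s\n' \
  'NAME STATUS ROLES AGE VERSION' \
  'k8s-01 Ready master 6m39s v1.18.20' \
  'k8s-02 NotReady <none> 35s v1.18.20' \
  'k8s-03 NotReady <none> 33s v1.18.20' | count_not_ready
```

On the master, `kubectl get node | count_not_ready` prints 0 once every node has reached Ready.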

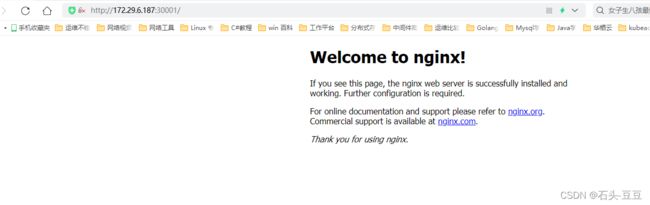

5. Verification.

[root@k8s-01 work]# pwd

/mnt/kubeadm-single-master/work

[root@k8s-01 work]# kubectl apply -f nginx-demo.yaml

deployment.apps/nginx-deployment created

service/nginx-deployment created