A Homemade Succulent Lookup Tool

Background:

A refresher on common operations in Python Qt (PyQt5) and web page parsing.

Preparation:

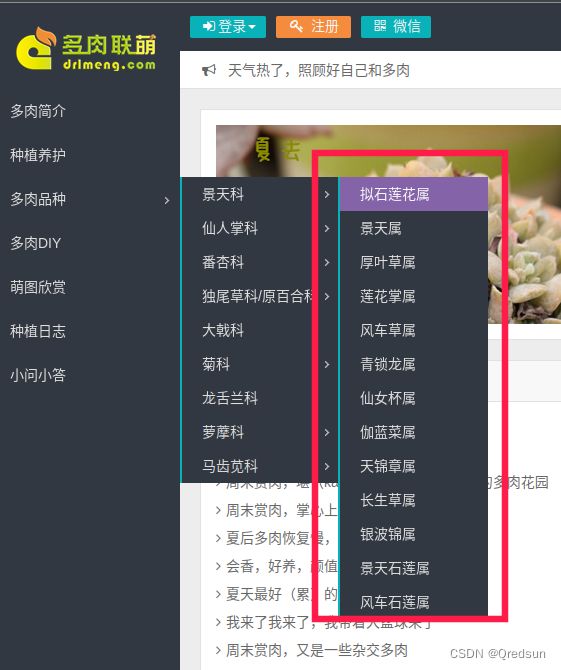

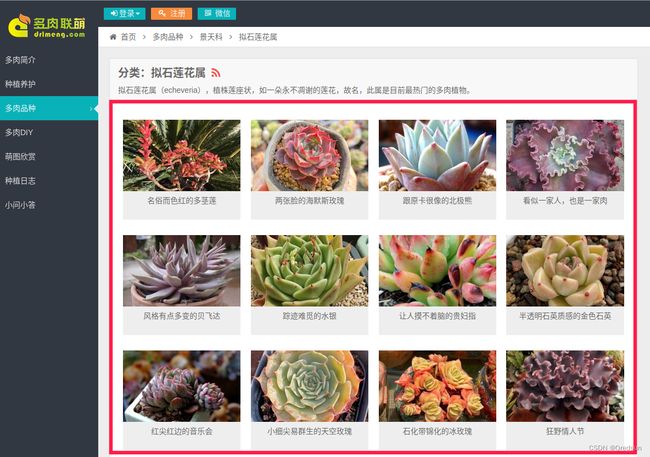

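- A succulent information website (the crawler uses https://www.drlmeng.com/)

- Third-party Python libraries used:

  - lxml

  - PyQt5

  - requests, loguru, and Pillow (also imported by the code in this post)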

Result:

Features:

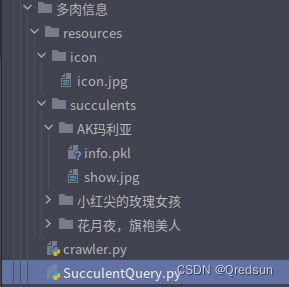

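- Random read: load a stored succulent entry from local storage

- Data update: fetch 5 succulent entries from the succulent information website

- Query: look up locally stored succulent information by the succulent's name

- Displayed content:

  - Succulent name

  - Succulent description

  - Succulent image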
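
The query and random-read features both rest on a simple on-disk layout: one directory per succulent, holding a pickled record (`info.pkl`) next to its image. The sketch below illustrates that idea with the standard library only. The helper names (`save_entry`, `load_entry`, `random_entry`) and the sample record are made up for illustration; the tool itself keeps its data under `resources/succulents`.

```python
import os
import pickle
import random
import tempfile


def save_entry(base_dir, name, image_url, descriptions):
    """Store one record as <base_dir>/<name>/info.pkl (hypothetical helper)."""
    entry_dir = os.path.join(base_dir, name)
    os.makedirs(entry_dir, exist_ok=True)
    with open(os.path.join(entry_dir, 'info.pkl'), 'wb') as f:
        pickle.dump((name, image_url, descriptions), f)


def load_entry(base_dir, name):
    """Return the stored descriptions for `name`, or None if nothing is stored."""
    info_path = os.path.join(base_dir, name, 'info.pkl')
    if not name or not os.path.exists(info_path):
        return None
    with open(info_path, 'rb') as f:
        # The record is a tuple; the descriptions are its last element.
        return pickle.load(f)[-1]


def random_entry(base_dir):
    """Pick a stored name at random and return (name, descriptions)."""
    names = sorted(os.listdir(base_dir))
    if not names:
        return None
    name = random.choice(names)
    return name, load_entry(base_dir, name)


def demo():
    """Round-trip one made-up record through a temporary directory."""
    with tempfile.TemporaryDirectory() as base_dir:
        save_entry(base_dir, 'Echeveria', '',
                   ['Crassulaceae', 'A rosette-forming succulent.'])
        return (load_entry(base_dir, 'Echeveria'),
                load_entry(base_dir, 'missing'),
                random_entry(base_dir))
```

Returning `None` for an empty or unknown name keeps the caller from trying to open files that do not exist, which matters when the query box is left blank.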

Implementation:

Crawling succulent information:

Implementation code:

    # -*- coding:UTF-8 -*-
    """
    @ProjectName  : pyExamples
    @FileName     : crawler
    @Description  : Crawl succulent information
    @Time         : 2023/8/21 2:28 PM
    @Author       : Qredsun
    """
    import os
    import time
    import random
    import pickle

    import requests
    from lxml import etree
    from loguru import logger


    class SucculentCrawler():
        """Succulent data crawler."""

        DEFAULT_UPDATE_NUM = 5  # number of succulent entries fetched per update

        def __init__(self, **kwargs):
            self.__url = 'https://www.drlmeng.com/'
            self.referer_list = ["http://www.google.com/", "http://www.bing.com/",
                                 "http://www.baidu.com/", "https://www.360.cn/"]
            self.ua_list = [
                'Mozilla/5.0 (Linux; Android 5.1.1; Z828 Build/LMY47V) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/42.0.2311.111 Mobile Safari/537.36',
                'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/50.0.2661.75 Safari/537.36',
                'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_2) AppleWebKit/537.22 (KHTML, like Gecko) Chrome/25.0.1364.172 Safari/537.22',
                'Mozilla/5.0 (iPad; CPU OS 8_3 like Mac OS X) AppleWebKit/600.1.4 (KHTML, like Gecko) CriOS/47.0.2526.107 Mobile/12F69 Safari/600.1.4',
                'Mozilla/5.0 (iPad; CPU OS 11_2_5 like Mac OS X) AppleWebKit/604.1.34 (KHTML, like Gecko) CriOS/64.0.3282.112 Mobile/15D60 Safari/604.1',
                'Mozilla/5.0 (Linux; Android 7.1.1; SM-T350 Build/NMF26X) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.111 Safari/537.36',
                'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.98 Safari/537.36',
                'Mozilla/5.0 (Windows NT 5.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/43.0.2357.124 Safari/537.36',
                'Mozilla/5.0 (Linux; Android 6.0.1; SM-G610F Build/MMB29K) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/56.0.2924.87 Mobile Safari/537.36',
                'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/54.0.2840.98 Safari/537.36',
                'Mozilla/5.0 (Linux; Android 5.1.1; 5065N Build/LMY47V; wv) AppleWebKit/537.36 (KHTML, like Gecko) Version/4.0 Chrome/46.0.2490.76 Mobile Safari/537.36',
                'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.79 Safari/537.36',
                'Mozilla/5.0 (Windows NT 6.2; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/47.0.2526.80 Safari/537.36',
            ]
            self.page_urls = self.__getAllPageUrls()
            self.savedir = 'resources/succulents'
            self.page_pointer = 0

        def next(self):
            """Crawl the next page and save the result."""
            data = self.__parse_drlmeng_subject()
            self.__saveItem(data)
            time.sleep(random.random())  # short random pause between requests
            return False

        def __parse_drlmeng_subject(self):
            """Parse one family page: family name, family description, one random succulent."""
            drlmeng_title = ''
            drlmeng_category_desc = []
            drlmeng_img_url = ''
            # URL of the current family page
            page_url = self.page_urls[self.page_pointer]
            # Advance first, so a page that fails to parse cannot stall the caller's loop
            self.page_pointer += 1
            res = requests.get(page_url, headers=self.__randomHeaders())
            html = etree.HTML(res.text)
            archive_head = html.xpath('//*[@id="archive-head"]')
            if not len(archive_head):
                logger.debug('Failed to parse the succulent family')
                return drlmeng_title, drlmeng_img_url, drlmeng_category_desc
            archive_head = archive_head[0]
            drlmeng_subject = archive_head.xpath('.//h1')
            if len(drlmeng_subject):
                drlmeng_subject = (drlmeng_subject[0].text or '').strip()
                logger.debug(f'Family: {drlmeng_subject}')
                drlmeng_category_desc.append(drlmeng_subject)
            drlmeng_type_description = archive_head.xpath('.//div/p')
            if len(drlmeng_type_description):
                drlmeng_type_description = (drlmeng_type_description[0].text or '').strip()
                logger.debug(f'Family description: {drlmeng_type_description}')
                drlmeng_category_desc.append(drlmeng_type_description)
            # Extract the image and detail-page link of one succulent on this page
            drlmengs = html.xpath('//ul[@class="posts-ul"]/li')
            drlmengs = drlmengs if drlmengs else html.xpath('//li[@class="row-thumb"]')
            if len(drlmengs):
                # randrange excludes the upper bound; randint(0, len) could index past the end
                random_index = random.randrange(len(drlmengs))
                drlmeng_info = drlmengs[random_index].xpath('.//a[@class="post-thumbnail"]')
                if len(drlmeng_info):
                    drlmeng_info = drlmeng_info[0]
                    drlmeng_title = drlmeng_info.attrib.get('title') or ''
                    drlmeng_title = drlmeng_title.replace('/', '_')  # keep the title usable as a directory name
                    drlmeng_desc_url = drlmeng_info.attrib.get('href')
                    drlmeng_category_desc.append(self.__parse_drlmeng_category(drlmeng_desc_url))
                    drlmeng_img_urls = drlmeng_info.xpath('.//img')
                    if len(drlmeng_img_urls):
                        drlmeng_img_url = drlmeng_img_urls[0].attrib.get('lazydata-src') or ''
            return drlmeng_title, drlmeng_img_url, drlmeng_category_desc

        def __parse_drlmeng_category(self, drlmeng_desc_url):
            """Parse a succulent's detail page (genus level) and return its description text."""
            content = ''
            res = requests.get(drlmeng_desc_url, headers=self.__randomHeaders())
            html = etree.HTML(res.text)
            entry = html.xpath('//div[@class="entry"]')
            if len(entry):
                entry = entry[0]
                line_content = entry.xpath('.//p/text()')
                for line in line_content:
                    line = line.replace('\u3000', '')  # drop full-width spaces
                    line = line.strip()
                    content += line
            logger.debug(f'Parsed succulent details: {content}')
            return content

        def __saveItem(self, data):
            """Save the image and the pickled record under savedir/<title>/."""
            # makedirs also creates the parent directory "resources" if it is missing
            os.makedirs(self.savedir, exist_ok=True)
            if not data[0]:
                return
            savepath = os.path.join(self.savedir, data[0])
            os.makedirs(savepath, exist_ok=True)
            if data[1]:  # skip the download when no image URL was found
                with open(os.path.join(savepath, 'show.jpg'), 'wb') as f:
                    f.write(requests.get(data[1], headers=self.__randomHeaders()).content)
            with open(os.path.join(savepath, 'info.pkl'), 'wb') as f:
                pickle.dump(data, f)

        def __getAllPageUrls(self):
            """Collect page links from the site menu, capped at DEFAULT_UPDATE_NUM."""
            res = requests.get(self.__url, headers=self.__randomHeaders())
            html = etree.HTML(res.text)
            ul_list = html.xpath('//ul[@class="sub-menu"]')
            page_urls = []
            for ul in ul_list:
                page_urls.extend(ul.xpath('.//a/@href'))
                if len(page_urls) >= self.DEFAULT_UPDATE_NUM:
                    page_urls = page_urls[:self.DEFAULT_UPDATE_NUM]
                    break
            return page_urls

        def __randomHeaders(self):
            """Build request headers with a random user agent and referer."""
            return {'user-agent': random.choice(self.ua_list),
                    'referer': random.choice(self.referer_list)}


    if __name__ == '__main__':
        c = SucculentCrawler()
        c.next()
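
The crawler's parsing step can be tried offline. The sketch below runs similar XPath expressions against a small inline HTML string, so no network access is needed. `SAMPLE_HTML` is made-up markup that only mimics the structure the crawler looks for, and `parse_listing` is a hypothetical helper, not part of the tool. It also shows why `random.randrange(n)` is the safer way to pick a list index: `random.randint(0, n)` includes `n` itself.

```python
import random

from lxml import etree

# Made-up markup that mimics the structure the crawler's XPath expressions expect.
SAMPLE_HTML = """
<html><body>
  <div id="archive-head"><h1> Crassulaceae </h1><div><p>Sample family description.</p></div></div>
  <ul class="posts-ul">
    <li><a class="post-thumbnail" title="Plant A/B" href="https://example.com/a">
      <img lazydata-src="https://example.com/a.jpg"/></a></li>
    <li><a class="post-thumbnail" title="Plant C" href="https://example.com/c">
      <img lazydata-src="https://example.com/c.jpg"/></a></li>
  </ul>
</body></html>
"""


def parse_listing(html_text, rng=random):
    """Return (family, title, detail_url, image_url) for one randomly chosen item."""
    html = etree.HTML(html_text)
    heads = html.xpath('//*[@id="archive-head"]//h1')
    family = (heads[0].text or '').strip() if heads else ''
    items = html.xpath('//ul[@class="posts-ul"]/li')
    if not items:
        return family, '', '', ''
    # randrange(n) yields 0..n-1; randint(0, n) may also yield n, which is out of range.
    item = items[rng.randrange(len(items))]
    links = item.xpath('.//a[@class="post-thumbnail"]')
    if not links:
        return family, '', '', ''
    link = links[0]
    # Replace "/" so the title can be used as a directory name.
    title = (link.attrib.get('title') or '').replace('/', '_')
    images = link.xpath('.//img')
    image_url = images[0].attrib.get('lazydata-src', '') if images else ''
    return family, title, link.attrib.get('href', ''), image_url
```

Calling `parse_listing(SAMPLE_HTML)` returns the family name plus the title, detail link, and image link of one of the two sample items.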

Window interaction:

    # -*- coding:UTF-8 -*-
    """
    @ProjectName  : pyExamples
    @FileName     : SucculentQuery
    @Description  : GUI shell for the succulent lookup tool
    @Time         : 2023/8/21 2:28 PM
    @Author       : Qredsun
    """
    import io
    import os
    import sys
    import pickle
    import random
    import threading

    from PIL import Image
    from PyQt5.QtCore import pyqtSignal
    from PyQt5.QtGui import QIcon, QImage, QPixmap
    from PyQt5.QtWidgets import (QApplication, QGridLayout, QLabel, QLineEdit,
                                 QPushButton, QTextEdit, QWidget)

    from crawler import SucculentCrawler


    class SucculentQuery(QWidget):
        """Succulent data viewer."""

        DATA_DIR = 'resources/succulents/'
        IDLE_TIP = 'Data status: not updating, update progress: 0/0'
        # Carries status text from the worker thread to the GUI thread
        status_changed = pyqtSignal(str)

        def __init__(self, parent=None, **kwargs):
            super(SucculentQuery, self).__init__(parent)
            self.setWindowTitle('Succulent Data Query - WeChat Official Account: Qredsun')
            self.setWindowIcon(QIcon('resources/icon/icon.jpg'))
            # Widgets
            self.label_name = QLabel('Succulent name: ')
            self.line_edit = QLineEdit()
            self.button_find = QPushButton()
            self.button_find.setText('Query')
            self.label_result = QLabel('Query result:')
            self.show_label = QLabel()
            self.show_label.setFixedSize(300, 300)
            self.showLabelImage('resources/icon/icon.png')
            self.text_result = QTextEdit()
            self.button_random = QPushButton()
            self.button_random.setText('Random read')
            self.button_update = QPushButton()
            self.button_update.setText('Update data')
            self.tip_label = QLabel()
            self.tip_label.setText(self.IDLE_TIP)
            # Layout
            self.grid = QGridLayout()
            self.grid.addWidget(self.label_name, 0, 0, 1, 1)
            self.grid.addWidget(self.line_edit, 0, 1, 1, 30)
            self.grid.addWidget(self.button_find, 0, 31, 1, 1)
            self.grid.addWidget(self.button_random, 0, 32, 1, 1)
            self.grid.addWidget(self.button_update, 0, 33, 1, 1)
            self.grid.addWidget(self.tip_label, 1, 0, 1, 31)
            self.grid.addWidget(self.label_result, 2, 0)
            self.grid.addWidget(self.text_result, 3, 0, 1, 34)
            self.grid.addWidget(self.show_label, 3, 34, 1, 1)
            self.setLayout(self.grid)
            self.resize(600, 400)
            # Event bindings
            self.button_find.clicked.connect(self.find)
            self.button_random.clicked.connect(self.randomRead)
            self.button_update.clicked.connect(
                lambda _: threading.Thread(target=self.updateData, daemon=True).start())
            # The label is only ever changed in the GUI thread, via this signal
            self.status_changed.connect(self.tip_label.setText)

        def find(self):
            """Query by name."""
            name = self.line_edit.text().strip()
            if name:  # an empty name would resolve to the data directory itself
                self.showSucculent(os.path.join(self.DATA_DIR, name))

        def randomRead(self):
            """Show a randomly chosen stored entry."""
            if not os.path.isdir(self.DATA_DIR):
                return
            names = os.listdir(self.DATA_DIR)
            if not names:
                return
            name = random.choice(names)
            self.line_edit.setText(name)
            self.showSucculent(os.path.join(self.DATA_DIR, name))

        def showSucculent(self, datadir):
            """Load one stored entry and display its image and description."""
            infopath = os.path.join(datadir, 'info.pkl')
            if not os.path.exists(infopath):
                return
            imagepath = os.path.join(datadir, 'show.jpg')
            if os.path.exists(imagepath):
                self.showLabelImage(imagepath)
            with open(infopath, 'rb') as f:
                intro = pickle.load(f)[-1]
            self.showIntroduction(intro)

        def updateData(self):
            """Update the data; runs in a worker thread.

            Named updateData so that it does not shadow QWidget.update().
            """
            crawler_handle = SucculentCrawler()
            total = len(crawler_handle.page_urls)
            while crawler_handle.page_pointer < total:
                self.status_changed.emit(
                    'Data status: updating, update progress: %s/%s'
                    % (crawler_handle.page_pointer + 1, total))
                crawler_handle.next()
            self.status_changed.emit(self.IDLE_TIP)

        def showIntroduction(self, intro):
            """Show the succulent description in the text box."""
            self.text_result.setText('\n\n'.join(intro))

        def showLabelImage(self, imagepath):
            """Show an image on the label."""
            # Image.ANTIALIAS was removed in Pillow 10; LANCZOS is the same filter.
            # convert('RGB') lets images with an alpha channel be saved as JPEG.
            image = Image.open(imagepath).convert('RGB').resize((300, 300), Image.LANCZOS)
            fp = io.BytesIO()
            image.save(fp, 'JPEG')
            qtimg = QImage()
            qtimg.loadFromData(fp.getvalue(), 'JPEG')
            self.show_label.setPixmap(QPixmap.fromImage(qtimg))


    if __name__ == '__main__':
        app = QApplication(sys.argv)
        query_demo = SucculentQuery()
        query_demo.show()
        sys.exit(app.exec_())
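
Qt widgets are generally meant to be touched only from the GUI thread, so a background update thread is usually wired to the interface through signals rather than direct widget calls. The following is a minimal sketch of that pattern, not the tool's actual code: `Worker`, `ProgressLog`, and `demo` are hypothetical names, and `QCoreApplication` stands in for `QApplication` so the example can run without a display.

```python
import sys
import threading

from PyQt5.QtCore import QCoreApplication, QObject, pyqtSignal, pyqtSlot


class Worker(QObject):
    """Runs in a plain Python thread and reports progress through signals."""
    progress = pyqtSignal(int, int)
    finished = pyqtSignal()

    def run(self, total):
        for done in range(1, total + 1):
            # Emitted from the worker thread; delivery is queued to the receiver's thread.
            self.progress.emit(done, total)
        self.finished.emit()


class ProgressLog(QObject):
    """Stands in for the status label; its slot runs in the thread that owns it."""

    def __init__(self):
        super().__init__()
        self.messages = []

    @pyqtSlot(int, int)
    def record(self, done, total):
        self.messages.append('%s/%s' % (done, total))


def demo(total=3):
    """Run the worker in a background thread and collect its progress messages."""
    app = QCoreApplication.instance() or QCoreApplication(sys.argv)
    worker, log = Worker(), ProgressLog()
    worker.progress.connect(log.record)
    worker.finished.connect(app.quit)
    threading.Thread(target=worker.run, args=(total,), daemon=True).start()
    app.exec_()  # returns once the queued `finished` signal triggers quit
    return log.messages
```

In the real window, the slot would be something like the status label's `setText`, connected once when the widget is set up.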