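Course notes on Mu Li's "Dive into Deep Learning": 05 Linear Algebra

Contents

05 Linear Algebra

1. Linear algebra

2. Linear algebra implementation

3. Summing along specific axes

05 Linear Algebra

1. Linear algebra

2. Linear algebra implementation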

import torch

# A scalar is represented by a tensor with a single element

x = torch.tensor(3.0)

y = torch.tensor(2.0)

print(x+y)

print(x*y)

print(x/y)

print(x**y)

# A vector can be viewed as a list of scalar values

x = torch.arange(4)

print(x)

# Any element of a tensor can be accessed by its index

print(x[3])

# Get the length of a tensor

print(len(x))

# A tensor with a single axis has a shape with a single element

print(x.shape)

# Create a matrix of shape m x n by specifying its two dimensions m and n

A = torch.arange(20).reshape(5, 4)

print(A)

# Transpose of a matrix

print(A.T)

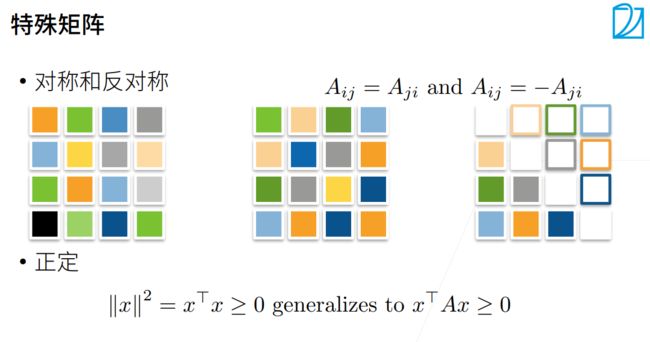

# A symmetric matrix equals its own transpose

B = torch.tensor([[1, 2, 3], [2, 0, 4], [3, 4, 5]])

print(B)

print(B == B.T)

# Just as vectors generalize scalars and matrices generalize vectors, we can build data structures with even more axes

X = torch.arange(24).reshape(2, 3, 4)

print(X)

# For any two tensors of the same shape, the result of any elementwise binary operation is a tensor of that same shape

A = torch.arange(20, dtype=torch.float32).reshape(5, 4)

B = A.clone()  # Allocate new memory and assign a copy of A to B

print(A)

print(A+B)

# The elementwise product of two matrices is called the Hadamard product

print(A * B)

a = 2

x = torch.arange(24).reshape(2, 3, 4)

print(a + x)

print((a * x).shape)

# Compute the sum of a tensor's elements

x = torch.arange(4, dtype=torch.float32)

print(x)

print(x.sum())

# The sum of the elements of a tensor of arbitrary shape

a = torch.arange(20*2).reshape(2, 5, 4)

print(a.shape)

print(a.sum())

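# Specify the axis along which the tensor is reduced by summation

# Note: this first example sums the 5 x 4 float matrix A defined earlier, not the 3-D tensor a

a_sum_axis0 = A.sum(axis=0)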

print(a_sum_axis0)

print(a_sum_axis0.shape)

a_sum_axis1 = a.sum(axis=1)

print(a_sum_axis1)

print(a_sum_axis1.shape)

print(a.sum(axis=[0, 1]))

print(a.sum(axis=[0, 1]).shape)
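To make the `axis` argument concrete, here is a minimal plain-Python sketch (no PyTorch needed) that sums a 3-D nested list along a single axis. The helper names `arange_3d` and `sum_axis` are hypothetical and exist only for this illustration; this is not how PyTorch implements `sum`.

```python
def arange_3d(d0, d1, d2):
    """Build a d0 x d1 x d2 nested list filled with 0, 1, 2, ... in row-major order."""
    return [[[(i * d1 + j) * d2 + k for k in range(d2)]
             for j in range(d1)]
            for i in range(d0)]


def sum_axis(t, axis):
    """Sum a 3-D nested list along one axis; that axis disappears from the result."""
    d0, d1, d2 = len(t), len(t[0]), len(t[0][0])
    if axis == 0:
        return [[sum(t[i][j][k] for i in range(d0)) for k in range(d2)] for j in range(d1)]
    if axis == 1:
        return [[sum(t[i][j][k] for j in range(d1)) for k in range(d2)] for i in range(d0)]
    if axis == 2:
        return [[sum(t[i][j][k] for k in range(d2)) for j in range(d1)] for i in range(d0)]
    raise ValueError("axis must be 0, 1, or 2")


a = arange_3d(2, 5, 4)   # same values as torch.arange(40).reshape(2, 5, 4)
s1 = sum_axis(a, 1)      # axis 1 (length 5) is summed away, leaving shape (2, 4)
print(s1)                # [[40, 45, 50, 55], [140, 145, 150, 155]]
```

The printed values agree with the output of `a.sum(axis=1)` listed further down in these notes.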

# A quantity related to the sum is the mean (also called the average)

a = torch.arange(20*2, dtype=torch.float32).reshape(2, 5, 4)

print(a.mean())

print(a.sum() / a.numel())

# Keep the number of axes unchanged when computing a sum or mean

sum_a = a.sum(axis=0, keepdims=True)

print(sum_a)

# Divide a by sum_a using broadcasting

print(a / sum_a)

# Compute the cumulative sum of the elements of a along an axis

print(a.cumsum(axis=0))
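The two operations above (dividing by a sum that kept its axis, and the cumulative sum) are easier to follow on a tiny case. The following plain-Python sketch uses a hypothetical 2 x 3 matrix `m` chosen only for illustration; it mimics the arithmetic, not PyTorch's broadcasting machinery.

```python
from itertools import accumulate

m = [[1.0, 2.0, 3.0],
     [3.0, 6.0, 9.0]]   # shape (2, 3)

# Sum along axis 0 but keep that axis with length 1: shape (1, 3)
col_sum = [[sum(col) for col in zip(*m)]]

# "Broadcast" the single row of col_sum across every row of m
normalized = [[x / s for x, s in zip(row, col_sum[0])] for row in m]

# Cumulative sum along axis 0: a running total down each column
cum_cols = [list(accumulate(col)) for col in zip(*m)]   # one list per column
cumsum0 = [list(row) for row in zip(*cum_cols)]         # back to row-major layout

print(col_sum)      # [[4.0, 8.0, 12.0]]
print(normalized)   # [[0.25, 0.25, 0.25], [0.75, 0.75, 0.75]]
print(cumsum0)      # [[1.0, 2.0, 3.0], [4.0, 8.0, 12.0]]
```

After the division, the entries that share a position across axis 0 add up to 1, and the last slice of the cumulative sum equals the plain sum along that axis.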

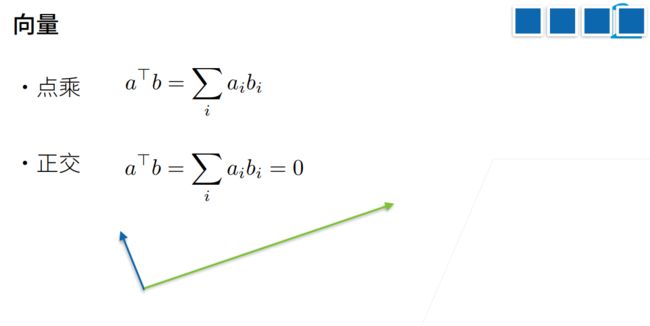

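# The dot product is the sum of the elementwise products at matching positions

a = torch.tensor([1., 2., 3., 4.])  # must be 1-D: torch.dot only accepts 1-D tensors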

b = torch.ones(4, dtype=torch.float32)

print(b)

print(torch.dot(a, b))

# The dot product of two vectors can also be expressed as an elementwise multiplication followed by a sum

print(torch.sum(a * b))
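As a cross-check of the definition, the dot product can be written in a few lines of plain Python. The helper name `dot` is hypothetical and only mirrors the arithmetic; note that `torch.dot` itself expects two 1-D tensors with the same number of elements.

```python
def dot(u, v):
    """Dot product of two equal-length 1-D sequences."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    # Multiply matching positions, then add everything up
    return sum(ui * vi for ui, vi in zip(u, v))


a = [1.0, 2.0, 3.0, 4.0]
b = [1.0, 1.0, 1.0, 1.0]
print(dot(a, b))   # 10.0
```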

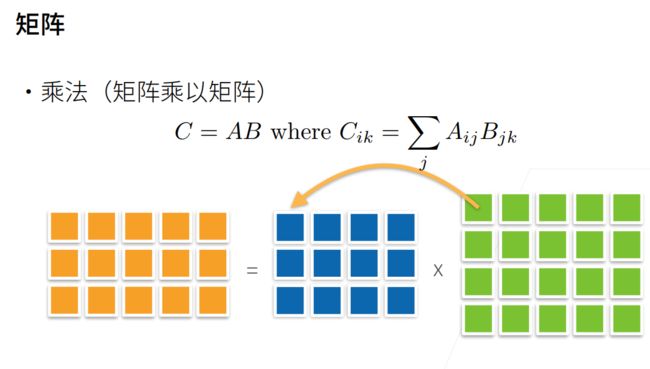

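# For A of shape n x k and B of shape k x m, the matrix-matrix product AB can be viewed as m matrix-vector products whose results are concatenated into an n x m matrix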

a = torch.ones(3, 4)

b = torch.ones(4, 3)

print(torch.mm(a, b))
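The "m matrix-vector products" view can be sketched directly in plain Python. The helper names `matvec` and `matmul_by_columns` are hypothetical, and the code is meant to illustrate the idea rather than to be efficient or to reflect how `torch.mm` works internally.

```python
def matvec(A, x):
    """Matrix-vector product: one dot product per row of A."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


def matmul_by_columns(A, B):
    """Compute AB by multiplying A with each column of B and placing the results side by side."""
    columns_of_B = list(zip(*B))                                # m columns, each of length k
    result_columns = [matvec(A, col) for col in columns_of_B]   # m vectors of length n
    return [list(row) for row in zip(*result_columns)]          # n x m, row-major


A = [[1.0] * 4 for _ in range(3)]   # 3 x 4, like torch.ones(3, 4)
B = [[1.0] * 3 for _ in range(4)]   # 4 x 3, like torch.ones(4, 3)
print(matmul_by_columns(A, B))      # [[4.0, 4.0, 4.0], [4.0, 4.0, 4.0], [4.0, 4.0, 4.0]]
```

Each entry is 4.0 because it is the dot product of two all-ones vectors of length 4, which matches the `torch.mm` output in these notes.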

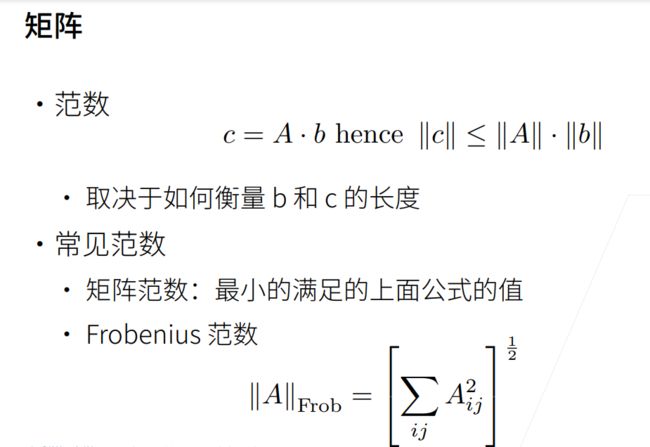

# The L2 norm is the square root of the sum of the squares of a vector's elements

x = torch.tensor([3.0, -4.0])

print(torch.norm(x))

# The L1 norm is the sum of the absolute values of a vector's elements

print(torch.abs(x).sum())

# The Frobenius norm of a matrix is the square root of the sum of the squares of its elements

x = torch.norm(torch.ones((4, 9)))

print(x)
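The three norms above follow directly from their definitions. Here is a minimal plain-Python sketch using only the standard `math` module; the function names `l1_norm`, `l2_norm`, and `frobenius_norm` are hypothetical and chosen for this illustration.

```python
import math


def l1_norm(v):
    """Sum of the absolute values of the elements."""
    return sum(abs(x) for x in v)


def l2_norm(v):
    """Square root of the sum of the squared elements."""
    return math.sqrt(sum(x * x for x in v))


def frobenius_norm(M):
    """L2 norm taken over all elements of a matrix given as a list of rows."""
    return math.sqrt(sum(x * x for row in M for x in row))


x = [3.0, -4.0]
ones_4x9 = [[1.0] * 9 for _ in range(4)]   # like torch.ones((4, 9))

print(l2_norm(x))                 # 5.0
print(l1_norm(x))                 # 7.0
print(frobenius_norm(ones_4x9))   # 6.0
```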

Output:

tensor(5.)

tensor(6.)

tensor(1.5000)

tensor(9.)

tensor([0, 1, 2, 3])

tensor(3)

4

torch.Size([4])

tensor([[ 0, 1, 2, 3],

[ 4, 5, 6, 7],

[ 8, 9, 10, 11],

[12, 13, 14, 15],

[16, 17, 18, 19]])

tensor([[ 0, 4, 8, 12, 16],

[ 1, 5, 9, 13, 17],

[ 2, 6, 10, 14, 18],

[ 3, 7, 11, 15, 19]])

tensor([[1, 2, 3],

[2, 0, 4],

[3, 4, 5]])

tensor([[True, True, True],

[True, True, True],

[True, True, True]])

tensor([[[ 0, 1, 2, 3],

[ 4, 5, 6, 7],

[ 8, 9, 10, 11]],

[[12, 13, 14, 15],

[16, 17, 18, 19],

[20, 21, 22, 23]]])

tensor([[ 0., 1., 2., 3.],

[ 4., 5., 6., 7.],

[ 8., 9., 10., 11.],

[12., 13., 14., 15.],

[16., 17., 18., 19.]])

tensor([[ 0., 2., 4., 6.],

[ 8., 10., 12., 14.],

[16., 18., 20., 22.],

[24., 26., 28., 30.],

[32., 34., 36., 38.]])

tensor([[ 0., 1., 4., 9.],

[ 16., 25., 36., 49.],

[ 64., 81., 100., 121.],

[144., 169., 196., 225.],

[256., 289., 324., 361.]])

tensor([[[ 2, 3, 4, 5],

[ 6, 7, 8, 9],

[10, 11, 12, 13]],

[[14, 15, 16, 17],

[18, 19, 20, 21],

[22, 23, 24, 25]]])

torch.Size([2, 3, 4])

tensor([0., 1., 2., 3.])

tensor(6.)

torch.Size([2, 5, 4])

tensor(780)

tensor([40., 45., 50., 55.])

torch.Size([4])

tensor([[ 40, 45, 50, 55],

[140, 145, 150, 155]])

torch.Size([2, 4])

tensor([180, 190, 200, 210])

torch.Size([4])

tensor(19.5000)

tensor(19.5000)

tensor([[[20., 22., 24., 26.],

[28., 30., 32., 34.],

[36., 38., 40., 42.],

[44., 46., 48., 50.],

[52., 54., 56., 58.]]])

tensor([[[0.0000, 0.0455, 0.0833, 0.1154],

[0.1429, 0.1667, 0.1875, 0.2059],

[0.2222, 0.2368, 0.2500, 0.2619],

[0.2727, 0.2826, 0.2917, 0.3000],

[0.3077, 0.3148, 0.3214, 0.3276]],

[[1.0000, 0.9545, 0.9167, 0.8846],

[0.8571, 0.8333, 0.8125, 0.7941],

[0.7778, 0.7632, 0.7500, 0.7381],

[0.7273, 0.7174, 0.7083, 0.7000],

[0.6923, 0.6852, 0.6786, 0.6724]]])

tensor([[[ 0., 1., 2., 3.],

[ 4., 5., 6., 7.],

[ 8., 9., 10., 11.],

[12., 13., 14., 15.],

[16., 17., 18., 19.]],

[[20., 22., 24., 26.],

[28., 30., 32., 34.],

[36., 38., 40., 42.],

[44., 46., 48., 50.],

[52., 54., 56., 58.]]])

tensor([1., 1., 1., 1.])

tensor(10.)

tensor(10.)

tensor([[4., 4., 4.],

[4., 4., 4.],

[4., 4., 4.]])

tensor(5.)

tensor(7.)

tensor(6.)

3. Summing along specific axes

import torch

a = torch.ones((2, 5, 4))

print(a.shape)

print(a.sum(axis=1).shape)

print(a.sum(axis=1))

print(a.sum(axis=[0, 2]).shape)

print(a.sum(axis=1, keepdims=True).shape)

Output:

torch.Size([2, 5, 4])

torch.Size([2, 4])

tensor([[5., 5., 5., 5.],

[5., 5., 5., 5.]])

torch.Size([5])

torch.Size([2, 1, 4])
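The shapes printed above follow a simple rule: every summed axis is dropped from the shape, or kept with length 1 when `keepdims=True`. The rule can be sketched as a small plain-Python helper; `reduced_shape` is a hypothetical name used only for this illustration and is not part of PyTorch.

```python
def reduced_shape(shape, axes, keepdims=False):
    """Return the shape that results from summing a tensor of `shape` over `axes`."""
    if isinstance(axes, int):
        axes = [axes]
    axes = {ax % len(shape) for ax in axes}   # normalize negative axes such as -1
    if keepdims:
        # Summed axes stay in place with length 1
        return tuple(1 if i in axes else n for i, n in enumerate(shape))
    # Summed axes disappear from the shape
    return tuple(n for i, n in enumerate(shape) if i not in axes)


print(reduced_shape((2, 5, 4), 1))                  # (2, 4)
print(reduced_shape((2, 5, 4), [0, 2]))             # (5,)
print(reduced_shape((2, 5, 4), 1, keepdims=True))   # (2, 1, 4)
```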