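[Translation] EDA Applications and Benefits for Smart Manufacturing, Episode 4: Precision Fault Detection and Classification (FDC)

In the third article of this series on EDA applications and their benefits for smart manufacturing, we covered the first of several manufacturing applications that use the EDA/Interface A standards at leading semiconductor manufacturers. In this fourth article, we focus on the application that has so far been the main driver of EDA adoption across the industry: Fault Detection and Classification (FDC).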

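Problem Statement

FDC addresses the problem of preventing scrap caused by equipment that has, for whatever reason, drifted out of its acceptable operating window. The prevalent technique in today’s leading FDC systems is to develop “reduced dimension” statistical fault models for the various production operating points, based on training sets of “good” and “bad” runs.

These models are then evaluated in real time against key parameters (usually trace data) collected from the equipment during processing, in order to detect process deviations and predict impending equipment failures. In the most advanced fabs, the FDC software is deeply integrated with the systems that manage process flow and can even intervene in equipment operation mid-run to prevent or reduce scrap.

Of course, the challenge with this type of algorithm is developing models that are “tight” enough to catch all potential sources of faults (that is, to eliminate false negatives) while leaving enough wiggle room to minimize the number of false positives (also known as false alarms, or crying “Wolf!”). This in turn requires high-quality data from the equipment, plus a great deal of process engineering and statistical analysis expertise to develop and update the fault models for the full range of production cases that must be handled. High-mix foundry environments make the situation worse.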

Solution Components

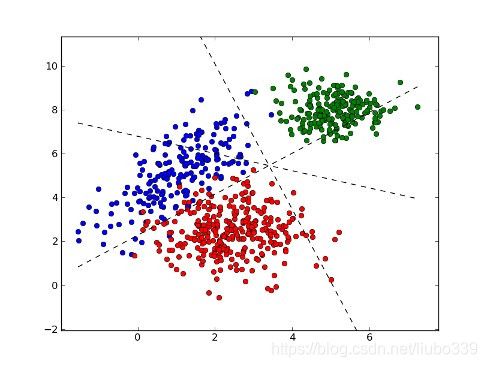

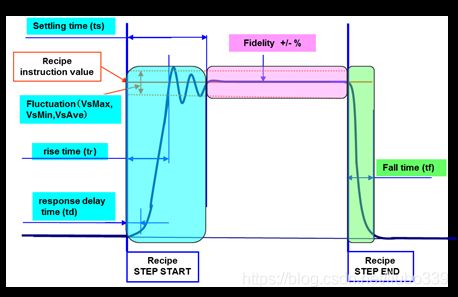

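The core of a modern FDC system is a robust multivariate statistical analysis toolbox capable of handling large amounts of time series data. “Large” refers both to the number of distinct equipment parameters and to the number of samples for each parameter. These software tools collapse potentially hundreds of parameters into a small set of “principal components” that can be calculated in real time from a limited set of equipment parameters (say, 20-30). Taken together, some number of these principal components represent the actual state of the process accurately enough to detect deviations from the norm. Because they can be calculated in real time, the application can serve as an online equipment health monitor.

The other major solution component of a production FDC system is a fault model library management capability that can handle large numbers of models. This is necessary because the multivariate approach has little or no awareness of the physical meaning of the principal components (that is, they are not “first principles” based), so each operating point of the equipment must have its own set of fault models. The proper models for a given operating point are selected by matching the values of the “context parameters” for a specific run against the values stored with the models. Even if some models can be shared across a range of operating points, the number of models for a foundry megafab will still run into the thousands.

EDA (Equipment Data Acquisition) Standards Leverage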

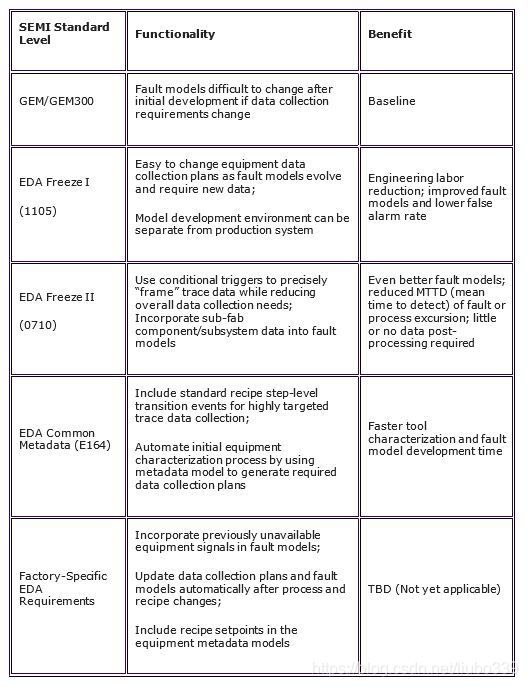

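In an advanced fab, a given application has a spectrum of data collection alternatives available, from basic lot-level summary information to detailed real-time data at the substrate level or even on a die/site basis. For FDC, this spectrum of possibilities is shown in the table below.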

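| SEMI Standard Level | Functionality | Benefit |
| --- | --- | --- |
| GEM/GEM300 | Once developed, fault models are difficult to modify when data collection requirements change. | Baseline. |
| EDA Freeze I (1105) | Equipment data collection plans are easy to modify as fault models improve and new data requirements emerge; the model development environment can be separated from the production environment. | Reduced engineering effort; improved fault models and lower false alarm rates. |
| EDA Freeze II (0710) | Conditional triggers control trace data collection, reducing overall data collection needs; sub-fab components and subsystems can be incorporated into fault models. | Better fault models; lower MTTD (mean time to detect) for faults or process excursions; little or no data post-processing. |
| EDA Common Metadata (E164) | Recipe step-level transition timing enables more targeted trace data collection; the metadata model can be used to automate initial equipment characterization and generate the required data collection plans. | Faster equipment characterization and fault model development. |
| Fab-specific EDA requirements | Previously unavailable equipment signals can be incorporated into fault models; data collection plans are updated automatically after process and recipe changes; recipe setpoints are included in the equipment metadata model. | TBD. |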

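The left column refers to the level of SEMI standards used to provide the necessary equipment data. The “Functionality” column describes how that data is used in an FDC context, and the “Benefit” column highlights the potential impact these functions can have. Suppose a fab implemented the capabilities described in rows 3 and 4 of the table (EDA Freeze II (0710) and E164-compliant EDA Common Metadata). In that case, the process equipment can provide detailed process parameters at recipe step-specific sampling rates, sufficient to evaluate the “feature extraction” algorithms of even the most demanding FDC models, along with context data to select the precise set of models for a given process condition. And even though the specific equipment parameters are necessarily process dependent, much of the software that monitors recipe execution events, generates the data collection plans (DCPs) that provide the trace data, and assembles the context data used by the model management library can be generic, because the E164 standard enforces fab-wide consistency of the equipment interfaces.

Another aspect of the EDA standards that FDC teams can leverage is the system architecture flexibility enabled by the multi-client capability. Even while a piece of equipment is connected to the production data management infrastructure, the process engineers and statisticians who develop and refine the fault models can use an independent data collection system tailored for process behavior analysis, experimentation, and continuous improvement. When new fault models are ready for production, the production DCPs can be updated with the new requirements.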

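KPIs Affected

FDC is considered a “mission-critical” application in today’s fabs because of the high cost of unscheduled equipment downtime and the importance of maintaining high product yield. Simply stated, “if FDC is down, the tool is down,” which means that the real-time data collection infrastructure supporting this application is likewise mission critical. As a result, improvements in FDC performance can have a major impact on fab performance.

Specifically, FDC directly affects the process yield and scrap rate KPIs through higher fault detection sensitivity, and it affects equipment availability and related KPIs by reducing the number of false alarms that require equipment to be taken out of production.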

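So what?

Early in my career, a wise colleague advised me to always have an answer for this question at the end of every presentation, article, or conversation. To answer it in financial terms, let’s consider the cost of an FDC false alarm in a production 300mm fab.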

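Assume that

- an hour of tool time is worth US$2200, a qual wafer costs $250, and an hour of engineering/technician time costs $150, and

- it takes 5 hours of tool time, 2 hours of engineering time, and 6 qual wafers to resolve a false alarm.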

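Then each false alarm costs the company about $12.8K. For a fab with 2000 pieces of equipment and an average false alarm rate of 2 per tool per year, that comes to an annual cost of about $51M! A 50% reduction in the false alarm rate (which is not unreasonable) saves more than $25M per year.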

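If this sounds like “real money” to you, get in touch. We can help you understand how the kind of standards-based data collection infrastructure needed to support the latest generation of FDC and other systems can put you on the Smart Manufacturing path.

To learn more about the EDA/Interface A standard for automation requirements, visit www.cimetrix.com and download the EDA/Interface A white paper.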

EDA Applications and Benefits for Smart Manufacturing Episode 4: Precision Fault Detection and Classification (FDC)

In the third article of this EDA Applications and Benefits in Smart Manufacturing series, we highlighted the first of a series of manufacturing applications that leverage the capabilities of the EDA / Interface A suite of standards in leading semiconductor manufacturers. In this fourth article, we’ll highlight the application that has been the principal driver for the adoption of EDA across the industry thus far, namely Fault Detection and Classification (FDC).

Problem Statement

The problem that FDC addresses is the prevention of scrap that may result from processing material on equipment that has drifted out of its acceptable operating window for whatever reason. The prevalent technique used by today’s leading FDC systems is to develop “reduced dimension” statistical fault models for the various production operating points based on training sets of “good” and “bad” runs. These models are then evaluated in real time with key parameters (usually trace data) collected from the equipment during processing to detect process deviation and predict impending tool failure. In the most advanced fabs, the FDC software is deeply integrated with the systems that manage process flow, and can even interdict equipment operation in mid-run to prevent or reduce scrap production.

Of course, the challenge with this type of algorithm is developing models that are “tight” enough to catch all sources of potential faults (i.e., eliminating false negatives) while leaving enough wiggle room to minimize the number of false positives (also known as false alarms, or crying “Wolf!”). This in turn requires high quality data from the equipment, and lots of process engineering and statistical analysis expertise to develop and update the fault models for the range of production cases that must be handled. High-mix foundry environments exacerbate this situation.
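The tension between false negatives and false positives can be seen with a toy example. The sketch below assumes each run has already been reduced to a single fault-model score (higher meaning more abnormal); the scores and control limits are made-up illustrative values, not data from any real tool.

```python
# Hypothetical fault-model scores (higher = more abnormal); illustrative values only.
good_run_scores = [0.8, 1.1, 1.3, 1.6, 2.4]  # runs known to be good
bad_run_scores = [1.9, 2.8, 3.5, 4.2]        # runs known to be faulty


def alarm_counts(limit):
    """Return (false_alarms, missed_faults) for a given control limit."""
    false_alarms = sum(score > limit for score in good_run_scores)
    missed_faults = sum(score <= limit for score in bad_run_scores)
    return false_alarms, missed_faults


# A tighter (lower) limit catches more faults but raises more false alarms.
for limit in (1.5, 2.0, 2.5, 3.0):
    false_alarms, missed = alarm_counts(limit)
    print(f"limit={limit}: false alarms={false_alarms}, missed faults={missed}")
```

With these invented scores no single limit removes both kinds of error, which is why better data and better models matter more than threshold tuning alone.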

Solution Components

The core of modern FDC systems is a robust multivariate statistics analysis toolbox, capable of handling large amounts of time series data. By “large,” we mean both the number of distinct equipment parameters and the number of samples for each parameter. These software tools collapse potentially hundreds of parameters into a small set of “principal components” that can be calculated on-the-fly using a limited set (say, 20-30) of equipment parameters. Some number of these principal components in aggregate represent the actual state of the process accurately enough to detect deviations from the norm, and since they can be realistically calculated in real time, the application serves as an on-line equipment health monitor.
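As a rough illustration of the principal component idea, here is a minimal sketch in plain Python. It is heavily simplified relative to a production toolbox: it assumes only three made-up parameters, keeps a single principal component (found by power iteration), and flags a run when its squared prediction error exceeds a limit derived from the training runs. The parameter values, the `margin` factor, and all function names are assumptions for illustration.

```python
import math


def standardize(sample, mean, std):
    return [(x - m) / s for x, m, s in zip(sample, mean, std)]


def spe(model, sample):
    """Squared prediction error: the part of a sample the model cannot explain."""
    z = standardize(sample, model["mean"], model["std"])
    score = sum(a * b for a, b in zip(z, model["pc"]))  # principal component value
    residual = [a - score * b for a, b in zip(z, model["pc"])]
    return sum(r * r for r in residual)


def fit_model(good_runs, iterations=200, margin=3.0):
    """Fit a one-component model from 'good' training runs (assumed setup)."""
    n, p = len(good_runs), len(good_runs[0])
    mean = [sum(run[j] for run in good_runs) / n for j in range(p)]
    std = [math.sqrt(sum((run[j] - mean[j]) ** 2 for run in good_runs) / (n - 1))
           for j in range(p)]
    z = [standardize(run, mean, std) for run in good_runs]
    cov = [[sum(row[a] * row[b] for row in z) / (n - 1) for b in range(p)]
           for a in range(p)]
    # Power iteration converges to the leading eigenvector of the covariance matrix.
    pc = [1.0 / math.sqrt(p)] * p
    for _ in range(iterations):
        w = [sum(cov[a][b] * pc[b] for b in range(p)) for a in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        pc = [x / norm for x in w]
    model = {"mean": mean, "std": std, "pc": pc}
    # Control limit: a margin above the worst residual seen in training.
    model["limit"] = margin * max(spe(model, run) for run in good_runs)
    return model


def is_fault(model, sample):
    return spe(model, sample) > model["limit"]


# Made-up training runs: pressure, RF power, and temperature move together.
good_runs = []
for i in range(11):
    t = i - 5
    noise_power = 0.1 * ((2 * i) % 5 - 2)
    noise_temp = 0.2 * ((3 * i) % 5 - 2)
    good_runs.append([100.0 + t, 50.0 + 0.5 * t + noise_power,
                      300.0 + 2.0 * t + noise_temp])

model = fit_model(good_runs)
print(is_fault(model, [102.0, 51.0, 304.0]))  # parameters consistent with training
print(is_fault(model, [102.0, 52.0, 304.0]))  # RF power out of step with the others
```

In the second sample every parameter is individually within the range seen in training; it is the combination that departs from the learned pattern, which is the kind of deviation a multivariate model is meant to catch.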

The other major solution component for a production FDC system is a fault model library management capability that can handle large numbers of models. This is necessary because the multivariate approach includes little or no awareness of the physical meaning of the principal components (i.e., they are not “first principles” based), so different operating points for the equipment must have their own sets of fault models. The proper models for a given operating point are selected by matching the values of the “context parameters” for a specific run to those used to store the models. Even if some models can be shared across a range of operating points, the number of distinct models for a foundry megafab will still number in the thousands.
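Model selection by context matching can be sketched as a keyed lookup. Everything here is hypothetical: the choice of context fields (`tool_type`, `recipe`, `product`), the `"*"` wildcard used to share a model across products, and the class names are assumptions for illustration, not a description of any particular FDC product.

```python
from typing import Dict, NamedTuple, Optional

ANY = "*"  # hypothetical wildcard for models shared across operating points


class Context(NamedTuple):
    """Hypothetical context parameters identifying an operating point."""
    tool_type: str
    recipe: str
    product: str


class FaultModel(NamedTuple):
    name: str
    control_limit: float


class ModelLibrary:
    def __init__(self) -> None:
        self._models: Dict[Context, FaultModel] = {}

    def store(self, context: Context, model: FaultModel) -> None:
        self._models[context] = model

    def select(self, context: Context) -> Optional[FaultModel]:
        # Prefer a model stored for this exact operating point.
        exact = self._models.get(context)
        if exact is not None:
            return exact
        # Otherwise fall back to a model shared across all products.
        return self._models.get(context._replace(product=ANY))


library = ModelLibrary()
library.store(Context("etcher", "poly_etch_v3", "product_A"),
              FaultModel("poly_etch_A", 0.04))
library.store(Context("etcher", "oxide_etch_v1", ANY),
              FaultModel("oxide_etch_shared", 0.06))

print(library.select(Context("etcher", "poly_etch_v3", "product_A")))
print(library.select(Context("etcher", "oxide_etch_v1", "product_B")))
print(library.select(Context("etcher", "poly_etch_v3", "product_B")))
```

The last lookup returns `None`: no model covers that operating point, which in practice would mean a new model has to be developed before the run can be monitored.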

EDA (Equipment Data Acquisition) Standards Leverage

In an advanced fab, there is a spectrum of data collection alternatives available for a given application, from basic lot-level summary information to detailed real-time data that can be used at the substrate level or even on a die/site basis. For FDC, this spectrum of possibilities is shown in the table above.

The left column refers to the level of SEMI standards used to provide the necessary equipment data. The “Functionality” column describes how that data is used in an FDC context, and the “Benefit” column highlights the potential impact these functions can have.

Let’s say that a fab implemented the capability described on rows 3 and 4 of the table (EDA Freeze II (0710) and E164-compliant EDA Common Metadata). In this case, the process equipment will be able to provide detailed process parameters at recipe step-specific sampling rates sufficient to evaluate “feature extraction” algorithms of even the most demanding FDC models…with context data to select the precise set of models for a given process condition. And even though the specific equipment parameters are necessarily process dependent, much of the software that monitors recipe execution events, generates the data collection plans (DCPs) that provide the trace data, and assembles the context data used by the model management library can be truly generic because of the fab-wide consistency of the equipment interfaces dictated by the E164 standards.
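To make the effect of recipe step-specific sampling concrete, the sketch below models a data collection plan as a plain Python structure and compares its data volume with sampling every parameter at the fastest rate for the whole run. This is not the SEMI EDA schema or any vendor API; the class names, parameter names, rates, and step durations are all invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class TraceRequest:
    """Hypothetical trace request active only during one recipe step."""
    recipe_step: str
    parameters: List[str]
    rate_hz: int


@dataclass
class DataCollectionPlan:
    name: str
    traces: List[TraceRequest] = field(default_factory=list)

    def samples_per_run(self, step_durations_s: Dict[str, int]) -> int:
        """Total parameter samples collected over one run of the recipe."""
        return sum(len(trace.parameters) * trace.rate_hz
                   * step_durations_s[trace.recipe_step]
                   for trace in self.traces)


# Invented recipe: slow sampling while stabilizing and purging, fast during etch.
plan = DataCollectionPlan("etch_fdc_plan", [
    TraceRequest("stabilize", ["ChamberPressure"], rate_hz=1),
    TraceRequest("etch", ["ChamberPressure", "RFForwardPower", "RFReflectedPower"],
                 rate_hz=10),
    TraceRequest("purge", ["ChamberPressure"], rate_hz=1),
])
step_durations_s = {"stabilize": 30, "etch": 60, "purge": 20}

targeted = plan.samples_per_run(step_durations_s)
# Baseline: all three parameters at 10 Hz for the entire run.
uniform = 3 * 10 * sum(step_durations_s.values())
print(targeted, uniform)
```

Under these assumed numbers the step-specific plan collects a bit more than half the samples of the uniform one while keeping full resolution where the fault models need it.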

Another aspect of the EDA standards that FDC teams can leverage is the system architecture flexibility enabled by the multi-client capability. Even while a piece of equipment is connected to a production data management infrastructure, the process engineers and statisticians who develop and refine the fault models can use an independent data collection system tailored for process behavior analysis, experimentation, and continuous improvement. When the new fault models are ready for production, the production DCPs can be updated with these new requirements.

KPIs Affected

FDC is considered a “mission-critical” application in today’s fabs because of the high cost of unscheduled equipment downtime and the importance of maintaining high product yield. Simply stated, “if FDC is down, the tool is down,” which means that the real-time data collection infrastructure supporting this application is likewise mission critical. As such, improvements in FDC performance can have a major impact on fab performance.

Specifically, FDC directly affects the process yield and scrap rate KPIs through higher fault detection sensitivity, and it affects equipment availability and related KPIs by reducing the number of false alarms that often require equipment to be taken out of production.

So what?

A wise colleague advised me early in my career to always have an answer for this question at the end of every presentation, article, or conversation. To answer this question in financial terms for this posting, let’s consider the cost of FDC false alarms for a production 300mm fab.

Assuming that

- an hour of tool time is worth US$2200, a qual wafer costs $250, and an hour of engineering/technician time costs $150, and

- it takes 5 hours of tool time, 2 hours of engineering time, and 6 qual wafers to resolve a false alarm, then

each false alarm costs the company about $12.8K. For a fab with 2000 pieces of equipment and an average false alarm rate of 2 per tool per year, that comes to an annual cost of about $51M! A 50% reduction in the false alarm rate (which is not unreasonable) nets more than $25M of savings per year.
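Working the stated assumptions through explicitly, as a small script whose inputs can be swapped for a fab’s own figures (the variable names are ours; the values are the ones assumed above):

```python
# Cost assumptions stated in the article (US dollars).
TOOL_HOUR_USD = 2200
QUAL_WAFER_USD = 250
ENGINEERING_HOUR_USD = 150

# Resources consumed to resolve one false alarm.
TOOL_HOURS = 5
ENGINEERING_HOURS = 2
QUAL_WAFERS = 6

# Fab-level assumptions.
TOOLS_IN_FAB = 2000
FALSE_ALARMS_PER_TOOL_PER_YEAR = 2

cost_per_alarm = (TOOL_HOURS * TOOL_HOUR_USD
                  + ENGINEERING_HOURS * ENGINEERING_HOUR_USD
                  + QUAL_WAFERS * QUAL_WAFER_USD)
annual_cost = TOOLS_IN_FAB * FALSE_ALARMS_PER_TOOL_PER_YEAR * cost_per_alarm
savings_at_half_rate = annual_cost // 2  # 50% fewer false alarms

print(f"cost per false alarm: ${cost_per_alarm:,}")
print(f"annual cost:          ${annual_cost:,}")
print(f"savings at 50% fewer: ${savings_at_half_rate:,}")
```

Tool time dominates: $11,000 of the $12,800 per alarm comes from the five hours the equipment is out of production.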

If this sounds like “real money” to you, give us a call. We can help you understand how to get on the Smart Manufacturing path with the kind of standards-based data collection infrastructure that is needed to support the latest generation of FDC systems and beyond.


To learn more about the EDA/Interface A Standard for automation requirements, download the EDA/Interface A white paper today on www.cimetrix.com.